Google researchers have introduced TransformerFAM, a novel architecture that will revolutionize long context processing in large language models (LLM). By integrating a feedback loop mechanism, TransformerFAM promises to improve the network's ability to handle infinitely long sequences. This addresses the limitations posed by the complexity of quadratic attention.

Also read: PyTorch's TorchTune: Revolutionizing LLM fine-tuning

Understand the limitations

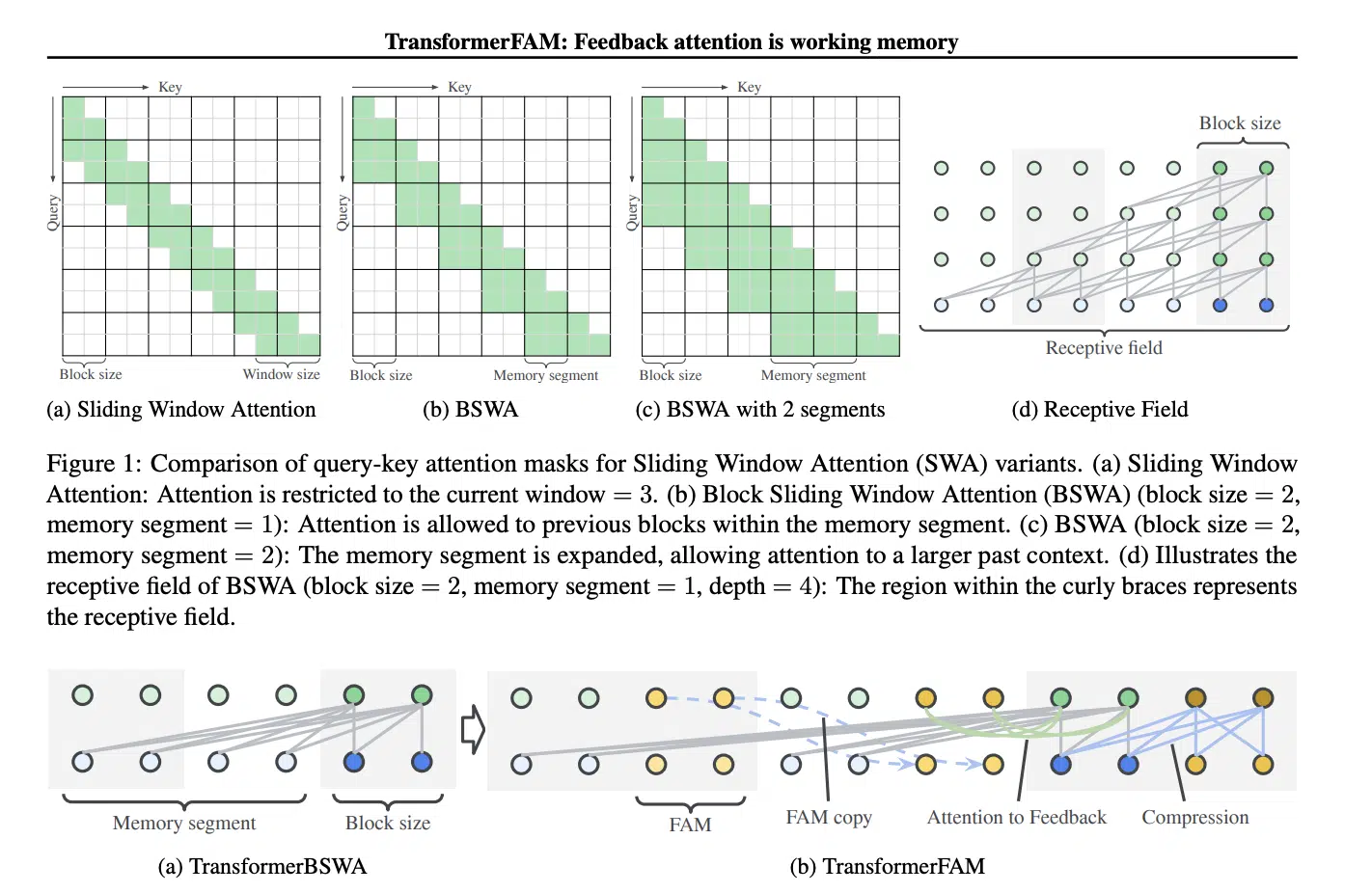

Traditional attention mechanisms in Transformers exhibit quadratic complexity with respect to context length, which limits their effectiveness in processing long sequences. While attempts such as sliding window attention and sparse or linear approaches have been made, they often fall short, especially at larger scales.

The solution: TransformerFAM

In response to these challenges, Google's TransformerFAM introduces a feedback attention mechanism, inspired by the concept of working memory in the human brain. This mechanism allows the model to pay attention to its own latent representations, fostering the emergence of working memory within the Transformer architecture.

Also read: Microsoft Introduces AllHands: LLM Framework for Large-Scale Feedback Analysis

Key features and innovations

TransformerFAM incorporates a Block Sliding Window Attention (BSWA) module, which enables efficient attention to local and long-range dependencies within input and output sequences. By integrating feedback activations into each block, the architecture facilitates the dynamic propagation of global contextual information between blocks.

Performance and potential

Experimental results on various model sizes demonstrate significant improvements on long-context tasks, outperforming other configurations. TransformerFAM's seamless integration with pre-trained models and minimal impact on training efficiency make it a promising solution to enable LLMs to process sequences of unlimited length.

Also read: Databricks DBRX: The Open Source LLM Taking on the Giants

Our opinion

TransformerFAM marks a significant advance in the field of deep learning. It offers a promising solution to the long-standing challenge of processing infinitely long sequences. By leveraging feedback attention and bulk sliding window attention, Google has paved the way for more efficient and effective long context processing in LLMs. This has far-reaching implications for natural language understanding and reasoning tasks.

Follow us Google news to stay up to date with the latest innovations in the world of ai, data science and GenAI.

NEWSLETTER

NEWSLETTER