Introduction

With so many AI tools now available, being able to identify AI-generated content has become crucial.

This matters because false information spreads widely and hate can easily be amplified. AI-generated content can produce convincing fake news, deepfakes and other misleading material that can manipulate public opinion, incite conflict and damage reputations.

You may be wondering whether there is a way to check if content is AI-generated. Google DeepMind's SynthID now makes this possible.

At a time when the internet abounds with AI-generated text, human creators find it increasingly hard to demonstrate the authenticity and integrity of their work. Distinguishing human-created content from AI-generated content has become essential to preserving trust in human work and its value. This is the need SynthID is meant to address: it is presented as the world's first toolset for watermarking and identifying AI-generated content. The suite is currently in beta. It embeds imperceptible digital watermarks in AI-generated images, audio, text and video so that such content can be identified later.

What exactly is SynthID?

At Google I/O 2024, Google announced an expansion of SynthID, its digital watermarking technology originally designed to identify synthetic images produced by AI. This Google DeepMind technology is now being integrated into Veo, Google's latest video generation model, and into the Gemini app and web experience. First introduced in 2023, SynthID aims to protect users by providing a reliable way to distinguish genuine content from AI-generated content, helping to combat the spread of misinformation.

SynthID was first designed to mark and identify images created by AI. It embeds an imperceptible digital watermark in the pixels of AI-generated content: the watermark is invisible to the naked eye but can be picked up by specific detection methods. By examining an image for this digital signature, SynthID can estimate how likely it is that the image was produced by AI, helping to authenticate the origin of digital images.

The importance of identifying AI-generated content

SynthID addresses a critical need in the digital landscape: the ability to identify AI-generated content. While not a panacea for misinformation or misattribution, SynthID represents an important step forward in AI safety. Making AI-generated content traceable promotes transparency and trust, helping users and organizations interact responsibly with AI technologies.

How does SynthID work?

SynthID employs advanced deep learning models and algorithms to embed and detect digital watermarks in different types of media:

- Watermarking: Digital watermarks are embedded directly into AI-generated content without noticeably degrading its quality.

- Identification: SynthID scans media for these watermarks, allowing users to check whether content was generated by Google's AI tools.

The watermarking process

A large language model (LLM) generates text one token at a time. A token can represent a single character, a word, or part of a phrase. To build a coherent sequence, the model assigns a probability score to every candidate token based on the preceding tokens, then picks the next token according to those scores.

Where doing so does not compromise the quality, accuracy or creativity of the output, SynthID adjusts the probability scores of candidate tokens. Repeating this across the whole generated text embeds a statistical pattern, the watermark, that SynthID can later detect. The following sections cover SynthID for text, for music and audio, and for images and video.

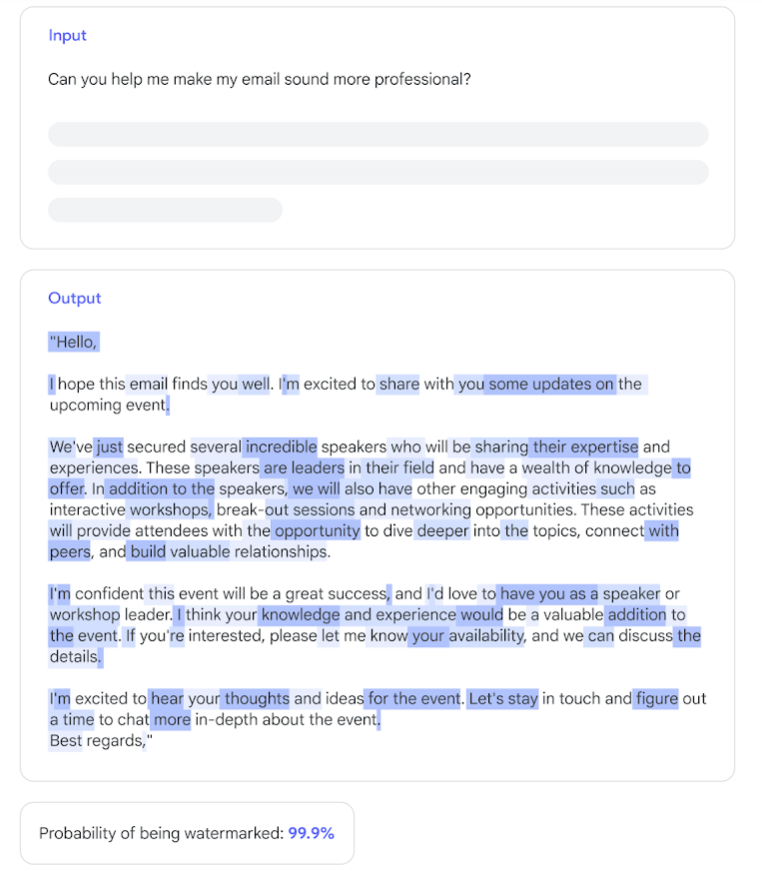
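The probability-adjustment step can be illustrated with a simpler, well-known stand-in: the keyed "green list" logit bias described by Kirchenbauer et al. (2023). This is not SynthID's actual algorithm. The vocabulary, the key, the 50% green fraction and the bias of 2.0 are hypothetical values chosen for the sketch, and the "model" simply gives every token the same score so that only the watermark skews the choices.

```python
import hashlib
import math
import random

# Toy vocabulary and parameters: all hypothetical, chosen only for illustration.
VOCAB = ["the", "cat", "dog", "sat", "ran", "on", "a", "mat", "rug", "quickly"]
GREEN_FRACTION = 0.5      # share of the vocabulary favoured at each step
BIAS = 2.0                # amount added to the score of each favoured token
SECRET_KEY = "demo-key"   # hypothetical watermarking key


def green_list(prev_token: str, key: str = SECRET_KEY) -> set[str]:
    """Pick the favoured ("green") tokens pseudo-randomly from the key and previous token."""
    digest = hashlib.sha256(f"{key}:{prev_token}".encode()).digest()
    rng = random.Random(int.from_bytes(digest[:8], "big"))
    return set(rng.sample(VOCAB, int(len(VOCAB) * GREEN_FRACTION)))


def softmax(scores: dict[str, float]) -> dict[str, float]:
    """Convert raw next-token scores (logits) into probabilities."""
    top = max(scores.values())
    exps = {tok: math.exp(s - top) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def watermarked_probs(logits: dict[str, float], prev_token: str) -> dict[str, float]:
    """Raise the scores of green tokens by BIAS, then renormalise."""
    greens = green_list(prev_token)
    adjusted = {tok: s + (BIAS if tok in greens else 0.0) for tok, s in logits.items()}
    return softmax(adjusted)


def generate(n_tokens: int, seed: int = 0, start: str = "<s>") -> list[str]:
    """Sample text from a stand-in 'model' that scores every token equally."""
    rng = random.Random(seed)
    out, prev = [], start
    for _ in range(n_tokens):
        logits = {tok: 0.0 for tok in VOCAB}   # a real LLM would supply these scores
        probs = watermarked_probs(logits, prev)
        tokens = list(probs)
        prev = rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]
        out.append(prev)
    return out


print(" ".join(generate(12)))
```

With equal base scores, the bias lifts the chance of picking a green token at each step from 0.5 to e²/(e²+1) ≈ 0.88. Seeding the green list on the key and the previous token means only someone holding the key can recompute which tokens were favoured.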

SynthID for text

SynthID's text watermarking capabilities are built into the Gemini app and web experience.

This approach builds watermarking into the text generation process of large language models (LLMs), which predict the next token (a character, word, or part of a phrase) in a sequence. By subtly adjusting the probability scores of candidate tokens, SynthID can watermark text without compromising its quality or creativity. The method works on texts of varying lengths, becoming more reliable as the text gets longer, and remains robust to light modifications such as minor paraphrasing.
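Detection under a keyed logit-bias scheme can be sketched as a simple hypothesis test: count how many tokens fall in the green list implied by their predecessor and compare that count with what unwatermarked text would produce by chance. This is an illustrative stand-in, not SynthID's detector; the vocabulary, key, green fraction and threshold of 4 are assumptions, and they must match whatever the generator used.

```python
import hashlib
import math
import random

# Hypothetical parameters; they must match the values used at generation time.
VOCAB = ["the", "cat", "dog", "sat", "ran", "on", "a", "mat", "rug", "quickly"]
GREEN_FRACTION = 0.5
SECRET_KEY = "demo-key"   # hypothetical key shared by generator and detector
Z_THRESHOLD = 4.0         # illustrative decision threshold


def green_list(prev_token: str, key: str = SECRET_KEY) -> set[str]:
    """Recompute the favoured tokens for a position from the key and previous token."""
    digest = hashlib.sha256(f"{key}:{prev_token}".encode()).digest()
    rng = random.Random(int.from_bytes(digest[:8], "big"))
    return set(rng.sample(VOCAB, int(len(VOCAB) * GREEN_FRACTION)))


def z_score(tokens: list[str], start: str = "<s>") -> float:
    """How many standard deviations the green-token count sits above chance."""
    prevs = [start] + tokens[:-1]
    hits = sum(tok in green_list(prev) for prev, tok in zip(prevs, tokens))
    n = len(tokens)
    expected = n * GREEN_FRACTION
    std = math.sqrt(n * GREEN_FRACTION * (1 - GREEN_FRACTION))
    return (hits - expected) / std


def is_watermarked(tokens: list[str]) -> bool:
    return z_score(tokens) > Z_THRESHOLD
```

For toy text in which every token is green, the score equals √n: 9 tokens give z = 3 (below the threshold) while 100 tokens give z = 10. Replacing every tenth token of that 100-token text still leaves z ≥ 6, because each edit can break at most two green hits (the edited token and the one after it). This mirrors the point that longer text is easier to detect and that light edits do not erase the signal.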

SynthID for music and audio

In November 2023, SynthID expanded to AI-generated music and audio, rolling out first through Google DeepMind's Lyria model. The watermarking process converts the audio waveform into a spectrogram, embeds the watermark in it, and then converts the spectrogram back into a waveform. This keeps the watermark inaudible and resistant to common audio modifications such as compression or changes in speed.
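The waveform-to-spectrogram-and-back round trip can be illustrated with a toy that has none of SynthID's learned embedding: it splits the audio into non-overlapping frames, nudges the log-magnitude of each frequency bin up or down by a small keyed amount, and converts back. A detector holding the same hypothetical integer key averages the log-spectrum over time and correlates it with the pattern. The frame size, strength and key are assumptions, and unlike SynthID this sketch makes no attempt at robustness to compression or speed changes.

```python
import numpy as np

FRAME = 256     # samples per analysis frame (assumed)
ALPHA = 0.1     # log-magnitude nudge per bin, roughly 0.9 dB (assumed)


def keyed_pattern(key: int, n_bins: int) -> np.ndarray:
    """A +1/-1 value per frequency bin derived from a hypothetical integer key."""
    pattern = np.random.default_rng(key).choice([-1.0, 1.0], size=n_bins)
    pattern[0] = pattern[-1] = 0.0   # leave the DC and Nyquist bins untouched
    return pattern


def to_spectrogram(audio: np.ndarray) -> np.ndarray:
    """Split into non-overlapping frames and take each frame's spectrum."""
    usable = len(audio) // FRAME * FRAME
    return np.fft.rfft(audio[:usable].reshape(-1, FRAME), axis=1)


def to_waveform(spec: np.ndarray) -> np.ndarray:
    """Invert to_spectrogram and join the frames back together."""
    return np.fft.irfft(spec, n=FRAME, axis=1).reshape(-1)


def embed(audio: np.ndarray, key: int) -> np.ndarray:
    spec = to_spectrogram(audio)
    gain = np.exp(ALPHA * keyed_pattern(key, spec.shape[1]))
    return to_waveform(spec * gain)   # magnitudes scaled, phases unchanged


def detect_score(audio: np.ndarray, key: int) -> float:
    """Correlate the time-averaged log-spectrum with the pattern; close to ALPHA if marked."""
    profile = np.log(np.abs(to_spectrogram(audio)) + 1e-12).mean(axis=0)
    pattern = keyed_pattern(key, profile.size)
    inner = pattern != 0
    centred = profile[inner] - profile[inner].mean()
    return float(np.mean(centred * pattern[inner]))
```

A production system would use overlapping, windowed frames; filtering non-overlapping rectangular frames as done here can introduce faint clicks at frame boundaries.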

SynthID for images and videos

For images and video, SynthID embeds watermarks directly into the pixels of each image or video frame. This preserves the quality of the media while keeping the watermark detectable even after modifications such as cropping or compression. SynthID is integrated with Vertex AI's text-to-image models and the Veo video generation model, so the content these models generate can be identified.
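Embedding into pixels and surviving crops can be illustrated with a classic spread-spectrum toy rather than SynthID's learned models: add a faint, keyed ±1 pattern tiled across the image, then detect it by correlation, trying every alignment of the tile so that a crop which shifts the grid still matches. The tile size, the strength of 3 grey levels and the key are hypothetical, and this sketch is not tested against compression.

```python
import numpy as np

TILE = 8          # side length of the repeating secret pattern (assumed)
STRENGTH = 3.0    # pattern amplitude in grey levels out of 255 (assumed)


def secret_tile(key: int) -> np.ndarray:
    """A TILE x TILE grid of +1/-1 values derived from a hypothetical key."""
    return np.random.default_rng(key).choice([-1.0, 1.0], size=(TILE, TILE))


def tiled(tile: np.ndarray, shape: tuple[int, int], dy: int = 0, dx: int = 0) -> np.ndarray:
    """Repeat the tile over `shape`, starting at offset (dy, dx) within the tile."""
    reps = (shape[0] // TILE + 2, shape[1] // TILE + 2)
    return np.tile(tile, reps)[dy:dy + shape[0], dx:dx + shape[1]]


def embed(image: np.ndarray, key: int) -> np.ndarray:
    """Add the faint pattern to a greyscale uint8 image."""
    marked = image.astype(np.float64) + STRENGTH * tiled(secret_tile(key), image.shape)
    return np.clip(np.rint(marked), 0, 255).astype(np.uint8)


def detect_score(image: np.ndarray, key: int) -> float:
    """Best correlation over all tile alignments, so crops that shift the grid still match."""
    img = image.astype(np.float64)
    img -= img.mean()
    tile = secret_tile(key)
    return max(
        float(np.mean(img * tiled(tile, img.shape, dy, dx)))
        for dy in range(TILE)
        for dx in range(TILE)
    )


def is_watermarked(image: np.ndarray, key: int) -> bool:
    return detect_score(image, key) > STRENGTH / 2
```

Searching all 64 alignments is what buys crop robustness in this toy: cropping away the top 5 rows and left 3 columns simply moves the best match to offset (5, 3).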

Availability and integration

SynthID is available to Vertex AI customers and is integrated into products such as ImageFX and VideoFX. Users can also identify AI-generated content through features in Google Search and Chrome, which broadens access to the technology.

Google has also integrated SynthID into Veo, which it describes as its most capable video generation model to date, available to select creators in VideoFX.

Benefits and limitations

SynthID's watermarking works best on longer AI-generated text and on varied, open-ended content, but it is less effective on responses to objective prompts, such as factual questions, and on text that has been extensively rewritten or translated. It substantially improves AI content detection but is not foolproof against sophisticated adversaries; it is better seen as a strong deterrent against malicious use of AI.
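One way to see why responses to objective (for example, factual) prompts leave less room for a token-level watermark, using a toy keyed logit-bias scheme as an assumed stand-in rather than SynthID itself: when the model is nearly certain of the next token, boosting the alternatives barely changes what gets sampled, and forcing a larger change would hurt accuracy. The token scores, the "green" set and the bias value below are hypothetical.

```python
import math


def green_mass(logits: list[float], green: set[int], bias: float) -> float:
    """Probability of sampling a 'green' token after adding `bias` to the green scores."""
    adjusted = [s + (bias if i in green else 0.0) for i, s in enumerate(logits)]
    top = max(adjusted)                       # subtract the max for numerical stability
    weights = [math.exp(a - top) for a in adjusted]
    return sum(w for i, w in enumerate(weights) if i in green) / sum(weights)


GREEN = {0, 1, 2, 3, 4}                       # hypothetical favoured tokens
open_ended = [0.0] * 10                       # many equally plausible continuations
factual = [0.0] * 10
factual[5] = 10.0                             # one clearly correct, non-green answer

for name, logits in [("open-ended", open_ended), ("factual", factual)]:
    before = green_mass(logits, GREEN, bias=0.0)
    after = green_mass(logits, GREEN, bias=2.0)
    print(f"{name}: green share {before:.4f} -> {after:.4f}")
```

With ten equally likely tokens, a bias of 2.0 raises the chance of picking one of the five green tokens from 0.50 to about 0.88. When one non-green answer scores 10 higher than the rest, the same bias only moves that chance from about 0.0002 to about 0.002, so the text barely carries the watermark.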

Future developments

Google plans to publish a detailed research paper on SynthID text watermarking and to open-source the technology through its Responsible Generative AI Toolkit. This will let developers integrate SynthID into their own models, extending its reach across the AI ecosystem.

Conclusion

In conclusion, SynthID shows how trust and accountability can be built directly into digital content. By embedding watermarks at the point of generation, it tackles current challenges and paves the way for a future in which AI-generated media can be reliably authenticated. This strengthens the integrity of digital information and sets a new standard for the responsible use of AI, benefiting both users and creators. As SynthID continues to develop, its impact on the digital landscape is likely to be significant and far-reaching.

I hope you found this article helpful in understanding “SynthID: Google is expanding ways to protect AI misinformation.” For more articles like this, explore our blog section today!