Generative AI, an area of artificial intelligence, focuses on creating systems capable of producing human-like text and solving complex reasoning tasks. These models are essential in various applications, including natural language processing. Their main function is to predict subsequent words in a sequence, generate coherent text, and even solve logical and mathematical problems. However, despite their impressive capabilities, generative AI models often struggle with the accuracy and reliability of their results, which is particularly problematic in reasoning tasks where a single error can invalidate an entire solution.

A major problem in this field is the tendency of generative AI models to produce results that, while confident and convincing, may be incorrect. This challenge is critical in areas where accuracy is paramount, such as education, finance, and healthcare. The core of the problem lies in the models’ inability to consistently generate correct answers, which undermines their potential in high-stakes applications. Improving the accuracy and reliability of these AI systems is therefore a priority for researchers seeking to make AI-generated solutions more trustworthy.

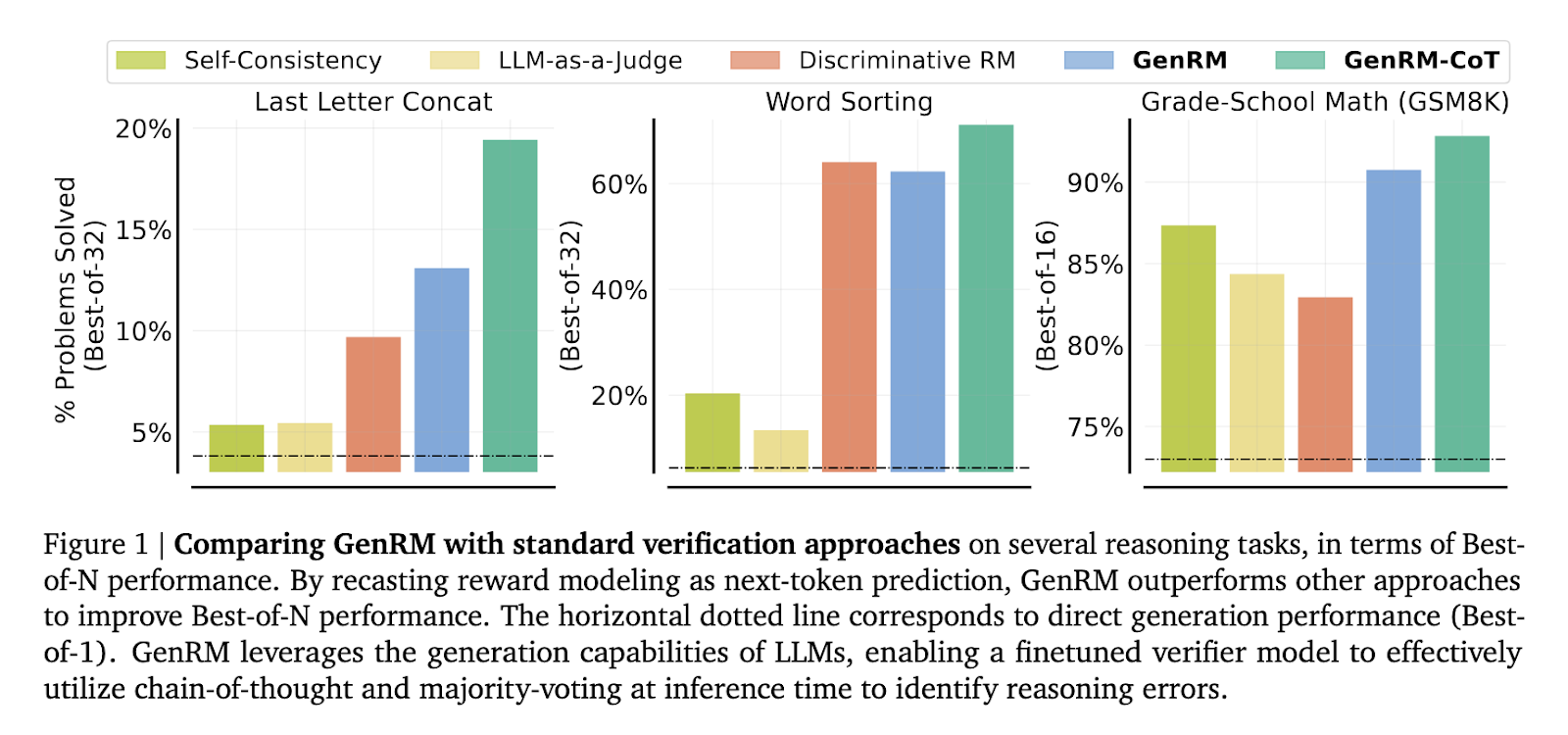

Existing methods to address these issues involve discriminative reward models (RMs), which assign each candidate answer a score and use it to classify the answer as correct or incorrect. However, these models do not take full advantage of the generative capabilities of large language models (LLMs). Another common approach is the LLM-as-judge method, in which pre-trained language models evaluate the correctness of solutions. While this method does take advantage of the generative capabilities of LLMs, it often fails to match the performance of more specialized verifiers, particularly on reasoning tasks that require nuanced judgment.

Researchers from Google DeepMind, the University of Toronto, MILA, and UCLA have introduced a new approach called Generative Reward Modeling (GenRM). This method redefines the verification process by framing it as a next-token prediction task, a core capability of LLMs. Unlike traditional discriminative reward models, GenRM integrates the text generation strengths of LLMs into the verification process, allowing a single model to both generate and evaluate potential solutions. This approach also supports chain-of-thought (CoT) reasoning, where the model generates intermediate reasoning steps before arriving at a final decision. Thus, the GenRM method not only evaluates the correctness of solutions, but also improves the overall reasoning process by allowing for more detailed and structured evaluations.
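To make the next-token framing concrete, here is a minimal sketch. It assumes the verifier is shown a problem, a candidate solution, and a question such as "Is the answer correct (Yes/No)?", and that the model's logits for the next token are available. The function name `token_probability` and the toy logit values are hypothetical and are not taken from the paper; a real system would read the logits from an LLM.

```python
import math

def token_probability(logits: dict[str, float], token: str) -> float:
    """Softmax over a (toy) vocabulary, returning the probability of `token`."""
    # Subtract the max logit for numerical stability before exponentiating.
    max_logit = max(logits.values())
    exps = {t: math.exp(v - max_logit) for t, v in logits.items()}
    return exps[token] / sum(exps.values())

# Hypothetical next-token logits after the "Is the answer correct?" prompt.
next_token_logits = {"Yes": 2.0, "No": 0.5, "Maybe": -1.0}

# The verifier's score for the candidate solution is simply P("Yes").
score = token_probability(next_token_logits, "Yes")
print(f"verifier score: {score:.3f}")
```

Under this framing the verifier needs no separate classification head: the correctness score is read directly from the probability the language model assigns to the "Yes" token.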

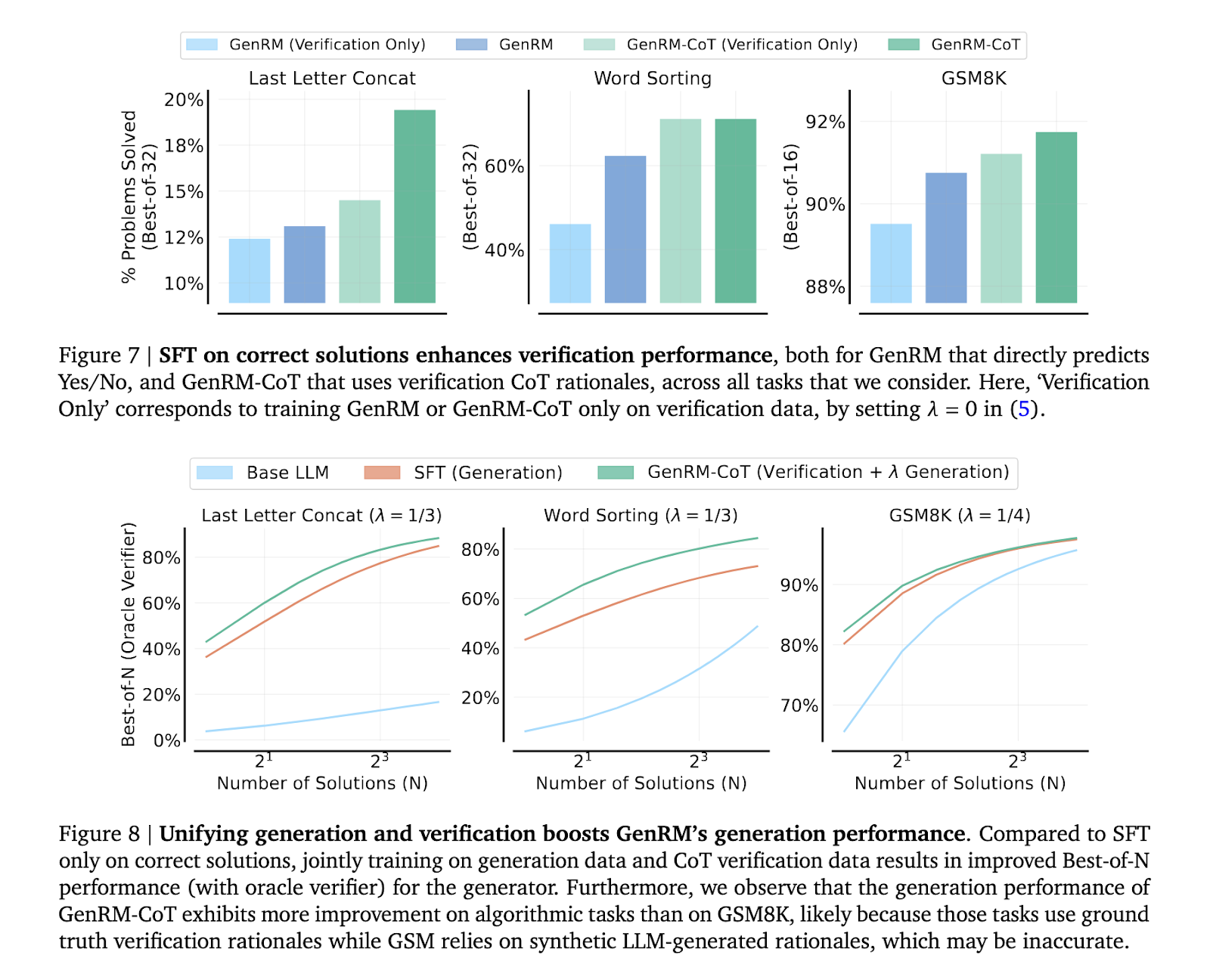

The GenRM methodology employs a unified training approach that combines solution generation and verification. This is achieved by training the model to predict the correctness of a solution through next-token prediction, a technique that leverages the inherent generative capabilities of LLMs. In practice, the model generates intermediate reasoning steps (CoT rationales) that are then used to verify the final solution. This process integrates seamlessly with existing AI training techniques, allowing for simultaneous improvement of generation and verification capabilities. Furthermore, the GenRM model benefits from additional computation at inference time, such as majority voting that aggregates multiple reasoning paths to arrive at a more accurate verdict.
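The aggregation step can be sketched as follows. This assumes each sampled reasoning path ends with its own probability that the solution is correct; the two aggregation rules shown (averaging the probabilities, and a hard majority vote over thresholded verdicts) are illustrative choices, and the helper names and sample values are hypothetical.

```python
from statistics import mean

def averaged_score(yes_probs: list[float]) -> float:
    """Soft aggregation: average P('Yes') across sampled reasoning paths."""
    if not yes_probs:
        raise ValueError("need at least one reasoning path")
    return mean(yes_probs)

def majority_verdict(yes_probs: list[float], threshold: float = 0.5) -> bool:
    """Hard aggregation: True if more than half of the paths say 'correct'."""
    if not yes_probs:
        raise ValueError("need at least one reasoning path")
    votes = sum(p > threshold for p in yes_probs)
    return votes * 2 > len(yes_probs)

# Hypothetical P('Yes') values from five sampled verification rationales.
path_probs = [0.9, 0.8, 0.3, 0.7, 0.4]

print(f"averaged score: {averaged_score(path_probs):.2f}")
print(f"majority verdict: {majority_verdict(path_probs)}")
```

Sampling more reasoning paths costs more inference-time compute, but a single faulty rationale then has less influence on the final verdict.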

The GenRM model, particularly when combined with CoT reasoning, significantly outperforms traditional verification methods. In a series of tests, including grade-school mathematics and algorithmic problem-solving tasks, the GenRM model demonstrated a notable improvement in accuracy. Specifically, the researchers reported a 16% to 64% increase in the percentage of problems solved correctly compared to discriminative verifiers and LLM-as-judge methods. For example, when verifying the outputs of the Gemini 1.0 Pro model, the GenRM approach improved the problem-solving success rate from 73% to 92.8%. This performance increase highlights the model’s ability to catch errors that standard verifiers often miss, particularly in complex reasoning scenarios. Furthermore, the researchers observed that the GenRM model scales effectively with larger dataset size and increased model capacity, further improving its applicability to various reasoning tasks.
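The article does not spell out how a verifier translates into a higher success rate. One common setup, assumed here, is Best-of-N selection: sample several candidate solutions per problem, score each with the verifier, and keep the highest-scoring one. The sketch below uses hypothetical helper names and toy data, not results from the paper.

```python
def best_of_n(candidates: list[str], scores: list[float]) -> str:
    """Return the candidate with the highest verifier score."""
    if not candidates or len(candidates) != len(scores):
        raise ValueError("candidates and scores must be non-empty and aligned")
    best_index = max(range(len(scores)), key=scores.__getitem__)
    return candidates[best_index]

def success_rate(selected: list[str], reference: list[str]) -> float:
    """Fraction of problems whose selected answer matches the reference."""
    if not reference or len(selected) != len(reference):
        raise ValueError("selected and reference must be non-empty and aligned")
    correct = sum(s == r for s, r in zip(selected, reference))
    return correct / len(reference)

# Toy data: two problems, three candidate answers each, with verifier scores.
problems = [
    (["12", "15", "14"], [0.2, 0.9, 0.4]),
    (["7", "8", "9"], [0.6, 0.3, 0.1]),
]
reference_answers = ["15", "8"]

chosen = [best_of_n(cands, scores) for cands, scores in problems]
print(chosen, success_rate(chosen, reference_answers))
```

In this setup a better verifier raises the success rate without changing the generator at all, because it more often ranks a correct candidate first.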

In conclusion, the introduction of the GenRM method by Google DeepMind researchers and their collaborators marks a significant advancement in generative AI, particularly in addressing the verification challenges associated with reasoning tasks. The GenRM model offers a more reliable and accurate approach to solving complex problems by unifying the generation and verification of solutions into a single process. This method improves the accuracy of AI-generated solutions and enhances the overall reasoning process, making it a valuable tool for future AI applications across multiple domains. As generative AI continues to evolve, the GenRM approach provides a solid foundation for future research and development, particularly in areas where accuracy and reliability are crucial.

Take a look at the Paper. All credit for this research goes to the researchers of this project.


Nikhil is a Consultant Intern at Marktechpost. He is pursuing an integrated dual degree in Materials from the Indian Institute of Technology, Kharagpur. Nikhil is an AI and machine learning enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in materials science, he is exploring new advancements and creating opportunities to contribute.
