Humans have an extraordinary ability to locate sound sources and interpret their surroundings from auditory signals, an ability called spatial hearing. It enables tasks such as identifying speakers in noisy environments or navigating complex spaces. Emulating this auditory spatial perception is crucial for improving the immersive experience in technologies such as augmented reality (AR) and virtual reality (VR). However, synthesizing binaural (two-channel) audio that captures these spatial effects from monaural (single-channel) input remains difficult, largely because positional and multichannel audio data are scarce.

Traditional mono-to-binaural synthesis approaches often rely on digital signal processing (DSP) frameworks. These methods model auditory effects using components such as head-related transfer functions (HRTFs), room impulse responses (RIRs), and ambient noise, generally treated as linear time-invariant (LTI) systems. Although DSP-based techniques are well established and can produce realistic audio experiences, they do not account for the nonlinear acoustic wave effects inherent in real-world sound propagation.
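To make the LTI view concrete, here is a minimal pure-Python sketch of a generic DSP renderer: the mono signal is convolved with a room impulse response and then with a per-ear head-related impulse response (the time-domain counterpart of an HRTF), and Gaussian ambient noise is added. The impulse responses, the `noise_level` parameter, and the function names are hypothetical placeholders, not any specific baseline from the paper.

```python
import random
from typing import List, Sequence, Tuple


def convolve(x: Sequence[float], h: Sequence[float]) -> List[float]:
    """Full linear convolution (output length len(x) + len(h) - 1)."""
    y = [0.0] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        for j, hj in enumerate(h):
            y[i + j] += xi * hj
    return y


def dsp_binauralize(
    x: Sequence[float],
    hrir_left: Sequence[float],
    hrir_right: Sequence[float],
    rir: Sequence[float],
    noise_level: float = 0.0,
    seed: int = 0,
) -> Tuple[List[float], List[float]]:
    """Render mono x to two channels with a cascade of LTI filters plus noise.

    All impulse responses are hypothetical inputs supplied by the caller.
    """
    rng = random.Random(seed)
    reverberant = convolve(x, rir)  # room acoustics (RIR)
    channels = []
    for hrir in (hrir_left, hrir_right):
        ear = convolve(reverberant, hrir)  # direction-dependent filtering (HRIR)
        # Additive Gaussian ambient noise, drawn independently for each ear.
        channels.append([s + noise_level * rng.gauss(0.0, 1.0) for s in ear])
    return channels[0], channels[1]
```

Because every stage is linear and time-invariant, the order of the two convolutions does not affect the result, and nothing in this cascade can represent the nonlinear propagation effects mentioned above.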

Supervised learning models have emerged as an alternative to DSP, using neural networks to synthesize binaural audio. However, such models face two major limitations: first, the scarcity of position-annotated binaural datasets, and second, a tendency to overfit to the specific acoustic environments, speaker characteristics, and datasets used in training. The need for specialized equipment for data collection further limits these approaches, making supervised methods expensive and less practical.

To address these challenges, Google researchers have proposed ZeroBAS, a zero-shot neural method for mono-to-binaural speech synthesis that does not rely on binaural training data. The approach first applies parameter-free geometric time warping (GTW) and amplitude scaling (AS), both driven by the source position, to produce initial binaural signals. These signals are then refined with a pre-trained denoising vocoder, yielding perceptually realistic binaural audio. Surprisingly, ZeroBAS generalizes effectively across various room conditions, as demonstrated on the newly introduced TUT Mono-to-Binaural dataset, and achieves performance comparable to or better than state-of-the-art supervised methods on out-of-distribution data.

The ZeroBAS framework comprises a three-stage architecture as follows:

- In stage 1, Geometric Time Warping (GTW) transforms the monaural input into two channels (left and right) by simulating interaural time differences (ITDs) from the relative positions of the sound source and the listener's ears. GTW computes a time delay for each ear channel, and the warped signals are then linearly interpolated to generate the initial binaural channels.

- In stage 2, Amplitude Scaling (AS) improves the spatial realism of the warped signals by simulating the interaural level difference (ILD) according to the inverse square law. This matters because human perception of sound location relies on both ITD and ILD, with ILD dominating for high-frequency sounds. The amplitude of each channel is scaled according to the Euclidean distance between the source and the corresponding ear.

- In stage 3, the warped and scaled signals are iteratively refined using a pre-trained denoising vocoder, WaveFit. This vocoder operates on log-mel spectrogram features and builds on denoising diffusion probabilistic models (DDPMs) to generate clean binaural waveforms. Applying the vocoder iteratively mitigates acoustic artifacts and yields high-quality binaural output.
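The three stages can be sketched in pure Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: a 16 kHz sample rate, a speed of sound of 343 m/s, hypothetical ear positions at ±8.75 cm on the x-axis, one source position per input sample, and an amplitude gain of `ref_distance / d` (intensity falling as 1/d² implies amplitude falling as 1/d). The paper's exact delay and gain conventions may differ, and `vocoder` is a hypothetical callable standing in for the pre-trained vocoder, assumed here to be applied to each channel separately.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
SPEED_OF_SOUND = 343.0  # m/s (assumed)
LEFT_EAR: Vec3 = (-0.0875, 0.0, 0.0)  # hypothetical ear positions in metres
RIGHT_EAR: Vec3 = (0.0875, 0.0, 0.0)


def warp_channel(x: Sequence[float], delays: Sequence[float]) -> List[float]:
    """Stage 1 (GTW) for one ear: y[n] = x[n - delays[n]].

    Fractional delays are resolved by linear interpolation between the two
    neighbouring input samples; samples outside the input are treated as zero.
    """
    y = []
    for n, d in enumerate(delays):
        pos = n - d
        i = math.floor(pos)
        frac = pos - i
        a = x[i] if 0 <= i < len(x) else 0.0
        b = x[i + 1] if 0 <= i + 1 < len(x) else 0.0
        y.append((1.0 - frac) * a + frac * b)
    return y


def mono_to_binaural(
    x: Sequence[float],
    source_path: Sequence[Vec3],
    sr: int = 16000,
    ref_distance: float = 1.0,
) -> Tuple[List[float], List[float]]:
    """Stages 1 and 2: geometric time warping followed by amplitude scaling."""
    if len(source_path) != len(x):
        raise ValueError("need one source position per input sample")
    channels = []
    for ear in (LEFT_EAR, RIGHT_EAR):
        # Source-to-ear Euclidean distance at every sample (floored to avoid /0).
        dists = [max(math.dist(src, ear), 1e-3) for src in source_path]
        # Propagation delay in samples; the left/right difference is the ITD.
        delays = [d / SPEED_OF_SOUND * sr for d in dists]
        warped = warp_channel(x, delays)
        # Stage 2 (AS): amplitude gain ~ 1/d, which produces the ILD.
        channels.append([s * ref_distance / d for s, d in zip(warped, dists)])
    return channels[0], channels[1]


def refine(
    channel: List[float],
    vocoder: Callable[[List[float]], List[float]],
    num_iters: int = 3,
) -> List[float]:
    """Stage 3: repeatedly pass one channel through a (hypothetical) vocoder."""
    for _ in range(num_iters):
        channel = vocoder(channel)
    return channel
```

For example, with a unit impulse and the source fixed at `(1.0, 0.0, 0.0)` (to the listener's right), the impulse peaks in the right channel about 8 samples earlier than in the left channel and with a larger total amplitude, which are the ITD and ILD cues described above.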

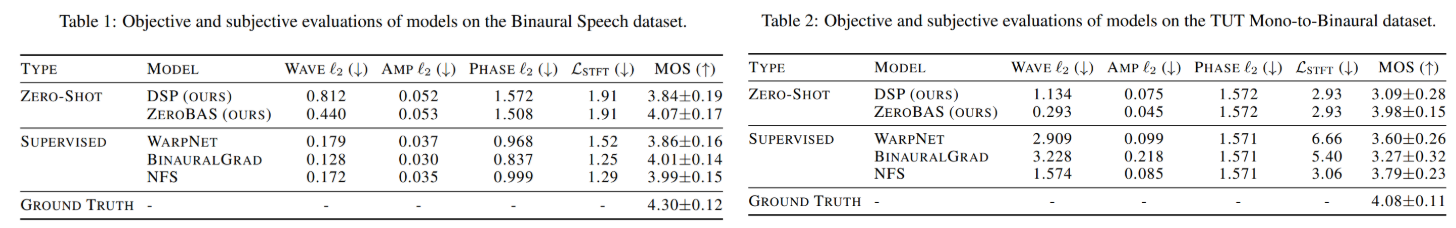

ZeroBAS was evaluated on two datasets (results in Tables 1 and 2): the Binaural Speech dataset and the newly introduced TUT Mono-to-Binaural dataset. The latter was designed to test how well mono-to-binaural synthesis methods generalize across different acoustic environments. In objective evaluations, ZeroBAS showed significant improvements over DSP baselines and approached the performance of supervised methods despite not being trained on binaural data. Notably, ZeroBAS achieved superior results on the out-of-distribution TUT dataset, highlighting its robustness across conditions.

Subjective evaluations further confirmed the effectiveness of ZeroBAS. In Mean Opinion Score (MOS) evaluations, human listeners rated ZeroBAS outputs as slightly more natural than those of the supervised methods. In MUSHRA evaluations, ZeroBAS achieved spatial quality comparable to the supervised models, with listeners unable to discern a statistically significant difference.

Despite its strong results, the method has some limitations. ZeroBAS has difficulty handling phase information directly because the vocoder lacks positional conditioning, and it relies on general-purpose models rather than environment-specific ones. Even so, its ability to generalize effectively highlights the potential of zero-shot learning in binaural audio synthesis.

In conclusion, ZeroBAS offers an exciting, environment-agnostic approach to binaural speech synthesis that achieves perceptual quality comparable to supervised methods without requiring binaural training data. Its strong performance across diverse acoustic environments makes it a promising candidate for real-world applications in AR, VR, and immersive audio systems.

Check out the Paper and Details. All credit for this research goes to the researchers of this project.

Vineet Kumar is a Consulting Intern at MarktechPost. He is currently pursuing his bachelor's degree at the Indian Institute of Technology (IIT), Kanpur. He is a machine learning enthusiast, passionate about research and the latest advances in Deep Learning, Computer Vision, and related fields.
