Google has released a new family of vision language models called PaliGemma. PaliGemma takes an image and a text prompt as input and produces text as output. The architecture of the PaliGemma vision language model family (GitHub) consists of the SigLIP-So400m image encoder and the Gemma-2B text decoder. SigLIP is a state-of-the-art model that can understand both images and text. Like CLIP, it consists of an image encoder and a text encoder that are trained jointly. Gemma is a decoder-only model for text generation. By using a linear adapter to connect the SigLIP image encoder to Gemma, PaliGemma becomes a powerful vision language model. Like PaLI-3, the combined PaliGemma model is pre-trained on image-text data and can then be easily fine-tuned on downstream tasks such as captioning or referring segmentation.

PaliGemma was trained using the big_vision codebase. Many other models have already been developed with the same codebase, including CapPa, SigLIP, LiT, BiT, and the original ViT.

The PaliGemma release includes three types of models, each offering a different set of capabilities:

- PT Checkpoints: Pre-trained models that are highly adaptable and designed to be fine-tuned on a variety of downstream tasks.

- Mix Checkpoints: PT models fine-tuned on a mixture of tasks. They are suitable for general-purpose inference with free-text prompts and can be used for research purposes only.

- FT Checkpoints: A collection of fine-tuned models, each specialized on a different academic benchmark. They come in various resolutions and are intended for research purposes only.

The models are available in three precision levels (bfloat16, float16, and float32) and three resolutions (224×224, 448×448, and 896×896). Each repository contains the checkpoints for a given task and resolution, with one revision for each available precision. The main branch of each repository holds the float32 checkpoints, while the bfloat16 and float16 revisions hold the checkpoints in the matching precision. Note that the models for the original JAX implementation and the models compatible with Hugging Face transformers live in separate repositories.
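The branch layout above can be captured in a small helper. This is a minimal sketch: the `google/paligemma-3b-{kind}-{resolution}` naming pattern and the helper name `checkpoint_ref` are assumptions made for illustration, and the default branch is assumed to be called `main`, as is usual on the Hugging Face Hub. Check the actual repository names before relying on it.

```python
PRECISIONS = ("float32", "bfloat16", "float16")
RESOLUTIONS = (224, 448, 896)


def checkpoint_ref(kind: str, resolution: int, precision: str = "float32") -> dict:
    """Return a repository id and revision for one checkpoint.

    The repository naming pattern is an assumption, not taken from the article.
    """
    if resolution not in RESOLUTIONS:
        raise ValueError(f"unsupported resolution: {resolution}")
    if precision not in PRECISIONS:
        raise ValueError(f"unsupported precision: {precision}")
    # float32 weights sit on the default branch; the other precisions
    # are stored in revisions named after the precision itself.
    revision = "main" if precision == "float32" else precision
    return {
        "repo_id": f"google/paligemma-3b-{kind}-{resolution}",
        "revision": revision,
    }


print(checkpoint_ref("mix", 224, "bfloat16"))
```

The returned values would typically be passed as the model id and the `revision` argument of a `from_pretrained` call.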

High-resolution models offer better quality but require much more memory because their input sequences are much longer. This matters for users with limited resources. For most tasks, however, the quality gain is negligible, which makes the 224 versions a suitable choice for most uses.

PaliGemma is a single-turn vision language model that works best when fine-tuned for a particular use case. It is not designed for conversational use. It excels at specific tasks, but it may not be the best choice for every application.

Users can specify the task the model should perform by conditioning it with task prefixes such as “detect” or “segment.” This works because the pre-trained models were trained on a range of tasks that gave them a wide set of skills, such as question answering, captioning, and segmentation. However, they are not meant to be used directly; they are designed to be fine-tuned on specific tasks using a similar prompt structure. For interactive testing, the 'mix' family of models, fine-tuned on a mixture of tasks, can be used.
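To make the memory cost concrete, the number of image tokens can be estimated from the resolution. The sketch below assumes the image encoder splits the input into 14×14 pixel patches and emits one token per patch. The patch size is not stated in this article, so treat it as an assumption.

```python
PATCH_SIZE = 14  # assumed patch size of the image encoder


def image_token_count(resolution: int, patch_size: int = PATCH_SIZE) -> int:
    """Number of image tokens for a square input of the given side length."""
    if resolution % patch_size != 0:
        raise ValueError("resolution must be a multiple of the patch size")
    patches_per_side = resolution // patch_size
    return patches_per_side * patches_per_side


for res in (224, 448, 896):
    print(res, image_token_count(res))
```

Under this assumption, doubling the resolution quadruples the number of image tokens, which is why the larger checkpoints need so much more memory.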
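The prefix convention can be illustrated with a small prompt builder. It is only a sketch: `build_prompt` is a hypothetical helper, the "detect" and "segment" prefixes come from the text above, and the "caption" prefix followed by a language code is an assumption about the prompt format.

```python
def build_prompt(task: str, target: str = "", lang: str = "en") -> str:
    """Compose a task-prefixed text prompt (hypothetical helper)."""
    if task in ("detect", "segment"):
        if not target:
            raise ValueError(f"'{task}' needs an entity to look for")
        return f"{task} {target}"
    if task == "caption":
        # Assumed format: the prefix followed by a language code.
        return f"caption {lang}"
    raise ValueError(f"unknown task prefix: {task}")


print(build_prompt("detect", "cat"))
print(build_prompt("segment", "cat"))
print(build_prompt("caption"))
```

The resulting string is what would be passed to the model as the text input alongside the image.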

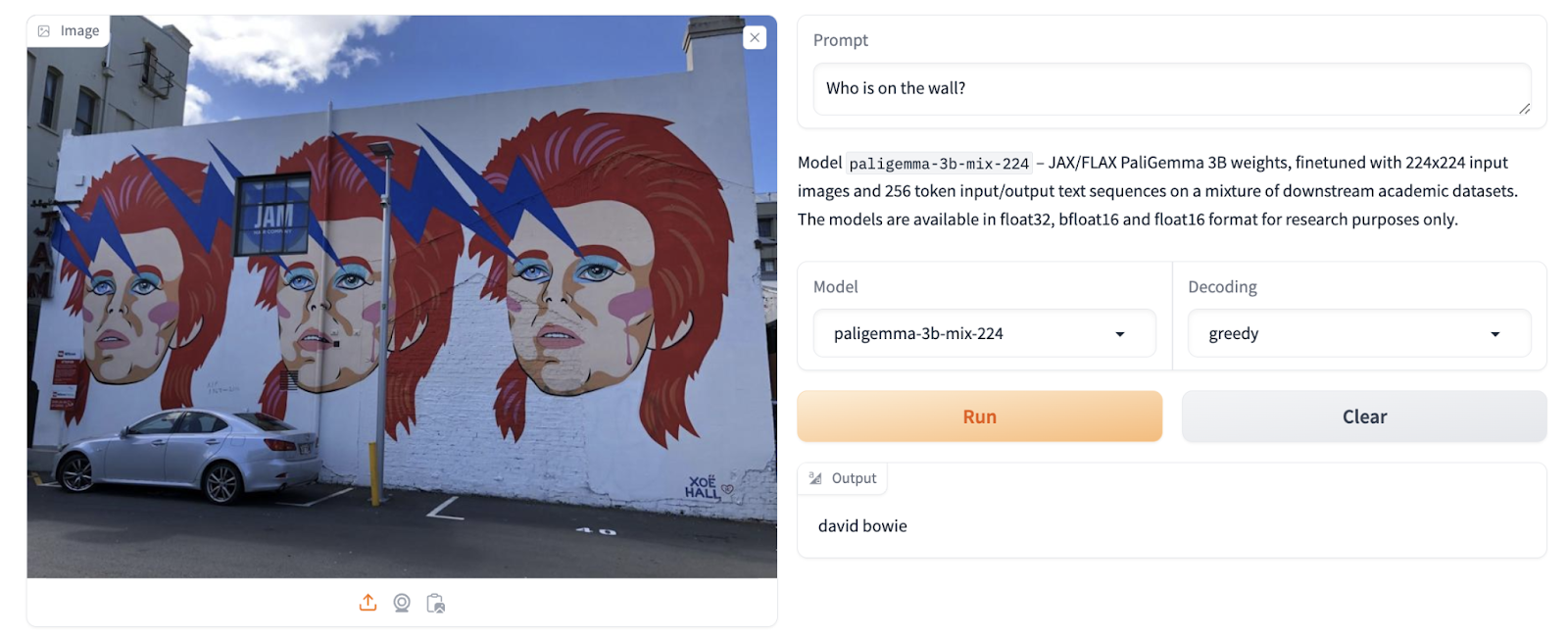

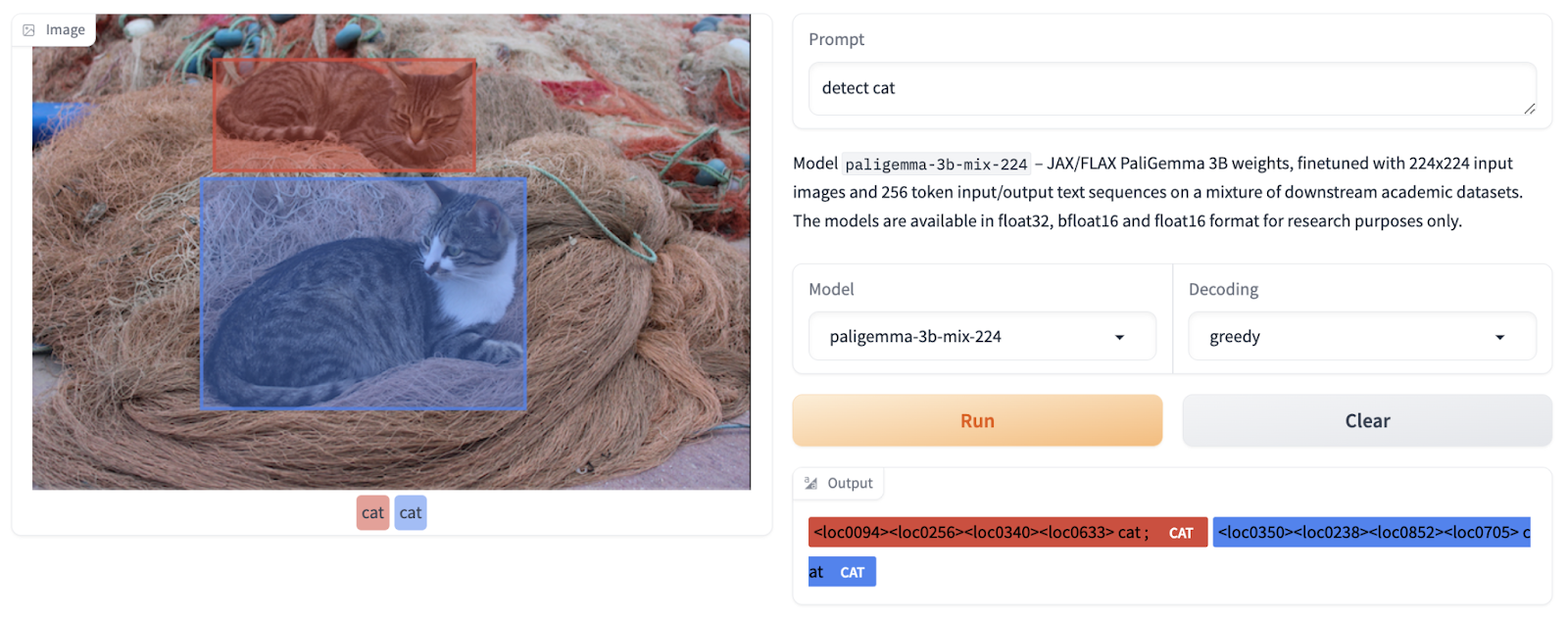

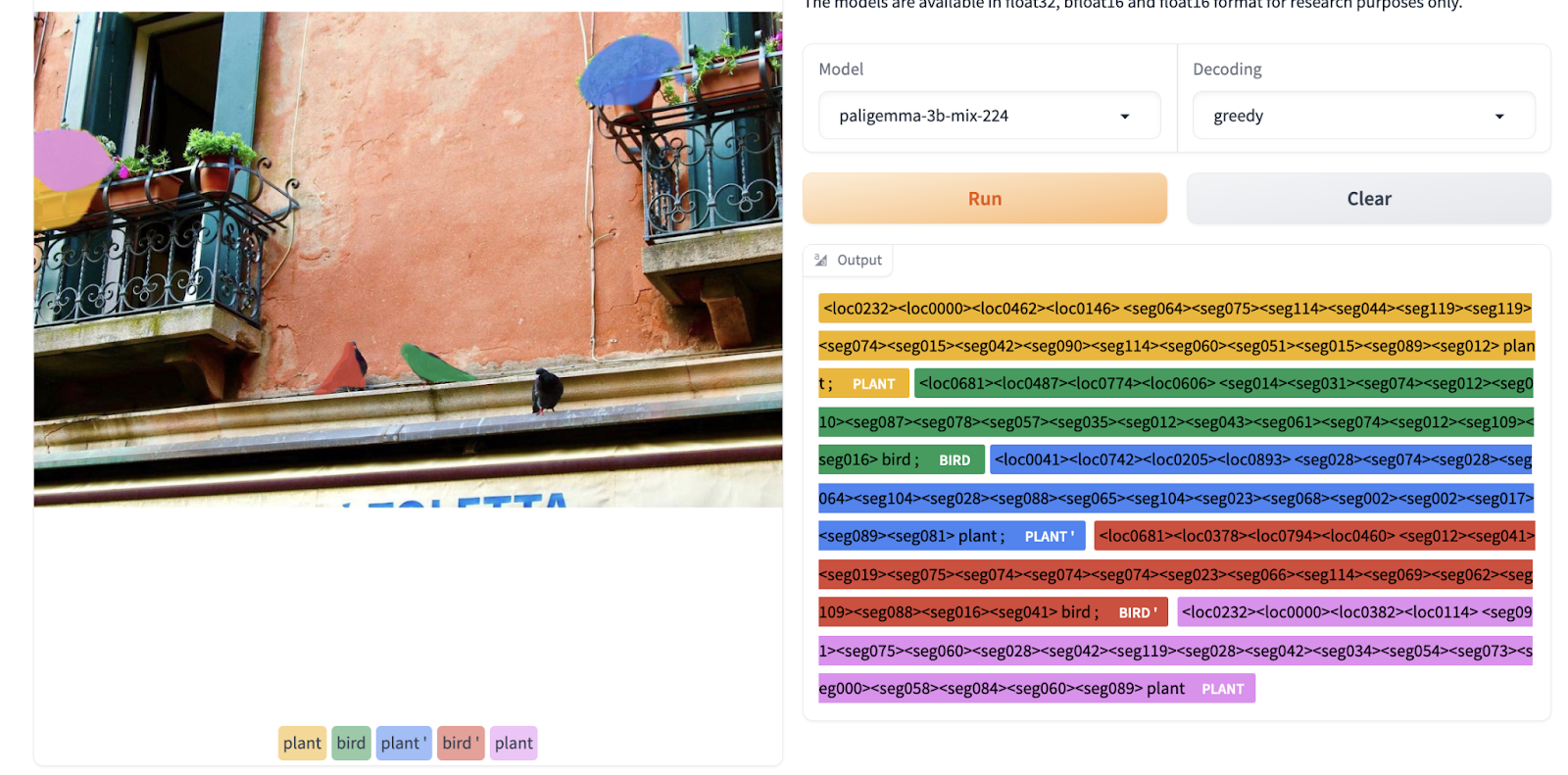

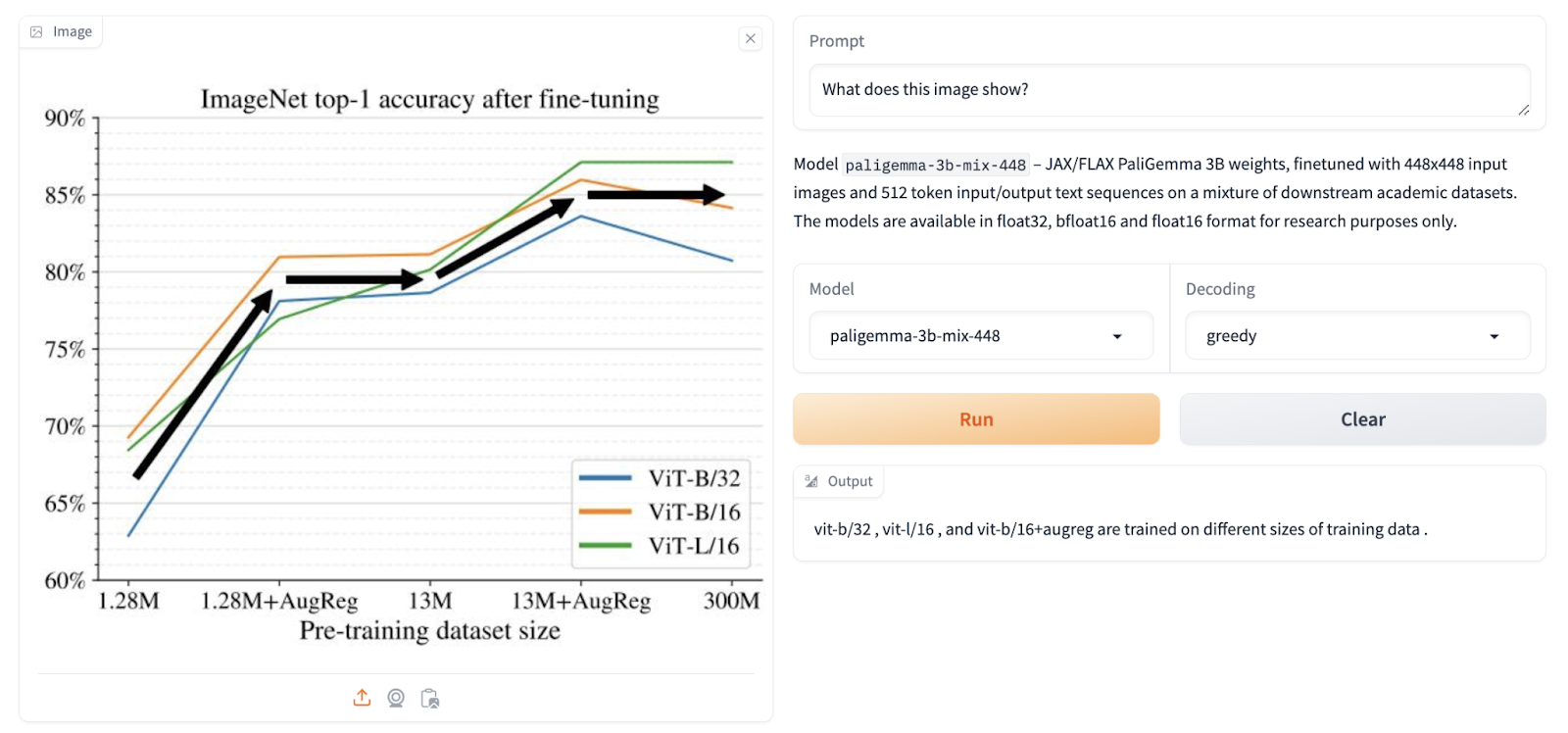

Below are some examples of what PaliGemma can do: it can caption images, answer questions about images, detect entities in images, segment entities within images, and reason about and understand documents. These are just a few of its capabilities.

- When prompted, PaliGemma can caption images. With the mix checkpoints, users can experiment with different captioning prompts to see how the model responds.

- PaliGemma can answer a question about an image that is passed along with it.

- PaliGemma can use the detect (entity) prompt to find entities in an image. The bounding box coordinates are output as special location tokens, where each value is an integer denoting a normalized coordinate.

- When prompted with the segment (entity) prompt, PaliGemma's mix checkpoints can also segment entities within an image. Because natural language descriptions are used to refer to the items of interest, this technique is known as referring expression segmentation. The output is a sequence of location and segmentation tokens. As described above, the location tokens represent a bounding box. The segmentation tokens can be processed further to produce segmentation masks.

- PaliGemma's mix checkpoints are very good at document understanding and reasoning.
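The detection output described above can be converted back into pixel coordinates. The sketch below assumes location tokens of the form `<locNNNN>`, four per box in the order y_min, x_min, y_max, x_max, with values normalized to 1024 bins and multiple detections separated by semicolons. None of these specifics are stated in this article, so verify them against the model card before use.

```python
import re

# Four consecutive location tokens followed by the entity label.
BOX_PATTERN = re.compile(r"((?:<loc\d{4}>){4})\s*([^<;]+)")


def parse_detections(text: str, width: int, height: int, bins: int = 1024) -> list:
    """Convert '<locNNNN>' tokens into pixel boxes (x_min, y_min, x_max, y_max)."""
    detections = []
    for loc_tokens, label in BOX_PATTERN.findall(text):
        values = [int(v) / bins for v in re.findall(r"<loc(\d{4})>", loc_tokens)]
        y_min, x_min, y_max, x_max = values  # assumed token order
        detections.append({
            "label": label.strip(),
            "box": (x_min * width, y_min * height, x_max * width, y_max * height),
        })
    return detections


sample = "<loc0256><loc0128><loc0768><loc0896> cat"
print(parse_detections(sample, width=1024, height=512))
```

Segmentation tokens need an additional decoding step to become masks, which this sketch does not attempt.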



Dhanshree Shenwai is a Computer Science Engineer with experience in FinTech companies covering the Finance, Cards & Payments, and Banking domains, and has a keen interest in AI applications. He is excited to explore new technologies and advancements in today's evolving world that make life easier for everyone.
