Reasoning is essential in problem solving, as it allows humans to make decisions and obtain solutions. Two main types of reasoning are used in problem solving: direct reasoning and inverse reasoning. Direct reasoning involves working from a given question toward a solution, using incremental steps. In contrast, backward reasoning starts with a potential solution and works its way back to the original question. This approach is beneficial in tasks that require validation or error checking, as it helps identify inconsistencies or skipped steps in the process.

One of the central challenges of artificial intelligence is incorporating reasoning methods, especially inverse reasoning, into machine learning models. Current systems are based on direct reasoning, generating answers from a given set of data. However, this approach can lead to errors or incomplete solutions, as the model needs to evaluate and correct its reasoning path. Introducing inverse reasoning into ai models, particularly large language models (LLMs), presents an opportunity to improve the accuracy and reliability of these systems.

Existing methods for reasoning in LLMs primarily focus on direct reasoning, where models generate responses based on a prompt. Some strategies, such as knowledge distillation, attempt to improve reasoning by fitting models with correct reasoning steps. These methods are typically used during testing, where the answers generated by the model are verified by reverse reasoning. Although this improves model accuracy, reverse reasoning has not yet been incorporated into the model building process, limiting the potential benefits of this technique.

Researchers from UNC Chapel Hill, Google Cloud ai Research, and Google DeepMind introduced the Reverse Enhanced Thinking (REVTINK) framework. Developed by the Google Cloud ai Research and Google DeepMind teams, REVTINK integrates reverse reasoning directly into LLM training. Rather than using backward reasoning simply as a validation tool, this framework incorporates it into the training process by teaching models to handle both forward and backward reasoning tasks. The goal is to create a more robust and efficient reasoning process that can be used to generate answers for a wide variety of tasks.

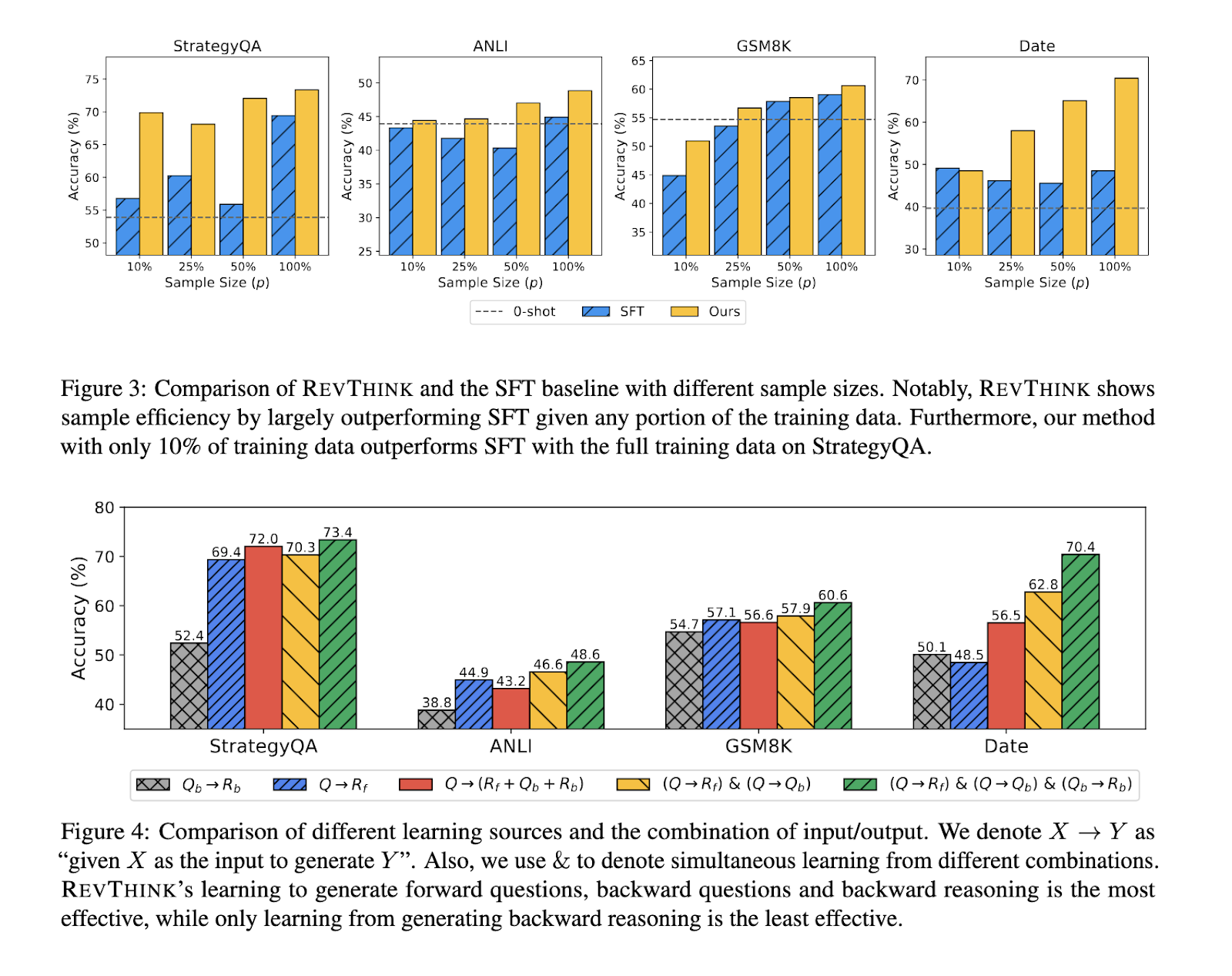

The REVTINK framework trains models on three distinct tasks: generating forward reasoning from a question, backward questioning from a solution, and backward reasoning. By learning to reason in both directions, the model becomes more adept at tackling complex tasks, especially those that require a step-by-step verification process. The dual approach of forward and backward reasoning improves the model's ability to verify and refine its results, ultimately leading to increased accuracy and reduced errors.

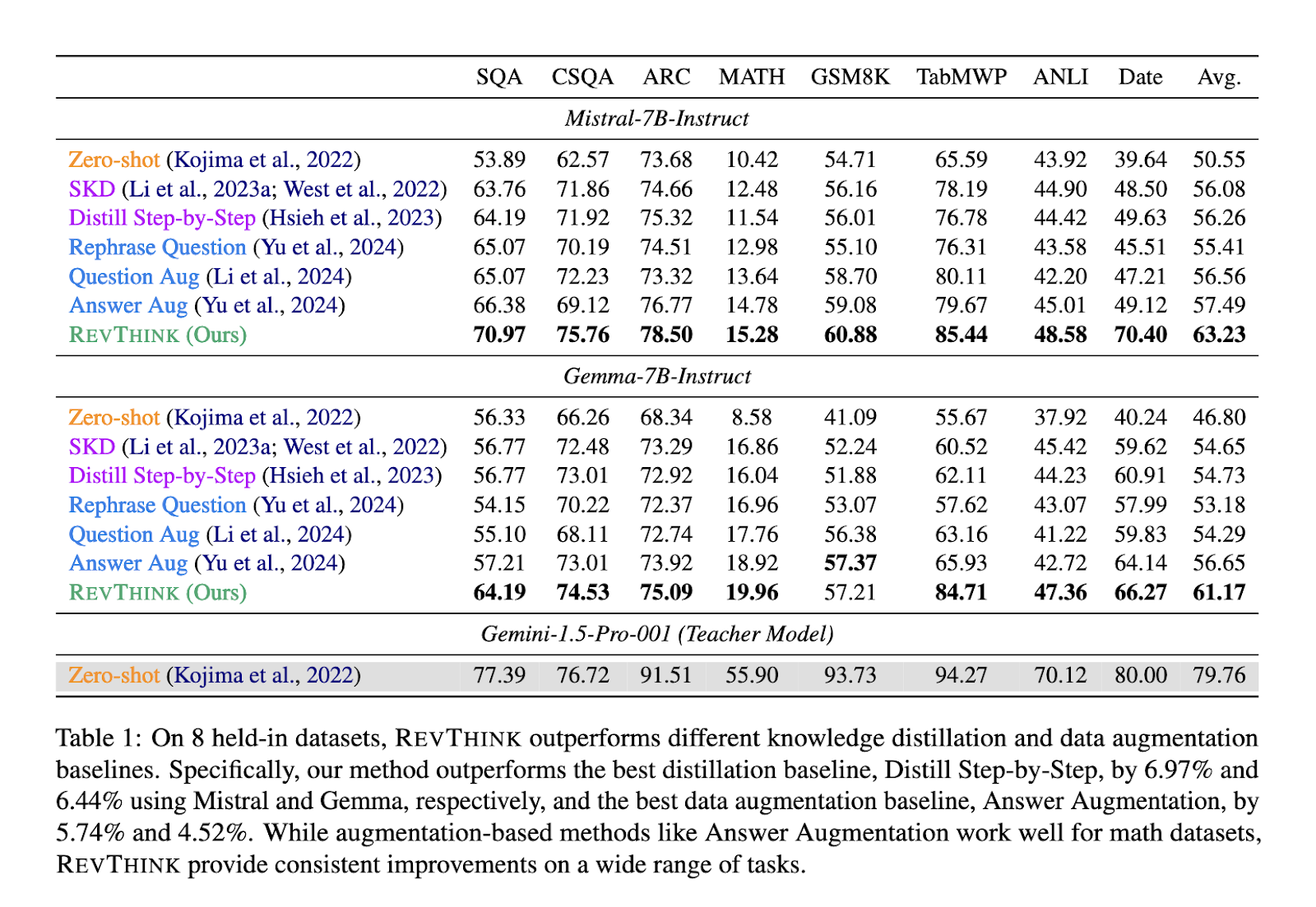

REVTINK performance testing showed significant improvements over traditional methods. The research team evaluated the framework on 12 diverse data sets, including tasks related to common sense reasoning, mathematical problem solving, and logic tasks. Compared to zero performance, the model achieved an average improvement of 13.53%, demonstrating its ability to better understand and generate answers for complex queries. The REVTINK framework outperformed robust knowledge distillation methods by 6.84%, highlighting its superior performance. Furthermore, the model was found to be very efficient in terms of sample usage. Much less training data was needed to achieve these results, making it a more efficient option than traditional methods that rely on larger data sets.

In terms of specific metrics, the performance of the REVTINK model in different domains also illustrated its versatility. The model showed a 9.2% improvement in logical reasoning tasks compared to conventional models. He demonstrated a 14.1% increase in common sense reasoning accuracy, indicating his strong ability to reason in everyday situations. The efficiency of the method was also highlighted as it required 20% less training data and outperformed previous benchmarks. This efficiency makes REVTINK an attractive option for applications where training data may be limited or expensive.

The introduction of REVTINK marks a significant advancement in the way ai models handle reasoning tasks. The model can generate more accurate responses using fewer resources by integrating reverse reasoning into the training process. The framework's ability to improve performance across multiple domains (especially with less data) demonstrates its potential to revolutionize ai reasoning. Overall, REVTINK promises to create more reliable ai systems that handle various tasks, from mathematical problems to real-world decision making.

Verify he Paper. All credit for this research goes to the researchers of this project. Also, don't forget to follow us on <a target="_blank" href="https://twitter.com/Marktechpost”>twitter and join our Telegram channel and LinkedIn Grabove. If you like our work, you will love our information sheet.. Don't forget to join our SubReddit over 60,000 ml.

(<a target="_blank" href="https://landing.deepset.ai/webinar-fast-track-your-llm-apps-deepset-haystack?utm_campaign=2412%20-%20webinar%20-%20Studio%20-%20Transform%20Your%20LLM%20Projects%20with%20deepset%20%26%20Haystack&utm_source=marktechpost&utm_medium=desktop-banner-ad” target=”_blank” rel=”noreferrer noopener”>Must attend webinar): 'Transform proofs of concept into production-ready ai applications and agents' (Promoted)

Nikhil is an internal consultant at Marktechpost. He is pursuing an integrated double degree in Materials at the Indian Institute of technology Kharagpur. Nikhil is an ai/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in materials science, he is exploring new advances and creating opportunities to contribute.

<script async src="//platform.twitter.com/widgets.js” charset=”utf-8″>

NEWSLETTER

NEWSLETTER