Image by author

Anthropic’s conversational AI assistant, Claude 2, is the latest version of the model and comes with significant improvements in performance, response length, and availability compared to its predecessor. It can be accessed through Anthropic’s API and a new public beta website at claude.ai.

Claude 2 is known for being easy to talk to, clearly explaining its reasoning, avoiding harmful outputs, and having a solid memory. It also has enhanced reasoning abilities.

Claude 2 showed significant improvement on the multiple-choice section of the bar exam, scoring 76.5% compared to Claude 1.3’s 73.0%. It also scores higher than over 90% of human test takers on the reading and writing sections of the GRE. On coding assessments like HumanEval, Claude 2 achieved an accuracy of 71.2%, a significant increase from Claude 1.3’s 56.0%.

The Claude 2 API is offered to Anthropic’s thousands of business customers at the same price as Claude 1.3. You can use it through the web API as well as the Python and TypeScript clients. This tutorial will guide you through setting up and using the Claude 2 Python API and introduce the various features it offers.

Before we can access the API, we must first request early access. Fill out the form and wait for confirmation. Be sure to use your business email address; I used my @kdnuggets.com address.

After receiving the confirmation email, you will be provided access to the console. From there, you can generate API keys by going to account settings.

Install the Anthropic Python client using pip (`pip install anthropic`). Make sure you are using the latest version of Python.

Configure the anthropic client using the API key.

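```python
import os

import anthropic

# os.environ is a mapping, so index it with [] rather than calling it
client = anthropic.Anthropic(api_key=os.environ["API_KEY"])
```

Instead of passing the API key when creating the client object, you can set the `ANTHROPIC_API_KEY` environment variable to your key, and the client will pick it up automatically.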
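If you rely on the `ANTHROPIC_API_KEY` environment variable, it can help to fail early with a clear message when it is missing. The sketch below uses a hypothetical helper, `resolve_api_key`, which is not part of the anthropic package; it only assumes the environment variable name mentioned above.

```python
import os
from typing import Mapping, Optional


def resolve_api_key(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the Anthropic API key, raising a clear error if it is absent.

    Hypothetical helper for illustration; not part of the anthropic package.
    """
    # Default to the real process environment; tests can pass a plain dict.
    env = os.environ if env is None else env
    key = env.get("ANTHROPIC_API_KEY")
    if not key:
        raise RuntimeError(
            "ANTHROPIC_API_KEY is not set; export it before creating the client."
        )
    return key
```

You could then pass `api_key=resolve_api_key()` to the client constructor, so a missing key surfaces as one readable error before any request is made.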

Here is the basic synchronous version for generating a response from a prompt:

- Import all the necessary modules.

- Initialize the client using the API key.

- To generate a response, provide the model name, the maximum number of tokens to sample, and the prompt.

- Your prompt should start with `HUMAN_PROMPT` (`"\n\nHuman:"`) and end with `AI_PROMPT` (`"\n\nAssistant:"`).

- Print the response.
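The prompt format described in the steps above can be sketched as a small helper. `build_prompt` is a hypothetical name, and its optional `history` parameter assumes that earlier turns simply alternate between the same Human and Assistant markers. The two constants are redefined locally with the values listed above so the snippet runs without the anthropic package installed.

```python
from typing import Sequence, Tuple

# Same string values as the anthropic package's HUMAN_PROMPT and AI_PROMPT,
# defined here so the sketch is self-contained.
HUMAN_PROMPT = "\n\nHuman:"
AI_PROMPT = "\n\nAssistant:"


def build_prompt(question: str, history: Sequence[Tuple[str, str]] = ()) -> str:
    """Format a prompt for Claude 2's text completions endpoint.

    `history` holds earlier (human, assistant) turn pairs; the prompt always
    ends with AI_PROMPT so the model writes the next Assistant turn.
    """
    parts = []
    for human_turn, assistant_turn in history:
        parts.append(f"{HUMAN_PROMPT} {human_turn}{AI_PROMPT} {assistant_turn}")
    parts.append(f"{HUMAN_PROMPT} {question}{AI_PROMPT}")
    return "".join(parts)
```

For a single question, `build_prompt("How do I find soul mate?")` produces the same string as the f-string used in the example below.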

```python
import os

from anthropic import Anthropic, HUMAN_PROMPT, AI_PROMPT

# os.environ is a mapping, so index it with [] rather than calling it
anthropic = Anthropic(
    api_key=os.environ["ANTHROPIC_API_KEY"],
)

# Send a single-turn prompt to Claude 2 and cap the reply at 300 tokens
completion = anthropic.completions.create(
    model="claude-2",
    max_tokens_to_sample=300,
    prompt=f"{HUMAN_PROMPT} How do I find soul mate?{AI_PROMPT}",
)

print(completion.completion)
```

Output:

As we can see, the results are pretty good. I think they are even better than GPT-4’s.

Here are some tips for finding your soulmate:

- Focus on becoming your best self. Pursue your passions and interests, grow as a person, and work on developing yourself into someone you admire. When you are living your best life, you will attract the right person for you.

- Put yourself out there and meet new people. Expand your social circles by trying new activities, joining clubs, volunteering, or using dating apps. The more people you meet, the more likely you are to encounter someone special...

You can also call the Claude 2 API using asynchronous requests.

Synchronous APIs execute requests sequentially, blocking until a response is received before making the next call. Asynchronous APIs allow multiple requests to be in flight at the same time without blocking, handling responses as they complete through callbacks, promises, or events. This makes asynchronous APIs more efficient and scalable.
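To make the difference concrete without calling the API, here is a simulated sketch. `fake_request` is a hypothetical stand-in that uses `asyncio.sleep` to mimic network latency. With three requests, the sequential version waits for each one in turn, while `asyncio.gather` lets them overlap, so the concurrent run should take roughly one request’s latency instead of three.

```python
import asyncio
import time


async def fake_request(prompt: str, delay: float = 0.2) -> str:
    """Hypothetical stand-in for an API call; asyncio.sleep models latency."""
    await asyncio.sleep(delay)
    return f"answer to {prompt!r}"


async def run_sequential(prompts):
    # Each await finishes before the next request starts.
    return [await fake_request(p) for p in prompts]


async def run_concurrent(prompts):
    # gather schedules all coroutines at once; results keep the input order.
    return await asyncio.gather(*(fake_request(p) for p in prompts))


def timed(coro_fn, prompts):
    """Run one of the coroutine functions above and measure wall-clock time."""
    start = time.perf_counter()
    results = asyncio.run(coro_fn(prompts))
    return results, time.perf_counter() - start


if __name__ == "__main__":
    prompts = ["a", "b", "c"]
    _, t_seq = timed(run_sequential, prompts)
    _, t_con = timed(run_concurrent, prompts)
    print(f"sequential: {t_seq:.2f}s, concurrent: {t_con:.2f}s")
```

With the real client, you would presumably replace `fake_request` with an awaited `completions.create(...)` call on an `AsyncAnthropic` instance; keep your account’s rate limits in mind before sending many requests at once.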

- Import `AsyncAnthropic` instead of `Anthropic`.

- Define an `async` function.

- Use `await` with every API call.

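```python
from anthropic import AsyncAnthropic, HUMAN_PROMPT, AI_PROMPT

# Reads the key from the ANTHROPIC_API_KEY environment variable
anthropic = AsyncAnthropic()


async def main():
    # await suspends main() until the response arrives, without blocking the event loop
    completion = await anthropic.completions.create(
        model="claude-2",
        max_tokens_to_sample=300,
        prompt=f"{HUMAN_PROMPT} What percentage of nitrogen is present in the air?{AI_PROMPT}",
    )
    print(completion.completion)


# Top-level await works in Jupyter Notebook; in a script, use asyncio.run(main())
await main()
```

Output: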

We got accurate results.

About 78% of the air is nitrogen. Specifically:

- Nitrogen makes up approximately 78.09% of the air by volume.

- Oxygen makes up approximately 20.95% of air.

- The remaining 0.96% is made up of other gases like argon, carbon dioxide, neon, helium, and hydrogen.

Note: If you are using the asynchronous client in Jupyter Notebook, use `await main()`. Otherwise, use `asyncio.run(main())`.

Streaming has become increasingly popular for large language models. Instead of waiting for the full response, you can start processing the result as soon as it is available. This approach helps reduce perceived latency by returning the output of the language model token by token, rather than all at once.

You just need to set the `stream` argument of `completions.create` to `True`. Claude 2 uses server-sent events (SSE) to stream responses.

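```python
from anthropic import Anthropic, HUMAN_PROMPT, AI_PROMPT

anthropic = Anthropic()

# stream=True returns an iterator of partial completions instead of one response
stream = anthropic.completions.create(
    prompt=f"{HUMAN_PROMPT} Could you please write a Python code to analyze a loan dataset?{AI_PROMPT}",
    max_tokens_to_sample=300,
    model="claude-2",
    stream=True,
)

# Print each chunk as soon as it arrives
for completion in stream:
    print(completion.completion, end="", flush=True)
```

Output: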

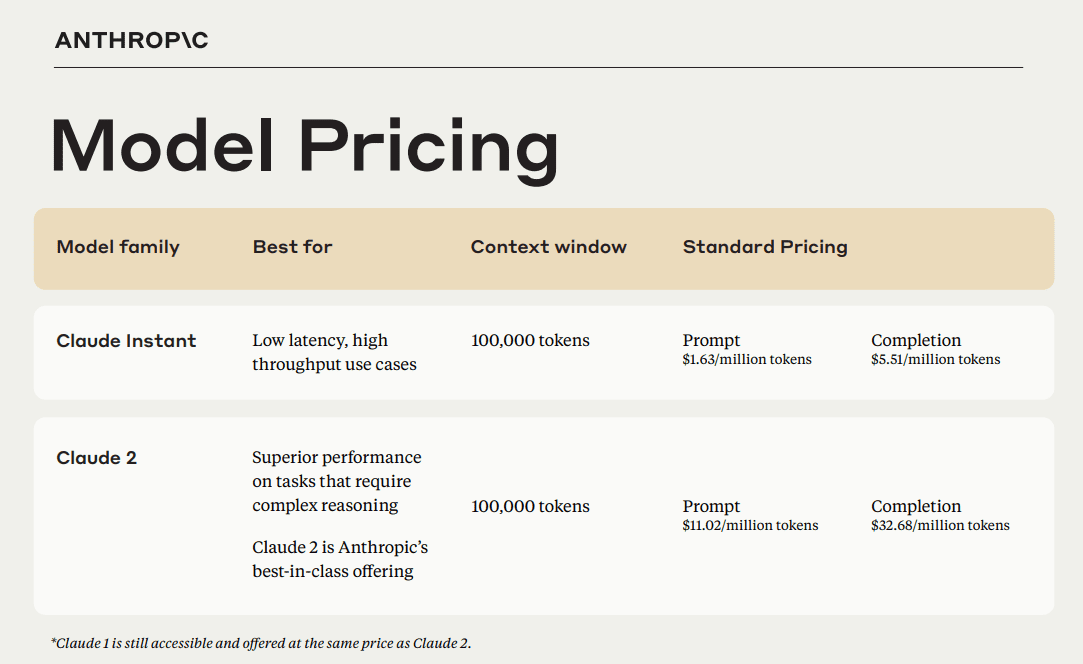

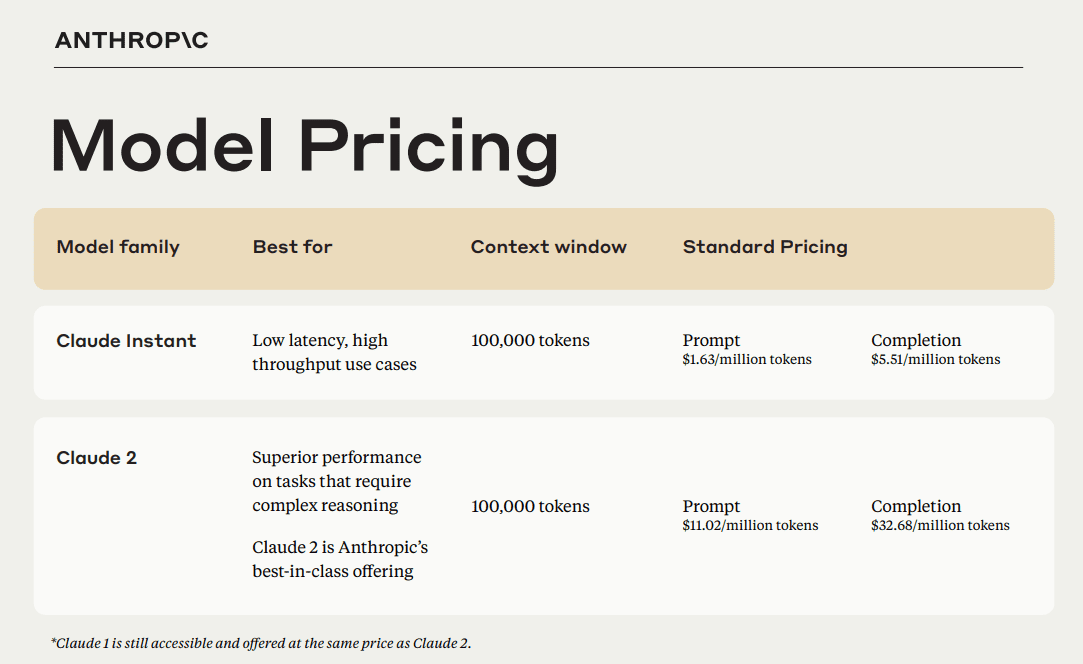
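The streaming loop above prints each chunk’s `completion` text as it arrives. If you also need the full response afterwards (for logging or billing, say), you can accumulate the pieces while printing them. The sketch below simulates the stream with a hypothetical `FakeChunk` class and `fake_stream` generator so it runs offline; it assumes only that each streamed item exposes a `.completion` string, as in the example above.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass
class FakeChunk:
    """Hypothetical stand-in for a streamed event; only `.completion` is used."""
    completion: str


def fake_stream(text: str, size: int = 4) -> Iterator[FakeChunk]:
    # Yield the text in small pieces, the way a streamed response arrives.
    for i in range(0, len(text), size):
        yield FakeChunk(text[i : i + size])


def consume(stream: Iterable[FakeChunk], echo: bool = True) -> str:
    """Optionally print each piece as it arrives and return the full text."""
    pieces = []
    for chunk in stream:
        if echo:
            print(chunk.completion, end="", flush=True)
        pieces.append(chunk.completion)
    if echo:
        print()
    return "".join(pieces)


if __name__ == "__main__":
    full_text = consume(fake_stream("Streaming reduces perceived latency."))
```

Collecting pieces in a list and joining once at the end avoids repeatedly copying a growing string.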

Billing is one of the most important aspects of integrating the API into your application. It will help you plan your budget and charge your clients. Like other LLM APIs, the Claude API is charged on a per-token basis. You can refer to the following table to understand the pricing structure.

Image from Anthropic
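With per-token pricing, the cost of a request is the number of prompt tokens times the prompt price plus the number of completion tokens times the completion price. A minimal sketch of that arithmetic follows. `estimate_cost` is a hypothetical helper, it assumes prices are quoted per million tokens, and the prices in the usage example are placeholders, not Anthropic’s actual rates, so substitute the figures from the pricing table.

```python
def estimate_cost(
    prompt_tokens: int,
    completion_tokens: int,
    prompt_price_per_million: float,
    completion_price_per_million: float,
) -> float:
    """Return the dollar cost of one request under per-token pricing.

    Hypothetical helper; prices are assumed to be in dollars per million tokens.
    """
    if prompt_tokens < 0 or completion_tokens < 0:
        raise ValueError("token counts must be non-negative")
    # Prompt and completion tokens are billed at separate rates.
    return (
        prompt_tokens * prompt_price_per_million
        + completion_tokens * completion_price_per_million
    ) / 1_000_000
```

For example, `estimate_cost(1_000, 300, 10.0, 30.0)` returns about 0.019 under those placeholder prices.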

An easy way to count the number of tokens is to pass a prompt or response to the client’s `count_tokens` method.

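```python
from anthropic import Anthropic

client = Anthropic()

# Returns the number of tokens in the given text
client.count_tokens('What percentage of nitrogen is present in the air?')
```

In addition to basic response generation, you can use the API’s other features to fully integrate it into your application: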

- Using types: Requests use TypedDicts and responses use Pydantic models, which enables type checking and autocompletion.

- Handling errors: Errors raised include `APIConnectionError` for connection problems and `APIStatusError` for HTTP errors.

- Default headers: The `anthropic-version` header is added automatically. This can be customized.

- Logging: Logging can be enabled by setting the `ANTHROPIC_LOG` environment variable.

- HTTP client configuration: The underlying httpx client can be customized for proxies, transports, etc.

- HTTP resource management: The client can be closed manually or used as a context manager.

- Versioning: The package follows semantic versioning conventions, but some backwards-incompatible changes may be released as minor versions.
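The error types listed above lend themselves to a simple retry policy for transient failures such as dropped connections. The following is a minimal sketch using a hypothetical `call_with_retries` helper with exponential backoff. It defaults to Python’s built-in `ConnectionError` so it runs without the SDK; with the anthropic package you would pass something like `retryable=(anthropic.APIConnectionError,)` instead.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def call_with_retries(
    fn: Callable[[], T],
    retryable: Tuple[Type[BaseException], ...] = (ConnectionError,),
    max_attempts: int = 3,
    base_delay: float = 0.5,
) -> T:
    """Call fn(), retrying with exponential backoff on retryable errors.

    Hypothetical helper, not part of the anthropic package. Errors that are
    not in `retryable` propagate immediately.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except retryable:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            # Backoff doubles each time (base, 2*base, 4*base, ...) plus small jitter.
            time.sleep(base_delay * 2 ** (attempt - 1) + random.uniform(0, 0.1))
    raise RuntimeError("unreachable")
```

Check the SDK’s documentation before adding this layer: the client may already retry some errors on its own, in which case extra retries mostly add latency.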

The Anthropic Python API provides easy access to Claude 2, Anthropic’s next-generation conversational AI model, allowing developers to integrate Claude’s advanced natural language capabilities into their applications. The API offers synchronous and asynchronous calls, streaming, token-based billing, and other features that take full advantage of Claude 2’s improvements over previous versions.

Claude 2 is my favorite model so far, and I think building apps with the Anthropic API will help you create a product that outshines the rest.

Let me know if you would like to read a more advanced tutorial; maybe we can build an app using the Anthropic API.

Abid Ali Awan (@1abidaliawan) is a certified professional data scientist who loves building machine learning models. Currently, he focuses on content creation and writing technical blogs on data science and machine learning technologies. Abid has a Master’s degree in Technology Management and a Bachelor’s degree in Telecommunications Engineering. His vision is to build an artificial intelligence product using a graph neural network for students struggling with mental illness.
