Data scientists need a consistent and reproducible environment for machine learning (ML) and data science workloads, one that is secure and keeps dependencies manageable. AWS Deep Learning Containers already provides pre-built Docker images for training and serving models on common frameworks such as TensorFlow, PyTorch, and MXNet. To improve on this experience, we announced a public beta of the open source SageMaker distribution at JupyterCon 2023. It provides a unified, end-to-end ML experience for ML developers of all experience levels. Developers no longer need to switch between framework containers during experimentation, or when moving from local JupyterLab environments and SageMaker notebooks to production jobs on SageMaker. The open source SageMaker distribution includes the most common packages and libraries for data science, ML, and visualization, such as TensorFlow, PyTorch, Scikit-learn, Pandas, and Matplotlib. You can start using the container from the Amazon ECR Public Gallery starting today.

In this post, we show you how to use the open source SageMaker distribution to experiment quickly in your local environment and then easily promote that work to SageMaker jobs.

Solution Overview

For our example, we train an image classification model using PyTorch on the KMNIST dataset, which is publicly available in PyTorch. We train a neural network model, test its performance, and finally print the training and test loss. The full notebook for this example is available in the SageMaker Studio Lab Samples repository. We started experimenting on a local laptop with the open source distribution, moved to Amazon SageMaker Studio to use a larger instance, and then scheduled the notebook as a notebook job.

Prerequisites

You need the following prerequisites:

Set up your local environment

You can directly start using the open source distribution on your local laptop. To start JupyterLab, run the following commands in your terminal:

You can replace ECR_IMAGE_ID with any of the image tags available in the Amazon ECR Public Gallery, or choose the latest-gpu tag if you are using a GPU-capable machine.
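The following sketch shows what launching the container involves. The hypothetical helper `build_docker_run_command` builds an equivalent `docker run` invocation in Python. The repository path `public.ecr.aws/sagemaker/sagemaker-distribution`, the `jupyter-lab --no-browser --ip=0.0.0.0` arguments, and the `--gpus all` flag for GPU hosts are assumptions. Check them against the image's page in the Amazon ECR Public Gallery and the project README before relying on them.

```python
from __future__ import annotations

import shlex

# Assumed public repository path; confirm it and the available tags in the ECR Public Gallery.
PUBLIC_REPO = "public.ecr.aws/sagemaker/sagemaker-distribution"


def build_docker_run_command(tag: str = "latest-cpu", host_port: int = 8888,
                             gpu: bool = False) -> list[str]:
    """Return the argv for starting JupyterLab inside the distribution image (hypothetical helper)."""
    # Publish the container's JupyterLab port 8888 on the chosen host port.
    cmd = ["docker", "run", "-it", "-p", f"{host_port}:8888"]
    if gpu:
        # Exposes host GPUs to the container; needs the NVIDIA Container Toolkit on the host.
        cmd += ["--gpus", "all"]
    # --ip=0.0.0.0 makes JupyterLab listen on all container interfaces so the published
    # port is reachable from the host; --no-browser because there is no browser inside.
    cmd += [f"{PUBLIC_REPO}:{tag}", "jupyter-lab", "--no-browser", "--ip=0.0.0.0"]
    return cmd


if __name__ == "__main__":
    command = build_docker_run_command()
    print(shlex.join(command))  # the equivalent shell command, ready to paste into a terminal
    # To launch for real (Docker must be installed and running):
    # import subprocess; subprocess.run(command, check=True)
```

If you map a host port other than 8888, open that host port in your browser. JupyterLab runs inside the container, so it still reports port 8888 in the URL it prints.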

This command will start JupyterLab and provide a URL in the terminal, like http://127.0.0.1:8888/lab?token=<token>. Copy the link and enter it in your preferred browser to launch JupyterLab.
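Sometimes you need the token separately, or need to point the URL at a different host port, for example when scripting against the server. The standard library can handle both. The following is a small sketch; `jupyter_token` and `with_host_port` are hypothetical helpers, and `abc123` stands in for the real token.

```python
from __future__ import annotations

from urllib.parse import parse_qs, urlparse


def jupyter_token(url: str) -> str | None:
    """Return the `token` query parameter from a JupyterLab URL, or None if it has none."""
    values = parse_qs(urlparse(url).query).get("token")
    return values[0] if values else None


def with_host_port(url: str, host_port: int) -> str:
    """Rewrite the port in the printed URL, e.g. when container port 8888 is published on another host port."""
    parts = urlparse(url)
    return parts._replace(netloc=f"{parts.hostname}:{host_port}").geturl()


printed = "http://127.0.0.1:8888/lab?token=abc123"  # placeholder token
print(jupyter_token(printed))
print(with_host_port(printed, 9999))
```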

Set up Studio

Studio is an end-to-end integrated development environment (IDE) for ML that enables developers and data scientists to build, train, deploy, and monitor ML models at scale. Studio provides an extensive list of first-party images with common frameworks and packages, such as Data Science, TensorFlow, PyTorch, and Spark. These images make it easy for data scientists to get started with ML by simply choosing a framework and an instance type for compute.

You can now use the open source distribution of SageMaker in Studio with Studio’s Bring Your Own Image feature. To add the open source distribution to your SageMaker domain, complete the following steps:

- Add the open source distribution to your account’s Amazon Elastic Container Registry (Amazon ECR) repository by running the following commands in your terminal:

- Create a SageMaker image and attach the image to the Studio domain:

- In the SageMaker console, launch Studio by choosing your existing domain and user profile.

- Optionally, restart Studio by following the steps in Shutting down and updating SageMaker Studio.
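The preceding steps can be sketched in Python. The example below covers copying the public image into a private Amazon ECR repository and attaching it to a Studio domain. All account IDs, Region, repository, image, config, and domain names are hypothetical. The kernel spec (`python3`), mount path `/home/sagemaker-user`, and UID/GID `1000`/`100` are assumptions about the distribution image that you should verify. The code only builds the Docker commands and the request payloads for the boto3 SageMaker client methods `create_image`, `create_image_version`, `create_app_image_config`, and `update_domain`. It does not call AWS.

```python
from __future__ import annotations

# Assumed source image in the ECR Public Gallery.
SOURCE_IMAGE = "public.ecr.aws/sagemaker/sagemaker-distribution:latest-cpu"


def private_ecr_uri(account_id: str, region: str, repo: str, tag: str) -> str:
    """Build a private Amazon ECR image URI."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repo}:{tag}"


def push_commands(account_id: str, region: str, repo: str, tag: str,
                  source: str = SOURCE_IMAGE) -> list[list[str]]:
    """Docker CLI steps that copy the public image into a private repository.

    Assumes the repository already exists and that you have logged in to the registry,
    for example with `aws ecr get-login-password | docker login --username AWS --password-stdin ...`.
    """
    target = private_ecr_uri(account_id, region, repo, tag)
    return [
        ["docker", "pull", source],
        ["docker", "tag", source, target],
        ["docker", "push", target],
    ]


def studio_image_requests(image_name: str, config_name: str, role_arn: str,
                          image_uri: str, domain_id: str) -> dict[str, dict]:
    """Keyword arguments for the boto3 SageMaker calls that register and attach the image."""
    return {
        "create_image": {"ImageName": image_name, "RoleArn": role_arn},
        "create_image_version": {"ImageName": image_name, "BaseImage": image_uri},
        "create_app_image_config": {
            "AppImageConfigName": config_name,
            "KernelGatewayImageConfig": {
                # Assumed kernel name and user settings for the distribution image.
                "KernelSpecs": [{"Name": "python3", "DisplayName": "Python 3"}],
                "FileSystemConfig": {
                    "MountPath": "/home/sagemaker-user",
                    "DefaultUid": 1000,
                    "DefaultGid": 100,
                },
            },
        },
        "update_domain": {
            "DomainId": domain_id,
            "DefaultUserSettings": {
                "KernelGatewayAppSettings": {
                    "CustomImages": [{"ImageName": image_name, "AppImageConfigName": config_name}]
                }
            },
        },
    }
```

Each payload can be passed to the matching method of `boto3.client("sagemaker")`, for example `sm.create_image(**reqs["create_image"])`. Keep two points in mind. First, creating an image version is asynchronous, so wait until `describe_image_version` reports `CREATED` before updating the domain. Second, `CustomImages` in `update_domain` sets the list for the domain, so include any custom images that are already attached.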

Download the notebook

Download the sample notebook locally from the GitHub repository.

Open the notebook in the IDE of your choice and add a cell at the beginning of the notebook to install torchsummary. The torchsummary package is not part of the distribution, and installing it in the notebook ensures that the notebook runs from start to finish. We recommend using conda or micromamba to manage environments and dependencies. Add the following cell to the notebook and save the notebook:
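One way to write an install cell is to install only when the import is actually missing. This keeps the cell cheap on reruns and in environments that already have the package. The following is a sketch, not necessarily the sample's own cell. `missing_modules` and `install_if_missing` are hypothetical helpers. They use pip for brevity and assume that each top-level import name matches its pip package name, which holds for `torchsummary`. Swap in your conda or micromamba command if you follow that recommendation. In IPython, the one-line `%pip install torchsummary` magic is the simpler unconditional alternative.

```python
from __future__ import annotations

import importlib.util
import subprocess
import sys


def missing_modules(names: list[str]) -> list[str]:
    """Return the top-level module names that cannot be found by the running kernel."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def install_if_missing(names: list[str], dry_run: bool = False) -> list[str] | None:
    """pip-install missing packages into this kernel's interpreter; return the command, or None if nothing is missing."""
    missing = missing_modules(names)
    if not missing:
        return None
    # sys.executable targets the same Python that runs the notebook kernel.
    cmd = [sys.executable, "-m", "pip", "install", *missing]
    if not dry_run:
        subprocess.run(cmd, check=True)
    return cmd


install_if_missing(["torchsummary"])
```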

Experiment in the local notebook

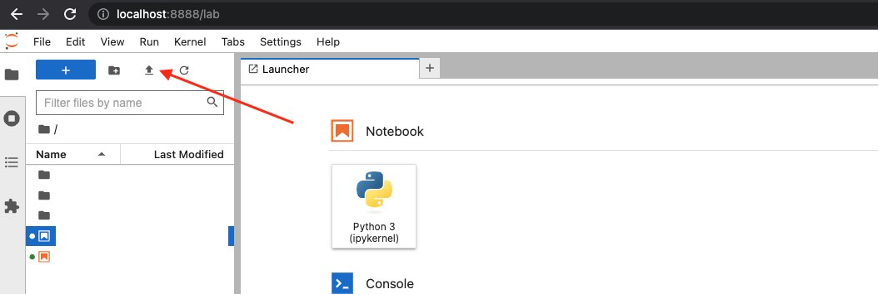

In the JupyterLab UI that you launched, upload the notebook by choosing the upload icon, as shown in the screenshot.

Once the upload completes, open the cv-kmnist.ipynb notebook. You can start running cells right away, without having to install dependencies such as torch, matplotlib, or ipywidgets.

If you followed the preceding steps, you have seen that you can use the distribution locally on your laptop. In the next step, we use the same distribution in Studio to take advantage of Studio features.

Move experimentation to Studio (optional)

Optionally, you can promote the experimentation to Studio. One of the benefits of Studio is that the underlying compute resources are fully elastic, so you can easily scale the available resources up or down, and the changes happen automatically in the background without interrupting your work. If you want to run the same notebook on a larger dataset and compute instance, you can migrate to Studio.

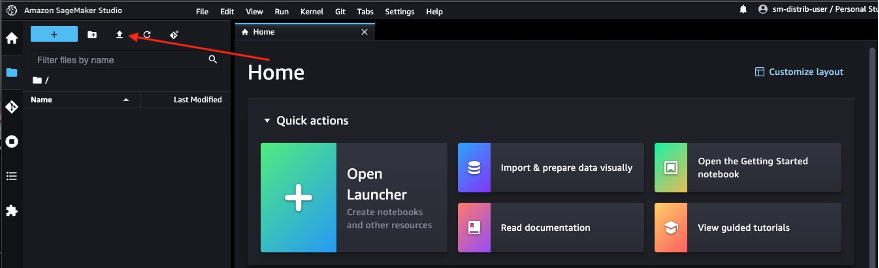

Navigate to the Studio UI that you launched earlier and choose the upload icon to upload the notebook.

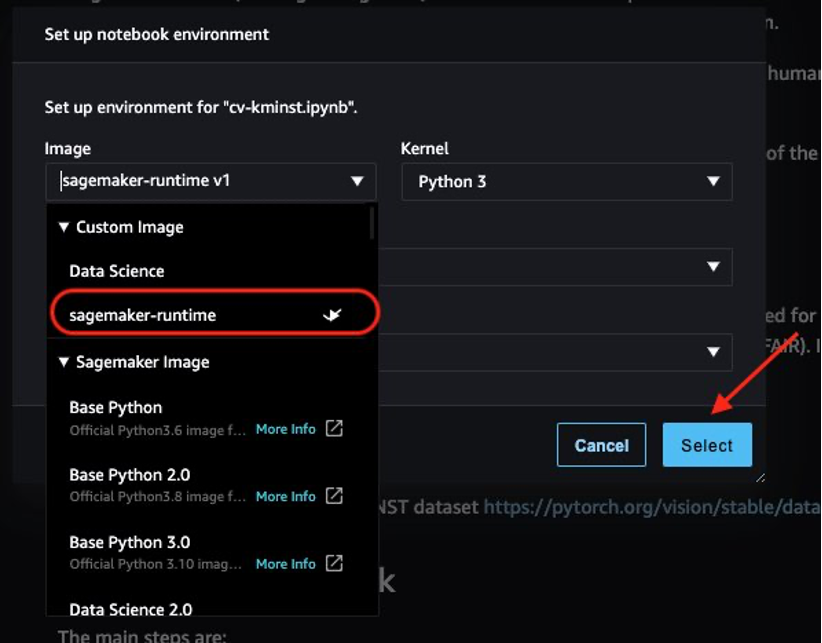

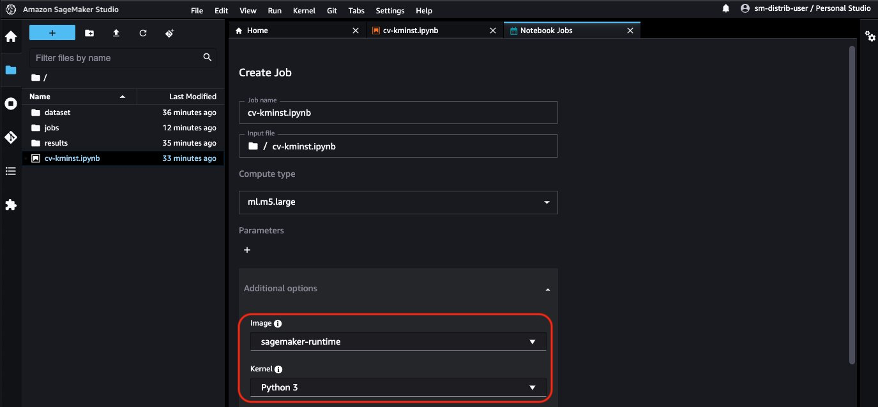

After you open the notebook, you are prompted to choose the image and instance type. In the kernel launcher, choose sagemaker-runtime as the image, as shown in the screenshot, and ml.t3.medium as the instance type, then choose Select.

You can now run the notebook from start to finish, without making any changes to it when moving from your local development environment to Studio notebooks!

Schedule the notebook as a job

When you're done experimenting, SageMaker offers multiple options for productionizing your notebook, such as training jobs and SageMaker Pipelines. One of these options is to run the notebook itself directly as a non-interactive, scheduled notebook job using SageMaker notebook jobs. For example, you might want to retrain your model periodically, or periodically run inference on incoming data and generate reports for your stakeholders.

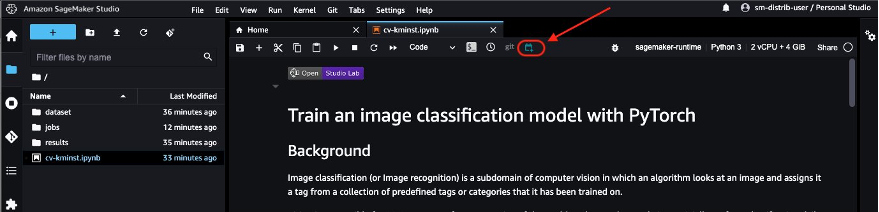

In Studio, choose the notebook job icon to create a notebook job. If you have installed the notebook jobs extension locally, you can also schedule the notebook directly from your laptop. See the Installation Guide to set up the notebook jobs extension locally.

The notebook job automatically uses the ECR image URI from the open source distribution, so you can directly schedule the notebook job.

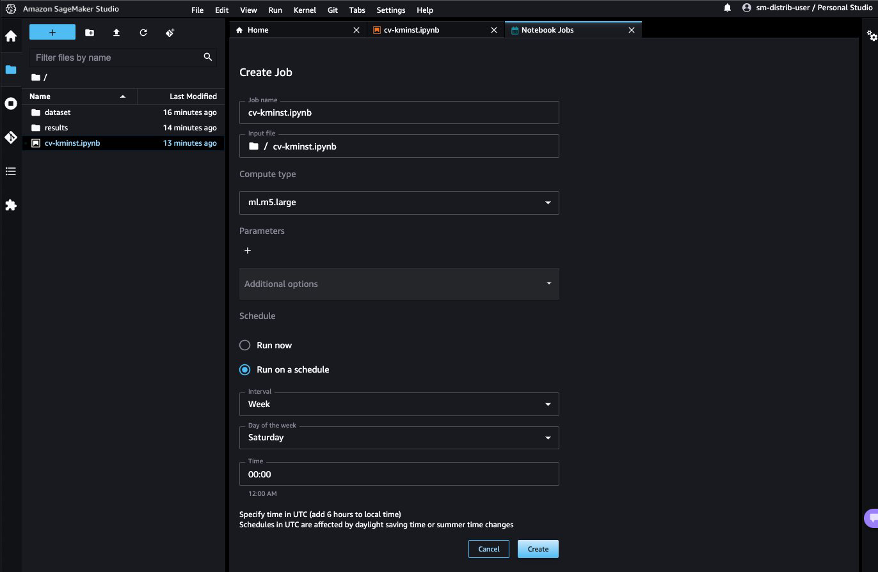

Choose Run on a schedule, choose a time (for example, every week on Saturday), and choose Create. You can also choose Run now if you want to see the results immediately.
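If you use a custom schedule instead of a preset interval, or set up schedules from code, you need a cron expression. The exact format the schedule field accepts is an assumption to confirm in the UI. For that reason, the hypothetical helper `weekly_cron` below returns both a standard five-field cron string and an Amazon EventBridge-style `cron(...)` string for a weekly run, such as every Saturday. Times are assumed to be in UTC.

```python
from __future__ import annotations

# Index matches standard cron day-of-week numbering (0 = Sunday).
DAYS = ("SUN", "MON", "TUE", "WED", "THU", "FRI", "SAT")


def weekly_cron(day: str, hour: int, minute: int = 0) -> tuple[str, str]:
    """Return (standard 5-field cron, EventBridge cron) for one run per week at hour:minute UTC."""
    abbrev = day.strip().upper()[:3]
    if abbrev not in DAYS:
        raise ValueError(f"unknown day of week: {day!r}")
    if not (0 <= hour <= 23 and 0 <= minute <= 59):
        raise ValueError("hour must be 0-23 and minute 0-59")
    # Standard cron fields: minute hour day-of-month month day-of-week.
    standard = f"{minute} {hour} * * {DAYS.index(abbrev)}"
    # EventBridge fields: minutes hours day-of-month month day-of-week year;
    # one of day-of-month / day-of-week must be '?'.
    eventbridge = f"cron({minute} {hour} ? * {abbrev} *)"
    return standard, eventbridge


print(weekly_cron("Saturday", 9))
```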

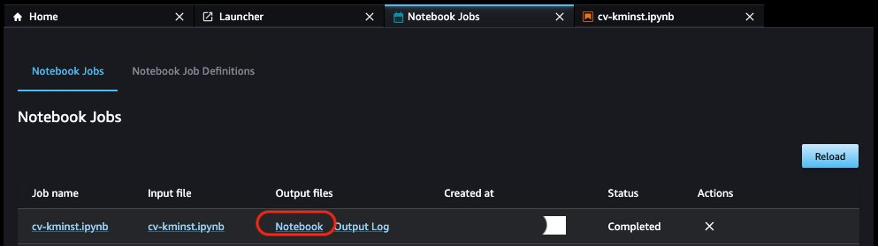

When the first notebook job completes, you can view the notebook results directly from the Studio UI by choosing Notebook under Output files.

Additional considerations

In addition to using the publicly available ECR image directly for ML workloads, the open source distribution offers the following benefits:

- The Dockerfile used to build the image is publicly available for developers to explore and use to create their own images. You can also use this image as a base image and install your custom libraries on top of it to get a reproducible environment.

- If you are not familiar with Docker and prefer to use conda environments in your JupyterLab environment, we provide an env.out file for each of the published versions. You can use the instructions in the file to create your own conda environment that mirrors the image's environment. For example, see the CPU environment file cpu.env.out.

- You can use the GPU versions of the image to run GPU-accelerated workloads such as deep learning and image processing.
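The first two options can be sketched in Python. `custom_dockerfile` renders a Dockerfile that extends a published tag with extra conda-forge packages. It assumes that micromamba is available in the base image and that the base environment is named `base` and is writable by the image's default user. Check the published Dockerfile before relying on either assumption. `explicit_spec_packages` lists the pinned packages in an env.out file. It assumes the file is an explicit conda spec (comment lines, an `@EXPLICIT` marker, then one package URL per line). Under that assumption, `conda create --name <env> --file cpu.env.out` recreates the environment. Both helpers are hypothetical.

```python
from __future__ import annotations

BASE_IMAGE = "public.ecr.aws/sagemaker/sagemaker-distribution"  # assumed repository path


def custom_dockerfile(tag: str, conda_packages: list[str]) -> str:
    """Render a Dockerfile that starts FROM a published tag and adds conda-forge packages."""
    lines = [f"FROM {BASE_IMAGE}:{tag}"]
    if conda_packages:
        # Sorted for a deterministic, diff-friendly Dockerfile.
        pkgs = " ".join(sorted(conda_packages))
        lines.append(f"RUN micromamba install -y -n base -c conda-forge {pkgs}")
    return "\n".join(lines) + "\n"


def explicit_spec_packages(text: str) -> list[tuple[str, str]]:
    """Extract (name, version) pairs from the package URLs in an explicit conda spec."""
    packages = []
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blanks, comments, and the @EXPLICIT marker.
        if not line or line.startswith("#") or line.startswith("@"):
            continue
        # Drop an optional '#<hash>' suffix, then keep only the file name.
        filename = line.split("#", 1)[0].rsplit("/", 1)[-1]
        for ext in (".tar.bz2", ".conda"):
            if filename.endswith(ext):
                filename = filename[: -len(ext)]
                break
        # Conda file names are <name>-<version>-<build>; names may themselves contain '-'.
        parts = filename.rsplit("-", 2)
        if len(parts) == 3:
            packages.append((parts[0], parts[1]))
    return packages
```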

Clean up

Complete the following steps to clean up your resources:

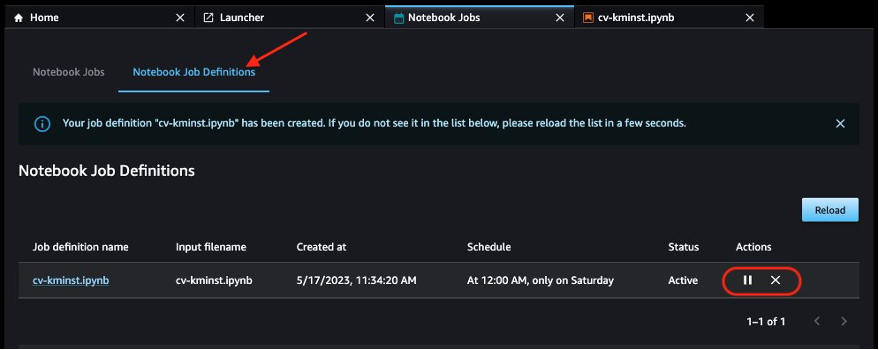

- If you scheduled your notebook to run periodically, pause or delete the schedule on the Notebook Job Definitions tab to avoid paying for future jobs.

- Shut down all Studio apps to avoid paying for unused compute. See Shut down and update Studio applications for instructions.

- Optionally, delete the domain from Studio if you created one.

Conclusion

Maintaining a reproducible environment at different stages of the ML lifecycle is one of the biggest challenges for data scientists and developers. With the open source distribution of SageMaker, we provide an image with mutually compatible versions of the most common ML frameworks and packages. The distribution is also open source, giving developers transparency into the packages and build processes, making it easy to customize your own distribution.

In this post, we showed you how to use the distribution in your local environment, in Studio, and as the container for your notebook jobs. This feature is currently in public beta. We encourage you to try it out and share your feedback and issues on the public GitHub repository!

About the authors

Durga Sury is a Machine Learning Solutions Architect on the Amazon SageMaker Service SA team. He is passionate about making machine learning accessible to everyone. In his 4 years at AWS, he has helped set up AI/ML platforms for enterprise customers. When he's not working, he loves motorcycle rides, mystery novels, and long walks with his 5-year-old husky.

Ketan Vijayvargiya is a Senior Software Development Engineer at Amazon Web Services (AWS). His focus areas are machine learning, distributed systems, and open source. Outside of work, he likes to spend time on his own outdoors, enjoying nature.
