Current challenges in text-to-speech (TTS) systems include the inherent limitations of autoregressive models and the difficulty of accurately aligning text with speech. Many conventional TTS models require complex components such as duration modeling, phoneme alignment, and dedicated text encoders, which add significant overhead and complexity to the synthesis process. Furthermore, previous models such as E2 TTS have struggled with slow convergence, limited robustness, and keeping the generated speech accurately aligned with the input text, which makes them difficult to optimize and deploy efficiently in real-world scenarios.

Researchers from Shanghai Jiao Tong University, the University of Cambridge, and Geely Automobile Research Institute introduced F5-TTS, a non-autoregressive text-to-speech (TTS) system that uses flow matching with a Diffusion Transformer (DiT). Unlike many conventional TTS models, F5-TTS does not require complex components such as duration modeling, phoneme alignment, or a dedicated text encoder. Instead, it takes a simplified approach: the text input is padded with filler tokens to the same length as the speech input, and flow matching handles the synthesis. F5-TTS is designed to address the shortcomings of its predecessor, E2 TTS, which suffered from slow convergence and poor alignment between speech and text. Notable improvements include ConvNeXt blocks that refine the text representation and a novel Sway Sampling strategy applied at inference time, which significantly improves performance without retraining.
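To make the padding idea concrete, here is a minimal Python sketch. It assumes character-level tokens and a hypothetical filler symbol `<F>`; the actual model's vocabulary and filler token may differ. The only point it illustrates is that the text sequence is stretched to the number of speech frames, so no duration model or explicit alignment is needed to make the two sequences the same length.

```python
FILLER = "<F>"  # hypothetical filler token, chosen for illustration only


def pad_text_to_frames(text: str, num_frames: int, filler: str = FILLER) -> list[str]:
    """Split text into characters and append filler tokens so that the
    sequence length equals the number of speech frames."""
    chars = list(text)
    if len(chars) > num_frames:
        raise ValueError("text is longer than the target speech length")
    return chars + [filler] * (num_frames - len(chars))


# "hi there" has 8 characters; padding to 12 frames appends 4 filler tokens.
seq = pad_text_to_frames("hi there", 12)
print(len(seq))
print(seq)
```

The model is then left to learn, through in-context training, which frames each character should influence; the padding itself carries no alignment information.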

Structurally, F5-TTS combines ConvNeXt and DiT to overcome the alignment challenges between text and generated speech. The input text is first processed by ConvNeXt blocks that prepare it for in-context learning with speech, which makes alignment smoother. The character sequence, padded with filler tokens, is fed into the model together with a noisy version of the input speech. The DiT backbone is trained with flow matching, which learns to transform a simple initial distribution (Gaussian noise) into the data distribution. In addition, F5-TTS introduces an inference-time Sway Sampling technique that controls how the flow steps are distributed, concentrating more of them in the early stages of the flow to improve the alignment of the generated speech with the input text.
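The training objective and the sampling loop can be sketched in a few lines of NumPy. This is a toy under several stated assumptions: the straight (optimal-transport) path x_t = (1 - t)x_0 + t x_1 with the small sigma_min term dropped, no text or speech conditioning, a plain Euler solver, and a hypothetical `oracle_velocity` that stands in for the trained DiT by returning the exact velocity toward a single target point. The Sway Sampling formula t = u + s(cos(pi/2 * u) - 1 + u) is our reading of the paper, and s = -1 is an illustrative choice.

```python
import numpy as np


def sway_schedule(n_steps: int, s: float = -1.0) -> np.ndarray:
    """Time grid for the ODE solver using t = u + s * (cos(pi / 2 * u) - 1 + u),
    where u is a uniform grid on [0, 1]. With s < 0 the grid points bunch up
    near t = 0, the early (noisy) part of the flow."""
    u = np.linspace(0.0, 1.0, n_steps + 1)
    return u + s * (np.cos(np.pi / 2 * u) - 1 + u)


def flow_matching_loss(velocity_model, x1: np.ndarray, rng: np.random.Generator) -> float:
    """Flow matching loss on the straight path x_t = (1 - t) x0 + t x1
    (sigma_min simplified to 0). The regression target is the velocity x1 - x0."""
    x0 = rng.standard_normal(x1.shape)           # sample from the simple prior
    t = rng.uniform(size=(x1.shape[0], 1))       # one time per example in [0, 1)
    xt = (1 - t) * x0 + t * x1                   # point on the interpolation path
    target = x1 - x0                             # constant velocity along the path
    pred = velocity_model(xt, t)
    return float(np.mean((pred - target) ** 2))


def oracle_velocity(mu: np.ndarray):
    """Hypothetical stand-in for the trained DiT: the exact velocity field when
    the data distribution is the single point mu. Only valid for t < 1."""
    def v(x, t):
        return (mu - x) / (1.0 - t)
    return v


def euler_sample(velocity_model, x0: np.ndarray, times: np.ndarray) -> np.ndarray:
    """Integrate dx/dt = v(x, t) from times[0] to times[-1] with explicit Euler
    steps. Each step costs one function evaluation, so NFE = len(times) - 1."""
    x = x0
    for t_now, t_next in zip(times[:-1], times[1:]):
        x = x + (t_next - t_now) * velocity_model(x, t_now)
    return x


rng = np.random.default_rng(0)
mu = np.array([2.0, -1.0, 0.5])
v = oracle_velocity(mu)

batch = np.tile(mu, (8, 1))                       # toy "dataset": 8 copies of mu
print(flow_matching_loss(v, batch, rng))          # near 0: the oracle matches the target

times = sway_schedule(32, s=-1.0)                 # 32 steps, i.e. 32 NFE
sample = euler_sample(v, rng.standard_normal(3), times)
print(np.allclose(sample, mu))                    # True
```

With s = -1, roughly two thirds of the 32 steps fall at t < 0.5, which is the "spend more steps early" behavior described above; s = 0 recovers the uniform grid.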

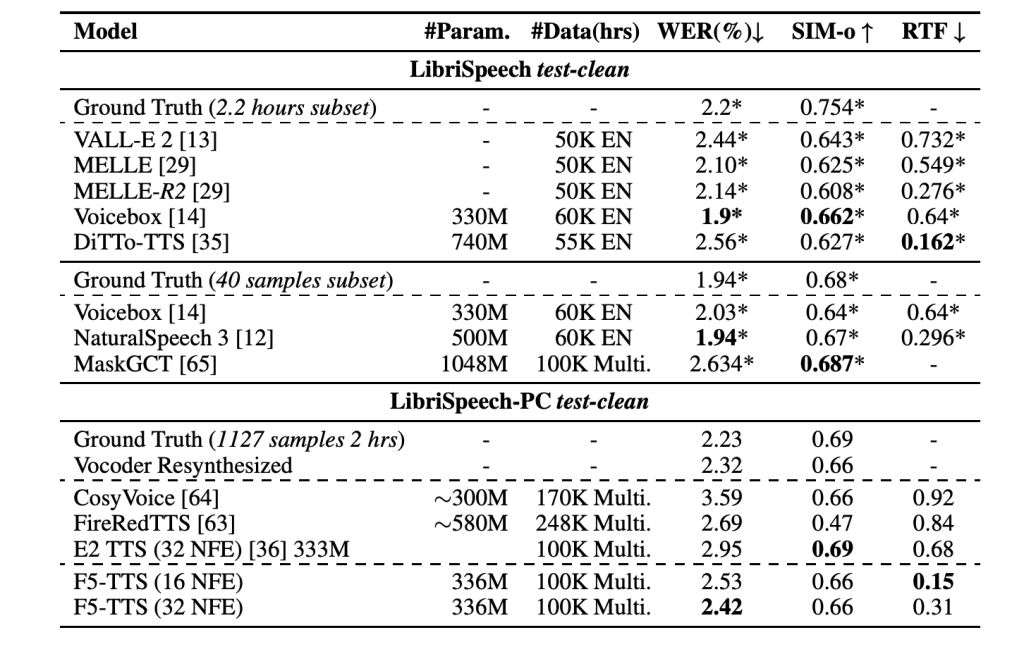

The results presented in the paper show that F5-TTS outperforms other state-of-the-art TTS systems in both synthesis quality and inference speed. The model achieved a word error rate (WER) of 2.42 on the LibriSpeech-PC dataset using 32 function evaluations (NFE) and a real-time factor (RTF) of 0.15 at inference. This is a significant improvement over E2 TTS, which needed a longer time to converge and struggled to remain robust across different input scenarios. The Sway Sampling strategy substantially improves naturalness and intelligibility, allowing the model to produce smooth and expressive zero-shot speech. Evaluation metrics such as WER and speaker similarity scores confirm the competitive quality of the generated speech.
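The two headline metrics are simple to compute. The sketch below assumes whitespace tokenization and skips text normalization (real evaluations typically transcribe the generated audio with an ASR model and normalize both transcripts first). It computes WER as the word-level edit distance divided by the number of reference words, and RTF as synthesis time divided by the duration of the generated audio. WER is commonly reported as a percentage, i.e., this ratio multiplied by 100.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # d[i][j] = edit distance between ref[:i] and hyp[:j]
    d = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        d[i][0] = i
    for j in range(len(hyp) + 1):
        d[0][j] = j
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            d[i][j] = min(d[i - 1][j] + 1,         # deletion
                          d[i][j - 1] + 1,         # insertion
                          d[i - 1][j - 1] + cost)  # substitution or match
    return d[len(ref)][len(hyp)] / len(ref)


def real_time_factor(synthesis_seconds: float, audio_seconds: float) -> float:
    """Values below 1.0 mean audio is generated faster than real time."""
    return synthesis_seconds / audio_seconds


# One deleted word out of six reference words -> WER of about 0.167.
print(word_error_rate("the cat sat on the mat", "the cat sat on mat"))
# Generating 10 s of audio in 1.5 s gives an RTF of 0.15.
print(real_time_factor(1.5, 10.0))
```

Under this definition, an RTF of 0.15 means roughly 1.5 seconds of compute for every 10 seconds of synthesized speech.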

In conclusion, F5-TTS introduces a simpler and highly efficient TTS pipeline by eliminating the need for duration predictors, phoneme alignment, and explicit text encoders. Together, ConvNeXt-based text processing and Sway Sampling for flow-step control improve alignment robustness, training efficiency, and speech quality. By keeping the architecture lightweight and releasing an open-source framework, the authors aim to advance community-driven development of text-to-speech technology. The researchers also highlight ethical concerns about possible misuse of such models, emphasizing the need for detection systems and watermarking to prevent fraudulent use.



Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. Their most recent endeavor is the launch of an AI media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is technically sound and easily understandable by a wide audience. The platform has more than 2 million monthly visits, which illustrates its popularity.
