
In today's fast-paced world, we are bombarded with more information than we can handle. We increasingly expect to take in more information in less time, which makes reading long documents or books frustrating. That's where extractive summarization comes in. The process extracts key sentences from an article, passage, or page to give us a snapshot of its most important points.

For anyone who needs to understand large documents without reading every word, this is a game-changer.

In this article we delve into the foundations and applications of extractive summarization. We will examine the role of large language models, especially BERT (Bidirectional Encoder Representations from Transformers), in improving the process. The article also includes a practical tutorial on using BERT for extractive summarization, showing how it condenses large volumes of text into informative summaries.

Extractive summarization is a prominent technique in the field of natural language processing (NLP) and text analysis. With it, key sentences or phrases are carefully selected from the original text and combined to create a concise and informative summary. This involves meticulously examining the text to identify the most crucial elements and the central ideas or arguments presented in the selected article.

While abstractive summarization generates new sentences that are often not present in the source material, extractive summarization sticks to the original text. It does not alter or paraphrase, but rather extracts sentences exactly as they appear, maintaining the original wording and structure. In this way, the summary remains faithful to the tone and content of the original material. Extractive summarization is especially useful when the accuracy of the information and the preservation of the author's original intent are a priority.

Extractive summarization has many uses, such as summarizing news articles, academic papers, or long reports. The process conveys the message of the original content without the bias or reinterpretation that can creep in when paraphrasing.

1. Text analysis

This initial step involves dividing the text into its essential elements, mainly sentences and phrases. The goal is to identify the basic units (sentences, in this context) that the algorithm will later evaluate for inclusion in a summary, like dissecting a text to understand its structure and individual components.

For example, the model would analyze a four-sentence paragraph by breaking it down into the following four sentences.

- The pyramids of Giza, built in ancient Egypt, stood magnificently for millennia.

- They were built as tombs for the pharaohs.

- The Great Pyramids are the most famous.

- These structures symbolize architectural intelligence.
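The splitting step can be sketched in a few lines of Python. This is a minimal illustration, not what any particular summarization library does: the helper name `split_sentences` is hypothetical, and the regular expression assumes every sentence ends in `.`, `!`, or `?` followed by whitespace, so it would mis-split abbreviations such as "Dr.".

```python
import re

def split_sentences(text):
    """Split text into sentences at '.', '!' or '?' followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [part for part in parts if part]

paragraph = (
    "The pyramids of Giza, built in ancient Egypt, stood magnificently for millennia. "
    "They were built as tombs for the pharaohs. "
    "The Great Pyramids are the most famous. "
    "These structures symbolize architectural intelligence."
)

sentences = split_sentences(paragraph)
for number, sentence in enumerate(sentences, start=1):
    print(number, sentence)
```

Production systems usually rely on a trained sentence tokenizer rather than a single regular expression, but the output has the same shape: an ordered list of sentences.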

2. Feature extraction

At this stage, the algorithm analyzes each sentence to identify characteristics or “features” that might indicate its importance to the overall text. Common features include how often keywords and phrases recur, the length of the sentence, its position in the text, and the presence of specific keywords or phrases that are central to the main theme of the text.

Below is an example of how the model would do the feature extraction for the first sentence, “The pyramids of Giza, built in ancient Egypt, stood magnificently for millennia.”

| Feature | Value |
|---|---|
| Frequent terms | “Pyramids of Giza”, “ancient Egypt”, “millennia” |
| Sentence length | Moderate |
| Position in the text | Introductory; establishes the topic. |
| Specific keywords | “Pyramids of Giza”, “ancient Egypt” |
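As a rough sketch of what such features could look like in code, the snippet below computes position, length, and keyword frequency for each sentence of the pyramid example. The function names, the feature names, and the tiny stopword list are all assumptions made for illustration; a BERT-based system learns dense representations instead of hand-built features like these.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stopword list for this example only.
STOPWORDS = {"the", "of", "in", "for", "as", "are", "were", "they", "these", "most"}

def tokenize(sentence):
    """Lowercase the sentence and keep alphabetic words that are not stopwords."""
    return [w for w in re.findall(r"[a-z]+", sentence.lower()) if w not in STOPWORDS]

def extract_features(sentences):
    """Return one feature dictionary per sentence."""
    document_counts = Counter(w for s in sentences for w in tokenize(s))
    features = []
    for index, sentence in enumerate(sentences):
        words = tokenize(sentence)
        features.append({
            "position": index,                    # 0 means the opening sentence
            "length": len(sentence.split()),      # raw word count
            "keyword_frequency": sum(document_counts[w] for w in words),
            "keywords": sorted(set(words)),
        })
    return features

sentences = [
    "The pyramids of Giza, built in ancient Egypt, stood magnificently for millennia.",
    "They were built as tombs for the pharaohs.",
    "The Great Pyramids are the most famous.",
    "These structures symbolize architectural intelligence.",
]

features = extract_features(sentences)
print(features[0])
```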

3. Sentence scoring

Each sentence is assigned a score based on its content. This score reflects the perceived importance of a sentence in the context of the entire text. Sentences with a higher score are considered to have more weight or relevance.

Simply put, this process rates each sentence by how much it would contribute to a summary of the full text.

| Score | Sentence | Explanation |
|---|---|---|
| 9 | The pyramids of Giza, built in ancient Egypt, stood magnificently for millennia. | It is the introductory sentence that establishes the theme and context, and contains key terms such as “Pyramids of Giza” and “ancient Egypt.” |
| 8 | They were built as tombs for the pharaohs. | This sentence provides critical historical information about the pyramids and is important to understanding their purpose and meaning. |
| 3 | The Great Pyramids are the most famous. | Although this sentence adds specific information about the Great Pyramids, it is considered less crucial in the broader context of summarizing the general meaning of the pyramids. |
| 7 | These structures symbolize architectural intelligence. | This summary statement captures the general meaning of the pyramids. |
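To make the idea of scoring concrete, here is a minimal heuristic sketch. It is an assumption-laden toy, not the method a BERT-based summarizer uses: the function name `score_sentences`, the "longer than three letters" keyword filter, and the fixed bonus for the opening sentence are all hypothetical choices, and the numbers it produces will not match the illustrative scores in the table.

```python
import re
from collections import Counter

def score_sentences(sentences):
    """Score each sentence by average keyword frequency plus an opening-sentence bonus."""
    tokenized = [re.findall(r"[a-z]+", s.lower()) for s in sentences]
    # Treat words longer than three letters as keywords (a crude stand-in for stopword removal).
    counts = Counter(w for words in tokenized for w in words if len(w) > 3)
    scores = []
    for index, words in enumerate(tokenized):
        keywords = [w for w in words if len(w) > 3]
        frequency = sum(counts[w] for w in keywords) / max(len(keywords), 1)
        position_bonus = 1.0 if index == 0 else 0.0  # hypothetical weight
        scores.append(round(frequency + position_bonus, 2))
    return scores

sentences = [
    "The pyramids of Giza, built in ancient Egypt, stood magnificently for millennia.",
    "They were built as tombs for the pharaohs.",
    "The Great Pyramids are the most famous.",
    "These structures symbolize architectural intelligence.",
]

scores = score_sentences(sentences)
for score, sentence in zip(scores, sentences):
    print(score, sentence)
```

A heuristic this simple cannot judge meaning, which is exactly the gap that embedding-based models such as BERT are meant to fill.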

4. Selection and aggregation

The final phase is to select the sentences with the highest scores and compile them into a summary. When done carefully, this ensures that the summary remains coherent and representative of the main ideas and themes of the original text.

To create an effective summary, the algorithm must balance including the important sentences against keeping the summary concise, avoiding redundancy while ensuring that the selected sentences provide a clear and complete overview of the original text.

- The pyramids of Giza, built in ancient Egypt, stood magnificently for millennia. They were built as tombs for the pharaohs. These structures symbolize architectural intelligence.
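The selection step for this example can be sketched as follows, using the illustrative scores from the scoring table. The function name `select_summary` is hypothetical. The one design choice worth noting is that the chosen sentences are put back in their original order, so the summary reads naturally instead of in score order.

```python
def select_summary(sentences, scores, num_sentences):
    """Pick the highest-scoring sentences and join them in their original order."""
    ranked = sorted(range(len(sentences)), key=lambda i: scores[i], reverse=True)
    chosen = sorted(ranked[:num_sentences])  # restore document order
    return " ".join(sentences[i] for i in chosen)

sentences = [
    "The pyramids of Giza, built in ancient Egypt, stood magnificently for millennia.",
    "They were built as tombs for the pharaohs.",
    "The Great Pyramids are the most famous.",
    "These structures symbolize architectural intelligence.",
]
scores = [9, 8, 3, 7]  # illustrative scores taken from the scoring table

summary = select_summary(sentences, scores, num_sentences=3)
print(summary)
```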

This example is extremely basic and extracts 3 out of a total of 4 sentences to get the best overall summary. Reading an extra sentence doesn't hurt, but what happens when the text is longer? Say, 3 paragraphs?

Step 1: Install and import the necessary packages

We will leverage a pre-trained BERT model. However, rather than working with BERT directly, we will use the bert-extractive-summarizer package, which is built specifically for extractive summarization tasks.

!pip install bert-extractive-summarizer

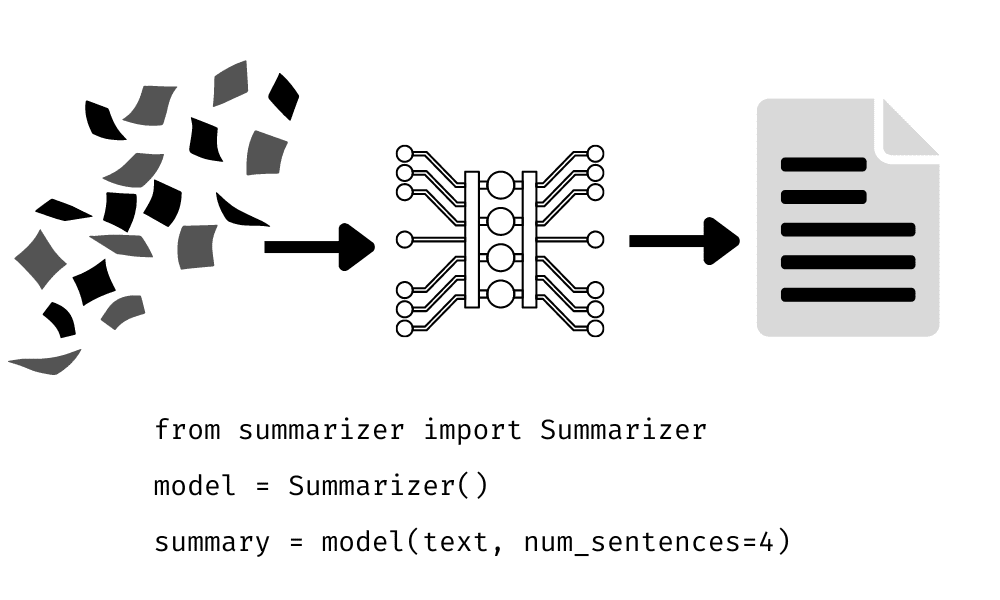

from summarizer import Summarizer

Step 2: Introduce the summary function

The Summarizer class, imported from the summarizer package, is an extractive text summarization tool. It uses the BERT model to analyze and extract key sentences from a larger text. It aims to retain the most important information, providing a condensed version of the original content, and is commonly used to summarize long documents efficiently. We create an instance to use as our model:

model = Summarizer()
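One common way for an embedding-based summarizer to pick sentences is to represent each sentence as a vector and keep the sentences that sit closest to the center of the document. The toy below illustrates that idea only; it is not the package's code. As loudly labeled assumptions, it uses bag-of-words counts as a stand-in for BERT embeddings and a single centroid instead of clustering, and the names `embed`, `cosine`, and `closest_to_centroid` are hypothetical.

```python
import math
import re
from collections import Counter

def embed(sentence):
    """Stand-in for a BERT sentence embedding: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z]+", sentence.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def closest_to_centroid(sentences, num_sentences):
    """Keep the sentences most similar to the summed document vector, in original order."""
    vectors = [embed(s) for s in sentences]
    centroid = Counter()
    for vector in vectors:
        centroid.update(vector)  # a sum works: cosine similarity ignores scale
    ranked = sorted(
        range(len(sentences)),
        key=lambda i: cosine(vectors[i], centroid),
        reverse=True,
    )
    return [sentences[i] for i in sorted(ranked[:num_sentences])]

# Hypothetical sample text, not taken from the article.
sample = [
    "GPUs render graphics for games.",
    "GPUs also train machine learning models.",
    "My cat sleeps all day.",
]
result = closest_to_centroid(sample, num_sentences=2)
print(result)
```

The off-topic sentence shares no vocabulary with the rest of the sample, so it ends up farthest from the centroid and is dropped.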

Step 3: Importing our Text

Here, we will import any text fragments that we want to test our model on. To test our extractive summary model, we generated text using ChatGPT 3.5 with the prompt: “Please provide a 3-paragraph summary of the history of GPUs and how they are used today.”

text = "The history of Graphics Processing Units (GPUs) dates back to the early 1980s when companies like IBM and Texas Instruments developed specialized graphics accelerators for rendering images and improving overall graphical performance. However, it was not until the late 1990s and early 2000s that GPUs gained prominence with the advent of 3D gaming and multimedia applications. NVIDIA's GeForce 256, released in 1999, is often considered the first GPU, as it integrated both 2D and 3D acceleration on a single chip. ATI (later acquired by AMD) also played a significant role in the development of GPUs during this period. The parallel architecture of GPUs, with thousands of cores, allows them to handle multiple computations simultaneously, making them well-suited for tasks that require massive parallelism. Today, GPUs have evolved far beyond their original graphics-centric purpose, now widely used for parallel processing tasks in various fields, such as scientific simulations, artificial intelligence, and machine learning. Industries like finance, healthcare, and automotive engineering leverage GPUs for complex data analysis, medical imaging, and autonomous vehicle development, showcasing their versatility beyond traditional graphical applications. With advancements in technology, modern GPUs continue to push the boundaries of computational power, enabling breakthroughs in diverse fields through parallel computing. GPUs also remain integral to the gaming industry, providing immersive and realistic graphics for video games where high-performance GPUs enhance visual experiences and support demanding game graphics. As technology progresses, GPUs are expected to play an even more critical role in shaping the future of computing."

Step 4: Perform an extractive summary

Finally, we will run our summary function. This function requires two inputs: the text to be summarized and the desired number of sentences for the summary. After processing, it will generate an extractive summary, which we will then display.

# Specify the number of sentences in the summary
summary = model(text, num_sentences=4)
print(summary)

Extractive summary output:

The history of Graphics Processing Units (GPUs) dates back to the early 1980s when companies like IBM and Texas Instruments developed specialized graphics accelerators for rendering images and improving overall graphical performance. NVIDIA's GeForce 256, released in 1999, is often considered the first GPU, as it integrated both 2D and 3D acceleration on a single chip. Today, GPUs have evolved far beyond their original graphics-centric purpose, now widely used for parallel processing tasks in various fields, such as scientific simulations, artificial intelligence, and machine learning. As technology progresses, GPUs are expected to play an even more critical role in shaping the future of computing.

Our model extracted the top 4 sentences from our large text corpus to generate this summary!
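Because an extractive summary reuses sentences verbatim, a quick sanity check is to confirm that every sentence of the summary appears unchanged in the source. The helper below is a hypothetical sketch (the name `is_extractive` and the sample strings are made up for illustration), and it assumes sentences end in `.`, `!`, or `?`.

```python
import re

def is_extractive(summary, source):
    """Return True if every sentence of the summary appears verbatim in the source."""
    summary_sentences = re.split(r"(?<=[.!?])\s+", summary.strip())
    return all(sentence in source for sentence in summary_sentences if sentence)

# Hypothetical sample strings for illustration.
source = (
    "GPUs began as graphics accelerators. "
    "They now power machine learning. "
    "Demand keeps growing."
)
extracted = "GPUs began as graphics accelerators. Demand keeps growing."
paraphrased = "GPUs started out as graphics chips."

print(is_extractive(extracted, source))
print(is_extractive(paraphrased, source))
```

The same check can be applied to the `text` and `summary` variables from the tutorial once the model has run.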

LLM-based extractive summarization has several limitations:

- Limitations of contextual understanding
  - While LLMs are proficient in language processing and generation, their understanding of context, especially in longer texts, is limited. LLMs may miss subtle nuances or fail to recognize critical aspects of the text, leading to less accurate or relevant summaries. More capable language models generally produce better summaries.

- Bias in training data
  - LLMs learn from vast data sets compiled from various sources, including the Internet. These data sets may contain biases, which models could inadvertently learn and replicate in their summaries, resulting in biased or unfair representations.

- Handling of specialized or technical language
  - While LLMs are typically trained on a wide range of general texts, they may not accurately capture specialized language in fields such as law, medicine, or other highly technical domains. This lack of training on specialized jargon can affect the quality of summaries in these fields, and it can be alleviated by training the model on more specialized and technical text.

It is clear that extractive summarization is more than just a useful tool; it is a growing need in our information-saturated age, where we are inundated with walls of text every day. By harnessing the power of technologies like BERT, we can condense complex texts into digestible summaries, saving time and helping us better understand the source material.

Whether for academic research, business insights, or simply staying informed in a technologically advanced world, extractive summarization is a practical way to navigate the sea of information that surrounds us. As natural language processing continues to evolve, tools like extractive summarization will become even more essential, helping us quickly find and understand the most important information in a world where every minute counts.

Original. Reposted with permission.

Kevin Vu manages the Exxact Corp. blog and works with many of its talented authors who write about different aspects of deep learning.
