Introduction

LlamaParse is a document parsing library developed by Llama Index for parsing documents such as PDF and PPT files.

Building RAG applications on top of PDF documents is a significant challenge that many of us face, particularly the complex task of parsing embedded objects such as tables and figures. The nature of these objects often means that conventional parsing techniques have difficulty interpreting them and accurately extracting the information encoded in them.

The software development community has introduced several libraries and frameworks in response to this widespread problem. Examples include LLMSherpa and unstructured.io. These tools provide robust and flexible solutions to some of the most persistent problems in parsing complex PDF files.

The latest addition to this list is LlamaParse. It was developed by Llama Index, one of the most highly regarded LLM frameworks currently available, so it can be integrated directly with Llama Index. This integration is a significant advantage: it simplifies implementation and ensures better compatibility between the two tools. In short, LlamaParse is a promising new tool that makes parsing complex PDF files less daunting and more efficient.

Learning objectives

- Recognize the challenges of document parsing: Understand the difficulties of parsing complex PDF files with embedded objects.

- Introduction to LlamaParse: Find out what LlamaParse is and how it integrates with Llama Index.

- Configuration and initialization: Create a LlamaCloud account, obtain an API key and install the necessary libraries.

- Implementing LlamaParse: Follow the steps to initialize the LLM, then load and parse documents.

- Create a vector index and query data: Learn how to create a vector store index, configure a query engine, and extract specific information from parsed documents.

This article was published as part of the Data Science Blogathon.

Steps to create a RAG application over PDF using LlamaParse

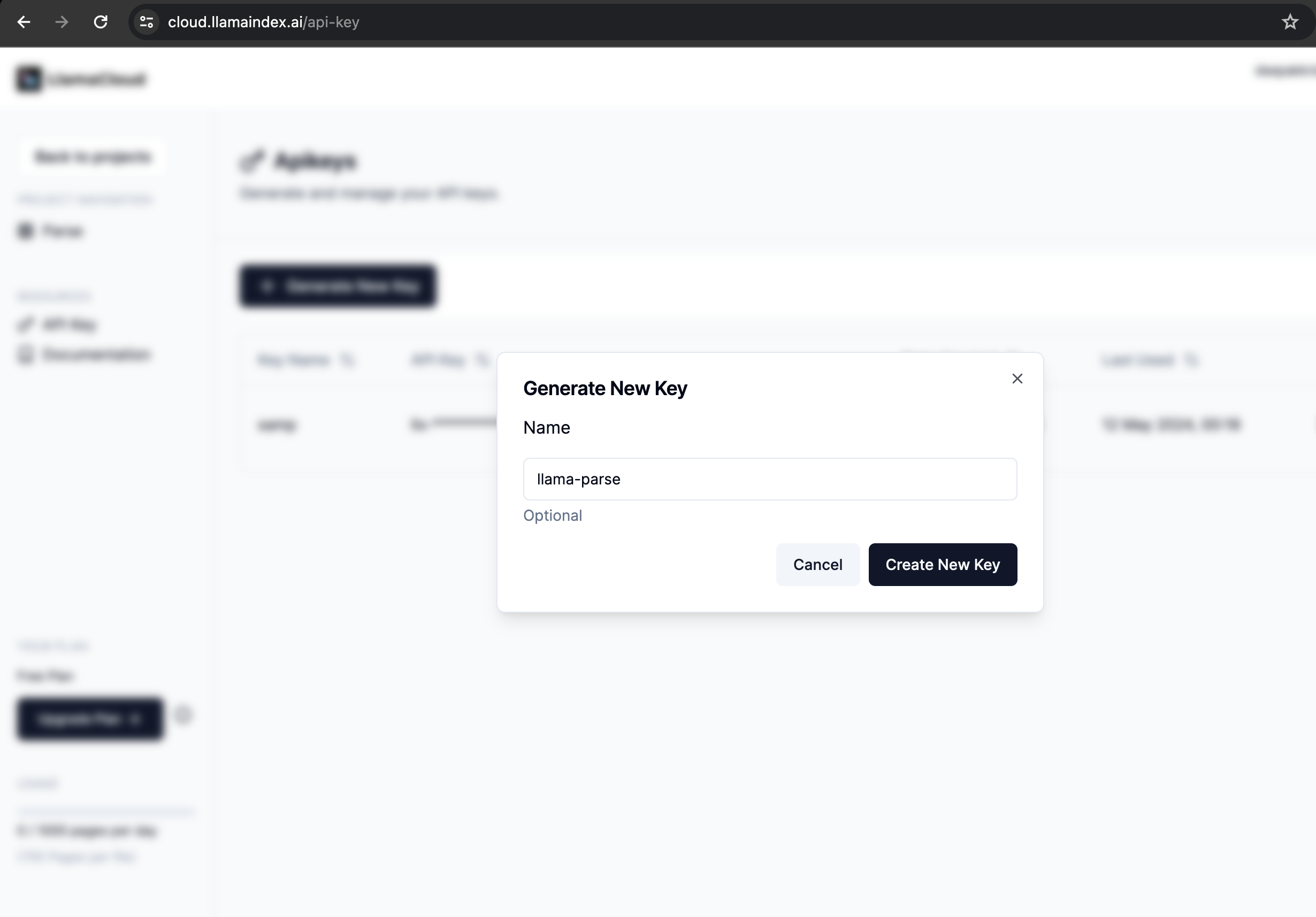

Step 1 – Get the API Key

LlamaParse is part of the LlamaCloud platform, so you need to have a LlamaCloud account to get an API key.

First, you must create an account on LlamaCloud and sign in to create an API key.

Step 2 – Install the necessary libraries

Now open your Jupyter Notebook/Colab and install the necessary libraries. Here, we only need to install two libraries: llama-index and llama-parse. We will use OpenAI models for querying and embedding.

!pip install llama-index

!pip install llama-parse

Step 3: Set environment variables

import os

os.environ['OPENAI_API_KEY'] = 'sk-proj-****'

os.environ["LLAMA_CLOUD_API_KEY"] = 'llx-****'

Step 4: Initialize the LLM and Embedding Model
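Before initializing the models, it can help to confirm that both keys are actually visible to the process, since a missing key usually only surfaces later as an authentication error. The sketch below is a minimal check in plain Python; the helper name `missing_keys` is hypothetical and not part of either library.

```python
import os

# The two variables set in Step 3.
REQUIRED_KEYS = ("OPENAI_API_KEY", "LLAMA_CLOUD_API_KEY")


def missing_keys(env, required=REQUIRED_KEYS):
    """Return the required variable names that are unset or blank in env."""
    return [name for name in required if not env.get(name, "").strip()]


if __name__ == "__main__":
    absent = missing_keys(os.environ)
    if absent:
        print("Missing environment variables:", ", ".join(absent))
    else:
        print("All API keys are set.")
```

Passing the environment in as an argument keeps the check easy to try with an ordinary dict, without touching the real keys.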

Here, I am using gpt-3.5-turbo-0125 as the LLM and OpenAI's text-embedding-3-small as the embedding model. We will use the Settings object to replace the default LLM and embedding model.

from llama_index.llms.openai import OpenAI

from llama_index.embeddings.openai import OpenAIEmbedding

from llama_index.core import Settings

embed_model = OpenAIEmbedding(model="text-embedding-3-small")

llm = OpenAI(model="gpt-3.5-turbo-0125")

Settings.llm = llm

Settings.embed_model = embed_model

Step 5: Parse the document

Now, we will load our document and convert it to Markdown. The Markdown output is then parsed using MarkdownElementNodeParser.
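To see why the Markdown step matters, note that tables in Markdown are plain pipe-delimited lines, which makes them easy to separate from the surrounding prose. The sketch below is a deliberately simplified illustration of that idea in plain Python. It is not how MarkdownElementNodeParser is implemented, and `split_markdown_elements` is a hypothetical helper.

```python
def split_markdown_elements(markdown):
    """Group non-blank lines into ("table", text) or ("text", text) blocks.

    A line counts as part of a table if it starts with "|" after stripping.
    Consecutive lines of the same kind are joined into one block.
    """
    blocks = []
    current_kind, current_lines = None, []
    for line in markdown.splitlines():
        stripped = line.strip()
        if not stripped:
            continue  # blank lines carry no content; skip them
        kind = "table" if stripped.startswith("|") else "text"
        if kind != current_kind and current_lines:
            # The kind changed, so close the block collected so far.
            blocks.append((current_kind, "\n".join(current_lines)))
            current_lines = []
        current_kind = kind
        current_lines.append(stripped)
    if current_lines:
        blocks.append((current_kind, "\n".join(current_lines)))
    return blocks


# Made-up sample input, for illustration only.
sample = """# Report

Intro paragraph.

| Item | Value |
|------|-------|
| a    | 1     |

Closing note."""

for kind, text in split_markdown_elements(sample):
    print(kind, "->", text.splitlines()[0])
```

Once tables are isolated like this, each one can be handled as its own element instead of being cut apart by a generic text splitter.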

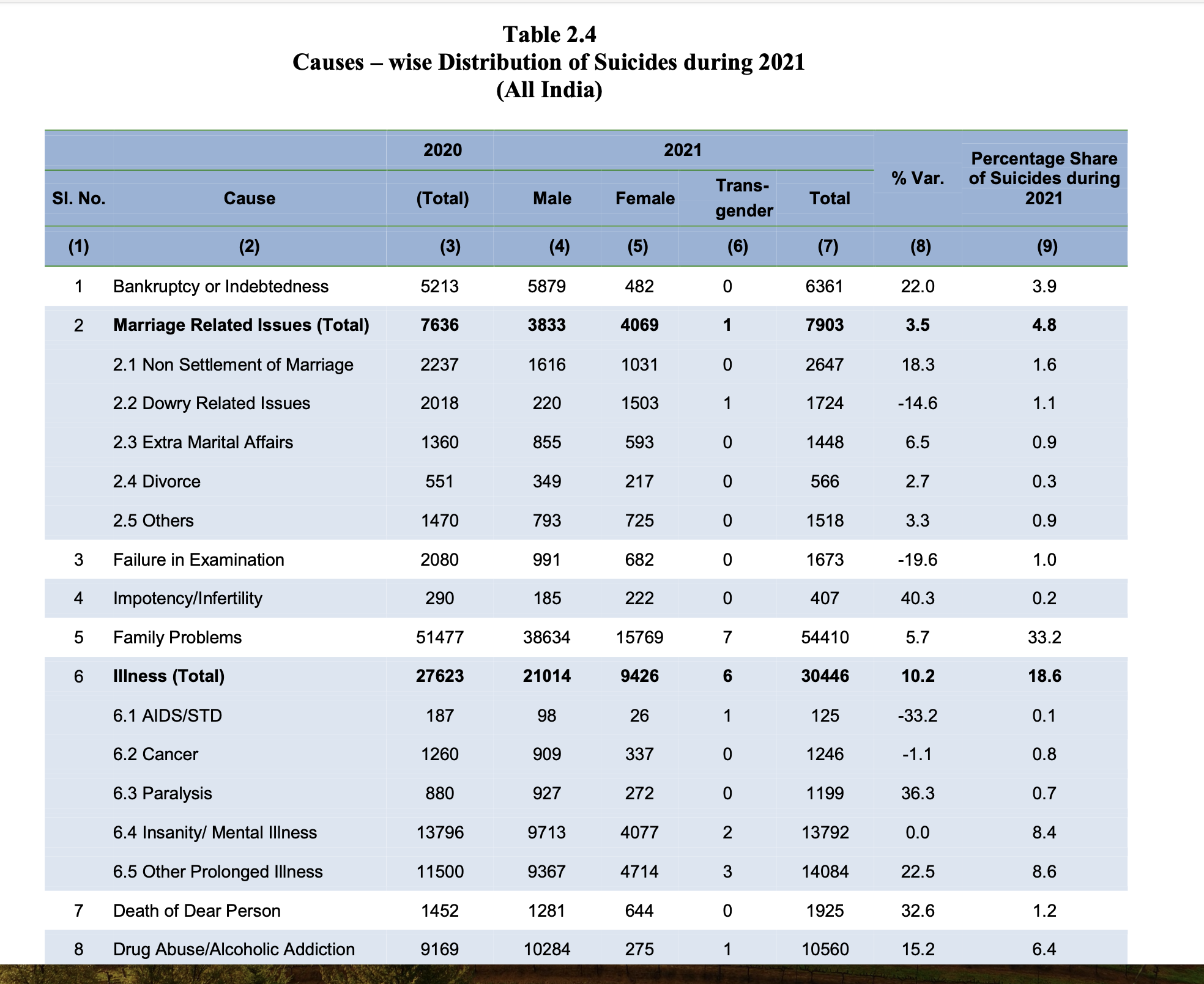

The table I used is taken from ncrb.gov.in and can be found here: https://ncrb.gov.in/accidental-deaths-suicidios-in-india-adsi. It has data integrated at different levels.

Below is the snapshot of the table I am trying to analyze.

from llama_parse import LlamaParse

from llama_index.core.node_parser import MarkdownElementNodeParser

documents = LlamaParse(result_type="markdown").load_data("./Table_2021.pdf")

node_parser = MarkdownElementNodeParser(

llm=llm, num_workers=8

)

nodes = node_parser.get_nodes_from_documents(documents)

base_nodes, objects = node_parser.get_nodes_and_objects(nodes)

Step 6: Create the vector index and query engine

Now, we will create a vector store index using LlamaIndex's built-in implementation and build a query engine on top of it. We can also use external vector stores such as ChromaDB or Pinecone for this.
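As a rough intuition for what the index does at query time: each node is stored with an embedding vector, the query is embedded the same way, and the k most similar nodes are returned (the `similarity_top_k` setting used in this step). The sketch below shows that retrieval step with tiny hand-written vectors. It is a toy illustration, not LlamaIndex's implementation, and `top_k_by_cosine` is a hypothetical helper.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as dissimilar to everything
    return dot / (norm_a * norm_b)


def top_k_by_cosine(query_vec, stored, k):
    """Return the ids of the k stored vectors most similar to query_vec.

    `stored` maps a node id to its embedding vector.
    """
    ranked = sorted(
        stored,
        key=lambda node_id: cosine_similarity(query_vec, stored[node_id]),
        reverse=True,
    )
    return ranked[:k]


# Toy 2-dimensional "embeddings"; real ones have far more dimensions.
stored = {
    "table_summary": [0.9, 0.1],
    "intro_text": [0.1, 0.9],
    "footnote": [0.5, 0.5],
}
print(top_k_by_cosine([1.0, 0.0], stored, k=2))
```

A larger k gives the LLM more context to answer from, at the cost of a longer prompt.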

from llama_index.core import VectorStoreIndex

recursive_index = VectorStoreIndex(nodes=base_nodes + objects)

recursive_query_engine = recursive_index.as_query_engine(

similarity_top_k=5

)

Step 7: Query the index

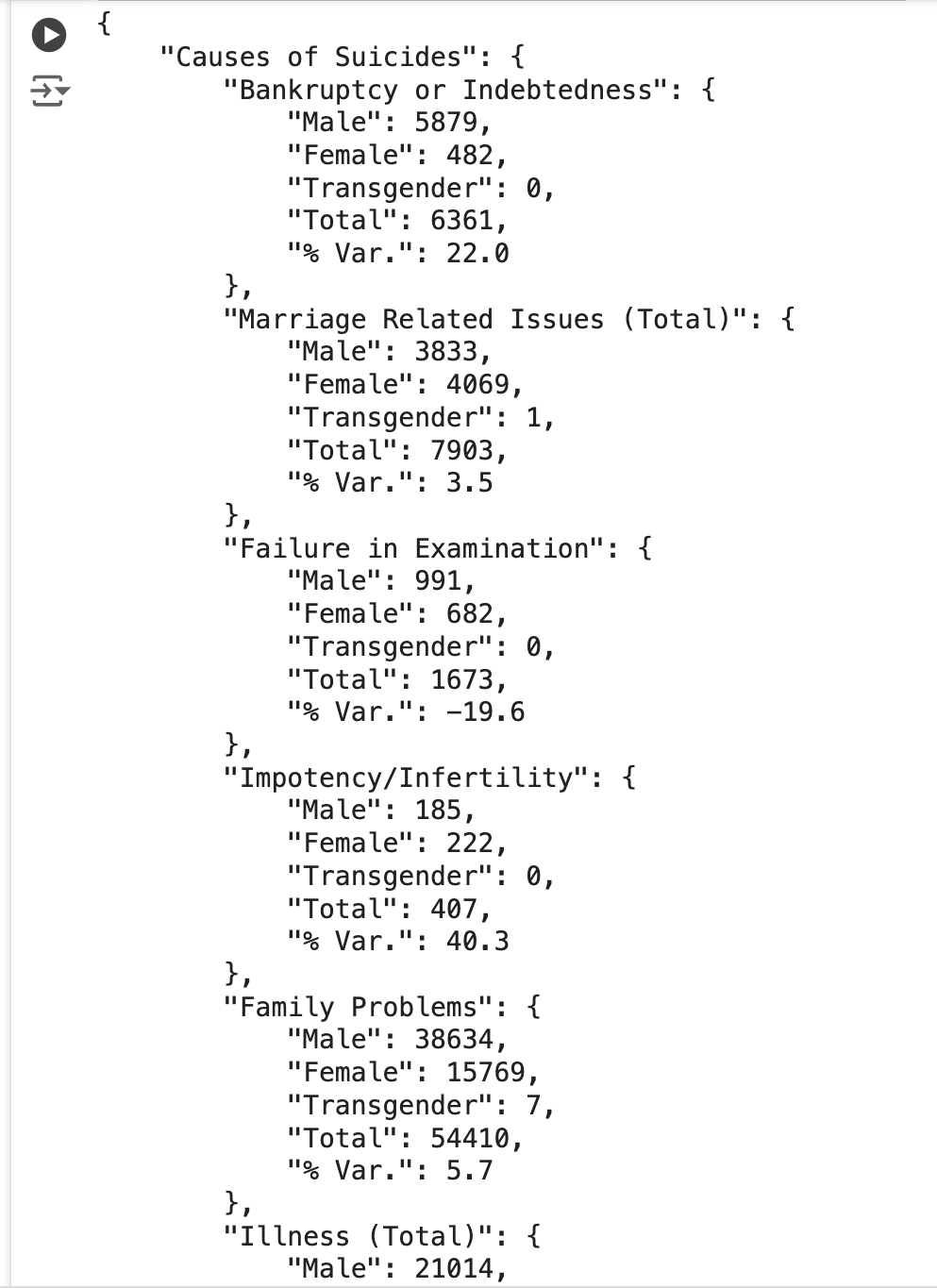

query = 'Extract the table as a dict and exclude any information about 2020. Also include % var'

response = recursive_query_engine.query(query)

print(response)The above user query will query the index of the underlying vector and return the content embedded in the PDF document in JSON format, as shown in the image below.

As you can see in the screenshot, the table was extracted in a clean JSON format.
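Since the response comes back as text, it usually needs one more step before it can be used as a Python dict. The sketch below assumes the model returned a JSON object, possibly surrounded by a sentence or two. `extract_json_object` is a hypothetical helper, and because LLM output is not guaranteed to be valid JSON, it returns None when parsing fails.

```python
import json


def extract_json_object(text):
    """Parse the span from the first "{" to the last "}" in text.

    Returns the parsed dict, or None if no such span exists or it is not
    a valid JSON object.
    """
    start = text.find("{")
    end = text.rfind("}")
    if start == -1 or end <= start:
        return None
    try:
        parsed = json.loads(text[start : end + 1])
    except json.JSONDecodeError:
        return None
    return parsed if isinstance(parsed, dict) else None


# Made-up stand-in for str(response); real content depends on your document.
raw = 'Here is the table: {"row": {"2021": 10, "% var": 1.5}} Hope this helps.'
print(extract_json_object(raw))
```

In the pipeline above, this would be called as `extract_json_object(str(response))`, with a None result treated as a signal to retry or inspect the raw text.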

Step 8: Putting it all together

from llama_index.llms.openai import OpenAI

from llama_index.embeddings.openai import OpenAIEmbedding

from llama_index.core import Settings

from llama_parse import LlamaParse

from llama_index.core.node_parser import MarkdownElementNodeParser

from llama_index.core import VectorStoreIndex

embed_model = OpenAIEmbedding(model="text-embedding-3-small")

llm = OpenAI(model="gpt-3.5-turbo-0125")

Settings.llm = llm

Settings.embed_model = embed_model

documents = LlamaParse(result_type="markdown").load_data("./Table_2021.pdf")

node_parser = MarkdownElementNodeParser(

llm=llm, num_workers=8

)

nodes = node_parser.get_nodes_from_documents(documents)

base_nodes, objects = node_parser.get_nodes_and_objects(nodes)

recursive_index = VectorStoreIndex(nodes=base_nodes + objects)

recursive_query_engine = recursive_index.as_query_engine(

similarity_top_k=5

)

query = 'Extract the table as a dict and exclude any information about 2020. Also include % var'

response = recursive_query_engine.query(query)

print(response)

Conclusion

LlamaParse is an effective tool for extracting complex objects from various document types, such as PDF files, with a few lines of code. However, making full use of it requires some experience with LLM frameworks such as LlamaIndex.

LlamaParse is valuable in handling tasks of varying complexity. However, like any other tool, it is not immune to errors. It is therefore highly recommended to evaluate the application thoroughly, either on your own or with available evaluation tools. Evaluation libraries such as Ragas and Truera provide metrics for assessing the accuracy and reliability of the results. This step helps identify and resolve potential issues before the application is shipped to a production environment.
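Alongside such libraries, a very small manual check can already catch obvious extraction errors: compare a handful of values you have read off the PDF by hand with what the pipeline returned. The sketch below is a minimal version of that idea, not a substitute for Ragas or Truera. `spot_check` and the sample values are hypothetical.

```python
def spot_check(extracted, expected):
    """Compare hand-verified values with extracted ones.

    Returns (accuracy, mismatches), where mismatches maps each failing key
    to an (expected_value, extracted_value) pair.
    """
    if not expected:
        raise ValueError("expected must contain at least one value")
    mismatches = {
        key: (want, extracted.get(key))
        for key, want in expected.items()
        if extracted.get(key) != want
    }
    accuracy = 1 - len(mismatches) / len(expected)
    return accuracy, mismatches


# Placeholder values for illustration only; use cells you verified by hand.
expected = {"cell_a": 10, "cell_b": 20, "cell_c": 30, "cell_d": 40}
extracted = {"cell_a": 10, "cell_b": 20, "cell_c": 31, "cell_d": 40}

accuracy, mismatches = spot_check(extracted, expected)
print(f"accuracy={accuracy:.2f}, mismatches={mismatches}")
```

A check like this only covers exact values in a flat dict, so it works best as a quick regression test next to a fuller evaluation.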

Key takeaways

- LlamaParse is a tool created by the Llama Index team. It extracts complex embedded objects from documents such as PDF files with just a few lines of code.

- LlamaParse offers free and paid plans. The free plan allows you to parse up to 1,000 pages per day.

- LlamaParse currently supports more than 10 file types (.pdf, .pptx, .docx, .html, .xml, and more).

- LlamaParse is part of the LlamaCloud platform, so you need a LlamaCloud account to get an API key.

- With LlamaParse, you can provide natural language instructions to format the output. It even supports image extraction.

The media shown in this article is not the property of Analytics Vidhya and is used at the author's discretion.

Frequently asked questions (FAQ)

Q1. What is LlamaIndex?

A. LlamaIndex is one of the leading LLM frameworks, along with LangChain, for building LLM applications. It helps connect custom data sources to large language models (LLMs) and is a widely used tool for building RAG applications.

Q2. What is LlamaParse?

A. LlamaParse is an offering from Llama Index that can extract complex tables and figures from documents such as PDF and PPT files. Because it comes from the same team, LlamaParse can integrate directly with Llama Index, allowing us to use it in conjunction with the wide variety of agents and tools that Llama Index offers.

Q3. How is LlamaParse different from Llama Index?

A. Llama Index is an LLM framework for creating custom LLM applications and provides various tools and agents. LlamaParse focuses specifically on extracting complex embedded objects from documents such as PDF and PPT files.

Q4. Why is LlamaParse important?

A. The importance of LlamaParse lies in its ability to convert complex unstructured data in tables, images, and similar objects into a structured format, which matters because much of the most valuable information is available only in unstructured form. This transformation is essential for analytical purposes. For example, studying a company's financials from its SEC filings, which can span 100 to 200 pages, would be a challenge without such a tool. LlamaParse provides an efficient way to handle and structure this large amount of unstructured data, making it more accessible and useful for analysis.

Q5. Are there alternatives to LlamaParse?

A. Yes, LLMSherpa and unstructured.io are available alternatives to LlamaParse.
