Image by Editor | Transfer learning flow from Skyengine.ai

In machine learning, where the appetite for data is insatiable, not everyone has the luxury of large data sets to learn from whenever they want. That's where transfer learning comes to the rescue, especially when you're stuck with limited data or the cost of acquiring more is simply too high.

This article will take a closer look at the magic of transfer learning and show how it intelligently uses models that have already learned from massive data sets to give a significant boost to your own machine learning projects, even when your data is sparse.

I'm going to address the obstacles that come with working in data-scarce environments, take a look at what the future holds, and celebrate the versatility and effectiveness of transfer learning in all kinds of different fields.

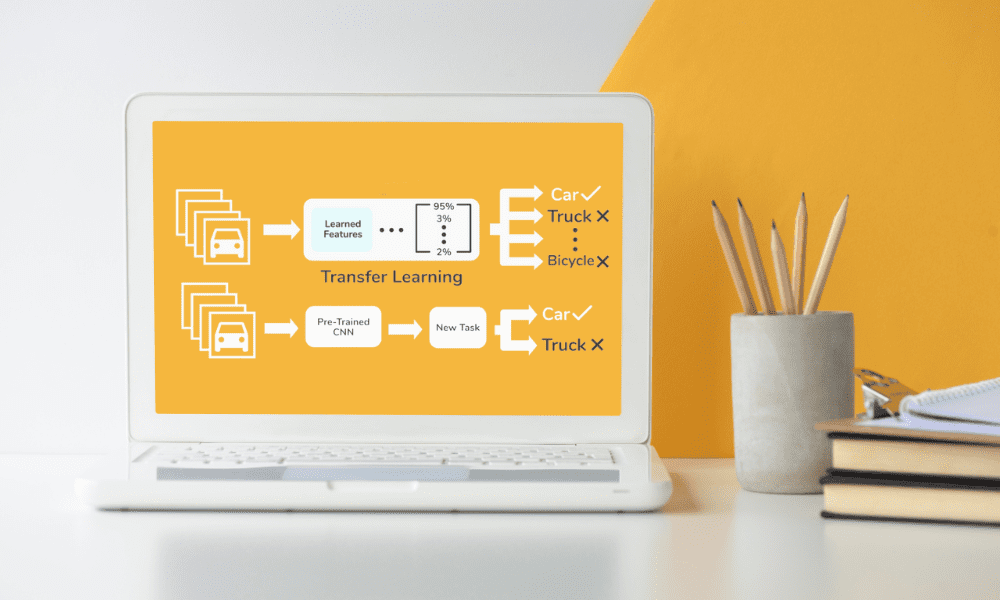

Transfer learning is a machine learning technique that takes a model developed for one task and reuses it as the starting point for a second, related task, adapting it further.

At its core, this approach depends on the idea that knowledge gained while learning one problem can help solve another, somewhat similar problem.

For example, a model trained to recognize objects within images can be adapted to recognize specific types of animals in photographs, leveraging its pre-existing knowledge of shapes, textures, and patterns.

This speeds up the training process and, at the same time, significantly reduces the amount of data required. In small-data scenarios, this is particularly beneficial because it avoids the traditional need for large data sets to achieve high model accuracy.

Using pretrained models allows practitioners to overcome many of the initial hurdles commonly associated with model development, such as feature selection and model architecture design.
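To make the idea of reusing a pretrained model concrete, here is a minimal, framework-free sketch. It assumes a tiny "pretrained" feature extractor whose weights (`PRETRAINED_W`, a made-up stand-in for a model learned on a large source task) stay frozen, while only a new classification head is trained on a handful of target examples. The data, weights, and function names are all hypothetical and chosen only for illustration.

```python
import math

# Hypothetical "pretrained" weights: a stand-in for a feature extractor that
# was learned on a large source task. They are never updated below.
PRETRAINED_W = [[1.0, -1.0], [-1.0, 1.0], [0.5, 0.5]]

def extract_features(x):
    """Frozen base: a fixed linear layer followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_W]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_head(samples, labels, epochs=200, lr=0.5):
    """Train only a new logistic-regression head on top of the frozen features."""
    feats = [extract_features(x) for x in samples]
    w = [0.0] * len(feats[0])
    b = 0.0
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)
            err = p - y  # gradient of the log loss with respect to the logit
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b

def predict(x, w, b):
    f = extract_features(x)
    return 1 if sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b) >= 0.5 else 0

# Tiny target data set: the label is 1 when the first value exceeds the second.
samples = [[2.0, 0.0], [1.5, 0.5], [0.0, 2.0], [0.5, 1.5], [3.0, 1.0], [1.0, 3.0]]
labels = [1, 1, 0, 0, 1, 0]

head_w, head_b = train_head(samples, labels)
print([predict(x, head_w, head_b) for x in samples])
```

In a real project the frozen base would typically be a published network loaded through a deep learning framework, but the division of labor is the same: the base supplies the features, and only the small head is fitted to the scarce data.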

Pretrained models serve as the true foundation for transfer learning, and these models, often developed and trained on large-scale data sets by research institutions or technology giants, are made available to the public.

The versatility of pre-trained models is notable, with applications ranging from image and speech recognition to natural language processing. Adopting these models for new tasks can dramatically reduce the development time and resources required.

For example, models trained on the ImageNet database, which contains millions of labeled images in thousands of categories, provide a rich set of features for a wide range of image recognition tasks.

The adaptability of these models to new and smaller data sets underlines their value, as it allows the extraction of complex features without the need for large computational resources.

Working with limited data presents unique challenges: the main concern is overfitting, where a model learns the training data too well, including its noise and outliers, leading to poor performance with unseen data.

Transfer learning mitigates this risk by using models pre-trained on diverse data sets, thereby improving generalization.

However, the effectiveness of transfer learning depends on the relevance of the pre-trained model to the new task. If the tasks involved are too different, the benefits of transfer learning may not be fully realized.

Additionally, fine-tuning a pre-trained model on a small data set requires careful tuning of parameters to avoid losing valuable knowledge the model has already acquired.
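One way to picture this trade-off is to measure how far the weights drift from their pretrained values during fine-tuning. The sketch below is an illustration under stated assumptions, not a recipe from any particular library: it fine-tunes a small linear model with plain gradient descent and compares an aggressive setting, a cautious one (lower learning rate, fewer steps), and a variant with a penalty that pulls weights back toward the pretrained values. The weights, data, and the `anchor_strength` parameter are hypothetical.

```python
import math

def fine_tune(pretrained, data, lr, steps, anchor_strength=0.0):
    """Gradient descent on mean squared error for y = w . x, starting from
    the pretrained weights. A nonzero anchor_strength adds a penalty that
    pulls each weight back toward its pretrained value."""
    w = list(pretrained)
    n = len(data)
    for _ in range(steps):
        grad = [0.0] * len(w)
        for x, y in data:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            for j, xj in enumerate(x):
                grad[j] += 2 * err * xj / n
        w = [wi - lr * (g + 2 * anchor_strength * (wi - pi))
             for wi, g, pi in zip(w, grad, pretrained)]
    return w

def drift(w, pretrained):
    """Euclidean distance between fine-tuned and pretrained weights."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(w, pretrained)))

# Hypothetical pretrained weights and a very small target data set.
pretrained = [1.0, 2.0, -1.0]
data = [([1.0, 0.0, 0.0], 1.4), ([0.0, 1.0, 0.0], 1.5), ([0.0, 0.0, 1.0], -0.2)]

aggressive = fine_tune(pretrained, data, lr=0.5, steps=100)
cautious = fine_tune(pretrained, data, lr=0.05, steps=20)
anchored = fine_tune(pretrained, data, lr=0.5, steps=100, anchor_strength=1.0)

for name, w in [("aggressive", aggressive), ("cautious", cautious), ("anchored", anchored)]:
    print(name, round(drift(w, pretrained), 3))
```

The aggressive run fits the three target points exactly and moves furthest from the pretrained weights; the other two settings stay closer to what the model already knew. Which setting is appropriate depends on how much the small data set can be trusted.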

In addition to these obstacles, another scenario where data can be compromised is during compression. This applies even to fairly simple actions, such as when you want to compress PDF files. Fortunately, these kinds of problems can be prevented with precise adjustments.

In the context of machine learning, ensuring data integrity and quality, even when data is compressed for storage or transmission, is vital to developing a reliable model.

Transfer learning, with its reliance on pre-trained models, further highlights the need for careful management of data resources to avoid loss of information, ensuring that every piece of data is used to its full potential in both training and application.

Balancing the retention of learned features with adaptation to new tasks is a delicate process that requires a deep understanding of both the model and the available data.
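One common way to manage this balance is to unfreeze the pretrained layers gradually: train only the new head first, then allow earlier layers to update one stage at a time. The following is a small sketch of such a schedule. The layer names and the `epochs_per_stage` parameter are hypothetical, and the function only decides which layers would be trainable at a given epoch; it does not perform any training itself.

```python
def trainable_layers(layer_names, epoch, epochs_per_stage=2):
    """Return the layers allowed to update at a given epoch.

    Layers are ordered from input to output. Training starts with only the
    last layer (the new head) and unfreezes one more layer, moving toward
    the input, every `epochs_per_stage` epochs.
    """
    n_unfrozen = min(len(layer_names), 1 + epoch // epochs_per_stage)
    return layer_names[-n_unfrozen:]

# Hypothetical layer names for a small image model.
layers = ["conv1", "conv2", "conv3", "head"]

for epoch in range(8):
    print(epoch, trainable_layers(layers, epoch))
```

Layers closest to the input, which tend to hold the most general features, are the last to be touched, so the knowledge most likely to transfer is the knowledge that is protected longest.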

The horizon of transfer learning is constantly expanding as research pushes the boundaries of what is possible.

An interesting avenue here is the development of more universal models which can be applied to a wider range of tasks with minimal adjustments required.

Another area of exploration is better algorithms for transferring knowledge between very different domains, which would make transfer learning more flexible.

There is also growing interest in automating the process of selecting and tuning pre-trained models for specific tasks, which could further lower the barrier to entry for using advanced machine learning techniques.

These advances promise to make transfer learning even more accessible and effective, opening new possibilities for its application in fields where data is scarce or difficult to collect.

The beauty of transfer learning lies in its adaptability across all kinds of different domains.

From healthcare, where it can help diagnose diseases with limited patient data, to robotics, where it accelerates learning new tasks without extensive training, the potential applications are enormous.

In the field of natural language processing, transfer learning has enabled significant advances in language models with comparatively small data sets.

This adaptability not only shows the efficiency of transfer learning but also highlights its potential to democratize access to advanced machine learning techniques, enabling smaller organizations and researchers to undertake projects that were previously out of reach due to data limitations.

Even if you are working on a Django platform, you can take advantage of transfer learning to improve the capabilities of your application without having to start over from scratch.

Transfer learning transcends the boundaries of specific programming languages or frameworks, allowing advanced machine learning models to be applied to projects developed in diverse environments.

Transfer learning is not just about overcoming data scarcity; it is also a testament to efficiency and resource optimization in machine learning.

By leveraging knowledge from pre-trained models, researchers and developers can achieve meaningful results with less computational power and time.

This efficiency is particularly important in scenarios where resources are limited, whether in terms of data, computational capabilities, or both.

Since 43% of all websites use WordPress as their CMS, WordPress sites are a great testing ground for ML models that specialize in, say, web scraping or comparing different types of content for contextual and linguistic differences.

This underlines the practical benefits of transfer learning in real-world scenarios, where access to large-scale, domain-specific data may be limited. Transfer learning also encourages the reuse of existing models, aligning with sustainable practices by reducing the need for energy-intensive training from scratch.

The approach exemplifies how strategic use of resources can lead to substantial advances in machine learning, making sophisticated models more accessible and environmentally friendly.

As we conclude our exploration of transfer learning, it is evident that this technique is significantly changing machine learning as we know it, particularly for projects facing limited data resources.

Transfer learning enables the effective use of pre-trained models, allowing both small and large projects to achieve notable results without the need for large data sets or computational resources.

Looking ahead, the potential for transfer learning is vast and varied, and the prospect of making machine learning projects more feasible and less resource-intensive is not only promising; it is already becoming a reality.

This shift toward more accessible and efficient machine learning practices has the potential to spur innovation in numerous fields, from healthcare to environmental protection.

Transfer learning is democratizing machine learning, making advanced techniques available to a much wider audience than ever before.

Nahla Davies is a software developer and technology writer. Before devoting herself full time to technical writing, she managed, among other interesting things, to work as a lead programmer at an Inc. 5,000 experiential brand organization whose clients include Samsung, Time Warner, Netflix, and Sony.