Image generated with Ideogram.ai

Who hasn't heard of OpenAI? The AI research lab has changed the world with its famous product, ChatGPT.

It has changed the landscape of AI adoption, and many companies are now racing to become the next big thing.

Despite huge competition, OpenAI is still the go-to company for many businesses' generative AI needs because it offers some of the best models and ongoing support. The company provides many next-generation generative AI models for different tasks: text generation, image generation, text-to-speech, and many more.

All models offered by OpenAI are available through API calls, so with some simple Python code you can start using them.

In this article, we will explore how to use the OpenAI API with Python and the various tasks it can perform. I hope you learn a lot from it.

To follow this article, there are a few things you need to prepare.

The most important thing you need is an OpenAI API key, because you cannot access the OpenAI models without one. To get a key, register for an OpenAI account and create an API key from your account page. Once the key is created, store it somewhere safe, because it will not be shown in the OpenAI interface again.
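The OpenAI Python client reads the key from the `OPENAI_API_KEY` environment variable by default. A small check can fail early with a clear message before you make any calls. This is a minimal sketch, and `get_openai_api_key` is a hypothetical helper name.

```python
import os


def get_openai_api_key() -> str:
    """Return the key stored in OPENAI_API_KEY, or raise if it is missing.

    Hypothetical helper: the OpenAI client reads this variable on its own,
    so this function only serves as an early, explicit sanity check.
    """
    key = os.environ.get("OPENAI_API_KEY", "").strip()
    if not key:
        raise RuntimeError("OPENAI_API_KEY is not set; set it before creating the client.")
    return key
```

Calling `get_openai_api_key()` at the top of a notebook surfaces a missing key immediately. Without the check, the error would only appear on the first API request.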

Next, you need to purchase prepaid credit to use the OpenAI API. OpenAI recently changed how billing works: instead of paying at the end of the month, you must buy prepaid credit for your API calls. You can visit the OpenAI Pricing page to estimate how much credit you need. You can also consult their models page to decide which model fits your needs.

Lastly, install the OpenAI Python package in your environment, for example with `pip install openai`.

Then set the `OPENAI_API_KEY` environment variable, which the OpenAI client reads by default:

```python
import os

# Replace with your own key; avoid committing real keys to version control
os.environ['OPENAI_API_KEY'] = 'YOUR API KEY'
```

With everything ready, let's start exploring the OpenAI API with Python.

The star of the OpenAI API is its family of text generation models. These large language models produce text output from a text input called a prompt. Prompts are basically instructions on what we expect from the model, such as analyzing text, drafting documents, and much more.

Let's start by executing a simple call to the Text Generation API. We will use OpenAI's GPT-3.5-Turbo as the base model. It is not the most advanced model, but it is one of the cheapest and is usually enough for text-related tasks.

```python
from openai import OpenAI

client = OpenAI()

completion = client.chat.completions.create(
    model="gpt-3.5-turbo",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Generate me 3 Jargons that I can use for my Social Media content as a Data Scientist content creator"}
    ]
)

print(completion.choices[0].message.content)
```

- “Unleashing the power of predictive analytics to drive data-driven decisions!”

- “Dive deep into the ocean of data to discover valuable insights.”

- “Transforming raw data into actionable intelligence through advanced algorithms.”

The text generation call uses the `chat.completions` API endpoint to create a text response from our messages.

There are two parameters required for text generation: model and messages.

For the model, you can check the list of models you can use on the related model page.

For the messages, we pass a list of dictionaries, each with two keys: role and content. The role key specifies who is speaking in the conversation. There are three roles: system, user, and assistant.

Using these roles, we can establish the model's behavior and give it examples of how it should answer our questions.
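The role pattern is easier to see when the list is assembled programmatically. The helper below is a minimal sketch under a simple assumption: a system prompt, then (user, assistant) example pairs, then the new question. `build_messages` is a hypothetical name, not part of the OpenAI library. The list it returns has the same shape as the `messages` argument used in this article.

```python
from typing import Dict, List, Tuple

Message = Dict[str, str]


def build_messages(system: str, examples: List[Tuple[str, str]], question: str) -> List[Message]:
    """Assemble a chat-style messages list.

    `examples` holds (user, assistant) pairs that show the model how we expect
    it to answer; `question` is the new user request it should respond to.
    """
    messages: List[Message] = [{"role": "system", "content": system}]
    for user_text, assistant_text in examples:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    messages.append({"role": "user", "content": question})
    return messages


msgs = build_messages(
    "You are a helpful assistant.",
    [("Give me one data science jargon.", "Feature Engineering helps your model.")],
    "Now give me one content idea based on it.",
)
for m in msgs:
    print(m["role"], "->", m["content"])
```

The resulting `msgs` could then be passed as `messages=msgs` to `client.chat.completions.create`.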

Let's extend the code example above with the assistant role to guide our model. We will also explore some text generation parameters to improve the result.

```python
completion = client.chat.completions.create(
    model="gpt-3.5-turbo",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Generate me 3 jargons that I can use for my Social Media content as a Data Scientist content creator."},
        # An example answer that shows the model the style we expect
        {"role": "assistant", "content": "Sure, here are three jargons: Data Wrangling is the key, Predictive Analytics is the future, and Feature Engineering help your model."},
        {"role": "user", "content": "Great, can you also provide me with 3 content ideas based on these jargons?"}
    ],
    max_tokens=150,
    temperature=0.7,
    top_p=1,
    frequency_penalty=0
)

print(completion.choices[0].message.content)
```

Of course! Here are three content ideas based on the jargons provided:

- “Unlocking the Power of Data Manipulation: A Step-by-Step Guide for Data Scientists” – Create a blog post or video tutorial showcasing best practices and tools for data manipulation in a real-world data science project.

- “The Future of Predictive Analytics: Trends and Innovations in Data Science” – Write a thought leadership article that analyzes emerging trends and technologies in predictive analytics and how they are shaping the future of data science.

- “Mastering Feature Engineering: Techniques to Improve Model Performance” – Develop an infographic or social media series that highlights different feature engineering techniques and their impact on improving the accuracy and efficiency of machine learning models.

The resulting output follows the example we provided to the model. The assistant role is useful when we want the model to follow a certain style or outcome.

As for the parameters, here are simple explanations of each parameter we use:

- max_tokens: This parameter sets the maximum number of tokens (not words) that the model can generate.

- temperature: This parameter controls the unpredictability of the model output. A higher temperature produces more varied and imaginative results, while a lower one makes the output more focused and deterministic. The API accepts values from 0 to 2.

- top_p: Also known as nucleus sampling, this parameter restricts sampling to the smallest set of most likely tokens whose cumulative probability reaches top_p. For example, a top_p value of 0.1 means the model only considers the tokens that make up the top 10% of the probability mass. Values range from 0 to 1, with higher values allowing more diverse output.

- frequency_penalty: This penalizes repeated tokens in the model output. The penalty value can range from -2 to 2, where positive values discourage repetition of tokens and negative values do the opposite, encouraging repeated word use. A value of 0 indicates that no repetition penalty is applied.
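To build intuition for these parameters, here is a toy sketch in plain Python. It applies a frequency penalty, temperature scaling, and top_p (nucleus) filtering to made-up scores for a four-word vocabulary. This is an illustration under simplifying assumptions, not OpenAI's actual sampling code. The vocabulary, the scores, and the function names are hypothetical. The penalty uses a simple linear rule: subtract the penalty times the number of times a token has already appeared.

```python
import math
from typing import Dict

# Hypothetical next-token scores (logits) for a tiny vocabulary
LOGITS = {"data": 2.0, "model": 1.5, "insight": 1.0, "banana": -1.0}


def apply_frequency_penalty(logits: Dict[str, float], counts: Dict[str, int], penalty: float) -> Dict[str, float]:
    """Lower each token's score in proportion to how often it already appeared."""
    return {tok: score - penalty * counts.get(tok, 0) for tok, score in logits.items()}


def softmax_with_temperature(logits: Dict[str, float], temperature: float) -> Dict[str, float]:
    """Convert scores to probabilities; a higher temperature flattens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be > 0 in this sketch (0 acts like greedy decoding)")
    scaled = {tok: s / temperature for tok, s in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def top_p_filter(probs: Dict[str, float], top_p: float) -> Dict[str, float]:
    """Keep the most likely tokens until their cumulative probability reaches top_p, then renormalize."""
    kept: Dict[str, float] = {}
    cumulative = 0.0
    for tok, p in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept[tok] = p
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(kept.values())
    return {tok: p / total for tok, p in kept.items()}


cold = softmax_with_temperature(LOGITS, 0.2)
hot = softmax_with_temperature(LOGITS, 1.5)
print("temperature=0.2:", {t: round(p, 3) for t, p in cold.items()})
print("temperature=1.5:", {t: round(p, 3) for t, p in hot.items()})
print("top_p=0.5:", top_p_filter(hot, 0.5))
penalized = apply_frequency_penalty(LOGITS, {"data": 3}, 0.5)
print("after frequency penalty:", penalized)
```

With the low temperature, almost all probability goes to "data". The higher temperature leaves room for less likely words. top_p=0.5 then drops everything outside the top of the distribution.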

Lastly, you can change the model output to JSON format with the following code.

```python
completion = client.chat.completions.create(
    model="gpt-3.5-turbo",
    # JSON mode; the prompt itself should also ask for JSON
    response_format={"type": "json_object"},
    messages=[
        {"role": "system", "content": "You are a helpful assistant designed to output JSON."},
        {"role": "user", "content": "Generate me 3 Jargons that I can use for my Social Media content as a Data Scientist content creator"}
    ]
)

print(completion.choices[0].message.content)
```

```json
{
  "slang": [
    "Leverage predictive analytics to unlock valuable insights",
    "Digging deeper into the ins and outs of advanced machine learning algorithms",
    "Harness the power of big data to drive data-driven decisions"
  ]
}
```

The result is in JSON format and matches the request in our prompt.
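Because the reply arrives as a string, you still need to parse it before using it in code. JSON mode is meant to produce valid JSON, not a particular schema, so it is worth checking the shape yourself. This minimal sketch uses a copy of the sample output shown above. In practice, `raw_reply` would be `completion.choices[0].message.content`, and the key name (`"slang"` here) depends on what the model chooses to return.

```python
import json

# Sample reply text, copied from the JSON-mode output above
raw_reply = """{
  "slang": [
    "Leverage predictive analytics to unlock valuable insights",
    "Digging deeper into the ins and outs of advanced machine learning algorithms",
    "Harness the power of big data to drive data-driven decisions"
  ]
}"""

data = json.loads(raw_reply)  # raises json.JSONDecodeError on invalid JSON

# Validate the structure ourselves; the key name is taken from this sample only
items = data.get("slang")
if not isinstance(items, list) or not all(isinstance(x, str) for x in items):
    raise ValueError("unexpected reply shape")

for item in items:
    print("-", item)
```

Naming the expected key explicitly in the prompt tends to make this check easier to satisfy.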

For more details on text generation, consult the Text Generation API documentation on its dedicated page.

OpenAI models are not limited to text generation; you can also call the API to generate images.

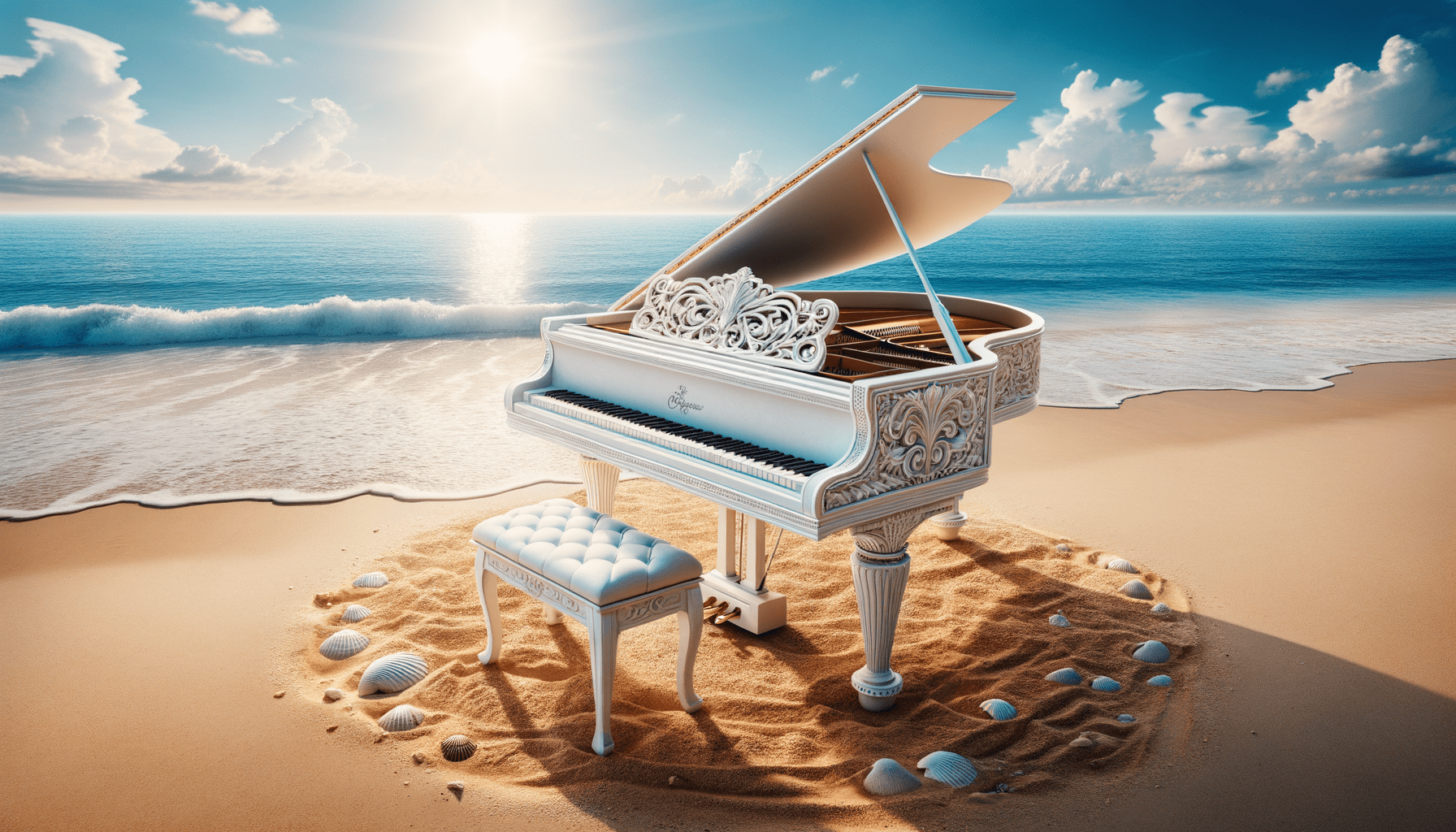

Using the DALL·E model, we can generate an image from a prompt. The easiest way to do this is with the following code.

```python
from openai import OpenAI
from IPython.display import Image

client = OpenAI()

response = client.images.generate(
    model="dall-e-3",
    prompt="White Piano on the Beach",
    size="1792x1024",
    quality="hd",
    n=1,
)

# The response holds a list of generated images; take the URL of the first one
image_url = response.data[0].url
Image(url=image_url)
```

Image generated with DALL·E 3

For the parameters, here are the explanations:

- model: The image generation model to use. Currently, the API only supports the DALL·E 3 and DALL·E 2 models.

- prompt: The text description from which the model generates an image.

- size: The resolution of the generated image. There are three options for the DALL·E 3 model (1024×1024, 1024×1792, or 1792×1024).

- quality: The quality of the generated image. If generation time matters, “standard” is faster than “hd”.

- n: The number of images to generate from the prompt. DALL·E 3 can only generate one image at a time, while DALL·E 2 can generate up to 10.
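These constraints are easy to violate, for example by asking DALL·E 3 for two images. A small pre-flight check can catch that before any credit is spent. This is a minimal sketch, and `check_image_request` is a hypothetical helper. The DALL·E 3 sizes and the `n` limits come from the list above. The DALL·E 2 sizes (256x256, 512x512, 1024x1024) and the rule that `quality="hd"` applies only to DALL·E 3 are added assumptions. All of these are hard-coded and may change as OpenAI updates its models.

```python
from typing import Dict, Set

# Hard-coded assumptions about each model's limits; verify against the current API docs
ALLOWED_SIZES: Dict[str, Set[str]] = {
    "dall-e-3": {"1024x1024", "1792x1024", "1024x1792"},
    "dall-e-2": {"256x256", "512x512", "1024x1024"},
}
MAX_N: Dict[str, int] = {"dall-e-3": 1, "dall-e-2": 10}


def check_image_request(model: str, size: str, n: int = 1, quality: str = "standard") -> None:
    """Raise ValueError if the arguments break the limits listed above."""
    if model not in ALLOWED_SIZES:
        raise ValueError(f"unknown model: {model}")
    if size not in ALLOWED_SIZES[model]:
        raise ValueError(f"{model} does not support size {size}")
    if not 1 <= n <= MAX_N[model]:
        raise ValueError(f"{model} accepts n between 1 and {MAX_N[model]}")
    if quality == "hd" and model != "dall-e-3":
        raise ValueError("quality='hd' is only available for dall-e-3")


check_image_request("dall-e-3", "1792x1024", n=1, quality="hd")  # the request used above passes
try:
    check_image_request("dall-e-3", "1024x1024", n=2)
except ValueError as err:
    print(err)
```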

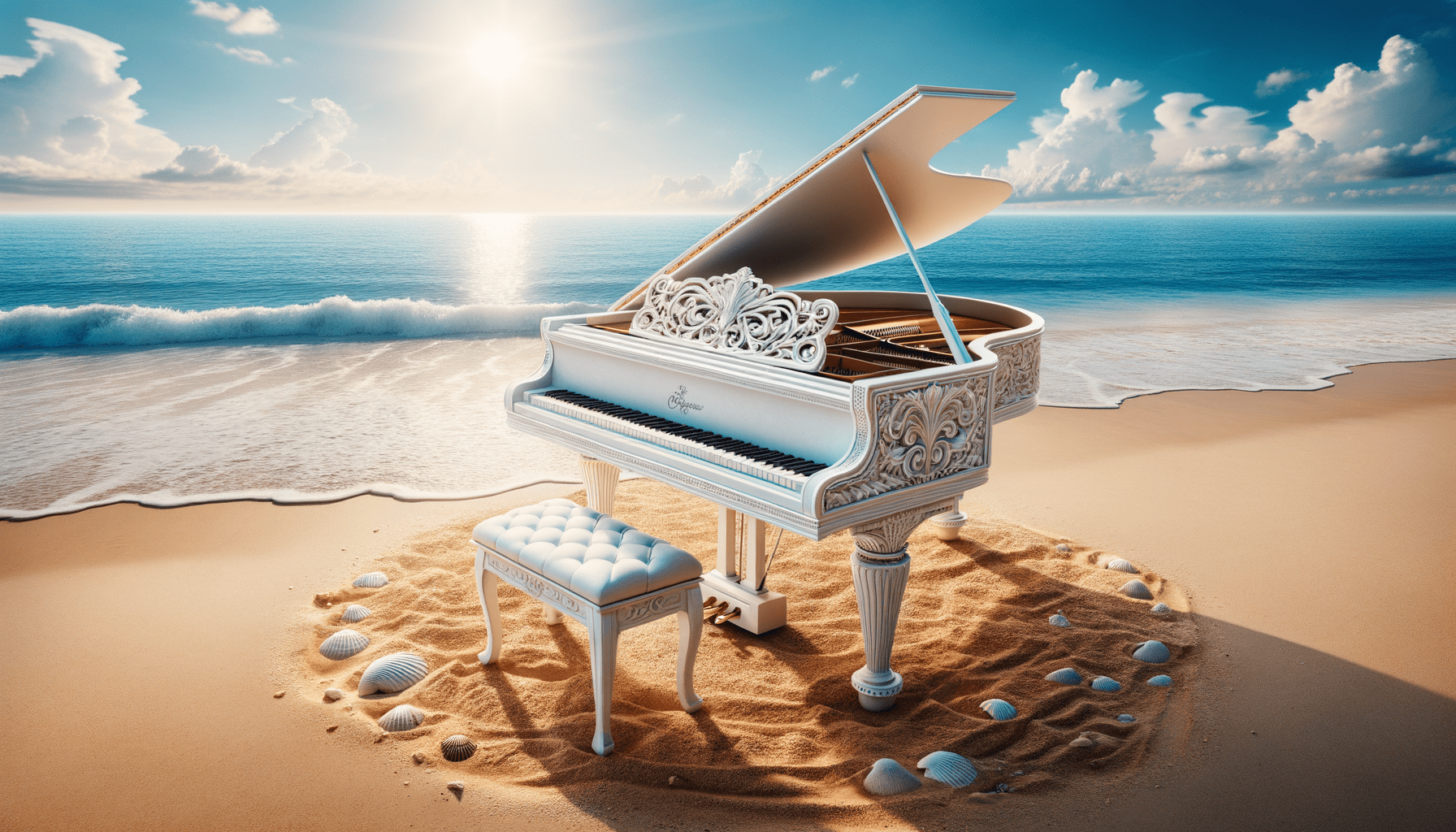

It is also possible to generate variations of an existing image, although this is only available with the DALL·E 2 model. The API only accepts square PNG images smaller than 4 MB.

```python
from openai import OpenAI
from IPython.display import Image

client = OpenAI()

# The source image must be a square PNG smaller than 4 MB
with open("white_piano_ori.png", "rb") as image_file:
    response = client.images.create_variation(
        image=image_file,
        n=2,
        size="1024x1024"
    )

# Display the first of the two variations
image_url = response.data[0].url
Image(url=image_url)
```

The image may not be as good as the DALL·E 3 generation because it uses the older DALL·E 2 model.
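Because the variation endpoint only accepts square PNG images under 4 MB, a quick local check can save a failed request. This minimal sketch reads the width and height from the PNG header using only the standard library. `png_size` and `check_variation_input` are hypothetical helper names. Treating "4 MB" as 4 × 1024 × 1024 bytes is an assumption.

```python
import os
import struct
from typing import Tuple

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
MAX_BYTES = 4 * 1024 * 1024  # assumption: "4 MB" interpreted as 4 MiB


def png_size(path: str) -> Tuple[int, int]:
    """Read (width, height) from the IHDR chunk that follows the PNG signature."""
    with open(path, "rb") as f:
        header = f.read(24)
    # Layout: 8-byte signature, 4-byte chunk length, b"IHDR", then width and height
    if len(header) < 24 or header[:8] != PNG_SIGNATURE or header[12:16] != b"IHDR":
        raise ValueError(f"{path} is not a PNG file")
    width, height = struct.unpack(">II", header[16:24])
    return width, height


def check_variation_input(path: str) -> None:
    """Raise ValueError unless the file is a square PNG below the size limit."""
    width, height = png_size(path)
    if width != height:
        raise ValueError(f"image must be square, got {width}x{height}")
    if os.path.getsize(path) >= MAX_BYTES:
        raise ValueError("image must be smaller than 4 MB")
```

Calling `check_variation_input("white_piano_ori.png")` before `create_variation` turns a rejected upload into a clear local error. Note that an image generated at 1792x1024 would fail the square check, so it needs cropping first.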

OpenAI also provides models that can understand image input. This model is called the Vision model, sometimes referred to as GPT-4V, and it can answer questions about the images we give it.

Let's try the Vision model API. In this example, we will use the white piano image we generated with DALL·E 3 and saved locally. We will also create a function that takes the image path and returns a text description of the image. Don't forget to replace the api_key value with your API key.

```python
import base64

import requests


def provide_image_description(img_path):
    api_key = 'YOUR-API-KEY'

    # Function to encode the image as a base64 string
    def encode_image(image_path):
        with open(image_path, "rb") as image_file:
            return base64.b64encode(image_file.read()).decode('utf-8')

    base64_image = encode_image(img_path)

    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {api_key}"
    }

    payload = {
        # Preview model name from the original example; it may since have been retired,
        # so check the models page for a current vision-capable model
        "model": "gpt-4-vision-preview",
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": "Can you describe this image?"},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:image/jpeg;base64,{base64_image}"}
                    }
                ]
            }
        ],
        "max_tokens": 300
    }

    response = requests.post("https://api.openai.com/v1/chat/completions", headers=headers, json=payload)
    response.raise_for_status()  # surface HTTP errors instead of a confusing KeyError
    return response.json()['choices'][0]['message']['content']


print(provide_image_description("white_piano_ori.png"))
```

This image shows a grand piano placed in a serene beach setting. The piano is white, indicating a finish that is often associated with elegance. The instrument is located right on the edge of the coast, where the gentle waves lightly caress the sand, creating a foam that barely touches the base of the piano and the matching stool. The surroundings of the beach imply a feeling of tranquility and isolation with clear blue skies, fluffy clouds in the distance and a calm sea that expands to the horizon. Scattered around the piano in the sand are numerous seashells of various sizes and shapes, highlighting the natural beauty and serene atmosphere of the surroundings. The juxtaposition of a classical music instrument in a natural beach setting creates a surreal and visually poetic composition.

You can change the text prompt in the payload to ask the Vision model whatever your use case requires.
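The request above labels every image as `image/jpeg` in its data URL. If you want the label to match the actual file type, one option is to guess it from the file extension. This minimal sketch does that with the standard `mimetypes` module and builds the `content` list in the same shape as the payload above. `image_to_data_url` and `build_vision_content` are hypothetical helper names.

```python
import base64
import mimetypes


def image_to_data_url(image_path: str) -> str:
    """Encode a local image as a base64 data URL, guessing the MIME type from its extension."""
    mime_type, _ = mimetypes.guess_type(image_path)
    if mime_type is None or not mime_type.startswith("image/"):
        raise ValueError(f"cannot infer an image MIME type for {image_path}")
    with open(image_path, "rb") as image_file:
        encoded = base64.b64encode(image_file.read()).decode("utf-8")
    return f"data:{mime_type};base64,{encoded}"


def build_vision_content(question: str, image_path: str) -> list:
    """Build the 'content' list for one user message pairing a text question with one image."""
    return [
        {"type": "text", "text": question},
        {"type": "image_url", "image_url": {"url": image_to_data_url(image_path)}},
    ]
```

For a `.png` file, the data URL starts with `data:image/png;base64,`. The returned list can replace the hand-written `"content"` value in the payload.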

OpenAI also provides a Text-to-Speech model for generating audio. It's very easy to use, although the choice of narration voices is limited. The model also supports many languages, which you can see on its language support page.

To generate the audio, you can use the following code.

```python
from openai import OpenAI

client = OpenAI()

speech_file_path = "speech.mp3"

response = client.audio.speech.create(
    model="tts-1",
    voice="alloy",
    input="I love data science and machine learning"
)

# Save the generated audio to a local file
response.stream_to_file(speech_file_path)
```

You should see the audio file in your directory. Try playing it and see whether it meets your standards.

Currently, there are only a few parameters that you can use for the Text to Speech model:

- model: The text-to-speech model to use. Only two models are available, tts-1 and tts-1-hd. tts-1 is optimized for speed and tts-1-hd for quality.

- voice: The voice to use; all voices are optimized for English. The options are alloy, echo, fable, onyx, nova, and shimmer.

- response_format: The audio file format. Currently supported formats are mp3, opus, aac, flac, wav, and pcm.

- speed: The speed of the generated audio. You can select values between 0.25 and 4.

- input: The text to create the audio. Currently, the model only supports up to 4096 characters.
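Because `input` is capped at 4096 characters, longer scripts have to be sent in pieces. This minimal sketch splits text at sentence boundaries where possible. It only hard-cuts a sentence that is itself longer than the limit. `split_for_tts` is a hypothetical helper, and the regex-based sentence splitting is a simplifying assumption.

```python
import re
from typing import List

MAX_INPUT_CHARS = 4096  # input limit stated for the Text-to-Speech model


def split_for_tts(text: str, limit: int = MAX_INPUT_CHARS) -> List[str]:
    """Split text into chunks of at most `limit` characters, preferring sentence boundaries."""
    # Naive sentence split: break after ., ! or ? followed by whitespace
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks: List[str] = []
    current = ""
    for sentence in sentences:
        # A single over-long sentence is hard-split so no chunk exceeds the limit
        while len(sentence) > limit:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(sentence[:limit])
            sentence = sentence[limit:]
        candidate = f"{current} {sentence}" if current else sentence
        if len(candidate) <= limit:
            current = candidate
        else:
            chunks.append(current)
            current = sentence
    if current:
        chunks.append(current)
    return chunks
```

Each chunk could then be sent in its own `client.audio.speech.create(model="tts-1", voice="alloy", input=chunk)` call and saved to a separate file. Keeping sentences whole avoids audible cuts mid-sentence.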

OpenAI also provides models to transcribe and translate audio. Using the Whisper model, we can transcribe audio in any supported language to text and translate it into English.

Let's try a simple transcription of the audio file we generated earlier.

```python
from openai import OpenAI

client = OpenAI()

# Open the audio file in binary mode and close it automatically afterwards
with open("speech.mp3", "rb") as audio_file:
    transcription = client.audio.transcriptions.create(
        model="whisper-1",
        file=audio_file
    )

print(transcription.text)
```

I love data science and machine learning.

It is also possible to translate audio files into English. Translation into other languages is not yet available.

```python
from openai import OpenAI

client = OpenAI()

with open("speech.mp3", "rb") as audio_file:
    translation = client.audio.translations.create(
        model="whisper-1",
        file=audio_file
    )

# The translated English text
print(translation.text)
```

We have explored the various models OpenAI offers through its API: text generation, image generation, vision, text-to-speech, and speech-to-text (transcription and translation). Each model has its own API parameters and specifications that you should know before using it.

Cornellius Yudha Wijaya is an assistant data science manager and data writer. While working full-time at Allianz Indonesia, he loves sharing data and Python tips through social media and print media. Cornellius writes on a variety of artificial intelligence and machine learning topics.
