Introduction

Convolutional Neural Networks (CNNs) have been central to understanding images and patterns, transforming the landscape of deep learning. The journey began with Yann LeCun introducing the LeNet architecture, and today we have a wide range of CNNs to choose from. Traditionally, these networks depended heavily on fully connected layers, especially for classification. But there’s a change in the air. In this article, we explore architectures that use Pointwise Convolution, a lightweight alternative building block for CNNs. This approach challenges the usual reliance on fully connected layers and can make our networks smaller and faster. Come along as we look at how Pointwise Convolution works and how it helps networks operate more efficiently.

Learning Objectives

- Understand the journey of Convolutional Neural Networks (CNNs) from early models like LeNet to the diverse architectures in use today.

- Explore issues related to computational intensity and spatial information loss associated with traditional fully connected layers in CNNs.

- Explore Pointwise Convolution as an efficient feature extraction alternative in CNNs.

- Develop practical skills implementing Pointwise Convolution in CNNs, involving tasks like network modification and hyperparameter tuning.

This article was published as a part of the Data Science Blogathon.

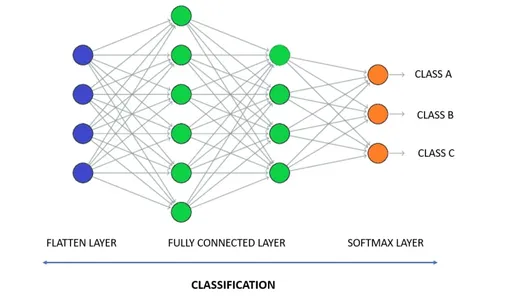

Understanding Fully Connected Layers

In traditional Convolutional Neural Networks (CNNs), fully connected layers connect every neuron in one layer to every neuron in the next, forming a dense interconnection structure. These layers are used in tasks like image classification, where the network learns to associate specific features with particular classes.

Key Points

- Global Connectivity: Fully connected layers create a global connection, allowing each neuron in one layer to be connected to every neuron in the subsequent layer.

- Parameter Intensity: The sheer number of parameters in fully connected layers can substantially increase the model’s parameter count.

- Spatial Information Loss: Flattening the input data in fully connected layers may result in the loss of spatial information from the original image, which can be a drawback in specific applications.

- Computational Intensity: The computational load associated with fully connected layers can be significant, especially as the network scales in size.
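To put a number on the parameter intensity point above, here is a minimal sketch that counts the weights of a single fully connected layer placed after flattening. The 7×7×512 feature map and the 256 units are illustrative values chosen for this example, not figures taken from a specific model in this article.

```python
def dense_params(in_features: int, units: int, use_bias: bool = True) -> int:
    """Number of trainable parameters in one fully connected layer."""
    return in_features * units + (units if use_bias else 0)


# Hypothetical feature map produced by the last convolutional block
height, width, channels = 7, 7, 512

# Flattening turns the 3D map into one long vector; the 2D layout is gone
flattened = height * width * channels

params = dense_params(flattened, units=256)
print(f"flattened vector length: {flattened:,}")
print(f"dense layer parameters:  {params:,}")
```

Every extra pixel or channel in the feature map adds one weight per output unit, which is why the count grows so quickly.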

Usage in Practice

- After Convolutional Layers: Fully connected layers are typically used after convolutional layers in a CNN architecture, where the convolutional layers extract features from the input data.

- Dense Layer: In some cases, fully connected layers are referred to as “dense” layers, highlighting their role in connecting all neurons.

What is the Need for Change?

Now that we’ve got a basic understanding of fully connected layers in regular Convolutional Neural Networks (CNNs), let’s talk about why some folks are looking for something different. While fully connected layers do their job well, they have some challenges. They can be computationally heavy, use a lot of parameters, and sometimes lose essential spatial details from the pictures.

Why We’re Exploring Something New:

- Fully Connected Hiccups: Think of fully connected layers like a hard worker with a few hiccups – they’re effective but come with challenges.

- Searching for Smarter Ways: People seek more innovative and efficient ways to build these networks without these hiccups.

- Making Things Better: The goal is to make these networks work even better – faster, smarter, and use less computing power.

Understanding Pointwise Convolution

Now that we’re intrigued by making our networks smarter and more efficient, let’s get to know Pointwise Convolution, which is a bit of a game-changer in the world of Convolutional Neural Networks (CNNs).

Getting to Know Pointwise Convolution

- What’s Pointwise Convolution? It’s like a new tool in our toolkit for building CNNs. Instead of connecting everything globally like fully connected layers, it’s a bit more focused.

- Changing the Route: If fully connected layers are like the main highway, Pointwise Convolution is like finding a neat shortcut—it helps us get where we want to go faster.

- Less Heavy Lifting: One cool thing about Pointwise Convolution is that it can do its job without using as much computer power as fully connected layers.

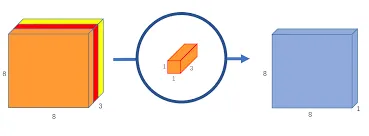

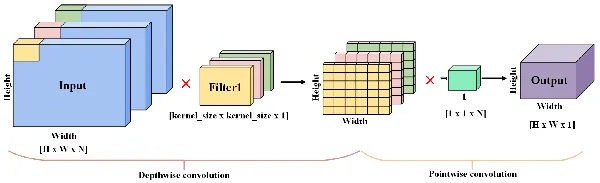

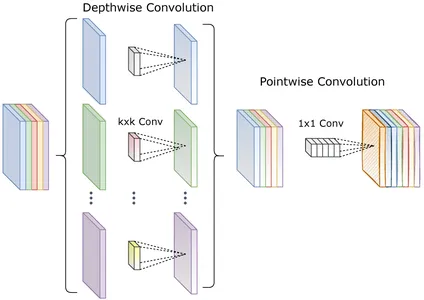

How Does Pointwise Convolution Work?

- Focused Computation: Pointwise Convolution is like having a mini-computation at each specific point in our data. It’s more focused, looking at individual spots rather than the whole picture.

- Notation: We often denote Pointwise Convolution with the term 1×1 convolution because it’s like looking at a single point in our data at a time, hence the “1×1.”
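The "mini-computation at each point" can be written out directly. The sketch below is a plain NumPy illustration, not a framework implementation: it treats a 1×1 convolution as the same small matrix multiplication applied to the channel vector of every pixel. The function name `pointwise_conv` and the array shapes are assumptions made for this example.

```python
import numpy as np


def pointwise_conv(x: np.ndarray, weights: np.ndarray, bias: np.ndarray) -> np.ndarray:
    """Apply a 1x1 convolution to a single feature map.

    x:       (height, width, in_channels)
    weights: (in_channels, out_channels)
    bias:    (out_channels,)
    """
    # Each pixel's channel vector is multiplied by the same weight matrix,
    # so the spatial grid is untouched and only the channel depth changes.
    return x @ weights + bias


rng = np.random.default_rng(0)
x = rng.standard_normal((8, 8, 64))   # 8x8 feature map with 64 channels
w = rng.standard_normal((64, 16))     # maps 64 channels to 16
b = np.zeros(16)

y = pointwise_conv(x, w, b)
print(y.shape)  # (8, 8, 16)

# Cross-check one location against an explicit per-pixel computation
single_pixel = x[3, 5] @ w + b
print(np.allclose(y[3, 5], single_pixel))
```

The height and width stay at 8×8 while the channel count drops from 64 to 16, which is the sense in which the operation looks at one point at a time.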

Advantages of Pointwise Convolution

Now that we’ve got a handle on Pointwise Convolution, let’s dig into why it’s gaining attention as a cool alternative in Convolutional Neural Networks (CNNs).

What Makes Pointwise Convolution Stand Out:

- Reduced Computational Load: Unlike fully connected layers that involve heavy computation, Pointwise Convolution focuses on specific points, making the overall process more efficient.

- Parameter Efficiency: Because its kernel is only 1×1, Pointwise Convolution doesn’t need as many parameters, making our networks less complex and easier to manage.

- Preserving Spatial Information: Remember the spatial information we sometimes lose? Pointwise Convolution helps keep it intact, which is super handy in tasks like image processing.
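A quick count makes the first two advantages concrete. This is a rough sketch using standard convolution arithmetic; the channel counts and feature map sizes are illustrative assumptions, and the helper names are made up for this example.

```python
def conv_params(kernel_size, in_channels, out_channels):
    """Weights plus biases of a convolution; height and width do not appear."""
    return kernel_size ** 2 * in_channels * out_channels + out_channels


def conv_multiply_adds(kernel_size, in_channels, out_channels, out_h, out_w):
    """Multiply-add operations needed to produce an out_h x out_w output."""
    return kernel_size ** 2 * in_channels * out_channels * out_h * out_w


in_channels, out_channels = 128, 256  # illustrative values

pointwise_params = conv_params(1, in_channels, out_channels)
regular_params = conv_params(3, in_channels, out_channels)
print(f"1x1 parameters: {pointwise_params:,}")
print(f"3x3 parameters: {regular_params:,}")

# The parameter count is fixed, but the work grows with the feature map size
for size in (14, 28):
    ops_1x1 = conv_multiply_adds(1, in_channels, out_channels, size, size)
    ops_3x3 = conv_multiply_adds(3, in_channels, out_channels, size, size)
    print(f"{size}x{size} map: 1x1 = {ops_1x1:,} multiply-adds, 3x3 = {ops_3x3:,}")
```

For the same channel counts, the 1×1 layer needs one ninth of the weights and multiply-adds of a 3×3 layer, and its parameter count does not depend on the image size at all.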

Examples of Pointwise Convolution in Action:

Now that we’ve covered why Pointwise Convolution is a promising approach, let’s delve into some real-world examples of Convolutional Neural Networks (CNNs) where Pointwise Convolution has been successfully implemented.

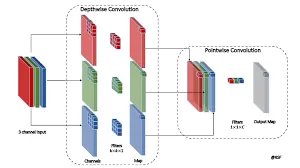

1. MobileNet

- What is MobileNet? MobileNet is a specialized CNN architecture designed for mobile and edge devices, where computational resources may be limited.

- Role of Pointwise Convolution: Pointwise Convolution is a key player in MobileNet’s depthwise separable convolutions: a depthwise convolution filters each channel separately, and a 1×1 pointwise convolution then combines the channels. This split reduces the number of computations and parameters, making MobileNet efficient on resource-constrained devices.

- Impact: By leveraging Pointwise Convolution, MobileNet balances accuracy and computational efficiency, making it a popular choice for on-the-go applications.
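The saving from splitting a convolution into a depthwise step and a pointwise step can be estimated with simple arithmetic. The sketch below counts multiply-add operations for one layer; the layer shape (3×3 kernel, 512 channels in and out, 14×14 output) is an illustrative assumption rather than a measurement of MobileNet itself.

```python
def standard_conv_cost(k, c_in, c_out, h, w):
    """Multiply-adds for a regular k x k convolution with an h x w output."""
    return k * k * c_in * c_out * h * w


def depthwise_separable_cost(k, c_in, c_out, h, w):
    """Multiply-adds for a k x k depthwise step and a 1x1 pointwise step."""
    depthwise = k * k * c_in * h * w   # one k x k filter per input channel
    pointwise = c_in * c_out * h * w   # 1x1 convolution mixes the channels
    return depthwise, pointwise


k, c_in, c_out, h, w = 3, 512, 512, 14, 14  # illustrative layer shape
standard = standard_conv_cost(k, c_in, c_out, h, w)
depthwise, pointwise = depthwise_separable_cost(k, c_in, c_out, h, w)
separable = depthwise + pointwise

print(f"standard conv:       {standard:,}")
print(f"depthwise separable: {separable:,}")
print(f"cost ratio:          {separable / standard:.3f}")
print(f"pointwise share:     {pointwise / separable:.1%}")
```

The ratio works out to 1/c_out + 1/k², so with 3×3 kernels the separable version costs a little over a ninth of the regular convolution. Most of what remains is the pointwise step, which is why efficient 1×1 convolutions matter so much in this family of models.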

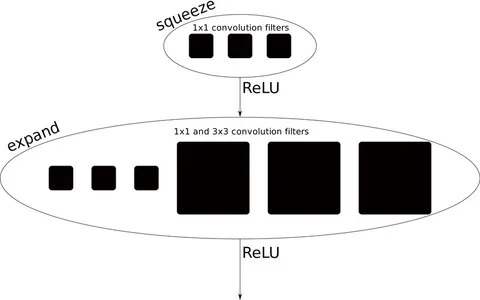

2. SqueezeNet

- What is SqueezeNet? SqueezeNet is a CNN architecture that emphasizes model compression—achieving high accuracy with fewer parameters.

- Role of Pointwise Convolution: Pointwise Convolution is integral to SqueezeNet’s success. It replaces larger convolutional filters, reducing the number of parameters and enabling efficient model training and deployment.

- Advantages: SqueezeNet’s use of Pointwise Convolution demonstrates how this approach can significantly decrease model size without sacrificing performance, making it suitable for environments with limited resources.
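SqueezeNet’s building block, the Fire module, first squeezes the channels with 1×1 filters and then expands them with a mix of 1×1 and 3×3 filters. The sketch below counts weights (biases ignored) for one such module and for a plain 3×3 convolution with the same input and output depth. The channel numbers are illustrative assumptions for this example.

```python
def fire_module_weights(c_in, squeeze, expand_1x1, expand_3x3):
    """Weight count of a Fire module: 1x1 squeeze, then 1x1 and 3x3 expand."""
    squeeze_w = c_in * squeeze                  # 1x1 squeeze layer
    expand_1_w = squeeze * expand_1x1           # 1x1 expand branch
    expand_3_w = 3 * 3 * squeeze * expand_3x3   # 3x3 expand branch
    return squeeze_w + expand_1_w + expand_3_w


def plain_conv_weights(k, c_in, c_out):
    """Weight count of a regular k x k convolution."""
    return k * k * c_in * c_out


# Illustrative sizes: 96 input channels squeezed to 16, expanded to 64 + 64
c_in, squeeze, e1, e3 = 96, 16, 64, 64

fire = fire_module_weights(c_in, squeeze, e1, e3)
plain = plain_conv_weights(3, c_in, e1 + e3)

print(f"Fire module weights: {fire:,}")
print(f"plain 3x3 weights:   {plain:,}")
print(f"reduction factor:    {plain / fire:.1f}x")
```

Two things drive the reduction: the 3×3 filters see only the 16 squeezed channels, and half of the output channels come from cheap 1×1 filters.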

3. EfficientNet

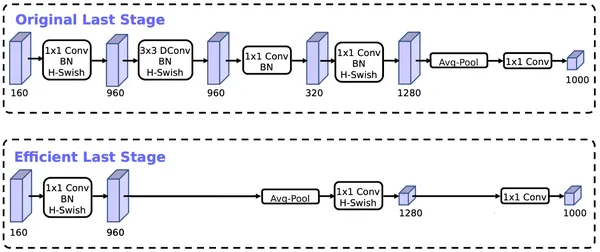

- Overview: EfficientNet is a family of CNN architectures known for achieving state-of-the-art performance while maintaining efficiency.

- Role of Pointwise Convolution: Pointwise Convolution is strategically used in EfficientNet to balance model complexity and computational efficiency across different network scales (B0 to B7).

- Significance: The incorporation of Pointwise Convolution contributes to EfficientNet’s ability to achieve high accuracy with relatively fewer parameters.

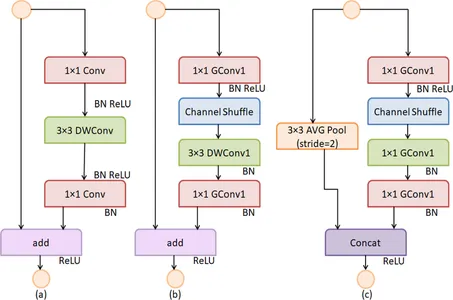

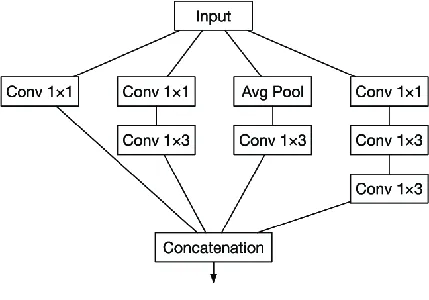

4. ShuffleNet

- Introduction to ShuffleNet: ShuffleNet is designed to improve computational efficiency by introducing channel shuffling and pointwise group convolutions.

- Role of Pointwise Convolution: Pointwise Convolution is a fundamental element in ShuffleNet’s design, reducing the number of parameters and computations.

- Impact: The combination of channel shuffling and Pointwise Convolution allows ShuffleNet to balance model accuracy and computational efficiency, making it suitable for deployment on resource-constrained devices.
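Grouped pointwise convolutions are cheaper because each group only sees its own slice of channels, but that also stops information from flowing between groups. Channel shuffling fixes this by interleaving the channels before the next grouped layer. Below is a minimal NumPy sketch of the idea on a single feature map; the function names and the tiny 6-channel example are assumptions made for illustration.

```python
import numpy as np


def channel_shuffle(x: np.ndarray, groups: int) -> np.ndarray:
    """Interleave channels across groups. x has shape (height, width, channels)."""
    h, w, c = x.shape
    if c % groups != 0:
        raise ValueError("channels must be divisible by groups")
    # Split channels into (groups, channels_per_group), swap the two axes,
    # then flatten back so neighbouring channels come from different groups.
    x = x.reshape(h, w, groups, c // groups)
    x = x.transpose(0, 1, 3, 2)
    return x.reshape(h, w, c)


def grouped_pointwise_weights(c_in: int, c_out: int, groups: int) -> int:
    """Weight count of a 1x1 convolution split into independent groups."""
    return (c_in // groups) * c_out


# One pixel with channels labelled 0..5; groups are [0, 1, 2] and [3, 4, 5]
feature_map = np.arange(6).reshape(1, 1, 6)
shuffled = channel_shuffle(feature_map, groups=2)
print(shuffled.ravel())  # [0 3 1 4 2 5]

print(grouped_pointwise_weights(240, 240, groups=1))
print(grouped_pointwise_weights(240, 240, groups=3))
```

After the shuffle, each group in the next layer receives channels that originated in every group of the previous layer.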

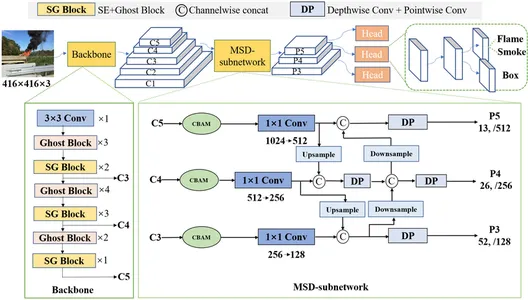

5. GhostNet

- GhostNet Overview: GhostNet is a lightweight CNN architecture designed for efficient training and deployment, focusing on reducing memory and computation requirements.

- Role of Pointwise Convolution: Pointwise Convolution is utilized in GhostNet to reduce the number of parameters and enhance computational efficiency.

- Benefits: Using Pointwise Convolution, GhostNet achieves competitive accuracy with lower computational demands, making it suitable for applications with limited resources.

6. MnasNet

- MnasNet Introduction: MnasNet is a mobile-oriented CNN architecture developed with an emphasis on efficiency and effectiveness on mobile and edge devices.

- Role of Pointwise Convolution: Pointwise Convolution is a key component in MnasNet, contributing to the model’s lightweight design and efficiency.

- Performance: MnasNet showcases how Pointwise Convolution enables the creation of compact yet powerful models suitable for mobile applications.

7. Xception

- Overview of Xception: Xception (Extreme Inception) is a CNN architecture that takes inspiration from the Inception architecture, emphasizing depthwise separable convolutions.

- Role of Pointwise Convolution: Pointwise Convolution forms the final stage of each depthwise separable convolution in Xception, aiding in feature integration across channels and dimensionality reduction.

- Advantages: The integration of Pointwise Convolution contributes to Xception’s ability to capture complex features while maintaining computational efficiency.
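To make the idea of a depthwise separable convolution concrete, here is a small NumPy sketch of the forward pass: a per-channel spatial filter followed by a 1×1 pointwise step that mixes channels. It is a simplified illustration (single image, stride 1, no padding, no bias), and the function names are assumptions for this example, not Xception’s actual code.

```python
import numpy as np


def depthwise_conv(x: np.ndarray, kernels: np.ndarray) -> np.ndarray:
    """Filter each channel with its own k x k kernel (stride 1, no padding).

    x:       (height, width, channels)
    kernels: (k, k, channels)
    """
    k = kernels.shape[0]
    out_h, out_w = x.shape[0] - k + 1, x.shape[1] - k + 1
    out = np.zeros((out_h, out_w, x.shape[2]))
    # Accumulate one kernel tap at a time; channels never mix in this step
    for i in range(k):
        for j in range(k):
            out += x[i:i + out_h, j:j + out_w, :] * kernels[i, j, :]
    return out


def separable_conv(x, depthwise_kernels, pointwise_weights):
    """Depthwise spatial filtering followed by a 1x1 pointwise convolution."""
    spatial = depthwise_conv(x, depthwise_kernels)
    return spatial @ pointwise_weights   # mixes channels at every pixel


rng = np.random.default_rng(0)
x = rng.standard_normal((6, 6, 8))
dw = rng.standard_normal((3, 3, 8))    # one 3x3 kernel per channel
pw = rng.standard_normal((8, 16))      # 1x1 weights: 8 channels to 16

y = separable_conv(x, dw, pw)
print(y.shape)  # (4, 4, 16)
```

The depthwise step handles the spatial pattern and the pointwise step handles the combination of channels, which is the separation of concerns that Xception builds on.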

8. InceptionV3

- Overview: InceptionV3 is a widely-used CNN architecture that belongs to the Inception family. It is known for its success in image classification and object detection tasks.

- Role of Pointwise Convolution: Pointwise Convolution is a fundamental component in the InceptionV3 architecture, contributing to the efficient processing of features across different spatial resolutions.

- Applications: InceptionV3 has been applied across various domains, including medical image analysis in the healthcare sector, where it demonstrates robust performance.
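In Inception-style modules, a 1×1 convolution is commonly placed in front of an expensive larger filter to shrink the channel depth first. The sketch below estimates the multiply-adds for a 5×5 branch with and without that reduction. The shapes (28×28×192 input, reduced to 16 channels, 32 output filters) are illustrative values for an Inception-style branch and should be read as an assumption, not as InceptionV3’s exact configuration.

```python
def conv_cost(k, c_in, c_out, h, w):
    """Multiply-adds for a k x k convolution with an h x w output ('same' padding)."""
    return k * k * c_in * c_out * h * w


h, w, c_in = 28, 28, 192          # illustrative input feature map
reduced_channels, c_out = 16, 32  # illustrative bottleneck and output depth

# Option A: apply the 5x5 convolution directly to all 192 channels
direct = conv_cost(5, c_in, c_out, h, w)

# Option B: 1x1 reduction to 16 channels, then the 5x5 convolution
with_reduction = (
    conv_cost(1, c_in, reduced_channels, h, w)
    + conv_cost(5, reduced_channels, c_out, h, w)
)

print(f"direct 5x5:         {direct:,}")
print(f"1x1 then 5x5:       {with_reduction:,}")
print(f"reduction factor:   {direct / with_reduction:.1f}x")
```

The output shape is identical in both cases; the pointwise layer simply makes the large filter operate on far fewer channels.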

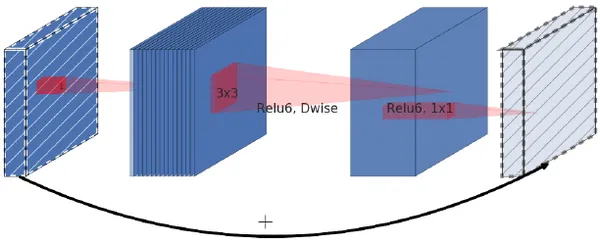

9. MobileNetV2

- Introduction to MobileNetV2: MobileNetV2 is a follow-up to MobileNet, designed for mobile and edge devices. It focuses on achieving higher accuracy and improved efficiency.

- Role of Pointwise Convolution: MobileNetV2 extensively uses Pointwise Convolution to streamline and enhance the architecture by reducing computation and parameters.

- Significance: MobileNetV2 has become popular for on-device processing due to its lightweight design, making it suitable for applications like image recognition on mobile devices.
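MobileNetV2’s building block, the inverted residual, wraps a depthwise convolution between two pointwise convolutions: one that expands the channels and one that projects them back down. The sketch below breaks down the weight count of one such block. The 24-channel width is an illustrative assumption, the expansion factor of 6 is used here as a typical setting, and biases and normalization parameters are ignored.

```python
def inverted_residual_weights(c_in, c_out, expansion=6, k=3):
    """Weight breakdown of a 1x1 expand, k x k depthwise, 1x1 project block."""
    hidden = c_in * expansion
    return {
        "expand_1x1": c_in * hidden,    # pointwise: widen the representation
        "depthwise": k * k * hidden,    # one spatial filter per hidden channel
        "project_1x1": hidden * c_out,  # pointwise: narrow it again
    }


weights = inverted_residual_weights(c_in=24, c_out=24)
total = sum(weights.values())
pointwise_share = (weights["expand_1x1"] + weights["project_1x1"]) / total

for name, count in weights.items():
    print(f"{name:12s} {count:,}")
print(f"total        {total:,}")
print(f"share held by the two pointwise layers: {pointwise_share:.1%}")
```

The spatial filtering is almost free in terms of weights; nearly all of the block’s capacity sits in the two 1×1 layers.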

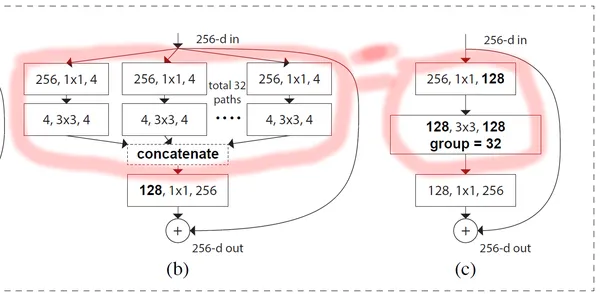

10. ResNeXt

- ResNeXt Overview: ResNeXt, a variant of the ResNet architecture, emphasizes a cardinality parameter, enabling the model to capture richer feature representations.

- Role of Pointwise Convolution: ResNeXt employs Pointwise Convolution to enhance the network’s ability to capture diverse features through flexible feature fusion.

- Impact: The use of Pointwise Convolution in ResNeXt contributes to its success in image classification tasks, particularly in scenarios where diverse features are crucial.
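The cardinality idea can be checked with a small weight count. In the sketch below, a bottleneck block uses a 1×1 convolution to narrow the channels, a 3×3 convolution (optionally split into groups) in the middle, and another 1×1 convolution to restore the depth. The two configurations compared (width 64 with 1 group, and width 128 with 32 groups, both on 256 channels) are illustrative assumptions, and biases are ignored.

```python
def grouped_conv_weights(k, c_in, c_out, groups=1):
    """Weight count of a k x k convolution split into independent groups."""
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by groups")
    return k * k * (c_in // groups) * c_out


def bottleneck_weights(c_io, width, groups):
    """1x1 reduce, 3x3 (grouped) transform, 1x1 restore."""
    reduce_w = grouped_conv_weights(1, c_io, width)
    spatial_w = grouped_conv_weights(3, width, width, groups)
    restore_w = grouped_conv_weights(1, width, c_io)
    return reduce_w + spatial_w + restore_w


resnet_style = bottleneck_weights(c_io=256, width=64, groups=1)
resnext_style = bottleneck_weights(c_io=256, width=128, groups=32)

print(f"single path, width 64:   {resnet_style:,}")
print(f"32 groups,   width 128:  {resnext_style:,}")
```

Both blocks land at roughly 70 thousand weights, so the grouped version gets a wider middle layer and 32 parallel paths for about the same budget, with the pointwise layers doing the fusion between paths.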

Case Studies and Comparisons

Now that we’ve explored several popular Convolutional Neural Networks (CNNs) leveraging Pointwise Convolution, let’s investigate specific case studies and comparisons to understand how these networks perform in real-world scenarios.

1. Image Classification: MobileNet vs. VGG16

- Scenario: Compare the performance of MobileNet (utilizing Pointwise Convolution for efficiency) and VGG16 (traditional architecture with fully connected layers) in image classification tasks.

- Observations: Evaluate accuracy, computational speed, and model size to showcase the advantages of Pointwise Convolution in terms of efficiency without compromising accuracy.
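Model size is the easiest part of this comparison to reason about before running anything. As a starting point, the sketch below counts VGG16’s parameters layer by layer, assuming the standard configuration (224×224 input, thirteen 3×3 convolutions, three fully connected layers, 1000 classes). It only covers the VGG16 side; accuracy and speed still have to be measured on your own data and hardware.

```python
def conv_params(c_in, c_out, k=3):
    """Weights plus biases of a k x k convolution."""
    return k * k * c_in * c_out + c_out


def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


# (in_channels, out_channels) of the 13 convolutional layers
conv_layers = [
    (3, 64), (64, 64),
    (64, 128), (128, 128),
    (128, 256), (256, 256), (256, 256),
    (256, 512), (512, 512), (512, 512),
    (512, 512), (512, 512), (512, 512),
]

# Flattened 7x7x512 feature map feeding three fully connected layers
dense_layers = [(7 * 7 * 512, 4096), (4096, 4096), (4096, 1000)]

conv_total = sum(conv_params(c_in, c_out) for c_in, c_out in conv_layers)
dense_total = sum(dense_params(n_in, n_out) for n_in, n_out in dense_layers)
total = conv_total + dense_total

print(f"convolutional layers:   {conv_total:,}")
print(f"fully connected layers: {dense_total:,}")
print(f"share in dense layers:  {dense_total / total:.1%}")
```

Under these assumptions, close to nine out of ten parameters live in the three fully connected layers, which is the part that architectures built around pointwise convolution avoid.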

2. Edge Device Deployment: MobileNetV2 vs. InceptionV3

- Scenario: Examine the efficiency and suitability of MobileNetV2 (leveraging Pointwise Convolution in a mobile-first design) and InceptionV3 (a larger architecture not designed specifically for mobile hardware) for deployment on edge devices with limited computational resources.

- Results: Assess the trade-off between model accuracy and computational demands, highlighting the benefits of Pointwise Convolution in resource-constrained environments.

3. Semantic Segmentation: U-Net vs. GhostNet

- Scenario: Investigate the performance of U-Net (a traditional architecture for semantic segmentation) and GhostNet (incorporating Pointwise Convolution for efficiency) in medical image segmentation tasks.

- Outcomes: Analyze segmentation accuracy, computational efficiency, and memory requirements to showcase how Pointwise Convolution aids in optimizing models for segmentation tasks.

Implementing Pointwise Convolution

Now, let’s dive into the practical steps of integrating Pointwise Convolution into a Convolutional Neural Network (CNN) architecture. We’ll focus on a simplified example to illustrate the implementation process.

1. Network Modification

- Identify fully connected layers in your existing CNN architecture that you want to replace with Pointwise Convolution.

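# Imports used by the snippets in this section; `model` is assumed to be an existing Keras Sequential model

from tensorflow.keras.layers import Activation, BatchNormalization, Conv2D, Dense

# Original fully connected layer

model.add(Dense(units=256, activation='relu'))

Replace it with:

# Pointwise Convolution layer (expects a 4D feature map, so it must come before any Flatten layer)

model.add(Conv2D(filters=256, kernel_size=(1, 1), activation='relu'))

2. Architecture Adjustment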

- Consider the position of Pointwise Convolution within your network. It’s often used after other convolutional layers to capture and refine features effectively.

# Add Pointwise Convolution after a convolutional layer

model.add(Conv2D(filters=128, kernel_size=(3, 3), activation='relu'))

model.add(Conv2D(filters=256, kernel_size=(1, 1), activation='relu'))  # Pointwise Convolution

3. Hyperparameter Tuning

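- Experiment with the number of filters and the stride based on your specific task requirements. The pointwise layer itself always keeps a 1×1 kernel; kernel size is a tuning knob only for the convolution that precedes it.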
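Before changing these settings in a model, it helps to see how they affect the output size. The sketch below uses the standard output-size formula for a convolution; the default `padding=0` corresponds to no padding, and the 32-pixel input is an arbitrary example value.

```python
def conv_output_size(input_size, kernel_size, stride=1, padding=0):
    """Output length along one spatial axis of a convolution."""
    return (input_size + 2 * padding - kernel_size) // stride + 1


input_size = 32  # arbitrary example width/height
for kernel_size, stride in [(3, 1), (1, 1), (1, 2), (3, 2)]:
    out = conv_output_size(input_size, kernel_size, stride)
    print(f"kernel {kernel_size}x{kernel_size}, stride {stride}: "
          f"{input_size} -> {out}")
```

With stride 1, a 1×1 kernel leaves the spatial size unchanged. With stride 2, a 1×1 kernel halves each dimension by skipping three out of every four positions, so those activations are dropped without being looked at.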

# Fine-tune kernel size and stride

model.add(Conv2D(filters=256, kernel_size=(3, 3), strides=(1, 1), activation='relu'))

model.add(Conv2D(filters=512, kernel_size=(1, 1), strides=(1, 1), activation='relu'))  # Pointwise Convolution

4. Regularization Techniques

- Enhance stability and convergence by incorporating batch normalization.

# Batch normalization with Pointwise Convolution

model.add(Conv2D(filters=512, kernel_size=(1, 1), activation=None))

model.add(BatchNormalization())

model.add(Activation('relu'))

5. Model Evaluation

- Compare the modified network’s performance against the original architecture.

# Original fully connected layer for comparison

model.add(Dense(units=512, activation='relu'))
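As a first sanity check before any training, the two kinds of classifier head can be compared on size and flexibility. The NumPy sketch below builds both on the same random feature map: a flatten-plus-dense head and a 1×1 convolution head. Averaging the pointwise output over all positions (global average pooling) is an addition made for this sketch so that both heads return one score per class; it is not a step prescribed above. All shapes and names are illustrative assumptions, and random weights say nothing about accuracy.

```python
import numpy as np

rng = np.random.default_rng(0)
num_classes = 10
features = rng.standard_normal((4, 4, 32))  # hypothetical final feature map (H, W, C)

# Head A: flatten the feature map, then one fully connected layer
w_dense = rng.standard_normal((4 * 4 * 32, num_classes))
logits_dense = features.reshape(-1) @ w_dense

# Head B: 1x1 convolution to num_classes channels, then average over positions
w_point = rng.standard_normal((32, num_classes))
logits_point = (features @ w_point).mean(axis=(0, 1))

print(f"dense head weights:     {w_dense.size:,}")
print(f"pointwise head weights: {w_point.size:,}")
print(logits_dense.shape, logits_point.shape)

# Head B also accepts a larger feature map without new weights;
# Head A would need a weight matrix with a different number of rows.
larger = rng.standard_normal((6, 6, 32))
logits_larger = (larger @ w_point).mean(axis=(0, 1))
print(logits_larger.shape)
```

A real evaluation would train both variants and compare accuracy, inference time, and model size on held-out data.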

Conclusion

Pointwise Convolution offers a focused and efficient alternative to the usual fully connected layers when designing Convolutional Neural Networks (CNNs). We recommend experimenting to assess its applicability and effectiveness, as both vary with the specific architecture and task. Making Pointwise Convolution work in a network requires careful changes to the architecture and dealing with some challenges. Looking ahead, using Pointwise Convolution suggests a change in how we design CNNs, leading us towards networks that are more efficient and easier to adjust, setting the stage for more progress in deep learning.

Key Takeaways

- Evolution of CNNs: CNNs have evolved significantly from LeNet to the diverse architectures in use today.

- Fully Connected Layers’ Challenges: Traditional layers faced issues like computational intensity and spatial information loss.

- Pointwise Convolution: Promising alternative with a focused and efficient approach to feature extraction.

- Advantages: Brings reduced computational load, improved parameter efficiency, and preserved spatial information.

- Real-world Impact: Crucial role in optimizing network performance, seen in models like MobileNet and SqueezeNet.

- Practical Implementation: Steps include network modification, architecture adjustments, hyperparameter tuning, and model evaluation.

Frequently Asked Questions

A. Pointwise Convolution is a Convolutional Neural Networks (CNNs) technique that focuses on individual points, offering a more efficient alternative to traditional fully connected layers.

A. Unlike fully connected layers, Pointwise Convolution operates at specific points in the input, reducing computational load and preserving spatial information.

A. Pointwise Convolution brings advantages such as reduced computational load, improved parameter efficiency, and preservation of spatial information in CNN architectures.

A. Experimentation determines Pointwise Convolution’s applicability and effectiveness, which may vary based on the specific architecture and task.

A. Implementation involves modifying the network, adjusting architecture, tuning hyperparameters, and considering challenges like overfitting for improved efficiency.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.
