Introduction

November has been dramatic in the ai space. It has been quite a ride from the launch of GPT stores, GPT-4-turbo, to the OpenAI fiasco. But this begs an important question: how trustworthy are closed models and the people behind them? It will not be a pleasant experience when the model you use in production goes down because of some internal corporate drama. This is not a problem with open-source models. You have full control over the models you deploy. You have sovereignty over your data and models alike. But is it possible to substitute an OS model with GPTs? Thankfully, many open-source models are already performing at par or more than the GPT-3.5 models. This article will explore some of the best-performing alternatives for open-source LLMs and LMMs.

Learning Objectives

- Discuss about the open-source Large language models.

- Explore state-of-the-art open-source language models and multi-modal models.

- A mild introduction to quantizing Large language models.

- Learn about tools and services to run LLMs locally and on the cloud.

This article was published as a part of the Data Science Blogathon.

What is an Open-Source Model?

A model is called open-source when the weights and architecture of the model are freely available. These weights are pre-trained parameters of a large language model, for example, Meta’s Llama. These are usually base models or vanilla models without any fine-tuning. Anyone can use the models and fine-tune them on custom data for performing downstream actions.

But are they open? What about data? Most research labs do not release the data that goes into training the base models because of many concerns regarding copyrighted content and data sensitivity. This also brings us to the licensing part of models. Every open-source model comes with a license similar to any other open-source software. Many base models like Llama-1 came with non-commercial licensing, which means you cannot use these models to make money. But models like Mistral7B and Zephyr7B come with Apche-2.0 and MIT licenses, which can be used anywhere without concerns.

Open-Source Alternatives

Since the launch of Llama, there has been an arms race in open-source space to catch up to OpenAI models. And the results have been encouraging so far. Within a year of GPT-3.5, we have models performing on par or better than GPT-3.5 with fewer parameters. But GPT-4 is still the best model for performing general tasks from reasoning and math to code generation. Further looking at the pace of innovation and funding in open-source models, we will soon have models approximating GPT-4’s performance. For now, let’s discuss some great open-source alternatives to these models.

Meta’s Llama 2

Meta released their best model, Llama-2, in July this year, and it became an instant hit owing to its impressive capabilities. Meta released four Llama-2 models with different parameter sizes. Llama-7b, 13b, 34b, and 70b. The models were good enough to beat other open models in their respective categories. But now, multiple models like mistral-7b and Zephyr-7b outperform smaller Llama models in many benchmarks. Llama-2 70b is still one of the best in its category and is worthy of a GPT-4 alternative for tasks like summarising, machine translation, etc.

On several benchmarks, Llama-2 has performed better than GPT-3.5, and it was able to approach GPT-4, making it a worthy substitute for GPT-3.5 and, in some cases, GPT-4. The following graph is a performance comparison of Llama and GPT models by Anyscale.

For more information on Llama-2, refer to this blog on HuggingFace. These LLMs have been shown to perform well when fine-tuned over custom datasets. We can fine-tune the models to perform better at specific tasks.

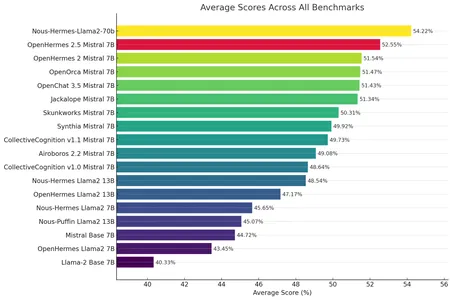

Different research labs have also released fine-tuned versions of Llama-2. These models have shown better outcomes than the original models on many benchmarks. This fine-tuned Llama-2 model, Nous-Hermes-Llama2-70b from Nous Research, has been fine-tuned on over 300,000 custom instructions, making it better than the original meta-llama/Llama-2-70b-chat-hf.

Check out the HuggingFace leaderboard. You can find fine-tuned Llama-2 models with better results than the original models. This is one of the pros of OS models. There are plenty of models to choose from as per the requirements.

Mistral-7B

Since the release of Mistral-7B, it has become the darling of the open-source community. It has been shown to perform much better than any models in the category and approach GPT-3.5’s capabilities. This model can be a substitute for Gpt-3.5 in many cases, such as summarising, paraphrasing, classification, etc.

Few model parameters ensure a smaller model that can be run locally or hosted with cheaper rates than bigger ones. Here is the original huggingFace space for Mistral-7b. Besides being a great performer, one thing that makes Mistral-7b stand out is that it is a raw model without any censorship. Most models are lobotomized with heavy RLHF before launch, making them undesirable for many tasks. But this makes Mistral-7B desirable for doing real-world subject-specific tasks.

Thanks to the vibrant open-source community, quite a few fine-tuned alternatives with better performance than the original Mistral7b models exist.

OpenHermes-2.5

OpenHermes-2.5 is a Mistral fine-tuned model. It has shown remarkable results across the evaluation metrics (GPT4ALL, TruthfullQA, AgiEval, BigBench). For many tasks, this is indistinguishable from GPT-3.5. For more information on OpenHermes, refer to this HF repository: teknium/OpenHermes-2.5-Mistral-7B.

Zephyr-7b

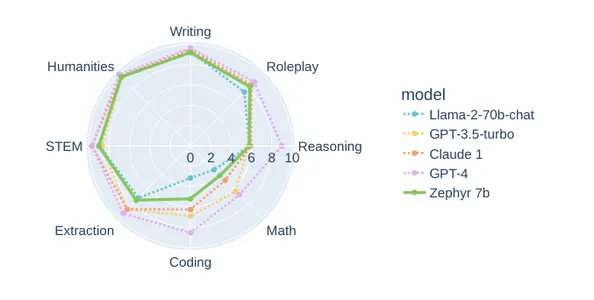

Zephyr-7b is another fine-tuned model of Mistral-7b by HuggingFace. Huggingface has fully fine-tuned the Mistral-7b using DPO (Direct Preference Optimization). Zephyr-7b-beta performs on par with bigger models like GPT-3.5 and Llama-2-70b on many tasks, including writing, humanities subjects, and roleplay. Following is a comparison between Zephyr-7b and other models on MTbench. This can be a good substitute for GPT-3.5 in many ways.

Here is the official HuggingFace repository: HuggingFaceH4/zephyr-7b-beta.

Intel Neural Chat

The Neural chat is a 7B LLM model fine-tuned from Mistral-7B by Intel. It has shown remarkable performance, topping the Huggingface leaderboard among all the 7B models. The NeuralChat-7b is fine-tuned and trained over Gaudi-2, an Intel chip for making ai tasks faster. The excellent performance of NeuralChat is a result of supervised fine-tuning and Direct optimization preference (DPO) over Orca and slim-orca datasets.

Here is the HuggingFace repository of NeuralChat: Intel/neural-chat-7b-v3-1.

Open-Source Large Multi-Modal Models

After the release of GPT-4 Vision, there has been an increased interest in multi-modal models. Large Language Models with Vision can be great in many real-world use cases, such as question-answering on images and narrating videos. In one such use-case, Tldraw has released an ai whiteboard that lets you create web components from drawings on the whiteboard using GPT-4V’s insane capability to interpret images to codes.

But open source is getting there faster. Many research labs released large multi-modal models such as Llava, Baklava, Fuyu-8b, etc.

Llava

The Llava (Large language and vision Assistant) is a multi-modal model with 13 billion parameters. Llava connects Vicuna-13b LLM and a pre-trained visual encoder CLIP ViT-L/14. It has been fine-tuned over the Visual Chat and Science QA dataset to achieve performance similar to GPT-4V on many occasions. This can be used in visual QA tasks.

BakLlava

BakLlava from SkunkWorksAI is another large multi-modal model. It has Mistral-7b as base LLM augmented with Llava-1.5 architecture. It has shown promising results on par with Llava-13b despite being smaller. This is the model to look for when you need a smaller model with good visual inferencing.

Fuyu-8b

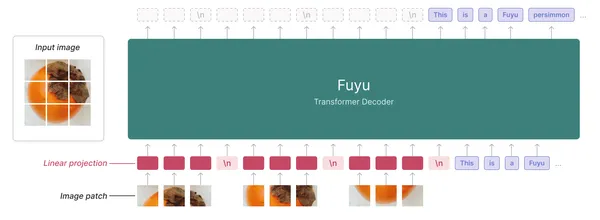

Another open-source alternative is Fuyu-8b. It is a capable multi-modal language model from Adept. Fuyu is a decoder-only transformer without a visual encoder; this is different from Llava, where CLIP is used.

Unlike other multi-modal models that use an image encoder to feed LLM with image data, it linearly projects the pieces of images to the first layer of the transformer. It treats the transformer decoder as an image transformer. Following is an illustration of the Fuyu architecture.

For more information regarding Fuyu-8b, refer to this ai/blog/fuyu-8b” target=”_blank” rel=”noreferrer noopener nofollow”>article. HuggingFace Repository adept/fuyu-8b

How to Use Open LLMs?

Now that we are familiar with some of the best-performing open-source LLMs and LMMs, the question is how to get inferences from an open model. There are two ways we can get inferences from the open-source models. Either we download models on personal hardware or subscribe to a cloud provider. Now, this depends on your use case. These models, even the smaller ones, are compute-intensive and demand high RAM and VRAM. Inferencing these vanilla models on commercial hardware is very difficult. To make this thing easy, the models need to be quantized. So, let’s understand what model quantization is.

Quantization

The quantization is the technique of reducing the precision of floating point integers. Usually, labs release models with weights and activations with higher floating point precision to attain state-of-the-art (SOTA) performance. This makes the models’s computing hungry and un-ideal for running locally or hosting on the cloud. The solution for this is to reduce the precision of weights and embeddings. This is called quantization.

The SOTA models usually have float32 precision. There are different cases in quantization, from fp32 -> fp16, fp-32-> int8, fp32->fp8, and fp32->fp4. This section will only discuss quantization to int8 or 8-bit integer quantization.

Quantization to int8

The int8 representation can only accommodate 256 characters (signed (-128,127), unsigned (0, 256)), while fp32 can have a wide range of numbers. The idea is to find the equivalent projection of fp32 values in (a,b) to the int8 format.

If X is an fp32 number in the range (a,b,), then the quantization scheme is

X = S*(X_q – z)

- X_q = the quantized value associated with X

- S is the scaling parameter. A positive fp32 number.

- z is the zero-point. It is the int8 value corresponding to the value 0 in fp32.

Hence, X_q = round(X/S + z) ∀ X ∈ (a,b)

For fp3,2, values beyond (a,b) are clipped to the nearest representation

X_q = clip( X_q = round(a/S + z) + round(X/S + z) + X_q = round(b/S + z) )

- round(a/S + z) is the smallest and round(b/S + z) is the biggest number in the said number format.

This is the equation for affine or zero-point quantization. This was about 8-bit integer quantization; there are also quantization schemes for 8-bit fp8, 4-bit (fp4, nf4), and 2-bit (fp2). For more information on quantization, refer to this article on HuggingFace.

Model quantization is a complex task. There are multiple open-source tools for quantizing LLMs, such as Llama.cpp, AutoGPTQ, llm-awq, etc. The llama cpp quantizes models using GGUF, AutoGPTQ using GPTQ, and llm-awq using AWQ format. These are different quantization methods to reduce model size.

So, if you want to use an open-source model for inferencing, it makes sense to use a quantized model. However, you will trade some inferencing quality for a smaller model that does not cost a fortune.

Check out this HuggingFace repository for quantized models: https://huggingface.co/TheBloke

Running Models Locally

Often, for various needs, we may need to run models locally. There is a lot of freedom when running models locally. Whether building a custom solution for confidential documents or experimentation purposes, local LLMs provide much more freedom and peace of mind than closed-source models.

There are multiple tools to run models locally. The most popular ones are vLLM, Ollama, and LMstudio.

VLLM

The vLLM is an open-source alternative software written in Python that lets you run LLMs locally. Running models on vLLM requires certain hardware specifications, generally, with vRAM compute capability of more than seven and RAM above 16 GB. You should be able to run on a Collab for testing. VLLM currently supports AWQ quantization format. These are the models you can use with ai/en/latest/models/supported_models.html” target=”_blank” rel=”noreferrer noopener nofollow”>vLLM. And this is how we can run a model locally.

from vllm import LLM, SamplingParams

prompts = (

"Hello, my name is",

"The president of the United States is",

"The capital of France is",

"The future of ai is",

)

sampling_params = SamplingParams(temperature=0.8, top_p=0.95)

llm = LLM(model="mistralai/Mistral-7B-v0.1")

outputs = llm.generate(prompts, sampling_params)

for output in outputs:

prompt = output.prompt

generated_text = output.outputs(0).text

print(f"Prompt: {prompt!r}, Generated text: {generated_text!r}")The vLLM also supports OpenAI endpoints. Hence, you can use the model as a drop-in replacement for existing OpenAI implementation.

import openai

# Modify OpenAI's API key and API base to use vLLM's API server.

openai.api_key = "EMPTY"

openai.api_base = "http://localhost:8000/v1"

completion = openai.Completion.create(model="mistralai/Mistral-7B-v0.1",

prompt="San Francisco is a")

print("Completion result:", completion)Here, we will infer from the local model by using OpenAI SDK.

Ollama

The Ollama is another open-source alternative CLI tool in Go that lets us run open-source models on local hardware. Ollama supports the GGUF quantized models.

Create a Model file in your directory and run

FROM ./mistral-7b-v0.1.Q4_0.ggufCreate an Ollama model from the model file.

ollama create example -f ModelfileNow, run your model.

ollama run example "How to kill a Python process?"Ollama also lets you run Pytorch and HuggingFace models. For more, refer to their official repository.

LMstudio

LMstudio is a closed-source software that conveniently lets you run any model on your PC. This is ideal if you want dedicated software for running models. It has a nice UI to use local models. This is available on Macs(M1, M2), Linux(beta), and Windows.

It also supports GGUF formatted models. Check out their ai/” target=”_blank” rel=”noreferrer noopener nofollow”>official page for more. Make sure it supports your hardware specifications.

Models from Cloud Providers

Running models locally is great for experimentation and custom use cases, but using them in applications requires models to be hosted on the cloud. You can host your model on the cloud via dedicated LLM model providers, such as Replicate and Brev Dev. You can host, fine-tune, and get inferences from models. They provide elastic scalable service for hosting LLMs. Resource allocation will change as per traffic on your model.

Conclusion

Open-source model development is happening at a break-neck pace. Within a year of ChatGPT, we have models much smaller out competing it on many benchmarks. This is just the beginning, and a model on par with GPT-4 might be around the corner. Lately, questions have been raised regarding the integrity of organizations behind closed-source models. As a developer, you will not want your model and services built on top of it to get jeopardized. The open-source solves this. You know your model, and you own the model. Open-source models provide a lot of freedom. You can also have a hybrid structure with OS and OpenAI models to reduce cost and dependency. So, this article was about an introduction to some great performing OS models and concepts related to running them.

So, here are the key takeaways:

- Open models are synonymous with sovereignty. Open-source models provide the much-needed trust factor that closed models fail to do.

- Large language models like Llama-2 and Mistral and their fine tunings have beaten GPT-3.5 on many tasks, making them ideal substitutes.

- Large Multi-modal models such as Llava, BakLlava, and Fuyu-8b have shown promise to be useful in many QA and classification tasks.

- The LLMs are large and compute-intensive. Hence, running them locally requires quantization.

- Quantization is a technique for reducing the model size by casting weights and activation floats to smaller bits.

- Hosting and inferencing locally from OS models require tools like LMstudio, Ollama, and vLLM. To deploy on the cloud, use services like Replicate and Brev.

Frequently Asked Question

A. Yes, there are alternatives to ChatGPT, such as Llama-2 chat, Mistral-7b, Vicuna-13b, etc.

A. It is possible to run open-source LLMs on the local machine using tools like LMstudio, Ollama, and vLLM. It also depends on how capable the local machine is.

A. Open-source models are better and more effective than Gpt-3.5 on many tasks, but GPT-4 is still the best model available.

A. Depending on the use case, open-source models might be cheaper than GPT models, but they must be fine-tuned to perform better at specific tasks.

Ans. Chatbots are LLM that have been fine-tuned over chat-like conversations.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.