Omnimodal large language models (LLMs) are at the forefront of artificial intelligence research and seek to unify multiple modalities of data, such as vision, language, and speech. The main goal is to improve the interactive capabilities of these models, allowing them to perceive, understand and generate results through various inputs, just as a human would. These advances are critical to creating more complete ai systems to engage in natural interactions, respond to visual cues, interpret vocal instructions, and provide consistent responses in text and voice formats. Such a feat involves designing models to manage high-level cognitive tasks while integrating sensory and textual information.

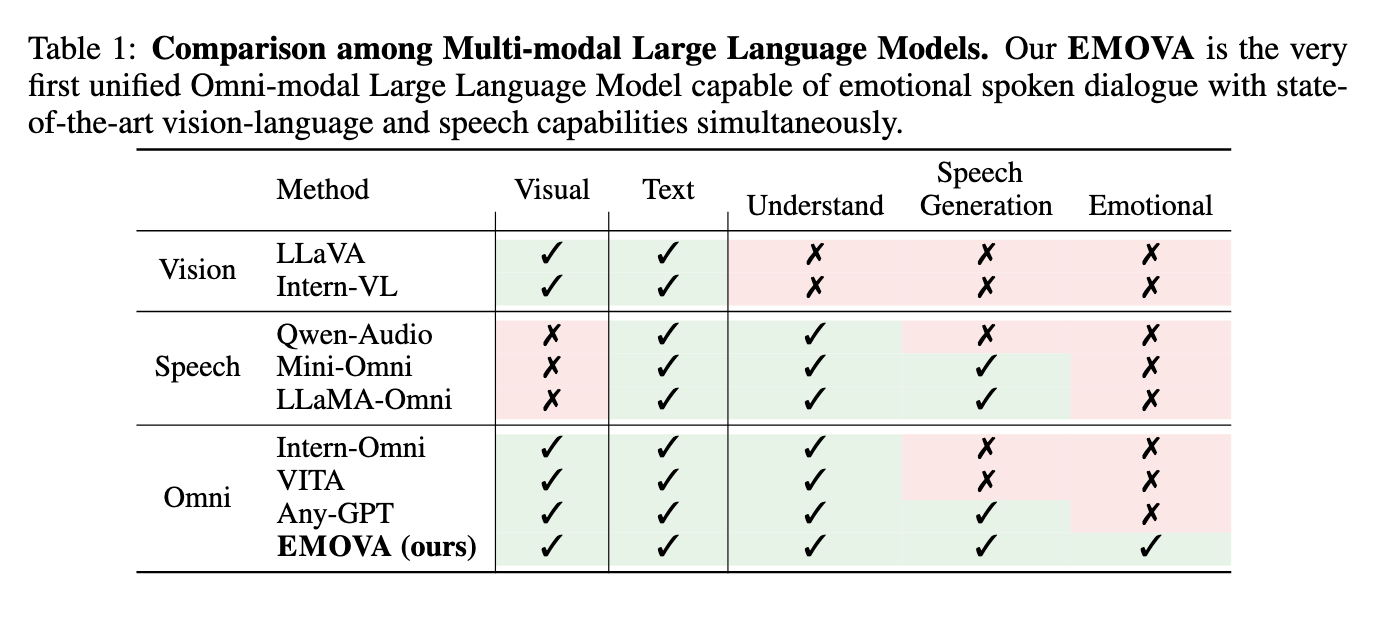

Despite progress in individual modalities, existing ai models need help integrating vision and speech capabilities into a unified framework. Current models focus on vision-language or speech-language, and often fail to achieve a perfect end-to-end understanding of all three modalities simultaneously. This limitation makes it difficult to apply in scenarios that demand real-time interactions, such as virtual assistants or autonomous robots. Furthermore, current speech models rely heavily on external tools to generate vocal outputs, which introduces latency and restricts flexibility in speech style control. The challenge remains to design a model that can overcome these barriers while maintaining high performance in understanding and generating multimodal content.

Various approaches have been taken to improve multimodal models. Visual language models such as LLaVA and Intern-VL employ vision encoders to extract and integrate visual features with textual data. Speech-language models, such as Whisper, use vocoders to extract continuous features, allowing the model to understand vocal inputs. However, these models are limited by their reliance on external text-to-speech (TTS) tools to generate voice responses. This approach limits the model's ability to generate speech in real time and with emotional variation. Furthermore, attempts at omnimodal models, such as AnyGPT, rely on discretized data, which often results in loss of information, especially in visual modalities, reducing the effectiveness of the model in high-resolution visual tasks.

Researchers from the Hong Kong University of Science and technology, the University of Hong Kong, Huawei Noah's Ark Laboratory, the Chinese University of Hong Kong, Sun Yat-sen University and the Southern University of Science and technology have presented EMOVA (Emotionally Omnipresent Voice Assistant). ). This model represents a significant advance in LLM research by seamlessly integrating vision, language, and speech capabilities. EMOVA's unique architecture incorporates a continuous vision encoder and a per-unit speech tokenizer, allowing the model to perform end-to-end processing of visual and speech inputs. By employing a semantic-acoustic disentangled speech tokenizer, EMOVA decouples semantic content (what is said) from acoustic style (how it is said), allowing it to generate speech with various emotional tones. This feature is crucial for real-time spoken dialogue systems, where the ability to express emotions through speech adds depth to interactions.

The EMOVA model comprises multiple components designed to handle specific modalities effectively. The vision encoder captures high-resolution visual features and projects them into the text embedding space, while the speech encoder transforms speech into discrete units that the LLM can process. A critical aspect of the model is the semantic-acoustic disentangling mechanism, which separates the meaning of spoken content from its stylistic attributes, such as tone or emotional tone. This allows researchers to introduce a lightweight style module to control speech output, making EMOVA capable of expressing various emotions and personalized speech styles. Furthermore, the integration of text modality as a bridge to align image and speech data eliminates the need for specialized omnimodal data sets, which are often difficult to obtain.

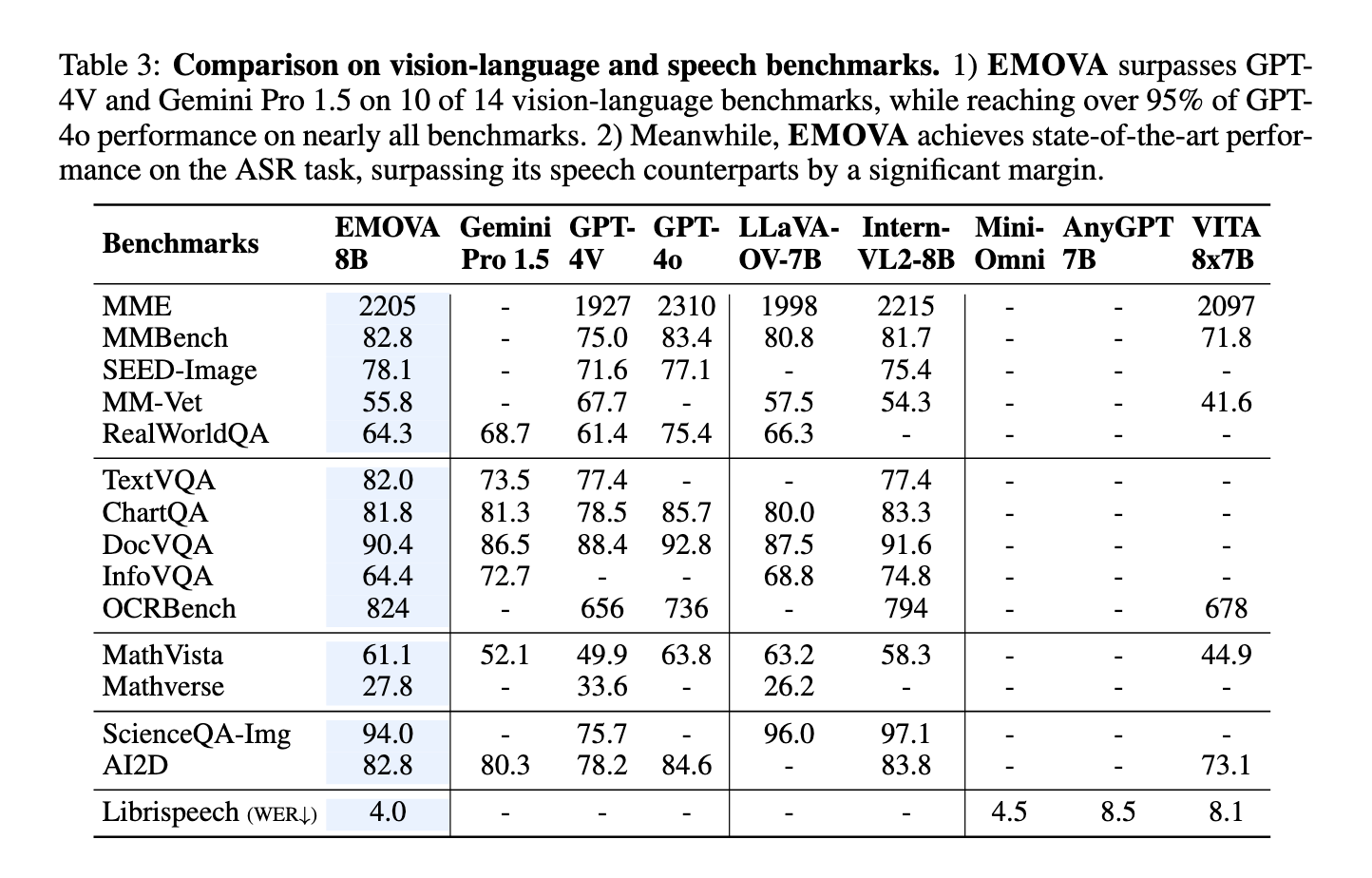

EMOVA's performance has been evaluated on multiple benchmarks, demonstrating its superior capabilities compared to existing models. In speech and language tasks, EMOVA achieved a remarkable 97% accuracy, outperforming other state-of-the-art models such as AnyGPT and Mini-Omni by a margin of 2.8%. On vision and language tasks, EMOVA scored 96% on the MathVision data set, outperforming competing models such as Intern-VL and LLaVA by 3.5%. Furthermore, the model's ability to maintain high accuracy on speech and vision tasks simultaneously is unprecedented, as most existing models typically excel in one modality at the expense of the other. This comprehensive performance makes EMOVA the first LLM capable of supporting real-time, emotionally rich spoken dialogues, while achieving cutting-edge results in multiple domains.

In summary, EMOVA addresses a critical gap in the integration of vision, language, and speech capabilities within a single ai model. Through its innovative semantic-acoustic disentanglement and efficient omnimodal alignment strategy, it not only performs exceptionally well on standard landmarks, but also introduces flexibility into the emotional control of speech, making it a versatile tool for advanced ai interactions. This advance paves the way for further research and development in large omnimodal language models, setting a new standard for future advances in this field.

look at the Paper and Project. All credit for this research goes to the researchers of this project. Also, don't forget to follow us on twitter.com/Marktechpost”>twitter and join our Telegram channel and LinkedIn Grabove. If you like our work, you will love our information sheet.. Don't forget to join our SubReddit over 50,000ml

Are you interested in promoting your company, product, service or event to over 1 million ai developers and researchers? Let's collaborate!

Nikhil is an internal consultant at Marktechpost. He is pursuing an integrated double degree in Materials at the Indian Institute of technology Kharagpur. Nikhil is an ai/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in materials science, he is exploring new advances and creating opportunities to contribute.

<script async src="//platform.twitter.com/widgets.js” charset=”utf-8″>