The researchers investigate whether multiple large language models (LLMs) can improve one another in a negotiation game with little or no human intervention, much as AlphaGo Zero developed AI agents by repeatedly playing competitive games with clearly defined rules. The answer could have far-reaching implications. If agents can improve on their own, powerful agents could be built with little human annotation, unlike today's data-hungry LLM training. It also implies that powerful agents could emerge with little human oversight, which is a concern. In this study, researchers from the University of Edinburgh and the Allen Institute for AI have two language models, one playing a buyer and the other a seller, haggle over a purchase.

The buyer wants to pay less for the product, while the seller is instructed to sell it at a higher price (Fig. 1). A third language model plays the role of critic and gives feedback to one of the players once an agreement has been reached. The players then play the game again, using the critic's AI feedback to refine their approach. The authors chose a bargaining game because its rules can be stated explicitly in text and it has a specific, quantifiable objective (a lower or higher deal price) for strategic negotiation. Although the game looks simple at first, it requires non-trivial language model abilities, because the model must be able to:

- Clearly understand and strictly follow the textual rules of the bargaining game.

- Respond to the textual feedback provided by the critic LM and improve iteratively based on it.

- Reflect on long-term strategy and feedback and improve over multiple rounds.
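The article describes the game only in prose. As a rough sketch of its structure, assuming simple rule-based players in place of LLMs, the loop below alternates offers and stops when the buyer's bid meets the seller's ask. All names (`play_bargain`, `concession_policy`, and so on) and all prices are hypothetical; in the actual study the players converse in natural language, so the deal price would have to be read out of the dialogue rather than passed around as a number.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

# A transcript is a list of (role, offered_price) pairs in turn order.
Transcript = List[Tuple[str, float]]
# Hypothetical stand-in for an LLM player: maps the transcript so far to a new offer.
Policy = Callable[[Transcript], float]


@dataclass
class Episode:
    transcript: Transcript
    deal_price: Optional[float]  # None means no agreement was reached


def latest_offer(transcript: Transcript, role: str) -> Optional[float]:
    """Return the most recent price offered by `role`, or None if it has not spoken."""
    for r, price in reversed(transcript):
        if r == role:
            return price
    return None


def concession_policy(role: str, start: float, limit: float, step: float) -> Policy:
    """Hypothetical rule-based player: concede `step` per own turn, never past `limit`."""
    def policy(transcript: Transcript) -> float:
        own_turns = sum(1 for r, _ in transcript if r == role)
        if role == "seller":
            return max(limit, start - step * own_turns)
        return min(limit, start + step * own_turns)
    return policy


def play_bargain(seller: Policy, buyer: Policy, max_turns: int = 10) -> Episode:
    """Alternate offers, seller first; stop once the buyer's bid meets the seller's ask."""
    transcript: Transcript = []
    for turn in range(max_turns):
        role, policy = ("seller", seller) if turn % 2 == 0 else ("buyer", buyer)
        transcript.append((role, policy(transcript)))
        ask = latest_offer(transcript, "seller")
        bid = latest_offer(transcript, "buyer")
        if ask is not None and bid is not None and bid >= ask:
            # The player who just moved accepts the other side's standing offer.
            return Episode(transcript, ask if role == "buyer" else bid)
    return Episode(transcript, None)


episode = play_bargain(
    seller=concession_policy("seller", start=20, limit=12, step=2),
    buyer=concession_policy("buyer", start=8, limit=14, step=2),
)
print(episode.deal_price)  # 14
```

Lowering the buyer's `limit` below the seller's floor makes the episode run out of turns with `deal_price` set to `None`, which is the "no deal" outcome a quantifiable objective has to account for.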

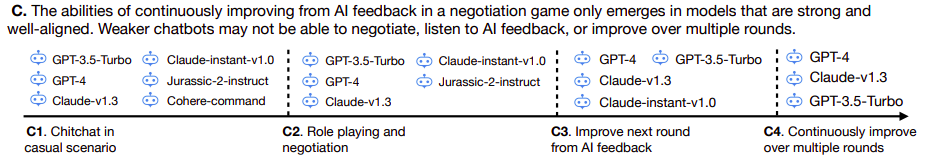

Not all of the models they considered exhibit all of these abilities (Fig. 2). In their experiments, only gpt-3.5-turbo, gpt-4, and claude-v1.3 meet the requirements: they understand the bargaining rules and strategies and are well aligned with AI instructions. In early experiments, the authors also tried more complex text games, such as board games and text-based role-playing games, but agents found it harder to understand and follow their rules. The authors call their method ICL-AIF (In-Context Learning from AI Feedback).

They use the AI critic's feedback and the dialogue history from previous rounds as in-context demonstrations. This turns the player's actual performance in earlier rounds and the critic's suggestions into few-shot examples for the next round of negotiation. They use in-context learning for two reasons: (1) fine-tuning large language models with reinforcement learning is prohibitively expensive, and (2) in-context learning has recently been shown to be closely related to gradient descent, so their conclusions are likely to generalize to fine-tuning the model (resources permitting).
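A minimal sketch of this loop, assuming the players and the critic are reachable through two hypothetical callables, `play_round` and `critique` (stubbed here, not a real LLM API). Earlier dialogues and the critic's feedback are concatenated into the next prompt as few-shot demonstrations, and no model weights are updated. The prompt layout and the `max_rounds` cutoff are illustrative assumptions, not the authors' template.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Round:
    dialogue: str  # transcript of one finished negotiation
    feedback: str  # the critic LM's natural-language advice on that negotiation


def build_icl_prompt(instructions: str, history: List[Round], max_rounds: int = 3) -> str:
    """Turn earlier rounds plus critic feedback into in-context demonstrations.

    Only the most recent `max_rounds` rounds are kept so the prompt stays within
    the model's context window.
    """
    if max_rounds < 1:
        raise ValueError("max_rounds must be at least 1")
    recent = history[-max_rounds:]
    first = len(history) - len(recent) + 1  # 1-based index of the oldest kept round
    parts = [instructions]
    for i, rnd in enumerate(recent, start=first):
        parts.append(f"### Previous round {i}\n{rnd.dialogue}")
        parts.append(f"### Critic feedback on round {i}\n{rnd.feedback}")
    parts.append("### Next round\nUse the feedback above to negotiate a better deal.")
    return "\n\n".join(parts)


def icl_aif_loop(
    play_round: Callable[[str], str],  # hypothetical: runs one negotiation from a prompt
    critique: Callable[[str], str],    # hypothetical: critic LM call returning advice
    instructions: str,
    num_rounds: int,
) -> List[Round]:
    """Repeat play -> critique -> re-prompt; learning happens only through the prompt."""
    history: List[Round] = []
    for _ in range(num_rounds):
        dialogue = play_round(build_icl_prompt(instructions, history))
        history.append(Round(dialogue, critique(dialogue)))
    return history


# Stub players that just report how many demonstrations their prompt contained.
rounds = icl_aif_loop(
    play_round=lambda prompt: f"dialogue seeing {prompt.count('### Previous round')} demos",
    critique=lambda dialogue: "Open with a higher asking price.",
    instructions="You are the seller. Get the highest price you can.",
    num_rounds=5,
)
print([r.dialogue for r in rounds])
```

Capping the number of demonstrations is one possible design choice for long games; the growth of the prompt over rounds is what replaces a gradient update in this setup.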

In reinforcement learning from human feedback (RLHF), the reward is usually a scalar, whereas in ICL-AIF the feedback is given in natural language. This is a notable distinction between the two approaches. Instead of relying on human input after each round, they use AI feedback, since it is more scalable and can help models improve on their own.
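To make the contrast concrete: an RLHF reward model would return a single number for a dialogue, whereas the critic here returns text. The sketch below assumes a hypothetical critic prompt that asks for numbered suggestions, plus a small parser that extracts them so they can be fed into the next round's prompt. The prompt wording and the numbered-list format are assumptions, not taken from the paper.

```python
import re
from typing import List


def build_critic_prompt(role: str, dialogue: str, n_suggestions: int = 3) -> str:
    """Hypothetical critic prompt: ask for natural-language advice instead of a score."""
    goal = "a higher price" if role == "seller" else "a lower price"
    return (
        f"The following is a negotiation. The {role} wants {goal}.\n\n"
        f"{dialogue}\n\n"
        f"Give {n_suggestions} numbered suggestions the {role} could use "
        "to get a better deal in the next round."
    )


def parse_suggestions(feedback: str) -> List[str]:
    """Extract items written as '1. ...' or '2) ...' from the critic's reply.

    An RLHF reward model would instead map the whole dialogue to one float.
    """
    items = []
    for line in feedback.splitlines():
        match = re.match(r"\s*\d+[.)]\s+(.*\S)", line)
        if match:
            items.append(match.group(1))
    return items


reply = "Here are my tips:\n1. Open at a higher price.\n2) Mention the product quality.\nGood luck!"
print(parse_suggestions(reply))  # ['Open at a higher price.', 'Mention the product quality.']
```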

The models respond to feedback differently depending on the role they play: improving models in the buyer role can be harder than improving models in the seller role. Although powerful agents like gpt-4 can keep improving in meaningful ways using past experience and iterative online AI feedback, trying to sell for a higher price (or buy for a lower one) carries the risk of no deal at all. They also show that the model can negotiate in a less verbose but more deliberate (and ultimately more successful) way. Overall, the authors expect their work to be an important step toward improving the negotiation abilities of language models in a game setting with AI feedback. The code is available on GitHub.
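Because pushing for a better price can cost the deal itself, a single average price can hide failed negotiations. One way to surface this trade-off, sketched here under the assumption that each negotiation yields either a deal price or `None` for no deal, is to report the deal rate alongside the mean price of closed deals. The outcome lists are made up for illustration and are not results from the paper.

```python
from statistics import mean
from typing import List, Optional, Tuple


def summarize(deal_prices: List[Optional[float]]) -> Tuple[float, Optional[float]]:
    """Return (deal rate, mean price of closed deals); None marks a failed negotiation."""
    closed = [p for p in deal_prices if p is not None]
    rate = len(closed) / len(deal_prices) if deal_prices else 0.0
    return rate, (mean(closed) if closed else None)


# Made-up seller outcomes for illustration only; these are not results from the paper.
cautious = [14.0, 15.0, 14.0, 16.0]
aggressive = [18.0, None, 17.0, None]
print(summarize(cautious))    # (1.0, 14.75)
print(summarize(aggressive))  # (0.5, 17.5)
```

Under these made-up numbers the aggressive seller earns more per closed deal but closes only half as often, which is the kind of risk described above.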


Aneesh Tickoo is a consulting intern at MarktechPost. She is currently pursuing her bachelor's degree in Information Science and Artificial Intelligence at the Indian Institute of Technology (IIT), Bhilai. She spends most of her time working on projects aimed at harnessing the power of machine learning. Her research interest is image processing, and she is passionate about building solutions around it. She loves connecting with people and collaborating on interesting projects.