Large language models (LLMs) have significantly advanced the field of natural language processing (NLP). These models, recognized for their ability to generate and understand human language, are applied in various domains, such as chatbots, translation services, and content creation. Continued development in this field aims to improve the efficiency and effectiveness of these models, making them more responsive and accurate for real-time applications.

A major challenge facing LLMs is the substantial computational cost and time required for inference. As these models scale, the generation of each token during autoregressive tasks becomes slower, preventing real-time applications. Addressing this issue is crucial to improving application performance and user experience that rely on LLMs, particularly when quick responses are essential.

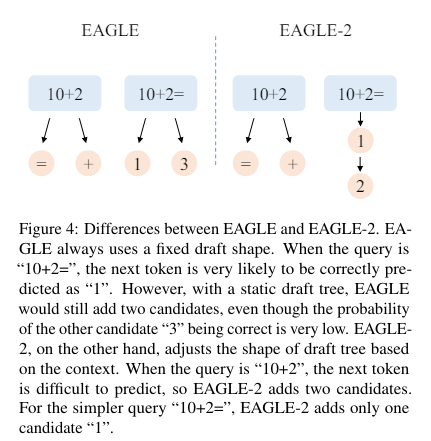

Current methods to alleviate this problem include speculative sampling techniques, which generate and verify tokens in parallel to reduce latency. Traditional speculative sampling methods often rely on static draft trees that are context-insensitive, leading to inefficiencies and suboptimal acceptance rates of draft tokens. These methods aim to reduce inference time, but still face performance limitations.

Researchers from Peking University, Microsoft Research, the University of Waterloo, and the Vector Institute presented EAGLE-2, a method that leverages a context-aware dynamic draft tree to improve speculative sampling. EAGLE-2 builds on the previous EAGLE method and offers significant improvements in speed while maintaining the quality of the generated text. This method dynamically adjusts the draft tree based on context, using confidence scores from the draft model to approximate acceptance rates.

EAGLE-2 dynamically adjusts the draft tree based on context, improving speculative sampling. Its methodology includes two main phases: expansion and reranking. The process begins with the expansion phase, where the preliminary model inputs the most promising nodes from the last layer of the preliminary tree to form the next layer. The confidence scores of the preliminary model approximate the acceptance rates, allowing for efficient token prediction and verification. During the reclassification phase, tokens with higher acceptance probabilities are selected for input to the original LLM during verification. This two-phase approach ensures that the draft tree adapts to the context, significantly improving token acceptance rates and overall efficiency. This method eliminates the need for multiple forward passes, thus speeding up the inference process without compromising the quality of the generated text.

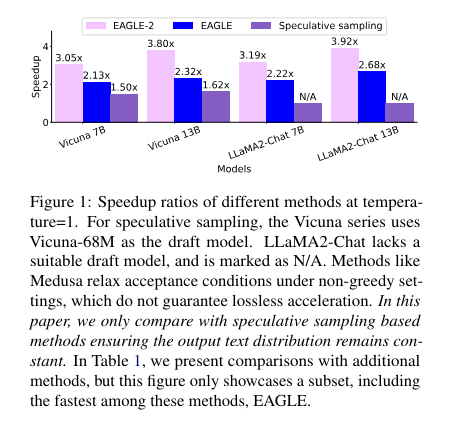

The proposed method showed remarkable results. For example, in multi-turn conversations, EAGLE-2 achieved a speedup of about 4.26x, while in code generation tasks it achieved up to 5x. The average number of tokens generated per redaction verification cycle was significantly higher than other methods, approximately double that of standard speculative sampling. This performance increase makes EAGLE-2 a valuable tool for real-time NLP applications.

Performance evaluations also show that EAGLE-2 achieves speedup rates between 3.05x and 4.26x on various tasks and LLMs, outperforming the previous EAGLE method by 20% to 40%. Maintains the distribution of the generated text, ensuring that output quality is not lost despite the increase in speed. EAGLE-2 demonstrated the best performance in extensive testing on six tasks and three LLM series, confirming its robustness and efficiency.

In conclusion, EAGLE-2 effectively addresses computational inefficiencies in LLM inference by introducing a dynamic context-aware scratch tree. This method offers a substantial performance increase without compromising the quality of the generated text, making it a significant advance in NLP. Future research and applications should consider integrating dynamic context adjustments to further improve the performance of LLMs.

Review the Paper and GitHub. All credit for this research goes to the researchers of this project. Also, don't forget to follow us on twitter.com/Marktechpost”>twitter.

Join our Telegram channel and LinkedIn Grabove.

If you like our work, you will love our Newsletter..

Don't forget to join our SubReddit over 45,000ml

Create, edit, and augment tabular data with the first composite ai system, Gretel Navigator, now generally available! (Commercial)

Nikhil is an internal consultant at Marktechpost. He is pursuing an integrated double degree in Materials at the Indian Institute of technology Kharagpur. Nikhil is an ai/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in materials science, he is exploring new advances and creating opportunities to contribute.

<script async src="//platform.twitter.com/widgets.js” charset=”utf-8″>