Transformer-based architectures have reshaped machine learning, driving progress across natural language processing, computer vision, and beyond. One notable holdout remains: image-level generative models, and diffusion models in particular, still largely rely on convolutional U-Net architectures.

Unlike other domains that have embraced transformers, diffusion models have yet to adopt these architectures, despite their importance in generating high-quality images. Researchers from NYU address this discrepancy by introducing Diffusion Transformers (DiTs), an approach that replaces the conventional U-Net backbone with a transformer, challenging the established norms of diffusion model architecture.

Diffusion models have become sophisticated image-level generative models, yet they have consistently relied on convolutional U-Nets. This research integrates transformers into diffusion models through DiTs. The design follows Vision Transformer (ViT) principles and moves away from U-Net-specific structure, bringing diffusion models in line with the broader architectural trend and letting them benefit from best practices developed in other domains, with the aim of improving scalability, robustness, and efficiency.

DiTs are grounded in the Vision Transformer (ViT) architecture, offering a fresh paradigm for designing diffusion models. The architecture begins with a “patchify” layer, which converts the spatial input into a sequence of tokens by linearly embedding each patch and adding positional embeddings. The tokens then pass through a stack of DiT blocks, which come in several variants that differ in how they handle conditional information: “in-context conditioning,” “cross-attention blocks,” “adaptive layer norm (adaLN) blocks,” and “adaLN-zero blocks.” These block designs, combined with model sizes ranging from DiT-S to DiT-XL, constitute a versatile toolkit for designing powerful diffusion models.
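To make the patchify step concrete, here is a minimal NumPy sketch under stated assumptions. The function names `patchify` and `embed_tokens`, the 4×32×32 input shape, the embedding width of 8, and the random positional embedding are all illustrative choices, not taken from the authors' code; it is a sketch of the idea rather than the reference implementation.

```python
import numpy as np

def patchify(x, p):
    """Split a (C, H, W) array into a (T, p*p*C) sequence of flattened patches."""
    c, h, w = x.shape
    assert h % p == 0 and w % p == 0, "patch size must divide height and width"
    gh, gw = h // p, w // p
    # (C, gh, p, gw, p) -> (gh, gw, p, p, C) -> (T, p*p*C), with T = gh * gw
    x = x.reshape(c, gh, p, gw, p).transpose(1, 3, 2, 4, 0)
    return x.reshape(gh * gw, p * p * c)

def embed_tokens(patches, weight, bias, pos_embed):
    """Linearly embed each patch, then add one positional embedding per token."""
    return patches @ weight + bias + pos_embed

rng = np.random.default_rng(0)
latent = rng.standard_normal((4, 32, 32))  # assumed input shape, for illustration
p, d = 2, 8                                # patch size and (tiny) hidden width

patches = patchify(latent, p)
weight = rng.standard_normal((patches.shape[1], d)) * 0.02
bias = np.zeros(d)
# Random stand-in for the positional embedding table (hypothetical values).
pos_embed = rng.standard_normal((patches.shape[0], d)) * 0.02

tokens = embed_tokens(patches, weight, bias, pos_embed)
print(patches.shape, tokens.shape)  # (256, 16) (256, 8)
```

With a patch size of 2, the 32×32 grid becomes 16×16 = 256 tokens, each carrying 2×2×4 = 16 input values before the linear embedding.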

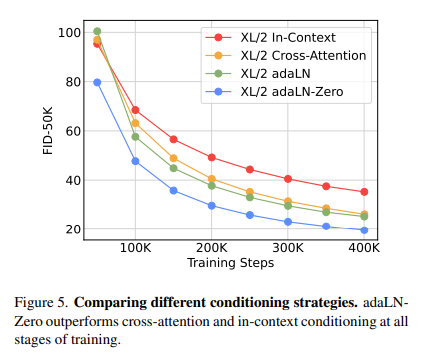

The experiments first compare the DiT block designs. Four DiT-XL/2 models were trained, each using a different block design: “in-context,” “cross-attention,” “adaptive layer norm (adaLN),” and “adaLN-zero.” The adaLN-zero block consistently achieved the best FID scores while also being computationally efficient, which shows how strongly the conditioning mechanism affects model quality. The result also underscores the value of the adaLN-zero initialization, and the authors adopted adaLN-zero blocks for all further DiT experiments.
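The idea behind adaLN-zero can be sketched in a few lines: the conditioning vector is mapped to a shift, a scale, and a gate, and the mapping is initialized to zero so that each block starts out as the identity function. The code below is a simplified illustration, not the authors' implementation: `adaln_zero_block` is a hypothetical name, a single `tanh` layer stands in for the attention and MLP sub-layers, and the `1 + scale` convention is an assumption made so that zero weights leave the normalized input unchanged.

```python
import numpy as np

def layer_norm(x, eps=1e-6):
    """Layer norm over the last axis, without learned scale or shift."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def adaln_zero_block(x, cond, w_mod, b_mod, w_ff):
    """x: (T, d) tokens, cond: (d,) conditioning vector (e.g. timestep + class)."""
    # Regress shift, scale, and gate from the conditioning vector.
    shift, scale, gate = np.split(cond @ w_mod + b_mod, 3)
    h = layer_norm(x) * (1.0 + scale) + shift
    h = np.tanh(h @ w_ff)   # stand-in for the attention / MLP sub-layer
    return x + gate * h     # the gate scales the residual branch

rng = np.random.default_rng(0)
T, d = 16, 8
x = rng.standard_normal((T, d))
cond = rng.standard_normal(d)
w_ff = rng.standard_normal((d, d))

# "Zero" initialization: the modulation layer outputs zeros at the start.
w_mod = np.zeros((d, 3 * d))
b_mod = np.zeros(3 * d)
out_init = adaln_zero_block(x, cond, w_mod, b_mod, w_ff)
print(np.allclose(out_init, x))  # True: the block is the identity at init

# Once the modulation weights move away from zero, conditioning takes effect.
w_trained = rng.standard_normal((d, 3 * d)) * 0.1
out_trained = adaln_zero_block(x, cond, w_trained, b_mod, w_ff)
print(np.allclose(out_trained, x))  # False
```

Because the gate is zero at initialization, the residual branch contributes nothing at first, and the conditioning signal is learned gradually during training.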

Further experiments scale DiT configurations by varying model size and patch size. Visualizations show clear gains in image quality as compute increases, whether that compute comes from enlarging the transformer (more layers or wider hidden dimensions) or from increasing the number of input tokens (using a smaller patch size). The strong correlation between model Gflops and FID-50K emphasizes the importance of compute in driving DiT performance.

Benchmarking DiT against existing diffusion models on ImageNet at 256×256 and 512×512 resolution yields compelling results. DiT-XL/2 surpasses the existing diffusion models in FID-50K at both resolutions, which underscores how well the approach scales. The study also highlights the compute efficiency of DiT-XL/2, supporting its suitability for real-world applications.
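The link between patch size and compute follows from simple arithmetic: for a square input of side `s` and patch size `p`, the transformer sees `(s / p) ** 2` tokens. The sketch below assumes an input side of 32, which is the latent size the DiT paper reports for 256×256 images (a detail not stated in this article), and the patch sizes 8, 4, and 2; `num_tokens` is a hypothetical helper name.

```python
def num_tokens(side, patch_size):
    """Number of tokens for a square input of the given side length."""
    if side % patch_size != 0:
        raise ValueError("patch size must divide the input side")
    return (side // patch_size) ** 2

side = 32  # assumed input side, for illustration
counts = {p: num_tokens(side, p) for p in (8, 4, 2)}
for p, t in counts.items():
    print(f"patch size {p}: {t} tokens")
# patch size 8: 16 tokens
# patch size 4: 64 tokens
# patch size 2: 256 tokens
```

Halving the patch size quadruples the token count, so a "/2" model processes far more tokens than a "/8" model with the same number of parameters, and the cost per forward pass rises accordingly.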

In conclusion, Diffusion Transformers (DiTs) mark a significant shift in generative models. By combining transformers with diffusion models, DiTs challenge traditional architectural norms and offer a promising avenue for both research and real-world applications. The experiments demonstrate their potential for advancing image generation, and the adoption of a transformer backbone is a notable step toward unifying model architectures across domains.

Check out the Paper and Reference Article. All credit for this research goes to the researchers on this project.


Madhur Garg is a consulting intern at MarktechPost. He is currently pursuing his B.Tech in Civil and Environmental Engineering at the Indian Institute of Technology (IIT), Patna. He has a strong passion for Machine Learning and enjoys exploring the latest advancements in technologies and their practical applications. With a keen interest in artificial intelligence and its diverse applications, Madhur is determined to contribute to the field of Data Science and leverage its potential impact in various industries.
