Implementing hardware resilience in your training infrastructure is critical to mitigating risks and enabling uninterrupted model training. By implementing features such as proactive health monitoring and automated recovery mechanisms, organizations can create a fault-tolerant environment capable of handling hardware failures or other issues without compromising the integrity of the training process.

In this post, we introduce the AWS Neuron node problem detector and recovery DaemonSet for AWS Trainium and AWS Inferentia on Amazon Elastic Kubernetes Service (Amazon EKS). This component quickly detects the rare occasions when Neuron devices fail by monitoring logs. It marks worker nodes with a failed Neuron device as unhealthy and promptly replaces them with new worker nodes. By speeding up issue detection and resolution, it increases the reliability of your ML training and reduces the time and cost lost to hardware failures.

This solution is applicable if you are using managed nodes or self-managed node groups (which use Amazon EC2 Auto Scaling groups) on Amazon EKS. At the time of writing this post, automatic recovery of nodes provisioned by Karpenter is not yet supported.

Solution Overview

The solution is based on the node problem detector and recovery DaemonSet, a tool designed to automatically detect and report various node-level problems in a Kubernetes cluster.

The node problem detector component continuously monitors the kernel message (kmsg) logs on the worker nodes. If it detects error messages related specifically to the Neuron device (the Trainium or AWS Inferentia chip), it changes NodeCondition to NeuronHasError on the Kubernetes API server.
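To make the detection step concrete, the following is a minimal Python sketch of scanning kernel log lines for Neuron errors and deriving a node condition. It is not the plugin's implementation: the `NEURON_HW_ERR=<TYPE>` log format, the `NeuronHealth` condition type, and the helper names are assumptions made for illustration. The error types are taken from the CloudWatch metric names listed later in this post.

```python
import re
from typing import Iterable, Optional

# Error types taken from the metric names listed later in this post.
KNOWN_ERRORS = {
    "DMA_ERROR",
    "HANG_ON_COLLECTIVES",
    "HBM_UNCORRECTABLE_ERROR",
    "SRAM_UNCORRECTABLE_ERROR",
    "NC_UNCORRECTABLE_ERROR",
}

# Hypothetical log format, assumed for this sketch only.
ERROR_PATTERN = re.compile(r"NEURON_HW_ERR=(\w+)")


def find_neuron_error(kmsg_lines: Iterable[str]) -> Optional[str]:
    """Return the first known Neuron error type found in the lines, or None."""
    for line in kmsg_lines:
        match = ERROR_PATTERN.search(line)
        if match and match.group(1) in KNOWN_ERRORS:
            return match.group(1)
    return None


def node_condition(kmsg_lines: Iterable[str]) -> dict:
    """Build a Kubernetes-style node condition from the scanned log lines.

    The condition type "NeuronHealth" is a placeholder chosen for this sketch.
    """
    error = find_neuron_error(kmsg_lines)
    if error is None:
        return {"type": "NeuronHealth", "status": "False", "reason": "NeuronHasNoError"}
    return {"type": "NeuronHealth", "status": "True", "reason": f"NeuronHasError_{error}"}
```

Restricting matches to a known set of error types keeps unrelated kernel messages from flipping the node condition.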

The node recovery agent is a separate component that periodically checks the Prometheus metrics exposed by the node problem detector. When it finds a node condition indicating a problem with the Neuron device, it takes automated action. First, it marks the affected instance in the relevant Auto Scaling group as unhealthy, which causes the Auto Scaling group to stop the instance and launch a replacement. Additionally, the node recovery agent publishes Amazon CloudWatch metrics so that users can monitor and alert on these events.
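The two actions described above can be sketched in Python as follows. This is an illustration under stated assumptions, not the agent's actual code: the function name `recover_node` and the `InstanceId` metric dimension are hypothetical. The client methods used are the standard boto3 `set_instance_health` (Auto Scaling) and `put_metric_data` (CloudWatch) calls. The clients are passed in as arguments, so the function can be exercised without AWS access.

```python
from typing import Any


def recover_node(autoscaling: Any, cloudwatch: Any, instance_id: str, error_type: str) -> None:
    """Mark the instance unhealthy and record the event as a CloudWatch metric."""
    # Marking the instance unhealthy lets the Auto Scaling group replace it.
    autoscaling.set_instance_health(
        InstanceId=instance_id,
        HealthStatus="Unhealthy",
        ShouldRespectGracePeriod=False,
    )
    # Publish a data point so users can monitor and alert on the event.
    # The InstanceId dimension is an assumption made for this sketch.
    cloudwatch.put_metric_data(
        Namespace="NeuronHealthCheck",
        MetricData=[
            {
                "MetricName": f"NeuronHasError_{error_type}",
                "Dimensions": [{"Name": "InstanceId", "Value": instance_id}],
                "Value": 1,
                "Unit": "Count",
            }
        ],
    )
```

With boto3 installed and credentials configured, the clients could be created with `boto3.client("autoscaling")` and `boto3.client("cloudwatch")`.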

The following diagram illustrates the solution architecture and workflow.

In the following tutorial, we create an EKS cluster with Trn1 worker nodes, deploy the Neuron plugin for node problem detector, and inject a failure message into the node. We then observe that the failing node is stopped and replaced by a new one, and find a metric in CloudWatch that indicates the failure.

Prerequisites

Before you begin, make sure you have the following tools installed on your machine:

Deploy the node problem detection and recovery plugin

Complete the following steps to configure the Node Problem Detection and Recovery plugin:

- Create an EKS cluster using the Data on EKS Terraform module:

- Install the AWS Identity and Access Management (IAM) role required for the service account and the Node Problem Detector plugin.

- Create the IAM policy for the plugin. Update the Resource key value to match the ARN of the node group that contains the Trainium and AWS Inferentia nodes, and update the ec2:ResourceTag/aws:autoscaling:groupName key value to match the Auto Scaling group name.

You can get these values from the Amazon EKS console. Choose Clusters in the navigation pane, open the trainium-inferentia cluster, choose Node groups, and locate your node group.
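As a rough illustration of where those two values go, the following Python sketch assembles a policy document with a Resource key and an ec2:ResourceTag/aws:autoscaling:groupName condition. The set of actions is an assumption inferred from what the recovery agent does (marking instances unhealthy, looking up instances, publishing metrics). The function name and the example ARN and group name are hypothetical, so check the policy shipped with the plugin before using this.

```python
import json


def build_recovery_policy(asg_arn: str, asg_name: str) -> dict:
    """Assemble an illustrative IAM policy document for the recovery agent."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Lets the agent mark instances in this group as unhealthy.
                "Effect": "Allow",
                "Action": ["autoscaling:SetInstanceHealth"],
                "Resource": asg_arn,
            },
            {
                # Scoped with the tag condition key named in the text.
                "Effect": "Allow",
                "Action": ["ec2:DescribeInstances"],
                "Resource": "*",
                "Condition": {
                    "ForAnyValue:StringEquals": {
                        "ec2:ResourceTag/aws:autoscaling:groupName": asg_name
                    }
                },
            },
            {
                # Limits metric publishing to the NeuronHealthCheck namespace.
                "Effect": "Allow",
                "Action": ["cloudwatch:PutMetricData"],
                "Resource": "*",
                "Condition": {
                    "StringEquals": {"cloudwatch:namespace": "NeuronHealthCheck"}
                },
            },
        ],
    }


if __name__ == "__main__":
    # Placeholder values; replace them with the ones from the Amazon EKS console.
    policy = build_recovery_policy(
        "arn:aws:autoscaling:REGION:ACCOUNT_ID:autoScalingGroup:UUID:autoScalingGroupName/EXAMPLE",
        "EXAMPLE",
    )
    print(json.dumps(policy, indent=2))
```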

This component will be installed as a DaemonSet on your EKS cluster.

The container images in the Kubernetes manifests are stored in public repositories such as registry.k8s.io and public.ecr.aws. For production environments, we recommend that customers limit external dependencies on these registries by hosting container images in a private registry and syncing them from the public repositories. For detailed implementation information, see the blog post Announcing Pull-Through Cache for registry.k8s.io on Amazon Elastic Container Registry.

By default, the node problem detector will not take any action in case of node failure. If you want the agent to automatically terminate the EC2 instance, update the DaemonSet as follows:

kubectl edit -n neuron-healthcheck-system ds/node-problem-detector

...
   env:
   - name: ENABLE_RECOVERY
     value: "true"

Test the node problem detector and recovery solution

After the plugin is installed, you can see the Neuron conditions appear when you run kubectl describe node. We simulate a device failure by injecting error logs into the instance:

After about 2 minutes, you will be able to see that the error has been identified:

kubectl describe node ip-100-64-58-151.us-east-2.compute.internal | grep 'Conditions:' -A7

Now that the node problem detector has detected the failure and the recovery agent has automatically marked the node as unhealthy, Amazon EKS cordons the node and evicts the pods on it:

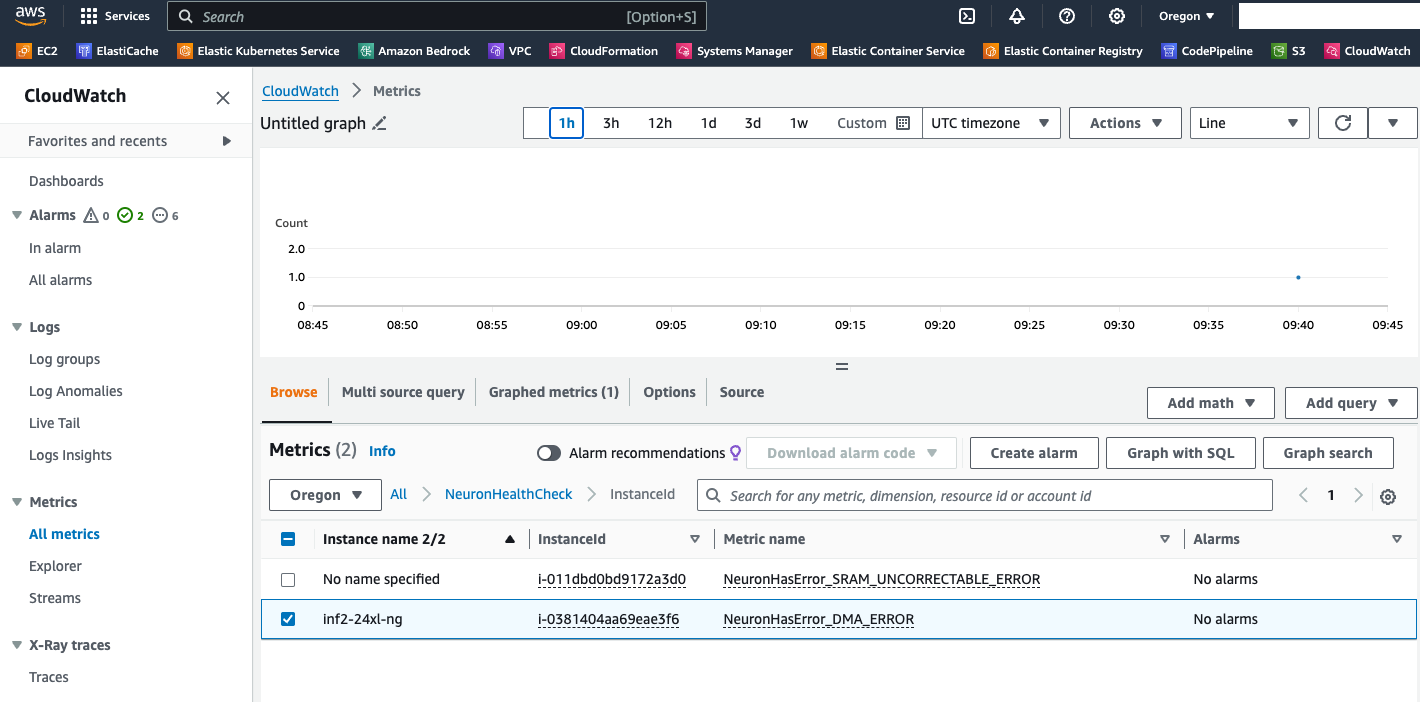

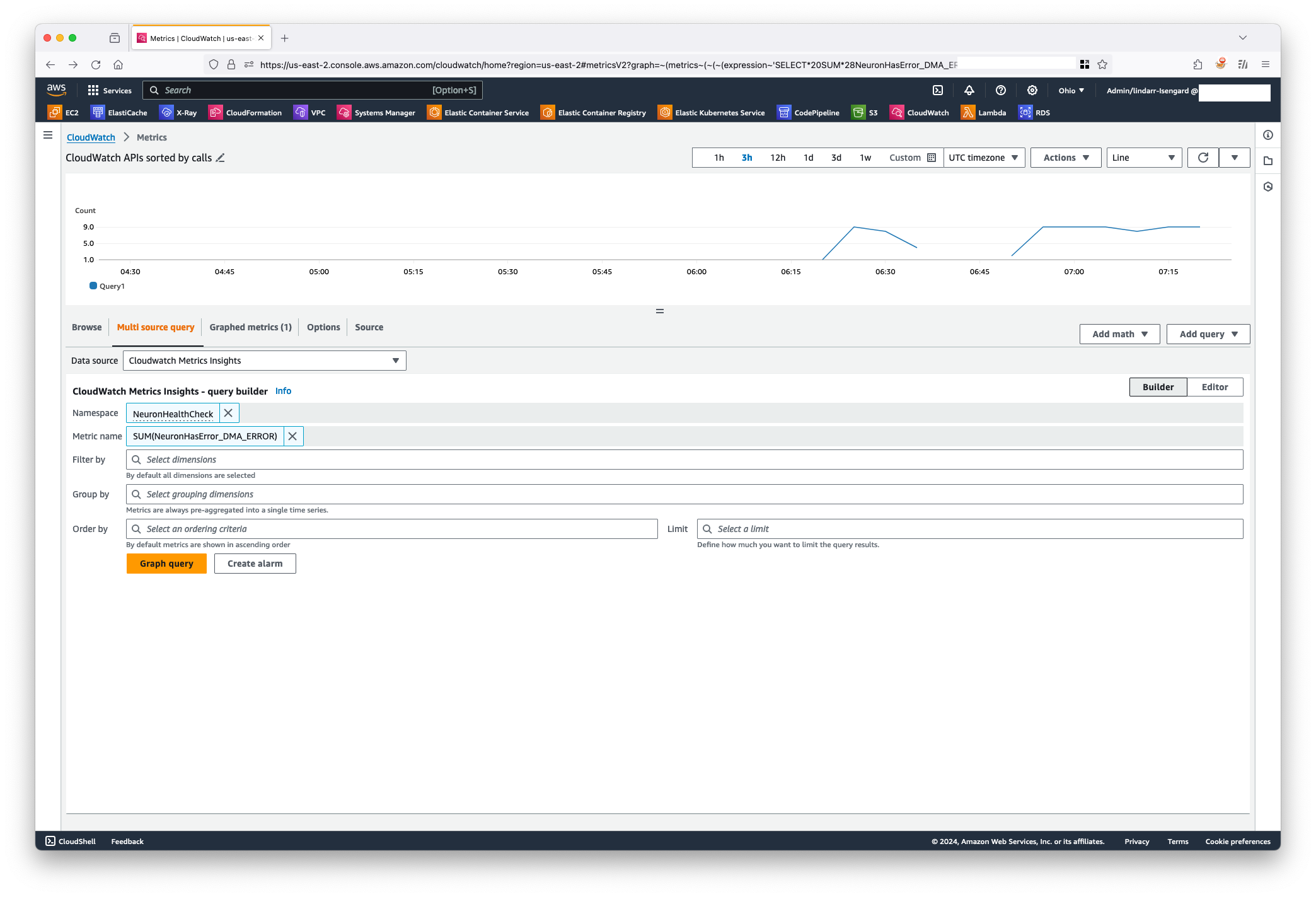
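The condition check done with kubectl describe node above can also be scripted. The following Python sketch inspects the JSON form of a node object (for example, the output of `kubectl get node <node-name> -o json`) and reports Neuron-related conditions. The `status.conditions` layout is standard Kubernetes. Whether the plugin reports NeuronHasError in the condition `type` or in its `reason` is an assumption here, so the sketch checks both.

```python
import json
from typing import List


def neuron_conditions(node: dict) -> List[dict]:
    """Return the node conditions whose type or reason mentions Neuron."""
    conditions = node.get("status", {}).get("conditions", [])
    return [
        c for c in conditions
        if "Neuron" in c.get("type", "") or "Neuron" in c.get("reason", "")
    ]


def has_neuron_error(node: dict) -> bool:
    """True if any active condition reports NeuronHasError (type or reason)."""
    for cond in neuron_conditions(node):
        flagged = (
            "NeuronHasError" in cond.get("type", "")
            or "NeuronHasError" in cond.get("reason", "")
        )
        if flagged and cond.get("status") == "True":
            return True
    return False


def load_node(node_json: str) -> dict:
    """Parse the JSON text of a node object."""
    return json.loads(node_json)
```

A script could save the kubectl output to a file, pass its contents to `load_node`, and then call `has_neuron_error` on the result.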

You can open the CloudWatch console and check the metrics for NeuronHealthCheck. You can see that the CloudWatch NeuronHasError_DMA_ERROR metric has the value 1.

After the replacement, you can see that a new worker node has been created:

Let's look at a real-world scenario in which a distributed training job is run using an MPI operator, as described in Llama-2 on Trainium, and an unrecoverable Neuron error occurs on one of the nodes. Before the plugin is deployed, the training job gets stuck, resulting in wasted time and computational costs. After the plugin is deployed, the node problem detector proactively removes the problematic node from the cluster. In your training scripts, save checkpoints periodically so that training resumes from the previous checkpoint.
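The checkpoint-and-resume pattern mentioned above can be sketched in Python with a toy training loop. This is illustrative only: a real job would use the checkpointing facilities of its training framework. The file naming, the `train` function, and the placeholder "loss" are all hypothetical.

```python
import json
import os
import tempfile
from typing import Optional


def save_checkpoint(ckpt_dir: str, step: int, state: dict) -> str:
    """Write the checkpoint atomically so a crash never leaves a partial file."""
    path = os.path.join(ckpt_dir, f"step_{step:08d}.json")
    fd, tmp_path = tempfile.mkstemp(dir=ckpt_dir, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump({"step": step, "state": state}, f)
    os.replace(tmp_path, path)  # atomic rename within the same directory
    return path


def load_latest_checkpoint(ckpt_dir: str) -> Optional[dict]:
    """Return the checkpoint with the highest step number, or None."""
    names = sorted(
        n for n in os.listdir(ckpt_dir)
        if n.startswith("step_") and n.endswith(".json")
    )
    if not names:
        return None
    with open(os.path.join(ckpt_dir, names[-1])) as f:
        return json.load(f)


def train(ckpt_dir: str, total_steps: int, every: int,
          fail_at: Optional[int] = None) -> int:
    """Toy loop: resume from the latest checkpoint, save every `every` steps."""
    ckpt = load_latest_checkpoint(ckpt_dir)
    step = ckpt["step"] if ckpt else 0
    while step < total_steps:
        step += 1
        if fail_at is not None and step == fail_at:
            raise RuntimeError(f"simulated node failure at step {step}")
        state = {"loss": 1.0 / step}  # placeholder for a real training step
        if step % every == 0:
            save_checkpoint(ckpt_dir, step, state)
    return step
```

If the loop saves every 11 steps and fails at step 86, the latest checkpoint on disk is step 77, and the next run picks up from there instead of from step 0.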

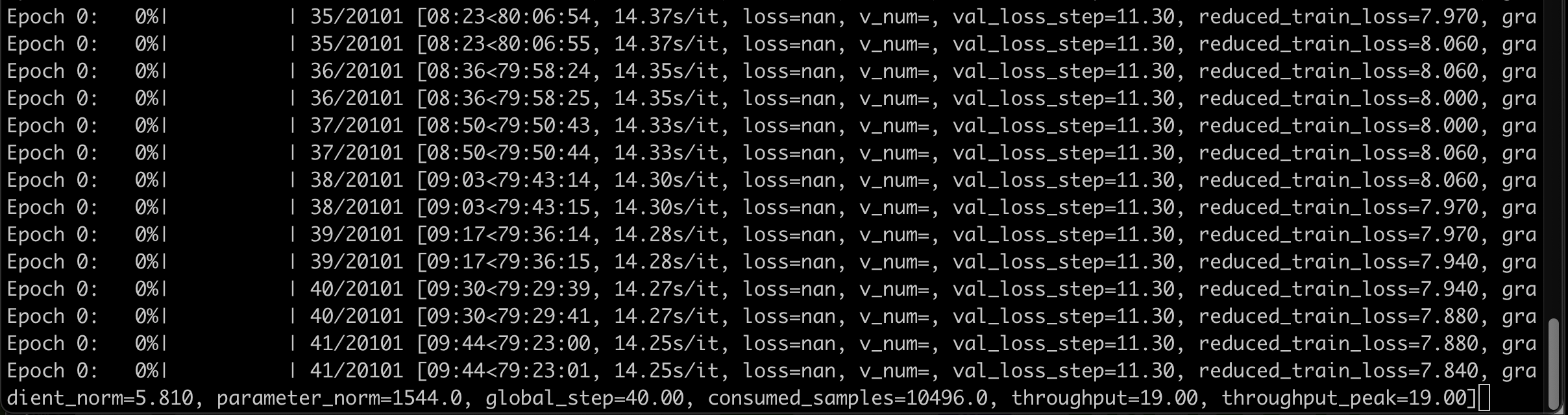

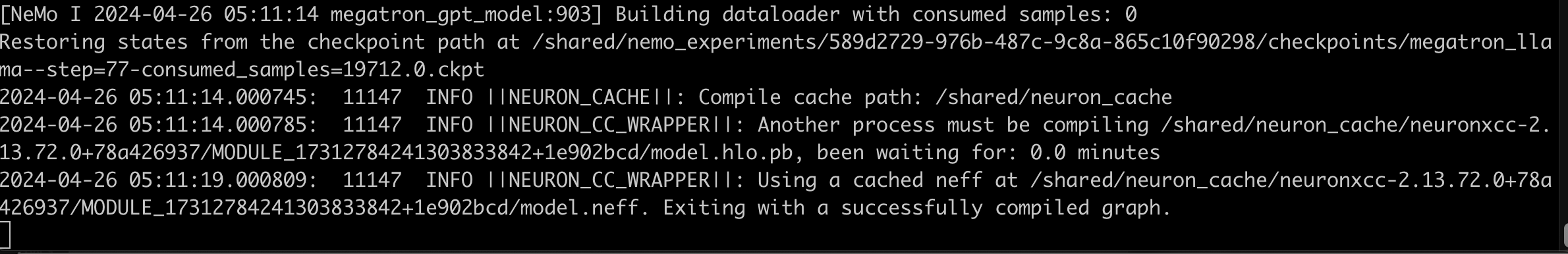

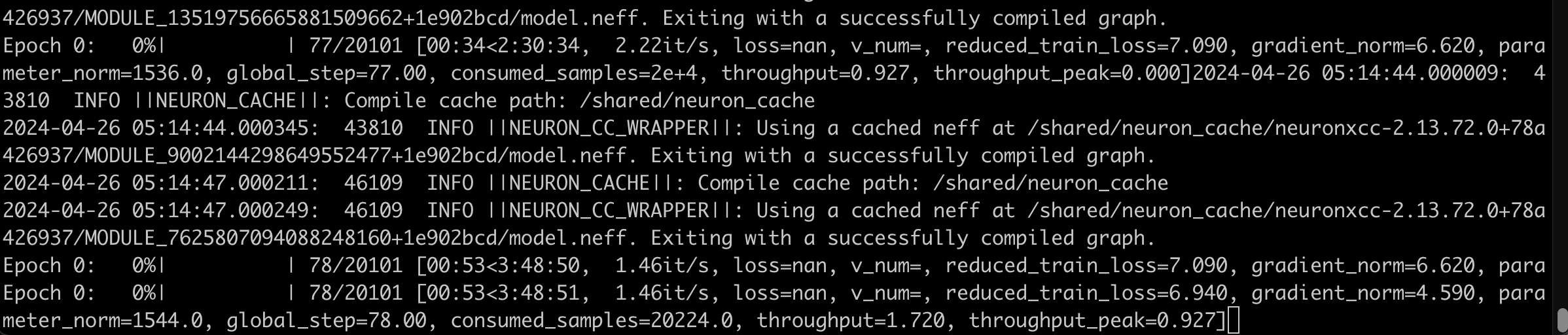

The following screenshot shows example logs from a distributed training job.

The training has started. (You can ignore loss=nan for now; it is a known issue and will be removed. For immediate use, refer to the reduced_train_loss metric.)

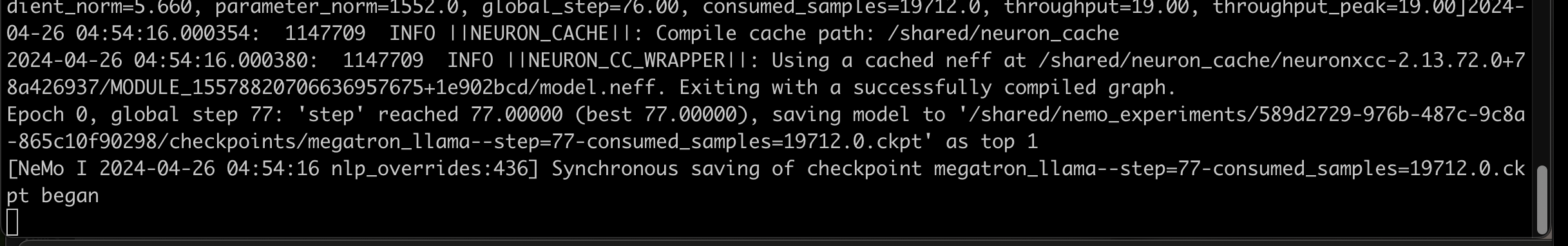

The following screenshot shows the checkpoint created at step 77.

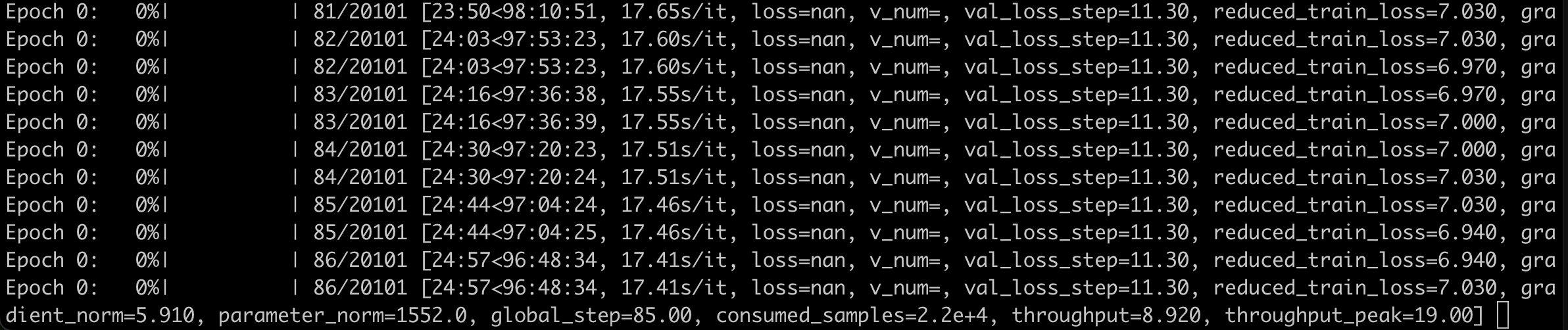

Training stopped after one of the nodes had a problem at step 86. The error was manually injected for testing purposes.

After the faulty node was detected and replaced by the Neuron plugin for node problem detection and recovery, the training process resumed at step 77, which was the last checkpoint.

Although Auto Scaling groups will stop unhealthy nodes, they may encounter issues that prevent replacement nodes from launching. In such cases, training jobs stall and require manual intervention. However, the stopped node does not incur any further charges on the associated EC2 instance.

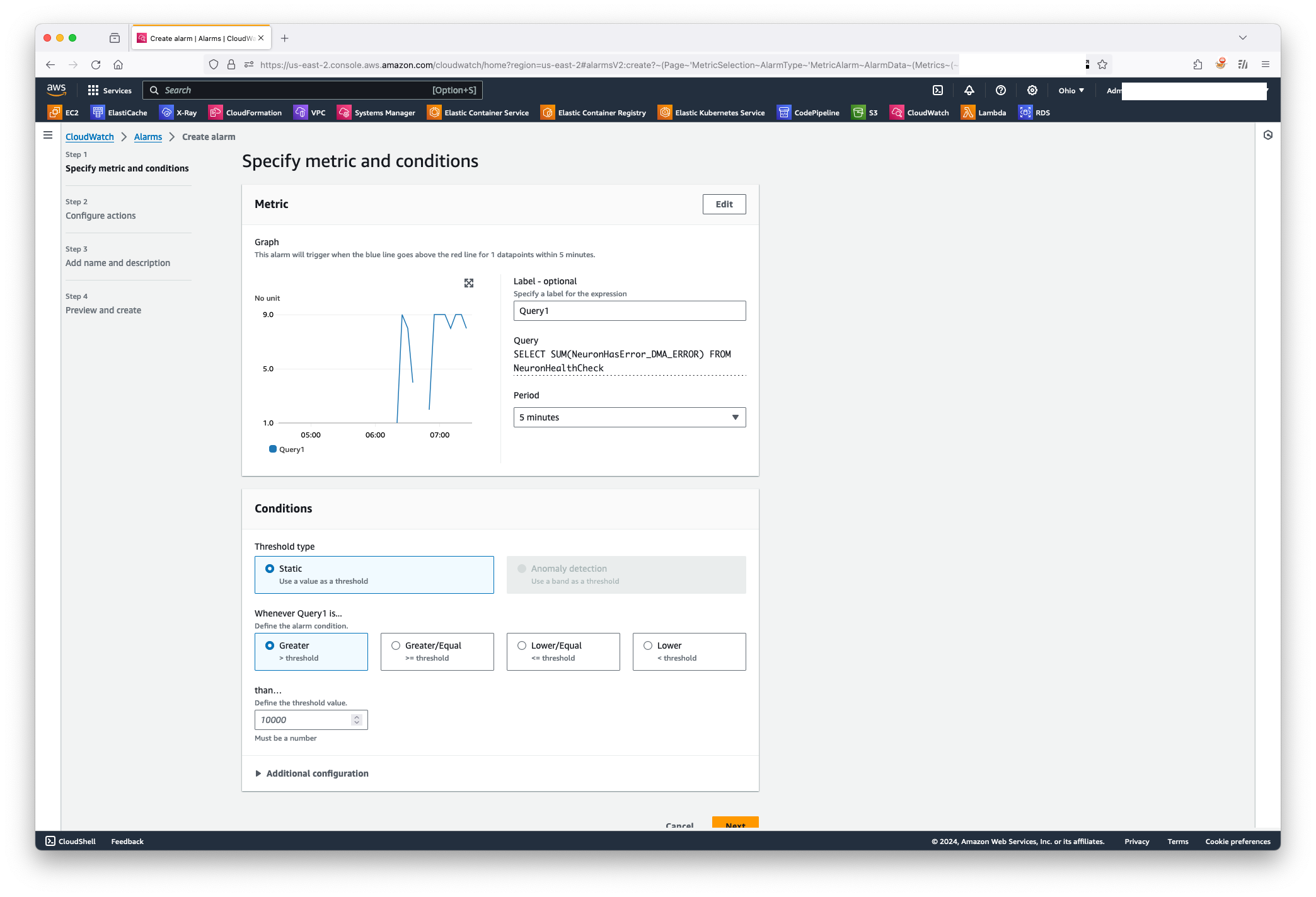

If you want to take custom actions beyond stopping instances, you can create CloudWatch alarms that watch the metrics NeuronHasError_DMA_ERROR, NeuronHasError_HANG_ON_COLLECTIVES, NeuronHasError_HBM_UNCORRECTABLE_ERROR, NeuronHasError_SRAM_UNCORRECTABLE_ERROR, and NeuronHasError_NC_UNCORRECTABLE_ERROR, and use a CloudWatch Metrics Insights query like SELECT AVG(NeuronHasError_DMA_ERROR) FROM NeuronHealthCheck to aggregate these values and evaluate an alarm. The following screenshots show an example.
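For readers who prefer to define such an alarm in code rather than in the console, the following Python sketch builds the keyword arguments for the boto3 CloudWatch `put_metric_alarm` call around the Metrics Insights query quoted above. The alarm name, period, threshold, and missing-data handling are assumptions chosen for illustration, and the helper name is hypothetical.

```python
from typing import Any, Dict


def metrics_insights_alarm(metric_name: str, alarm_name: str) -> Dict[str, Any]:
    """Build keyword arguments for CloudWatch put_metric_alarm."""
    query = f"SELECT AVG({metric_name}) FROM NeuronHealthCheck"
    return {
        "AlarmName": alarm_name,
        # Fire as soon as the aggregated value rises above zero.
        "ComparisonOperator": "GreaterThanThreshold",
        "Threshold": 0.0,
        "EvaluationPeriods": 1,
        # No data points means no errors were reported.
        "TreatMissingData": "notBreaching",
        "Metrics": [
            {
                "Id": "q1",
                "Expression": query,
                "Period": 60,
                "ReturnData": True,
            }
        ],
    }
```

With boto3 installed and credentials configured, the alarm could be created with `boto3.client("cloudwatch").put_metric_alarm(**metrics_insights_alarm("NeuronHasError_DMA_ERROR", "neuron-dma-error"))`. An `AlarmActions` entry (for example, an Amazon SNS topic ARN) would be added to trigger the custom action.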

Clean up

To clean up all resources provided for this post, run the cleanup script:

Conclusion

In this post, we showed how the Neuron problem detector and recovery DaemonSet for Amazon EKS works on EC2 instances powered by Trainium and AWS Inferentia. If you are running Neuron-based EC2 instances and using managed nodes or self-managed node groups, you can deploy the detector and recovery DaemonSet on your EKS cluster and benefit from improved reliability and fault tolerance of your machine learning training workloads in the event of a node failure.

About the authors

Harish Rao is a Senior Solutions Architect at AWS, specializing in large-scale distributed AI training and inference. He empowers customers to harness the power of AI to drive innovation and solve complex challenges. Outside of work, Harish embraces an active lifestyle, enjoying the tranquility of hiking, the intensity of racquetball, and the mental clarity of mindfulness practices.

Ziwen Ning is a Software Development Engineer at AWS. He is currently focused on improving the AI/ML experience by integrating AWS Neuron with containerized environments and Kubernetes. In his free time, he enjoys challenging himself with badminton, swimming, and other sports, and immersing himself in music.

Geeta Gharpur is a Senior Software Developer on Annapurna’s ML Engineering team. She focuses on running large-scale AI/ML workloads on Kubernetes. She lives in Sunnyvale, California and enjoys listening to Audible in her spare time.

Darren Lin is a Cloud Native Solutions Architect at AWS focusing on domains such as Linux, Kubernetes, Containers, Observability, and Open Source technologies. In his free time, he enjoys working out and having fun with his family.
