Image by author

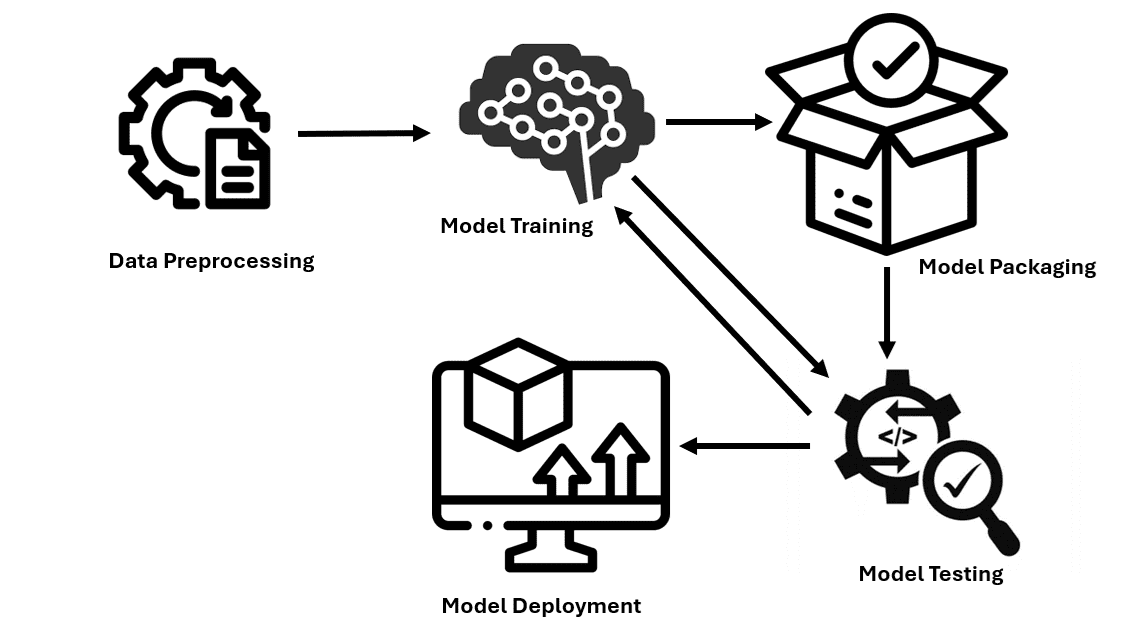

Model deployment is the process of integrating a trained model into a practical application. It involves setting up the required environment, defining how input data is passed to the model and how its results are returned, and making sure the model can analyze new data and return relevant predictions or classifications. Let's explore the process of deploying models to production.

Step 1: Data preprocessing

Handle missing values by imputing them (for example, with the column mean) or by removing the affected rows or columns. Convert categorical variables from qualitative to quantitative form using one-hot encoding or label encoding. Finally, normalize or standardize numerical features so that they share a common scale.
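The code that follows fits each transformer directly on one DataFrame. For deployment it is often convenient to bundle the same steps into a single scikit-learn `ColumnTransformer`, so that exactly the transformations learned from the training data are reapplied to new data at prediction time. The minimal sketch below assumes hypothetical columns named `age` and `city`; it shows mean imputation plus standardization for a numeric column and one-hot encoding for a categorical one.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical training data, for illustration only
train = pd.DataFrame({
    "age": [25.0, np.nan, 40.0, 35.0],
    "city": ["Paris", "Lima", "Paris", "Oslo"],
})

preprocess = ColumnTransformer(
    transformers=[
        # Numeric column: fill gaps with the training mean, then standardize
        ("num", Pipeline([("impute", SimpleImputer(strategy="mean")),
                          ("scale", StandardScaler())]), ["age"]),
        # Categorical column: one-hot encode; unseen categories become all zeros
        ("cat", OneHotEncoder(handle_unknown="ignore"), ["city"]),
    ],
    sparse_threshold=0,  # always return a dense NumPy array
)

# fit_transform learns the mean, scale, and category list from training data
X_train = preprocess.fit_transform(train)

# transform reuses what was learned; nothing is refitted on new data
new_rows = pd.DataFrame({"age": [np.nan], "city": ["Tokyo"]})
X_new = preprocess.transform(new_rows)

print(X_train.shape, X_new.shape)
```

Because `preprocess` can sit in front of a model inside a `Pipeline` and be serialized along with it, the serving code does not have to reimplement the preprocessing. For label (ordinal) encoding of features, scikit-learn provides `OrdinalEncoder`; `LabelEncoder` is intended for target labels.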

import pandas as pd
from sklearn.impute import SimpleImputer
from sklearn.preprocessing import OneHotEncoder, StandardScaler, MinMaxScaler

# Load your data
df = pd.read_csv('your_data.csv')

# Handle missing values: replace NaNs with the column mean
imputer_mean = SimpleImputer(strategy='mean')
df['numeric_column'] = imputer_mean.fit_transform(df[['numeric_column']]).ravel()

# Encode categorical variables with one-hot encoding
one_hot_encoder = OneHotEncoder()
encoded_features = one_hot_encoder.fit_transform(df[['categorical_column']]).toarray()
encoded_df = pd.DataFrame(encoded_features,
                          columns=one_hot_encoder.get_feature_names_out(['categorical_column']),
                          index=df.index)
# Replace the original categorical column with its one-hot columns
df = pd.concat([df.drop(columns=['categorical_column']), encoded_df], axis=1)

# Standardization (zero mean, unit variance)
scaler = StandardScaler()
df['standardized_column'] = scaler.fit_transform(df[['numeric_column']]).ravel()

# Normalization (scaling to the range [0, 1])
normalizer = MinMaxScaler()
df['normalized_column'] = normalizer.fit_transform(df[['numeric_column']]).ravel()

Step 2: Training and evaluation of the model

Split the data into a training set and a test set. Choose a model and train it on the training data. Tune its hyperparameters to find the best-performing configuration, then use cross-validation, which evaluates the model on different subsets of the data, to verify that its performance is stable.
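The training code that follows depends on a `data.csv` file. For a version you can run as-is, here is a sketch on synthetic data from scikit-learn's `make_classification` (an assumption standing in for your dataset) with a deliberately small grid. It also scores the tuned model once on the held-out test split, which the code that follows creates but does not use.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import GridSearchCV, cross_val_score, train_test_split

# Synthetic stand-in for a real, already-preprocessed dataset
X, y = make_classification(n_samples=300, n_features=8, n_informative=4, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

# Small grid so the example finishes quickly
param_grid = {"n_estimators": [50, 100], "max_depth": [None, 5]}
grid = GridSearchCV(RandomForestClassifier(random_state=42), param_grid,
                    cv=5, scoring="accuracy")
grid.fit(X_train, y_train)
best_model = grid.best_estimator_

# Stability check across folds of the training data
cv_scores = cross_val_score(best_model, X_train, y_train, cv=5, scoring="accuracy")

# One final score on data never used for training or tuning
test_accuracy = accuracy_score(y_test, best_model.predict(X_test))

print("Best parameters:", grid.best_params_)
print(f"CV mean accuracy: {cv_scores.mean():.3f}")
print(f"Held-out test accuracy: {test_accuracy:.3f}")
```

Cross-validation scores computed on the same training data used for tuning tend to be optimistic, because the hyperparameters were chosen to do well on those folds; the single held-out test score is the less biased estimate.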

import pandas as pd

from sklearn.model_selection import train_test_split, GridSearchCV, cross_val_score

from sklearn.ensemble import RandomForestClassifier

from sklearn.metrics import accuracy_score, precision_score, recall_score

from sklearn.impute import SimpleImputer

from sklearn.preprocessing import OneHotEncoder, StandardScaler, MinMaxScaler

# Load your data

df = pd.read_csv('data.csv')

# Split data into training and testing sets

x = df.drop(columns=('target_column'))

y = df('target_column')

X_train, X_test, y_train, y_test = train_test_split(x, y, test_size=0.2, random_state=42)

# Hyperparameter tuning

param_grid = {

'n_estimators': (50, 100, 200),

'max_depth': (None, 10, 20, 30),

'min_samples_split': (2, 5, 10)

}

grid_search = GridSearchCV(estimator=RandomForestClassifier(random_state=42),

param_grid=param_grid,

cv=5,

scoring='accuracy',

n_jobs=-1)

# Fit the grid search to the data

grid_search.fit(X_train, y_train)

# Get the best model from the grid search

best_model = grid_search.best_estimator_

# Cross-validation to assess model generalization and robustness

cv_scores = cross_val_score(best_model, X_train, y_train, cv=5, scoring='accuracy')

print(f"Cross-validation scores: {cv_scores}")

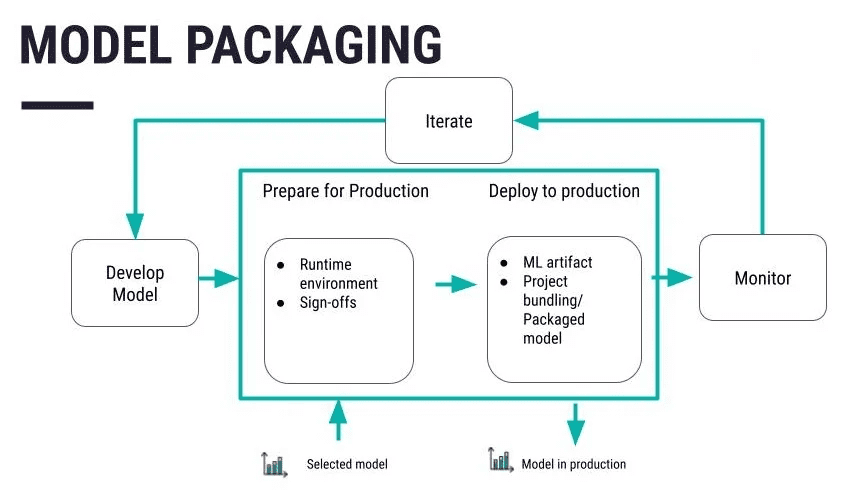

print(f"Mean cross-validation score: {cv_scores.mean()}")Step 3: Model packaging

Source: https://knowledge.dataiku.com/latest/mlops-o16n/architecture/concept-model-packaging.html

Serialize the trained model into a format that can be stored or transferred to other systems. Pickle is a common choice; joblib and ONNX are alternatives, depending on your requirements. Once you have trained and tuned your model, save it to a file or a database. Version control platforms such as Git help you track changes over time. Apply security measures such as encrypting the data both at rest and in transit so that unauthorized users cannot access it.
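Pickle-based files, including those written by joblib, can execute arbitrary code when loaded, so a deployment should only load model files it trusts. One simple safeguard, sketched below under the assumption that you store a SHA-256 digest alongside the artifact (for example in a model registry), is to verify the file's checksum before loading it. The sketch trains a throwaway `LogisticRegression` on synthetic data purely so it runs on its own, and checks that the reloaded model predicts exactly like the original.

```python
import hashlib
import tempfile
from pathlib import Path

import joblib
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression


def sha256_of(path):
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


# Throwaway model standing in for the tuned model from Step 2
X, y = make_classification(n_samples=100, n_features=4, random_state=0)
model = LogisticRegression().fit(X, y)

with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "model.pkl"
    joblib.dump(model, path)
    expected_digest = sha256_of(path)  # record this next to the artifact

    # Later, on the serving side: verify before loading
    if sha256_of(path) != expected_digest:
        raise RuntimeError("model.pkl checksum mismatch; refusing to load")
    restored = joblib.load(path)

# The round trip should not change the model's behavior
same_predictions = np.array_equal(model.predict(X), restored.predict(X))
print("Digest:", expected_digest)
print("Predictions identical after reload:", same_predictions)
```

A checksum detects accidental or unauthorized changes to the file, but it does not make an untrusted pickle safe; formats such as ONNX avoid executing code at load time.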

import joblib

# Save the tuned model from Step 2 to disk
joblib.dump(best_model, 'model.pkl')

Put your serialized model in a container such as Docker. This packages the model together with its dependencies, making it portable and easier to move between environments.

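# Dockerfile
FROM python:3.8-slim

WORKDIR /app

# Install dependencies first so this layer is cached between builds
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

# Copy the serialized model and the serving script
COPY model.pkl app.py ./

EXPOSE 5000
CMD ["python", "app.py"]

Step 4: Setting up the environment for deployment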

Cloud services such as AWS, Azure, or Google Cloud are a common choice for the infrastructure and resources a deployment needs. Set up the components required to host the model, such as servers and databases, using the infrastructure services of the platform you choose. The commands below create a basic virtual machine on each platform.

AWS: Configure an EC2 instance using the AWS CLI

aws ec2 run-instances \
  --image-id ami-0abcdef1234567890 \
  --count 1 \
  --instance-type t2.micro \
  --key-name MyKeyPair \
  --security-group-ids sg-0abcdef1234567890 \
  --subnet-id subnet-0abcdef1234567890

Azure: Configure a virtual machine using the Azure CLI

az vm create \
  --resource-group myResourceGroup \
  --name myVM \
  --image Ubuntu2204 \
  --admin-username azureuser \
  --generate-ssh-keys

Google Cloud: Configure a Compute Engine instance using the Google Cloud CLI

gcloud compute instances create my-instance \
  --zone=us-central1-a \
  --machine-type=e2-medium \
  --subnet=default \
  --network-tier=PREMIUM \
  --maintenance-policy=MIGRATE \
  --image-family=debian-12 \
  --image-project=debian-cloud \
  --boot-disk-size=10GB \
  --boot-disk-type=pd-standard \
  --boot-disk-device-name=my-instance

Step 5: Creating the deployment pipeline

Automate deployment with a CI/CD tool such as Jenkins, GitLab CI/CD, or GitHub Actions. Define the sequence of stages the pipeline should run, for example build, test, and deploy, in a Jenkinsfile for Jenkins or in a YAML configuration file for GitLab CI/CD or GitHub Actions.
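The Test stage in the Jenkinsfile that follows runs `python -m unittest discover`, which by default collects test files whose names match `test*.py`. Below is a hypothetical `test_model.py` that acts as a quality gate: if accuracy drops below a threshold, the stage fails and the Deploy stage never runs. The synthetic data and the `MIN_ACCURACY` value of 0.7 are assumptions; a real pipeline would load the project's own model and held-out data and set the threshold from a known baseline.

```python
# test_model.py: hypothetical quality-gate tests for the pipeline's Test stage
import unittest

import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

MIN_ACCURACY = 0.7  # hypothetical threshold; derive it from your own baseline


class TestModelQualityGate(unittest.TestCase):
    @classmethod
    def setUpClass(cls):
        # Synthetic stand-in data; a real pipeline would load its held-out set
        X, y = make_classification(n_samples=500, n_features=10,
                                   n_informative=5, random_state=0)
        cls.X_train, cls.X_test, cls.y_train, cls.y_test = train_test_split(
            X, y, test_size=0.2, random_state=0
        )
        cls.model = RandomForestClassifier(n_estimators=50, random_state=0)
        cls.model.fit(cls.X_train, cls.y_train)

    def test_accuracy_above_threshold(self):
        acc = accuracy_score(self.y_test, self.model.predict(self.X_test))
        self.assertGreaterEqual(acc, MIN_ACCURACY)

    def test_predictions_have_expected_shape_and_labels(self):
        preds = self.model.predict(self.X_test)
        self.assertEqual(preds.shape, (len(self.X_test),))
        # Every predicted label must be one the model saw during training
        self.assertTrue(set(np.unique(preds)).issubset(set(np.unique(self.y_train))))
```

No `unittest.main()` call is needed, because `python -m unittest discover` finds and runs the test class on its own.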

// Jenkinsfile: CI/CD pipeline with build, test, and deploy stages
pipeline {
    agent any
    stages {
        stage('Build') {
            steps {
                sh 'python setup.py build'
            }
        }
        stage('Test') {
            steps {
                sh 'python -m unittest discover'
            }
        }
        stage('Deploy') {
            steps {
                sh 'docker build -t mymodel:latest .'
                sh 'docker run -d -p 5000:5000 mymodel:latest'
            }
        }
    }
}

Step 6: Test the model

Run tests to verify that the deployed model behaves as expected. Compare its predictions with the known outcomes in a held-out test set, and check that the model generalizes well to data it has not seen before. Choose evaluation metrics that suit the task, such as accuracy, precision, and recall.
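The evaluation code that follows passes `average="weighted"` to `precision_score` and `recall_score`. A small worked example with hand-checkable, hypothetical labels shows what that averaging does compared with `average="macro"`, and why weighted recall always equals accuracy: weighting each class's recall by its share of samples simply counts all correct predictions over all samples.

```python
from sklearn.metrics import accuracy_score, precision_score, recall_score

# Hypothetical 3-class labels, chosen so the arithmetic is easy to follow
y_true = [0, 0, 1, 1, 1, 2]
y_pred = [0, 1, 1, 1, 0, 2]

acc = accuracy_score(y_true, y_pred)  # 4 of 6 correct = 4/6

# Per-class precision: class 0 -> 1/2, class 1 -> 2/3, class 2 -> 1/1
# Weighted by support (2, 3, 1 samples): (2*1/2 + 3*2/3 + 1*1) / 6 = 4/6
prec_weighted = precision_score(y_true, y_pred, average="weighted")
# Macro: plain mean over classes: (1/2 + 2/3 + 1) / 3 = 13/18
prec_macro = precision_score(y_true, y_pred, average="macro")

# Per-class recall: 1/2, 2/3, 1/1; weighted: (2*1/2 + 3*2/3 + 1*1) / 6 = 4/6
rec_weighted = recall_score(y_true, y_pred, average="weighted")

print(f"accuracy={acc:.3f}, weighted precision={prec_weighted:.3f}, "
      f"macro precision={prec_macro:.3f}, weighted recall={rec_weighted:.3f}")
```

Macro averaging gives every class equal influence, which makes poor performance on a rare class more visible; weighted averaging reflects how the model does on the class mix in the test data.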

# Import necessary libraries
import joblib
import pandas as pd
from sklearn.metrics import accuracy_score, precision_score, recall_score

# Load the serialized model from Step 3
best_model = joblib.load('model.pkl')

# Load your test data
test_df = pd.read_csv('your_test_data.csv')
X_test = test_df.drop(columns=['target_column'])
y_test = test_df['target_column']

# Predict outcomes on the test set
y_pred_test = best_model.predict(X_test)

# Evaluate performance metrics (weighted averages support multiclass targets)
test_accuracy = accuracy_score(y_test, y_pred_test)
test_precision = precision_score(y_test, y_pred_test, average="weighted")
test_recall = recall_score(y_test, y_pred_test, average="weighted")

# Print performance metrics
print(f"Test Set Accuracy: {test_accuracy}")
print(f"Test Set Precision: {test_precision}")
print(f"Test Set Recall: {test_recall}")

Step 7: Monitoring and maintenance

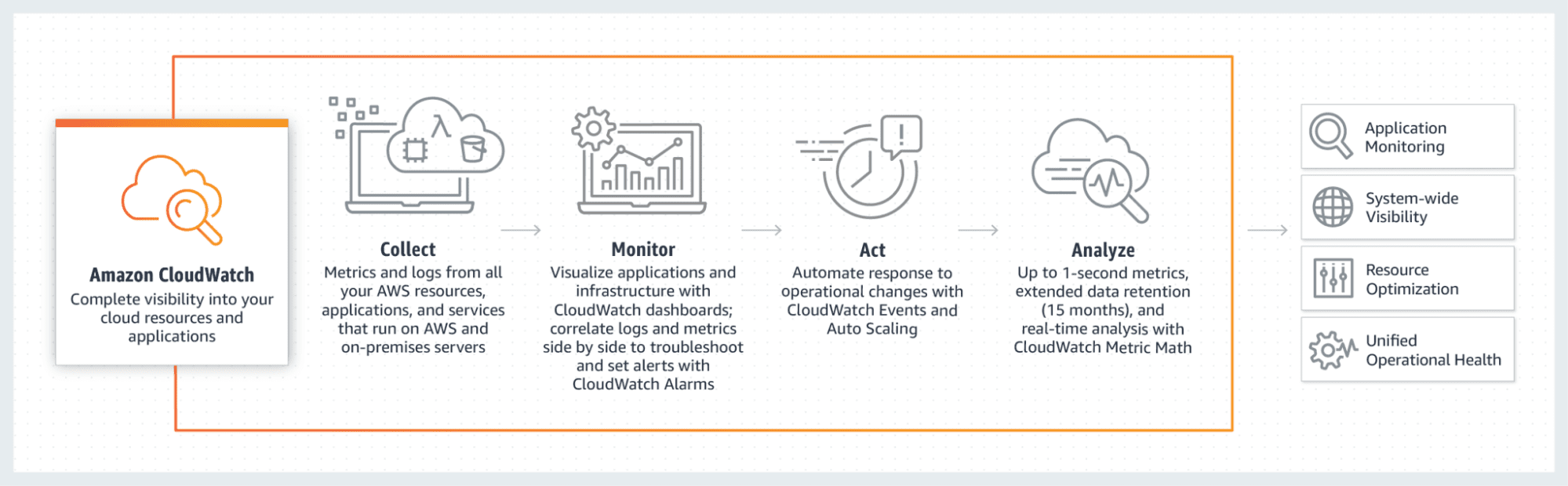

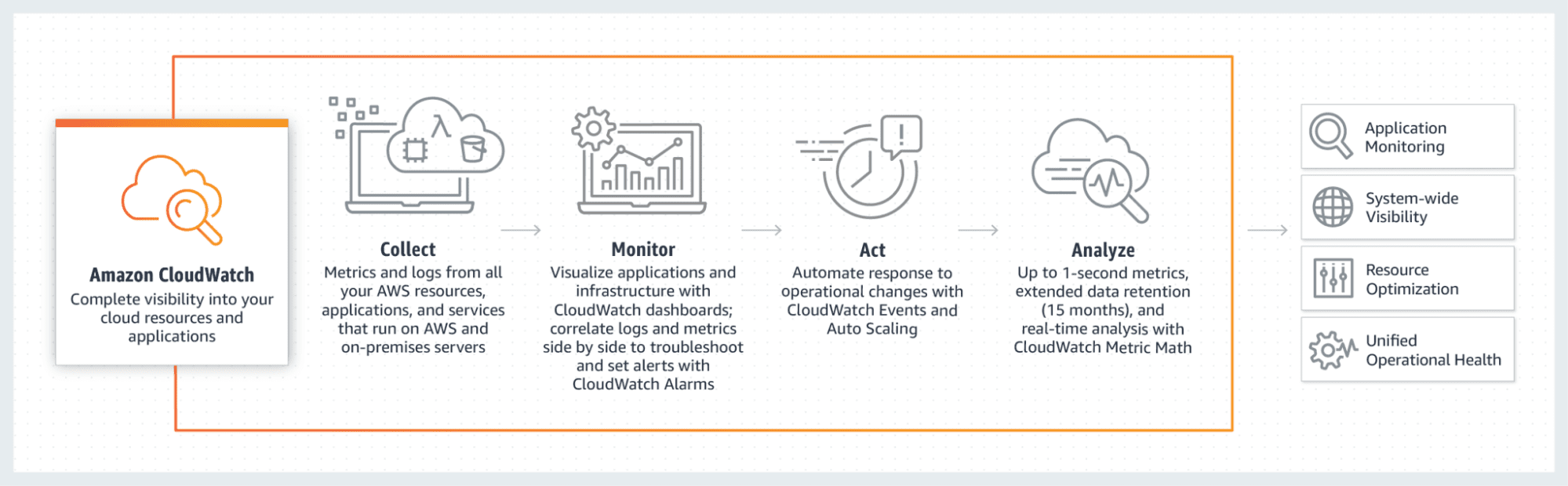

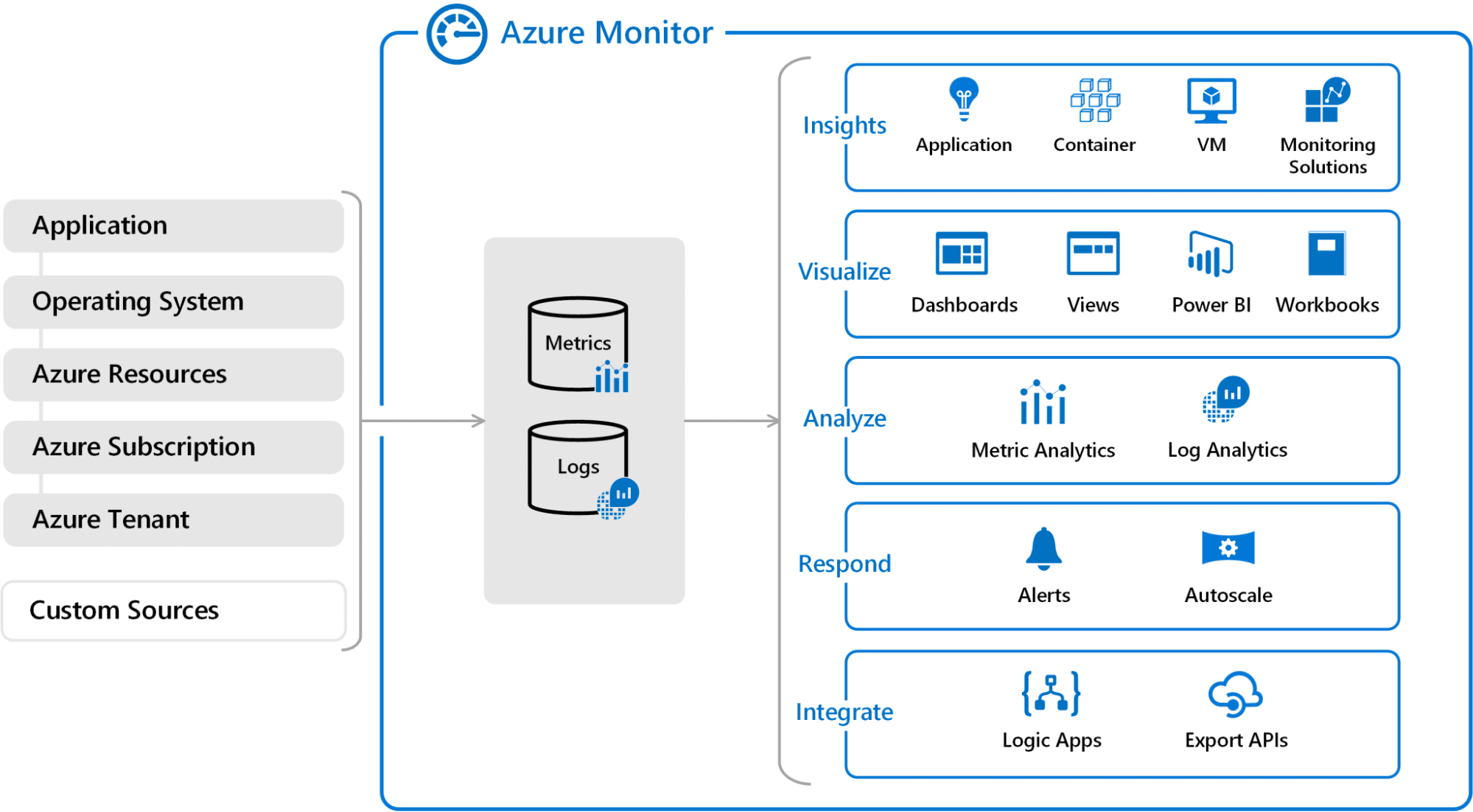

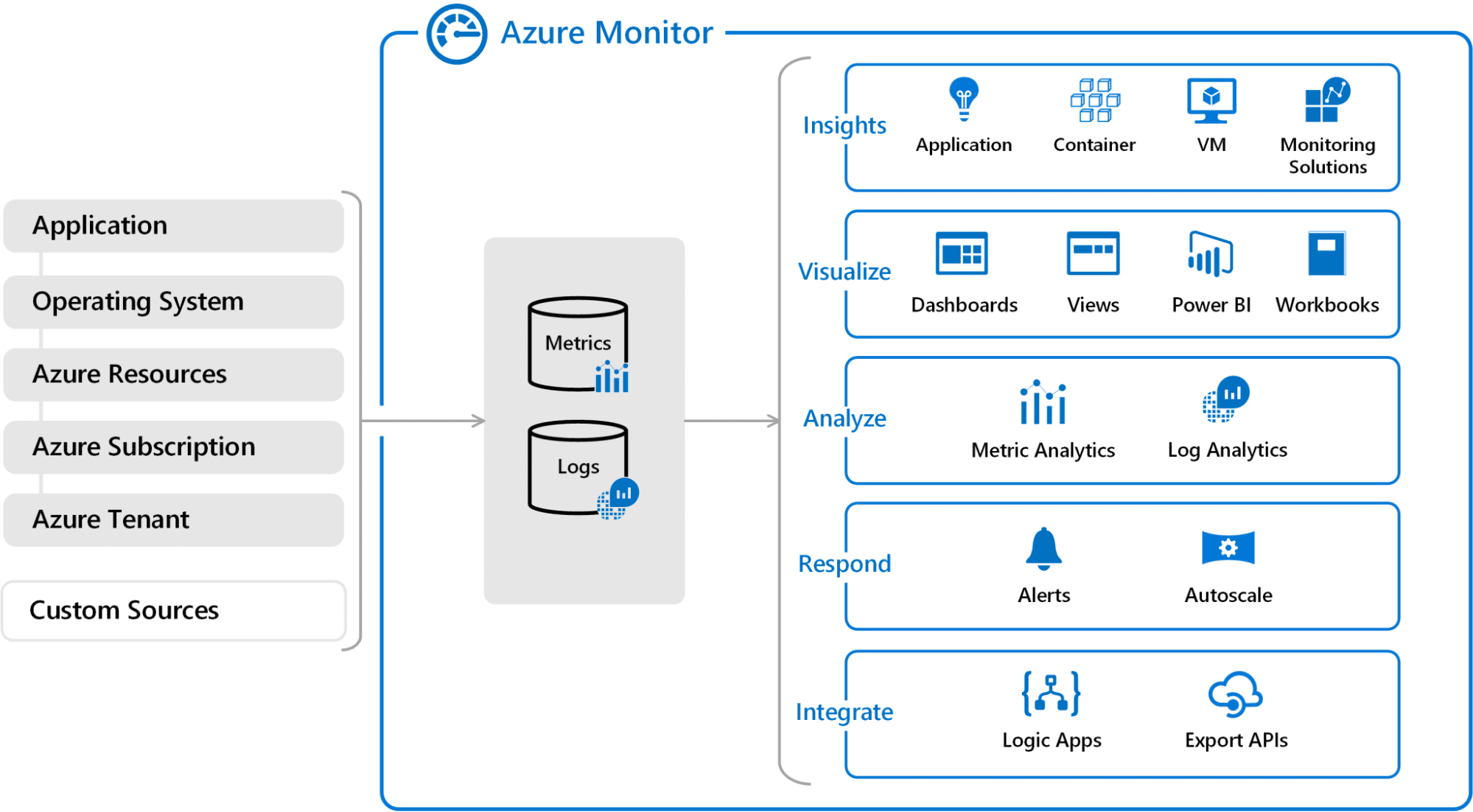

Monitor the deployed model and its infrastructure with tools such as AWS CloudWatch, Azure Monitor, or Google Cloud Monitoring so that errors and performance problems are caught early. The collected metrics also show where the model needs to be retrained or improved over time.
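The CLI examples that follow create alarms on CPU utilization, which tracks the health of the server but not whether the model's inputs still resemble its training data. As a swapped-in illustration that is not part of the original steps, here is a minimal data-drift check for one numeric feature using SciPy's two-sample Kolmogorov-Smirnov test (`scipy.stats.ks_2samp`). The `alpha` of 0.01 and the synthetic "live" samples are assumptions.

```python
import numpy as np
from scipy.stats import ks_2samp


def detect_drift(reference, live, alpha=0.01):
    """Compare a feature's live values with its training values.

    Returns (drifted, statistic, p_value). A p-value below `alpha` means the
    two samples are unlikely to come from the same distribution.
    """
    result = ks_2samp(reference, live)
    return bool(result.pvalue < alpha), float(result.statistic), float(result.pvalue)


rng = np.random.default_rng(0)
reference = rng.normal(loc=0.0, scale=1.0, size=1_000)     # stand-in for a training feature
live_same = reference.copy()                               # hypothetical: nothing changed
live_shifted = rng.normal(loc=2.0, scale=1.0, size=1_000)  # hypothetical: the mean moved

drifted_ok, stat_ok, _ = detect_drift(reference, live_same)
drifted_shift, stat_shift, p_shift = detect_drift(reference, live_shifted)

print(f"identical data: drifted={drifted_ok}, KS statistic={stat_ok:.3f}")
print(f"shifted data:   drifted={drifted_shift}, KS statistic={stat_shift:.3f}, p={p_shift:.2e}")
```

In practice a check like this would run on a schedule for each feature, and its result could be published as a custom metric (for example with `aws cloudwatch put-metric-data`) so that alarms like the ones below can fire on drift as well as on CPU load. With very large samples, tiny and harmless shifts also become statistically significant, so the KS statistic itself is often thresholded too.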

AWS CloudWatch

aws cloudwatch put-metric-alarm --alarm-name CPUAlarm --metric-name CPUUtilization \
  --namespace AWS/EC2 --statistic Average --period 300 --threshold 70 \
  --comparison-operator GreaterThanThreshold --dimensions "Name=InstanceId,Value=i-1234567890abcdef0" \
  --evaluation-periods 2 --alarm-actions arn:aws:sns:us-east-1:123456789012:my-sns-topic

Source: https://blogs.vmware.com/management/2021/03/cloud-services-aws-cloudwatch-azure-monitor.html

Azure Monitor

az monitor metrics alert create --name 'CPU Alert' --resource-group myResourceGroup \
  --scopes /subscriptions/{subscription-id}/resourceGroups/{resource-group-name}/providers/Microsoft.Compute/virtualMachines/{vm-name} \
  --condition "avg Percentage CPU > 80" --description 'Alert if CPU usage exceeds 80%'

Source: https://blogs.vmware.com/management/2021/03/cloud-services-aws-cloudwatch-azure-monitor.html

Conclusion

This tutorial covered the key steps for deploying a machine learning model. By following them, you can turn a trained model into a usable, easily deployable application. From data preprocessing and model training to infrastructure configuration, testing, and monitoring, you now know how to take your machine learning work from theory to practice.

Jayita Gulati is a machine learning enthusiast and technical writer driven by her passion for building machine learning models. She has a master's degree in Computer Science from the University of Liverpool.
