Amazon SageMaker Canvas now supports deploying machine learning (ML) models to real-time inference endpoints, allowing you to take your ML models to production and drive actions based on ML-based insights. SageMaker Canvas is a no-code workspace that enables citizen data scientists and analysts to generate accurate machine learning predictions for their business needs.

Until now, SageMaker Canvas provided the ability to evaluate an ML model, generate bulk predictions, and run what-if analyses within its interactive workspace. Now you can also deploy models to Amazon SageMaker endpoints for real-time inference, making it easy to consume model predictions and drive actions outside of the SageMaker Canvas workspace. Deploying ML models directly from SageMaker Canvas eliminates the need to manually export, configure, test, and deploy them to production, which reduces complexity and saves time. It also makes operationalizing ML models more accessible, because no code is required.

In this post, we’ll walk you through the process of deploying a model in SageMaker Canvas to a real-time endpoint.

Solution Overview

For our use case, we take on the role of a business user in the marketing department of a mobile operator, and we have successfully built an ML model in SageMaker Canvas to identify customers at potential risk of churn. Encouraged by the predictions generated by our model, we now want to move it from our development environment to production. To streamline this process, we deploy the model directly from SageMaker Canvas, eliminating the need to manually export, configure, test, and deploy ML models to production. This reduces complexity, saves time, and makes getting ML models up and running more accessible, without the need to write code.

The workflow steps are as follows:

- Upload a new data set with the current customer population to SageMaker Canvas. For the full list of supported data sources, see Import data into Canvas.

- Create ML models and analyze their performance metrics. For instructions, see Creating a custom model and Evaluating the performance of your model in Amazon SageMaker Canvas.

- Deploy the approved model version as an endpoint for real-time inference.

You can perform these steps in SageMaker Canvas without writing a single line of code.

Prerequisites

For this tutorial, make sure the following prerequisites are met:

- To deploy model versions to SageMaker endpoints, the SageMaker Canvas administrator must grant the necessary permissions to the SageMaker Canvas user. The administrator can manage these permissions in the SageMaker domain that hosts your SageMaker Canvas application. For more information, see Managing permissions in Canvas.

- Implement the prerequisites mentioned in Predict churn with code-free machine learning using Amazon SageMaker Canvas.

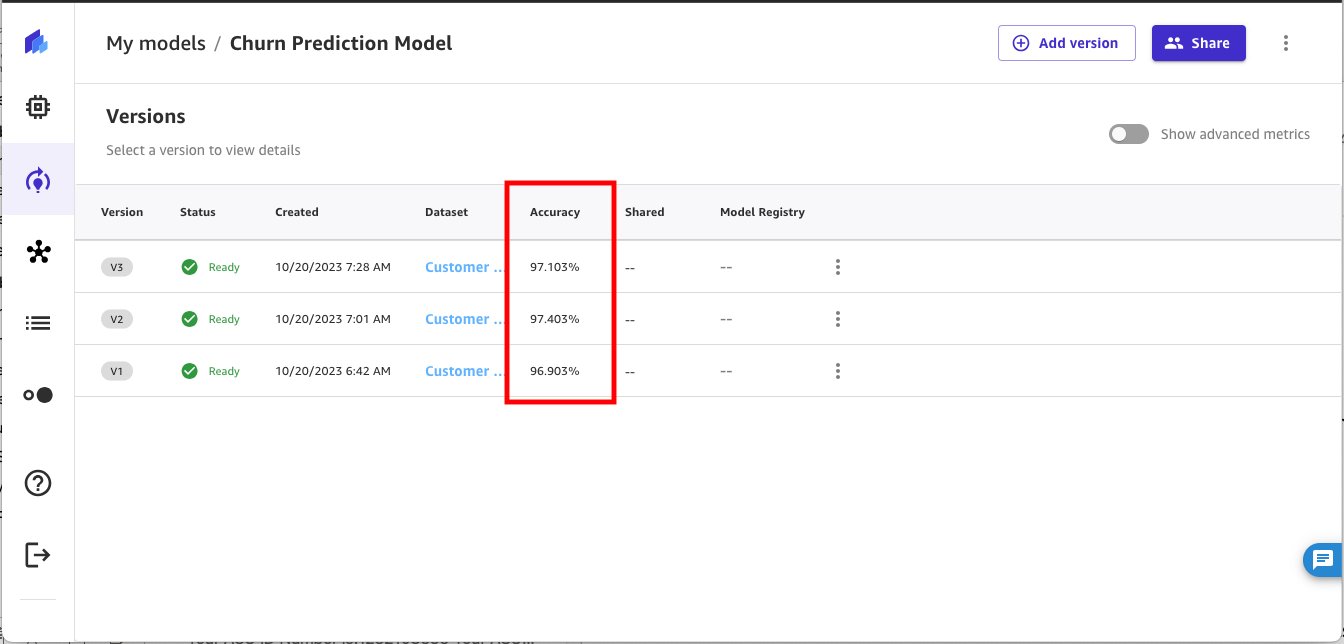

You should now have three versions of the model trained with historical churn prediction data in Canvas:

- V1 trained with all 21 features and fast build configuration with a model score of 96.903%

- V2 trained with 19 features (phone and status features removed) and fast build configuration, with an improved model score of 97.403%

- V3 trained with standard build configuration with model score of 97.103%

Use the customer churn prediction model

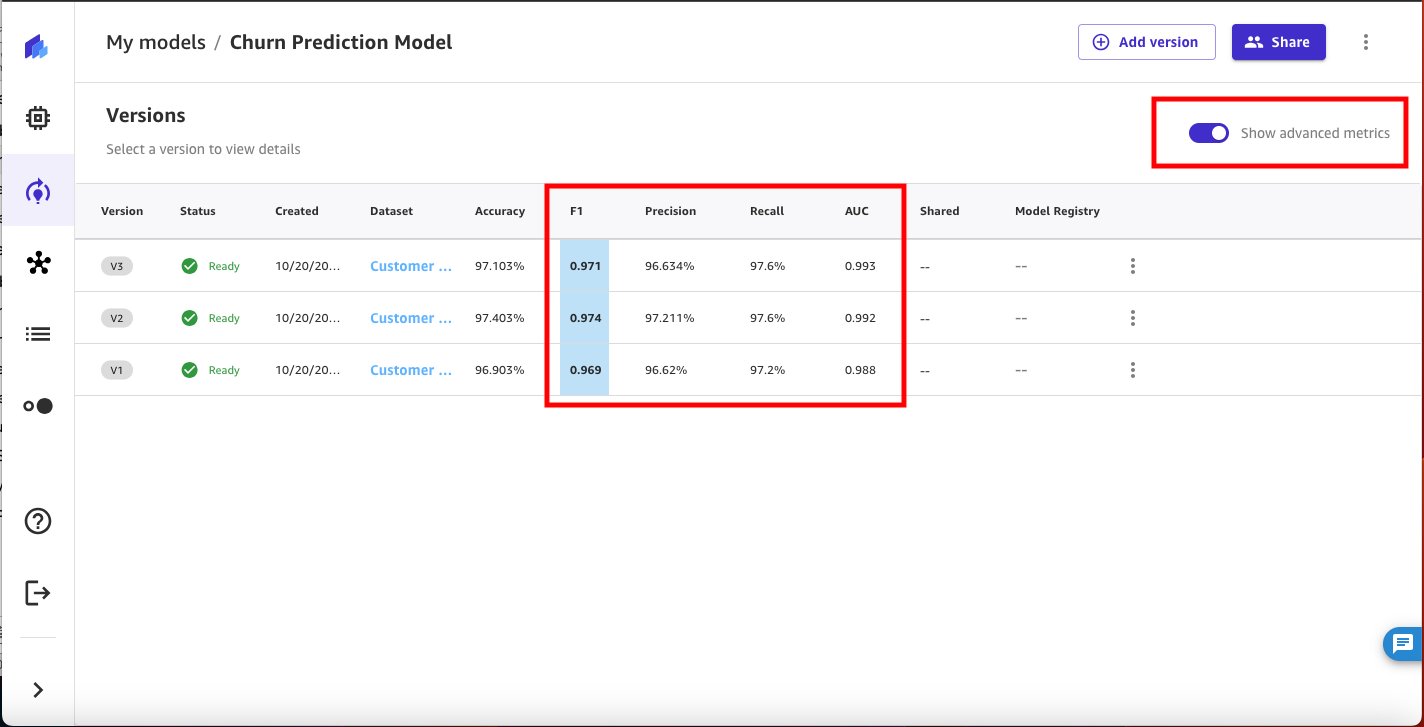

Turn on Show advanced metrics on the model details page and review the objective metrics associated with each model version so you can select the best-performing model to deploy to SageMaker as an endpoint.

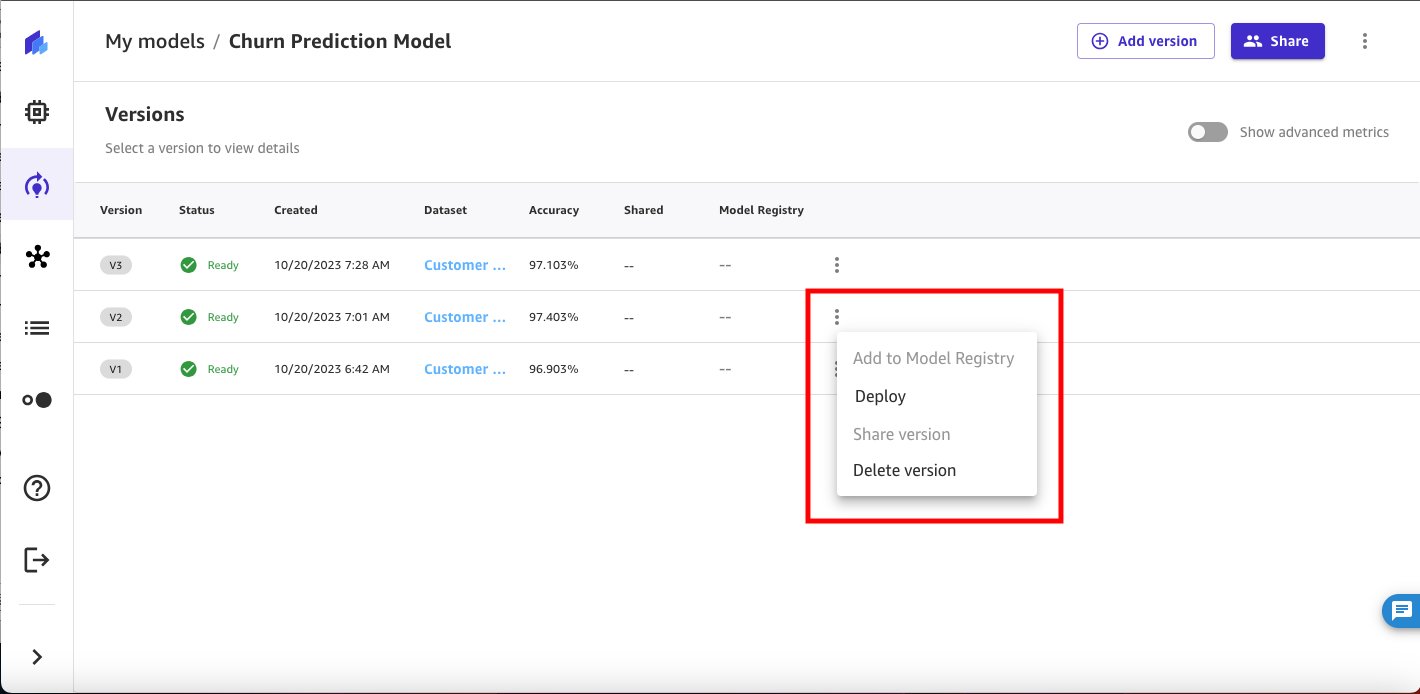

Based on performance metrics, we select version 2 to deploy.

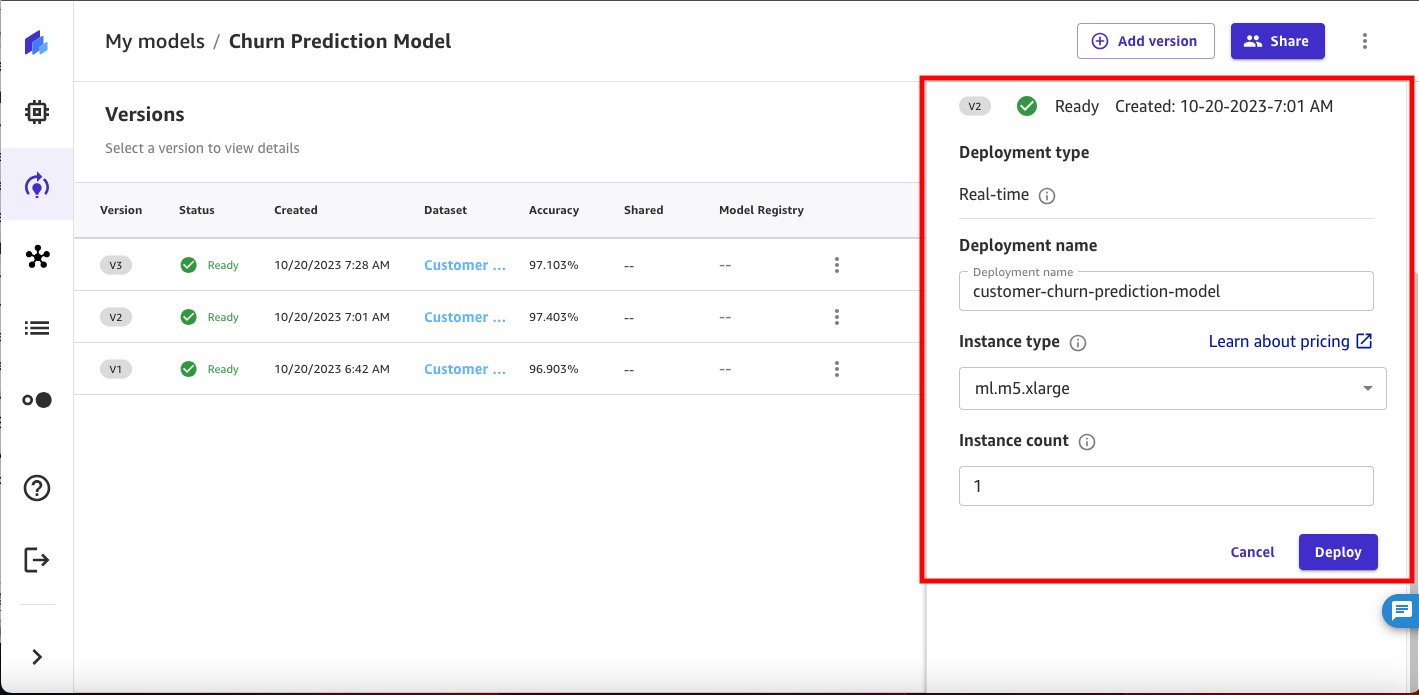

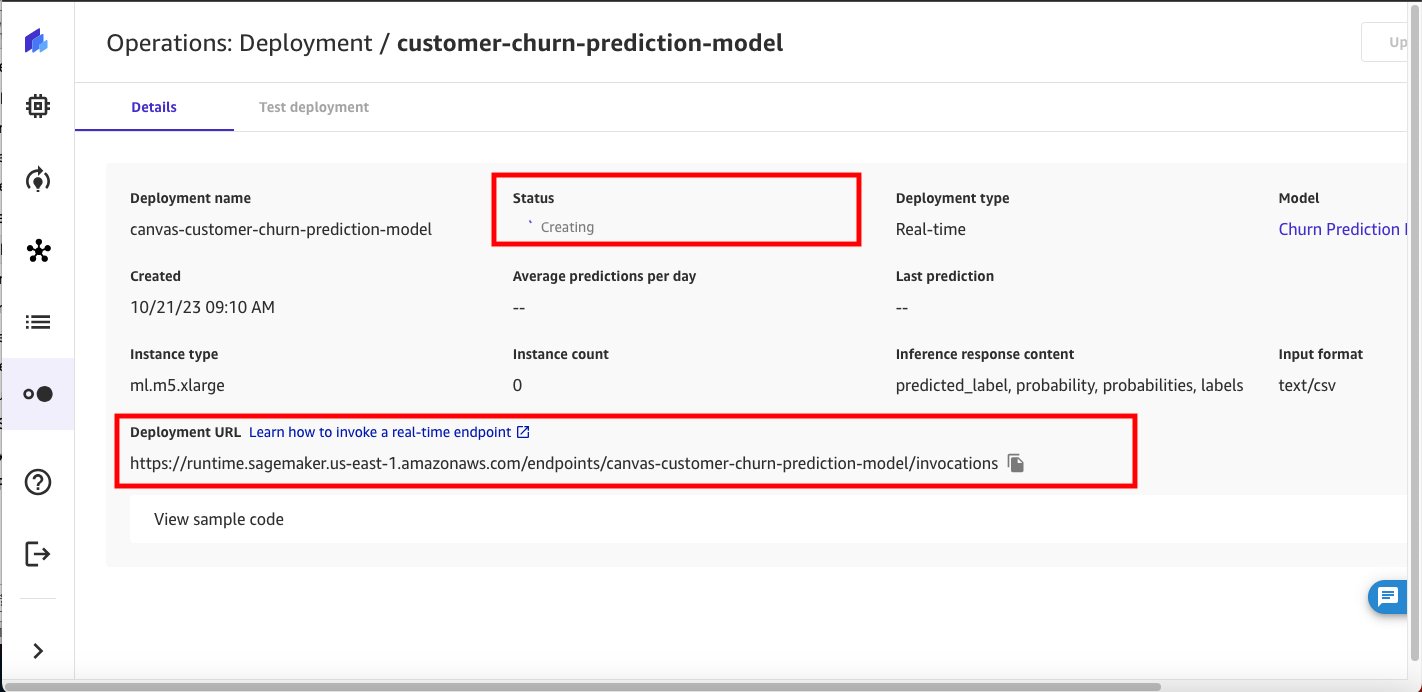

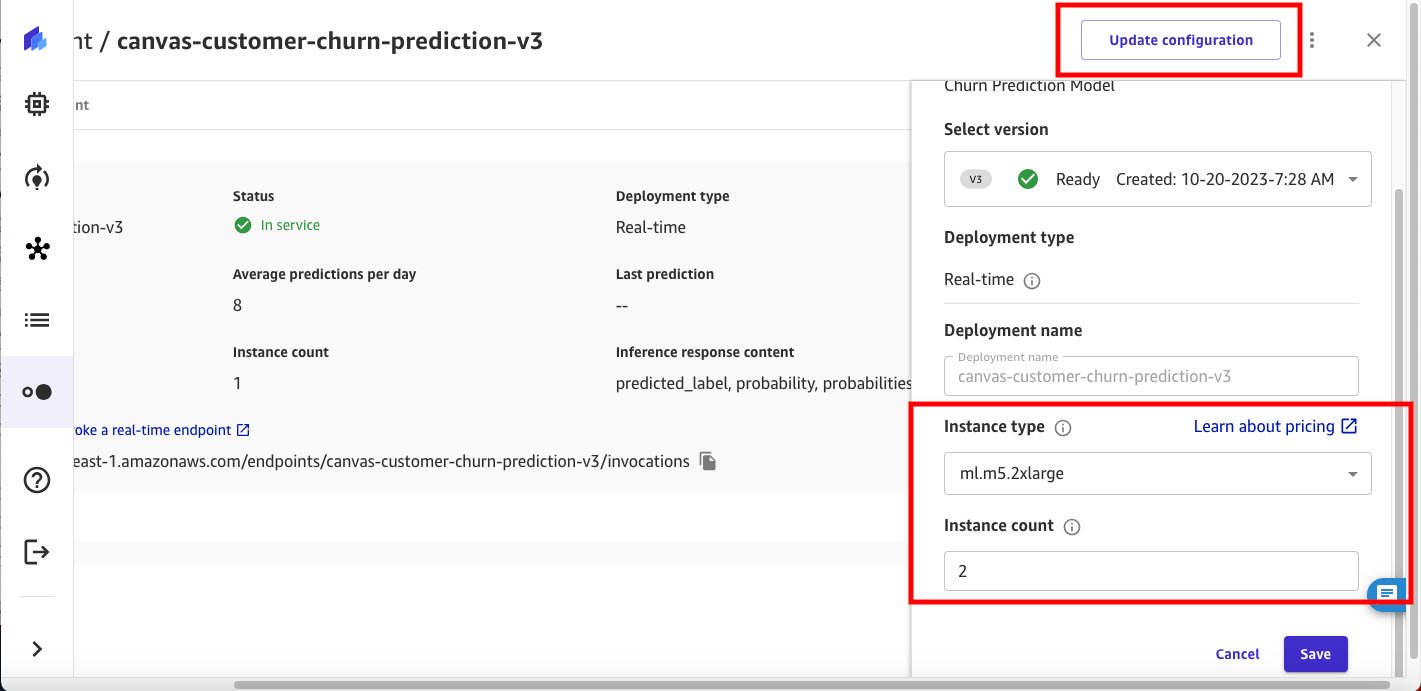

Configure model deployment settings: deployment name, instance type, and instance count.

As a starting point, Canvas automatically recommends an instance type and number of instances for your model deployment. You can change these settings based on your workload needs.

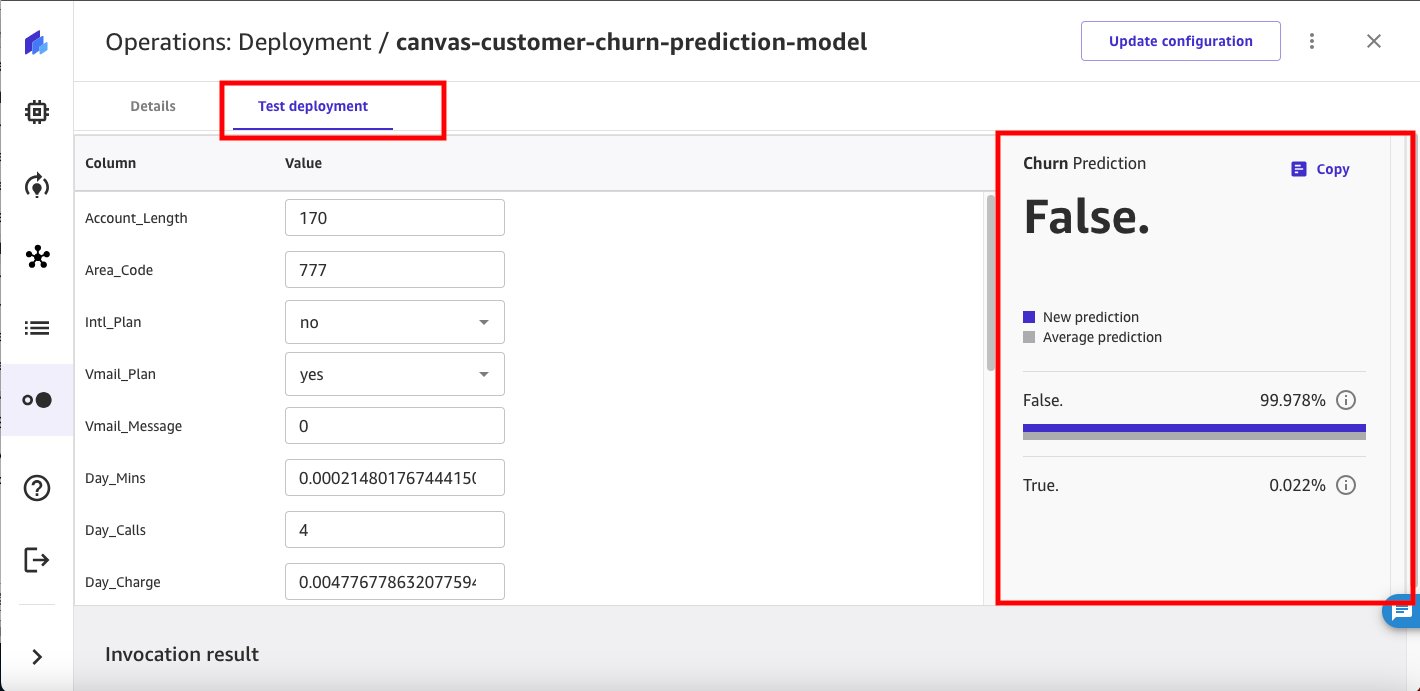

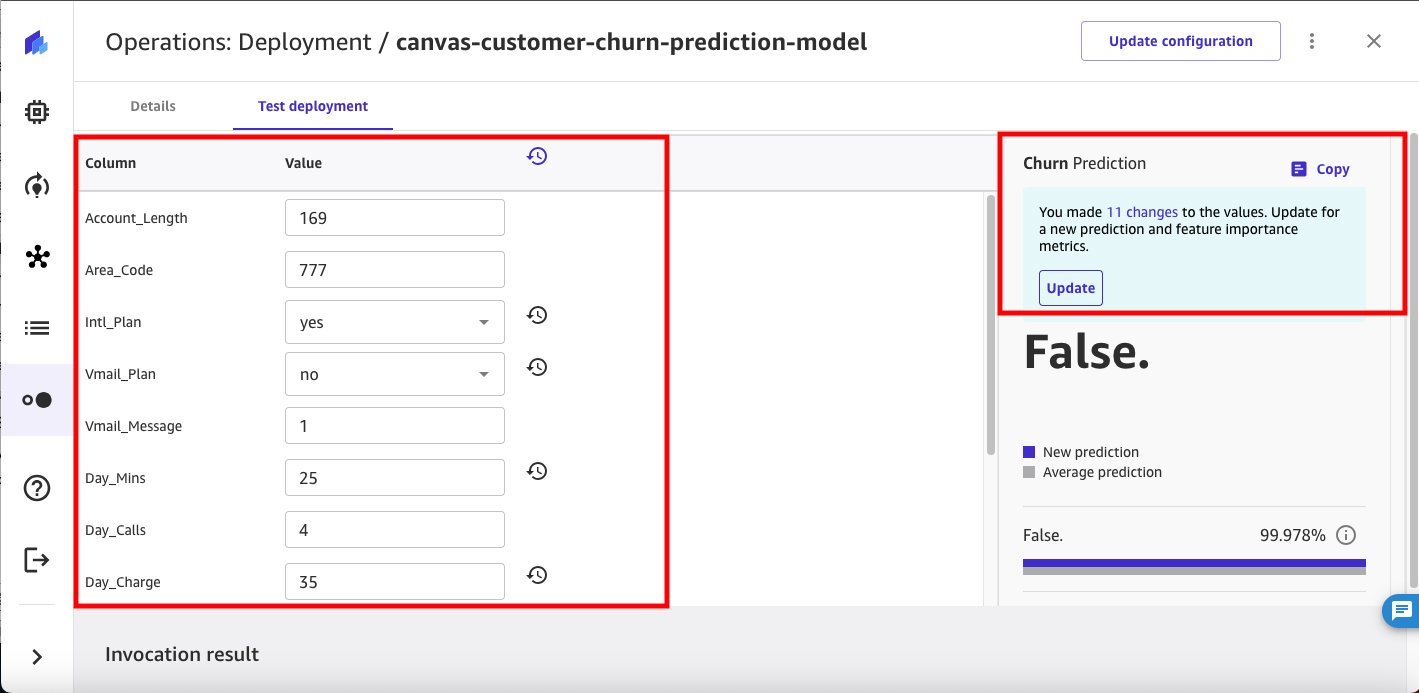

You can test the deployed SageMaker inference endpoint directly from SageMaker Canvas.

You can change the input values in the SageMaker Canvas user interface to generate additional churn predictions.

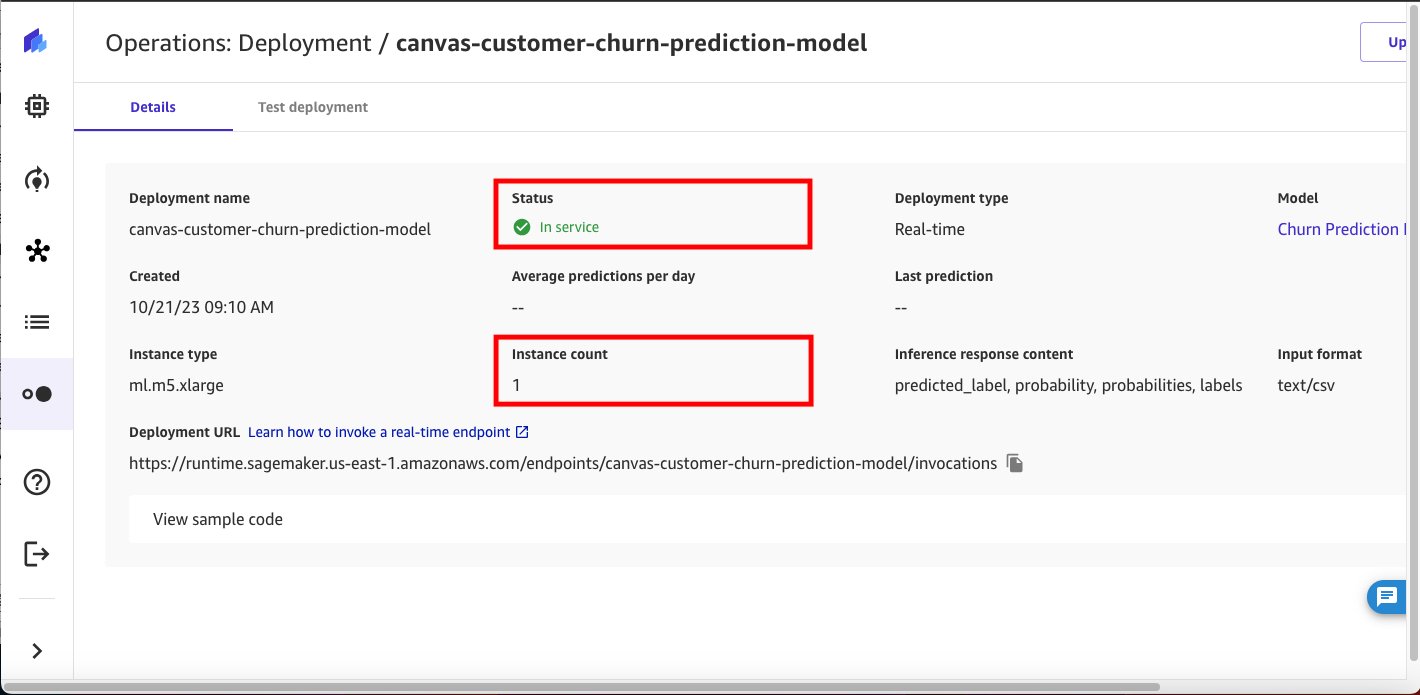

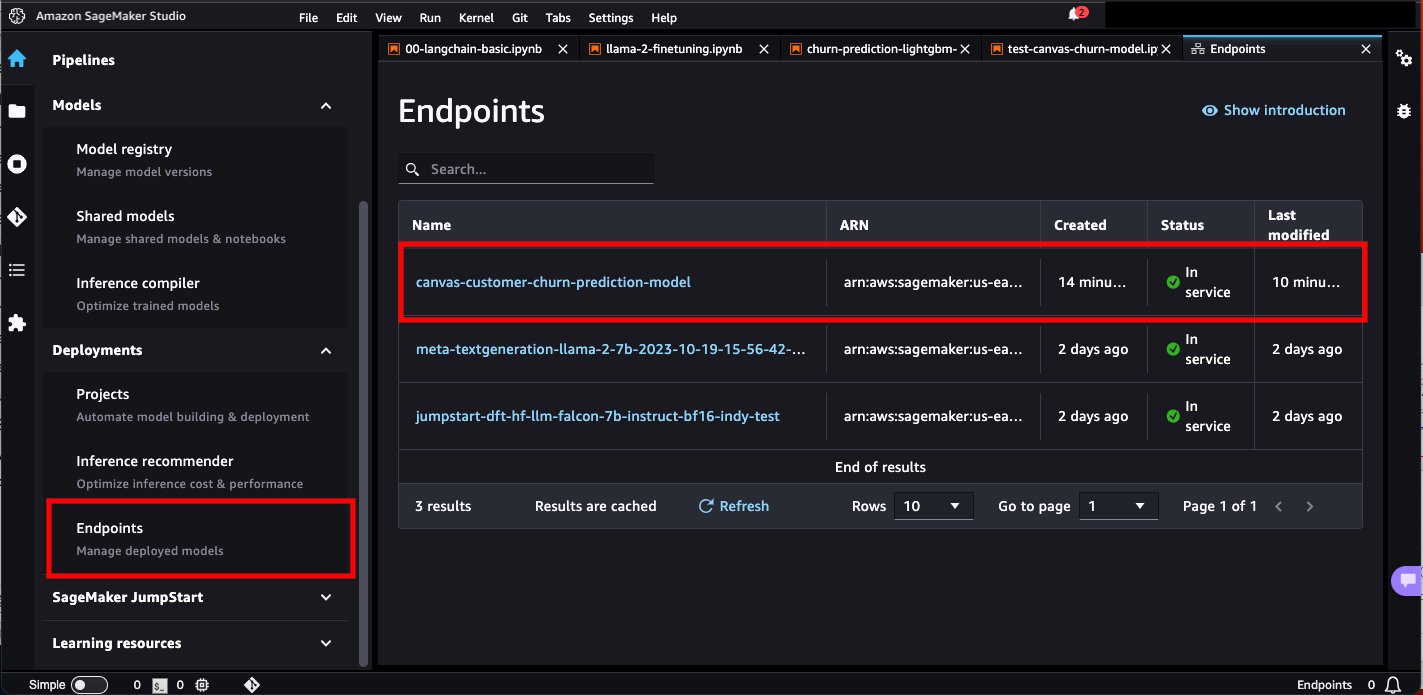

Now let’s navigate to Amazon SageMaker Studio and review the deployed endpoint.

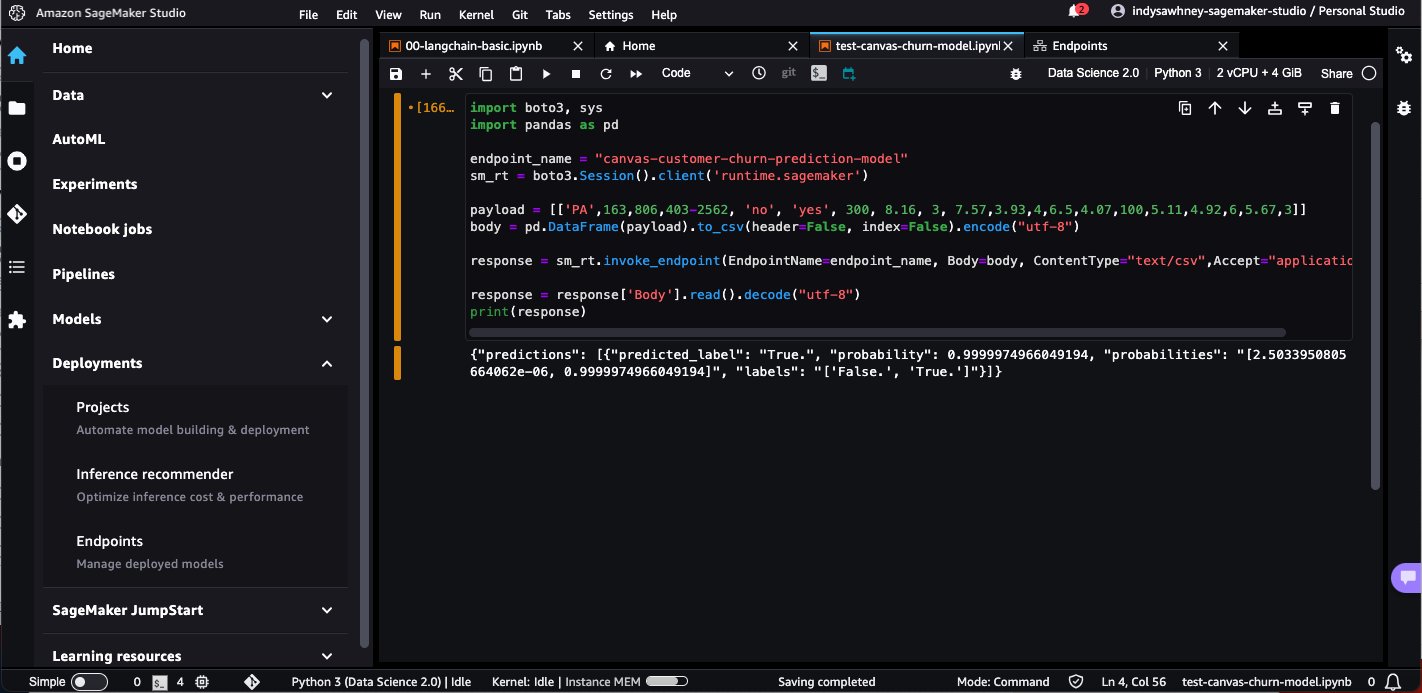
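The same review can also be done programmatically. The following is a minimal sketch, not the method used in this post: it assumes the AWS SDK for Python (boto3) is available with credentials for the account, and the function name `summarize_endpoint` and the endpoint name in the usage comment are hypothetical.

```python
def summarize_endpoint(endpoint_name, sagemaker_client=None):
    """Return the status and production variants of a SageMaker endpoint."""
    if sagemaker_client is None:
        # Imported lazily so the function can be exercised with a stub client.
        import boto3
        sagemaker_client = boto3.client("sagemaker")

    description = sagemaker_client.describe_endpoint(EndpointName=endpoint_name)
    return {
        "name": description["EndpointName"],
        "status": description["EndpointStatus"],
        # Each variant is reported as (variant name, current instance count).
        "variants": [
            (variant["VariantName"], variant.get("CurrentInstanceCount"))
            for variant in description.get("ProductionVariants", [])
        ],
    }


# Hypothetical usage; replace the name with your own endpoint name:
# print(summarize_endpoint("my-canvas-churn-endpoint"))
```

An endpoint that is ready to serve requests reports the status `InService`.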

Open a notebook in SageMaker Studio and run the following code to invoke the deployed model endpoint. Replace the model endpoint name with your own model endpoint name.

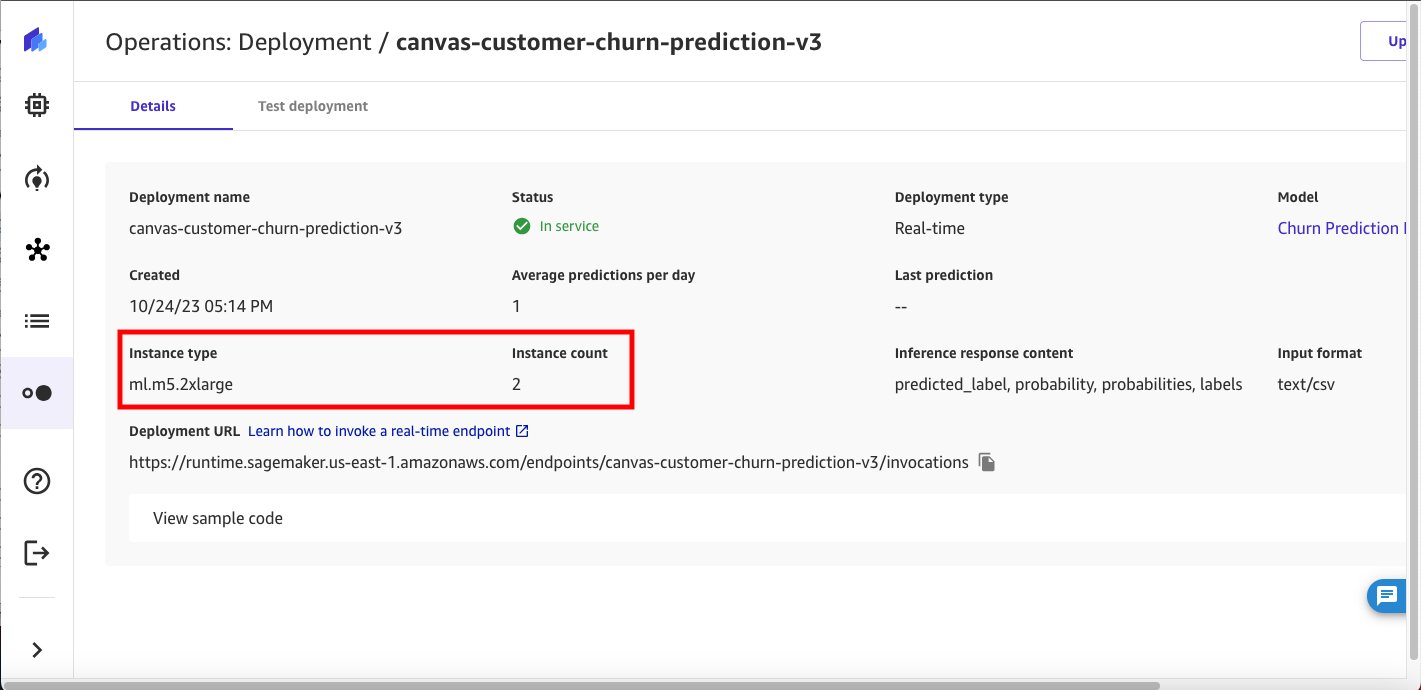

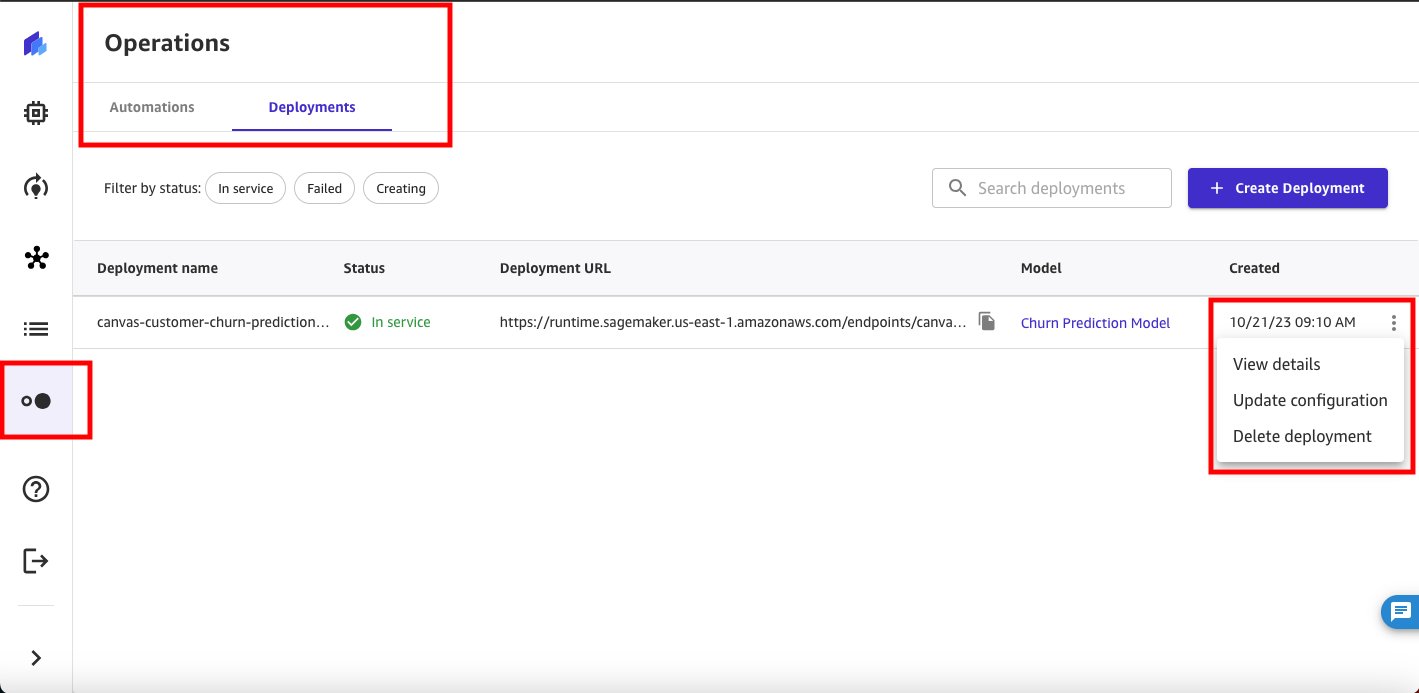
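For readers who cannot copy the code from the screenshots, here is a minimal sketch of what such an invocation might look like. It is not the exact code shown in the images. It assumes boto3 is available in the notebook and that the endpoint accepts a single CSV row of feature values in the model's column order. The function names, endpoint name, and feature values are hypothetical.

```python
import csv
import io


def build_csv_payload(feature_values):
    """Serialize one record of feature values as a single CSV line."""
    buffer = io.StringIO()
    csv.writer(buffer).writerow(feature_values)
    # Drop the trailing line terminator added by the csv writer.
    return buffer.getvalue().rstrip("\r\n")


def invoke_churn_endpoint(endpoint_name, feature_values, runtime_client=None):
    """Send one record to a real-time endpoint and return the raw response text."""
    if runtime_client is None:
        # Imported lazily so the payload helper works without the AWS SDK.
        import boto3
        runtime_client = boto3.client("sagemaker-runtime")

    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="text/csv",  # assumption: the model accepts CSV input
        Body=build_csv_payload(feature_values),
    )
    # The response body is a stream; read and decode it to get the prediction.
    return response["Body"].read().decode("utf-8")


# Hypothetical usage; the values must match your model's input columns:
# print(invoke_churn_endpoint("my-canvas-churn-endpoint", ["no", 107, 3.7, 1]))
```

Passing the client as an optional argument keeps the sketch easy to test without calling AWS.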

Our original model endpoint uses a single ml.m5.xlarge instance. Now, suppose you expect the number of end users invoking your model endpoint to increase and you want to provision more compute capacity. You can do this directly from SageMaker Canvas by choosing Update settings.
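If you prefer to adjust capacity from code rather than from the Canvas interface, the SageMaker API offers a comparable operation. The following is a sketch under stated assumptions, not what Canvas does internally: it assumes boto3 with permission to update the endpoint, and the function name, endpoint name, and variant name are hypothetical (the real variant name can be read from the endpoint description).

```python
def set_instance_count(endpoint_name, variant_name, instance_count,
                       sagemaker_client=None):
    """Request a new instance count for one production variant of an endpoint."""
    if instance_count < 1:
        raise ValueError("instance_count must be at least 1")

    if sagemaker_client is None:
        # Imported lazily so the function can be exercised with a stub client.
        import boto3
        sagemaker_client = boto3.client("sagemaker")

    # The update is asynchronous; the endpoint stays in service while it scales.
    sagemaker_client.update_endpoint_weights_and_capacities(
        EndpointName=endpoint_name,
        DesiredWeightsAndCapacities=[
            {"VariantName": variant_name, "DesiredInstanceCount": instance_count}
        ],
    )


# Hypothetical usage; replace both names with your own:
# set_instance_count("my-canvas-churn-endpoint", "my-variant", 2)
```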

Clean up

To avoid incurring future charges, delete any resources you created while following this post. This includes signing out of SageMaker Canvas and deleting the deployed SageMaker endpoint. SageMaker Canvas bills you for the duration of the session, and we recommend signing out of SageMaker Canvas when you are not using it. See Signing out of Amazon SageMaker Canvas for more details.
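As an illustration, the endpoint can also be deleted from a notebook. This is a minimal sketch that assumes boto3 with permission to delete the endpoint; the function name and endpoint name are hypothetical. It removes only the endpoint itself. The associated endpoint configuration and model are separate resources and are not touched here.

```python
def delete_deployed_endpoint(endpoint_name, sagemaker_client=None):
    """Delete a SageMaker endpoint so it stops accruing instance charges."""
    if sagemaker_client is None:
        # Imported lazily so the function can be exercised with a stub client.
        import boto3
        sagemaker_client = boto3.client("sagemaker")

    sagemaker_client.delete_endpoint(EndpointName=endpoint_name)


# Hypothetical usage; replace the name with your own endpoint name:
# delete_deployed_endpoint("my-canvas-churn-endpoint")
```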

Conclusion

In this post, we discussed how SageMaker Canvas can deploy ML models to real-time inference endpoints, allowing you to take your ML models to production and drive actions based on ML-based insights. In our example, we showed how an analyst can quickly create a highly accurate predictive ML model without writing any code, deploy it to SageMaker as an endpoint, and test the model endpoint from SageMaker Canvas as well as from a SageMaker Studio notebook.

To start your low-code or no-code machine learning journey, check out Amazon SageMaker Canvas.

Special thanks to everyone who contributed to the launch: Prashanth Kurumaddali, Abhishek Kumar, Allen Liu, Sean Lester, Richa Sundrani and Alicia Qi.

About the authors

Janisha Anand is a Senior Product Manager on the Amazon SageMaker Low/No-Code Machine Learning team, including SageMaker Canvas and SageMaker Autopilot. She enjoys coffee, staying active and spending time with her family.

Indy Sawhney is a Senior Customer Solutions Leader at Amazon Web Services. Always working backwards from customer problems, Indy advises AWS enterprise customer executives throughout their unique cloud transformation journey. Indy has more than 25 years of experience helping enterprise organizations adopt emerging technologies and business solutions, and is an area-of-depth specialist in the AWS technical field community for AI/ML, with a specialization in generative AI and low-code/no-code Amazon SageMaker solutions.
