Natural language processing is advancing rapidly, and much current work focuses on adapting large language models (LLMs) to specific tasks. These models often contain billions of parameters, which makes customization expensive. The goal is to fine-tune them for specific downstream tasks without prohibitive computational costs. This requires parameter-efficient fine-tuning (PEFT) approaches that maximize performance while minimizing resource usage.

A major problem in this area is the resource-intensive nature of customizing LLMs for specific tasks. Traditional fine-tuning methods typically update all model parameters, which is computationally expensive and can lead to overfitting. Given the scale of modern LLMs, including sparse architectures that route inputs to multiple specialized experts, there is a pressing need for more efficient fine-tuning techniques. The challenge lies in improving task performance while keeping the computational load manageable.

Existing PEFT methods for dense-architecture LLMs include low-rank adaptation (LoRA) and P-tuning. These methods typically add new parameters to the model or selectively update existing ones. For example, LoRA freezes the pretrained weight matrices and learns each weight update as a product of two low-rank matrices, which sharply reduces the number of parameters that need to be trained. However, these approaches have primarily targeted dense models and do not fully exploit the potential of sparse-architecture LLMs. In sparse models, different tasks activate different subsets of parameters, which makes methods designed for dense models less effective.
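
To make the LoRA saving concrete, here is a minimal sketch in plain Python. It assumes the standard LoRA formulation, in which a frozen weight `W` of shape `d_out x d_in` receives an update `B @ A` with `B` of shape `d_out x rank` and `A` of shape `rank x d_in`. The function names and the `scale` parameter are illustrative choices, not taken from the paper or from any library.

```python
from typing import List

Matrix = List[List[float]]


def full_param_count(d_out: int, d_in: int) -> int:
    """Trainable parameters when the whole weight matrix is updated."""
    return d_out * d_in


def lora_param_count(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for a rank-`rank` update: B (d_out x rank) plus A (rank x d_in)."""
    return rank * (d_out + d_in)


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain nested-list matrix product, enough for a toy example."""
    inner = len(b)
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def lora_effective_weight(w: Matrix, b: Matrix, a: Matrix, scale: float = 1.0) -> Matrix:
    """Return W + scale * (B @ A); W itself stays frozen during training."""
    delta = matmul(b, a)
    return [
        [w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
        for i in range(len(w))
    ]


if __name__ == "__main__":
    # A 4096 x 4096 projection with rank 8 (sizes chosen only for illustration).
    print(full_param_count(4096, 4096), lora_param_count(4096, 4096, 8))
```

With these illustrative sizes, the low-rank update trains well under 1% of the parameters of the full matrix, which is the kind of reduction the paragraph above describes.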

Researchers at DeepSeek AI and Northwestern University have introduced a new method called Expert-Specialized Fine-Tuning (ESFT), designed for sparse-architecture LLMs, specifically those using a mixture-of-experts (MoE) architecture. The method fine-tunes only the experts most relevant to a given task while freezing the other experts and model components. By doing so, ESFT improves tuning efficiency and preserves expert specialization, which is crucial for optimal performance. ESFT leverages the MoE architecture's inherent tendency to route different tasks to different experts, ensuring that only the necessary parameters are updated.
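
The freezing step can be sketched as a simple filter over parameter names, assuming the relevant experts for each layer have already been chosen. The naming scheme `layers.<layer>.experts.<expert>.<tensor>` and the helper names below are hypothetical and used only for illustration; a real MoE checkpoint may name its tensors differently.

```python
import re
from typing import Dict, Set

# Hypothetical naming scheme: "layers.<layer>.experts.<expert>.<tensor>".
_EXPERT_PATTERN = re.compile(r"layers\.(\d+)\.experts\.(\d+)\.")


def is_trainable(name: str, selected: Dict[int, Set[int]]) -> bool:
    """True only for tensors that belong to a selected expert.

    Everything else (attention, router, embeddings, unselected experts)
    is treated as frozen.
    """
    match = _EXPERT_PATTERN.search(name)
    if match is None:
        return False
    layer, expert = int(match.group(1)), int(match.group(2))
    return expert in selected.get(layer, set())


def trainable_fraction(param_sizes: Dict[str, int], selected: Dict[int, Set[int]]) -> float:
    """Share of all parameters that would receive gradient updates."""
    total = sum(param_sizes.values())
    trainable = sum(
        size for name, size in param_sizes.items() if is_trainable(name, selected)
    )
    return trainable / total


if __name__ == "__main__":
    # Toy parameter table; the sizes are made up for the example.
    sizes = {
        "embed.weight": 100,
        "layers.0.attn.q.weight": 50,
        "layers.0.router.weight": 10,
        "layers.0.experts.0.up.weight": 20,
        "layers.0.experts.1.up.weight": 20,
        "layers.1.experts.0.up.weight": 20,
    }
    print(trainable_fraction(sizes, {0: {1}}))
```

In a framework such as PyTorch, the same predicate could be used to set `requires_grad` on each parameter, so that only the selected experts are updated and only their weights need to be stored per task.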

In more detail, ESFT computes each expert's affinity score for the task-specific data and selects the subset of experts with the highest relevance. The selected experts are then fine-tuned while the rest of the model remains unchanged. This selective approach significantly reduces the computational costs associated with fine-tuning. For example, ESFT can reduce storage requirements by up to 90% and training time by up to 30% compared to fine-tuning all parameters. According to the experimental results, this efficiency is achieved without compromising overall model performance.
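
The selection step can be sketched as follows. This is an illustrative sketch under stated assumptions, not the authors' implementation: affinity is taken here to be the mean router (gate) weight each expert receives over sampled task tokens, normalized to sum to 1, and experts are added in descending order of affinity until a cumulative `threshold` is reached. Both the scoring rule and the threshold rule, as well as the function names, are assumptions made for the example.

```python
from typing import List, Sequence


def expert_affinity(gate_weights: Sequence[Sequence[float]]) -> List[float]:
    """Normalized mean gate weight per expert.

    gate_weights[t][e] is the router weight token t assigns to expert e
    (0.0 when the token is not routed to that expert).
    """
    num_tokens = len(gate_weights)
    num_experts = len(gate_weights[0])
    totals = [0.0] * num_experts
    for row in gate_weights:
        for e, w in enumerate(row):
            totals[e] += w
    means = [t / num_tokens for t in totals]
    norm = sum(means)
    if norm == 0:
        raise ValueError("no expert received any gate weight")
    return [m / norm for m in means]


def select_experts(affinity: Sequence[float], threshold: float) -> List[int]:
    """Pick the highest-affinity experts until their cumulative score reaches `threshold`."""
    ranked = sorted(range(len(affinity)), key=lambda e: affinity[e], reverse=True)
    chosen: List[int] = []
    cumulative = 0.0
    for e in ranked:
        chosen.append(e)
        cumulative += affinity[e]
        if cumulative >= threshold:
            break
    return sorted(chosen)


if __name__ == "__main__":
    # Three task tokens routed across four experts in one MoE layer (toy numbers).
    gates = [
        [0.7, 0.3, 0.0, 0.0],
        [0.6, 0.0, 0.4, 0.0],
        [0.8, 0.2, 0.0, 0.0],
    ]
    scores = expert_affinity(gates)
    print(select_experts(scores, threshold=0.8))
```

A lower threshold selects fewer experts per layer, which trades some task performance for less training compute and smaller per-task storage.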

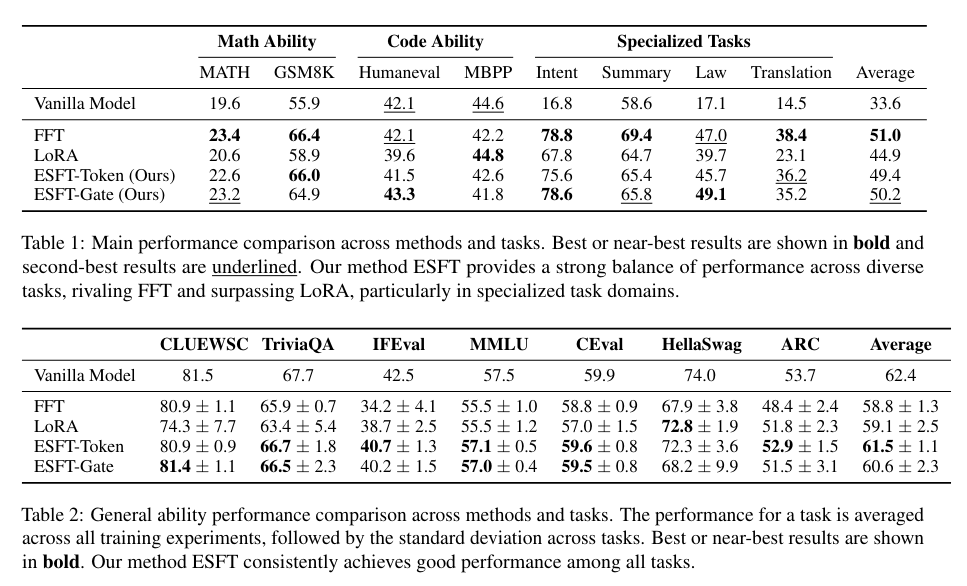

In several downstream tasks, ESFT matched and often outperformed traditional full-parameter fine-tuning. For example, in tasks such as mathematics and programming, ESFT achieved significant performance improvements while maintaining a high degree of expert specialization. Its ability to efficiently fine-tune a subset of experts, selected by their relevance to the task, underlies these results. ESFT also maintained overall task performance better than other PEFT methods such as LoRA, making it a versatile and powerful tool for LLM customization.

In conclusion, the research presents Expert-Specialized Fine-Tuning (ESFT) as a solution to the resource-intensive fine-tuning problem in large language models. By selectively fine-tuning relevant experts, ESFT balances performance and efficiency. The method leverages the expert structure of sparse-architecture LLMs to achieve strong results at reduced computational cost. The research demonstrates that ESFT can reduce storage and training time while maintaining high performance across multiple tasks. This makes ESFT a promising approach for future work on customizing large language models.

Review the Paper and GitHub. All credit for this research goes to the researchers of this project.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary engineer and entrepreneur, Asif is committed to harnessing the potential of AI for social good. His most recent initiative is the launch of an AI media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is technically sound and easily understandable to a wide audience. The platform has over 2 million monthly views, illustrating its popularity among the public.