Understanding and reasoning about program execution is a critical skill for developers, often applied during tasks such as debugging and repairing code. Traditionally, developers simulate code execution mentally or with debugging tools to identify and fix errors. Despite their sophistication, large language models (LLMs) trained on code have had difficulty capturing the deeper semantic aspects of program execution beyond the surface textual representation of the code. This limitation often hurts their performance on complex software engineering tasks, such as program repair, where it is essential to understand the execution flow of a program.

Existing research on AI-driven software development includes several frameworks and models focused on improving code execution reasoning. Notable examples include CrossBeam, which leverages execution states in sequence-to-sequence models, and specialized neural architectures such as instruction pointer attention graph neural networks. Other approaches, such as the differentiable Forth interpreter and Scratchpad, integrate execution traces directly into model training to improve program synthesis and debugging capabilities. These methods pave the way for more advanced reasoning about code, covering both the steps a program takes and the dynamic states it passes through during execution.

Researchers at Google DeepMind, Yale University, and the University of Illinois proposed NExT, which teaches LLMs to interpret and use execution traces, allowing for more nuanced reasoning about program behavior at run time. The method is distinguished by incorporating detailed runtime data directly into model training, fostering a deeper semantic understanding of the code. By embedding execution traces as inline comments, NExT gives models access to crucial context that traditional training methods often miss, so the rationales generated for code fixes are more accurate and grounded in the actual execution of the code.
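To make the idea of execution traces as inline comments concrete, here is a minimal sketch using Python's standard `sys.settrace` hook. The function name `annotate_with_trace`, the comment format, and the buggy `running_sum` program are illustrative assumptions, not the trace format used by NExT. As a simplification, a line that runs several times (for example inside a loop) keeps only the last state observed.

```python
import sys

def annotate_with_trace(source, func_name, *args):
    """Run func_name(*args) from `source` and append the observed variable
    states to each executed line as an inline comment (illustrative format)."""
    namespace = {}
    exec(compile(source, "<program>", "exec"), namespace)
    func = namespace[func_name]

    states = {}       # line number -> variable values seen after the line ran
    prev_line = None  # line whose effects become visible at the next event

    def tracer(frame, event, arg):
        nonlocal prev_line
        if frame.f_code is not func.__code__:
            return None  # ignore every frame except the traced function
        # A "line" event fires BEFORE a line runs, so the locals seen now
        # describe the state left behind by the previously executed line.
        if event in ("line", "return") and prev_line is not None:
            states[prev_line] = {k: repr(v) for k, v in frame.f_locals.items()}
        if event == "line":
            prev_line = frame.f_lineno
        return tracer

    previous = sys.gettrace()
    sys.settrace(tracer)
    try:
        func(*args)
    except Exception:
        pass  # a crashing program still yields a partial trace
    finally:
        sys.settrace(previous)

    annotated = []
    for lineno, text in enumerate(source.splitlines(), start=1):
        if lineno in states:
            state = "; ".join(f"{k} = {v}" for k, v in states[lineno].items())
            text = f"{text}  # ({state})"
        annotated.append(text)
    return "\n".join(annotated)

# Hypothetical buggy program: it overwrites `total` instead of accumulating.
BUGGY = """def running_sum(xs):
    total = 0
    for x in xs:
        total = x
    return total
"""

print(annotate_with_trace(BUGGY, "running_sum", [1, 2, 3]))
```

In the annotated output, the comment on the `total = x` line shows `total` equal to the last element rather than the sum, which is the kind of runtime evidence a model could cite when explaining a fix.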

NExT's methodology uses a self-training cycle to refine the model's ability to generate execution-aware rationales. Initially, execution traces are combined with proposed code fixes in a synthetic dataset, where each trace records the states of variables and how they change during execution. The method is evaluated with Google's PaLM 2 model on program repair tasks, and the model's accuracy improves significantly over repeated iterations. The datasets include Mbpp-R and HumanEval Fix-Plus, benchmarks designed to test programming skills and the ability to fix bugs in code. This combination of iterative learning and synthetic dataset generation yields practical improvements in the programming capabilities of LLMs without requiring extensive manual annotation.
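One round of such a self-training cycle can be sketched as follows. This is a rough illustration under stated assumptions, not the authors' implementation: it assumes that sampled candidates (an explanation plus a fixed program) are kept only when the fix passes unit tests, and that the kept examples would then be used for fine-tuning. `RepairTask`, `Candidate`, `passes_tests`, `self_training_round`, and `stub_sampler` are hypothetical names, and the sampler is a hard-coded stand-in for an LLM.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Tuple

@dataclass
class RepairTask:
    buggy_code: str
    func_name: str
    tests: List[Tuple[tuple, Any]]  # (positional args, expected result)

@dataclass
class Candidate:
    rationale: str   # natural-language explanation of the bug
    fixed_code: str  # proposed repaired program

def passes_tests(code: str, func_name: str, tests) -> bool:
    """Execute the candidate program and check it against every test case."""
    namespace = {}
    try:
        exec(code, namespace)
        return all(namespace[func_name](*args) == expected
                   for args, expected in tests)
    except Exception:
        return False

def self_training_round(tasks, sample_candidates: Callable, num_samples: int = 4):
    """Sample candidates per task and keep only those whose fix is verified.
    No human labels are needed: the unit tests act as the filter."""
    kept = []
    for task in tasks:
        for cand in sample_candidates(task, num_samples):
            if passes_tests(cand.fixed_code, task.func_name, task.tests):
                kept.append((task, cand))
    return kept  # in a real pipeline, the model would be fine-tuned on these

def stub_sampler(task, num_samples):
    """Hypothetical stand-in for sampling rationales and fixes from an LLM."""
    return [
        Candidate("The loop looks fine; no change needed.", task.buggy_code),
        Candidate("total is overwritten on each iteration; accumulate instead.",
                  task.buggy_code.replace("total = x", "total += x")),
    ][:num_samples]

task = RepairTask(
    buggy_code=("def running_sum(xs):\n    total = 0\n"
                "    for x in xs:\n        total = x\n    return total\n"),
    func_name="running_sum",
    tests=[(([1, 2, 3],), 6), (([],), 0)],
)

training_examples = self_training_round([task], stub_sampler)
print(len(training_examples), "verified example(s) kept for fine-tuning")
```

Repeating this round with the fine-tuned model as the new sampler is what makes the procedure iterative; the sketch stops short of the fine-tuning step itself.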

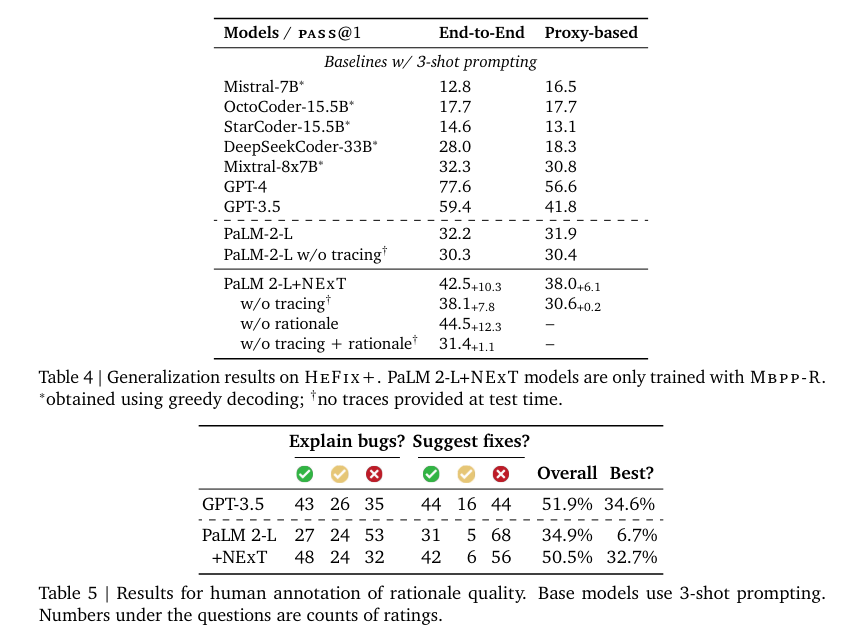

Substantial improvements in program repair tasks demonstrate the effectiveness of NExT. With the NExT methodology, the PaLM 2 model achieved a 26.1% absolute increase in fix rate on the Mbpp-R dataset and a 14.3% absolute improvement on HumanEval Fix-Plus. These results indicate that the model became considerably better at diagnosing and correcting programming errors. Additionally, the quality of the model-generated rationales, which are essential for explaining code fixes, improved markedly, as evidenced by automated metrics and human evaluations.
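Because the reported gains are absolute, they are differences in percentage points rather than relative ratios. The short sketch below shows the distinction on made-up pass/fail outcomes; the lists `before` and `after` are hypothetical toy data and do not correspond to any number reported for NExT.

```python
def fix_rate(outcomes):
    """Percentage of repair attempts whose fix passed all tests."""
    return 100 * sum(outcomes) / len(outcomes)

# Hypothetical toy outcomes for 10 repair tasks (True = fix passed the tests).
before = [True, True] + [False] * 8
after = [True] * 5 + [False] * 5

absolute_gain = fix_rate(after) - fix_rate(before)   # percentage points
relative_gain = 100 * absolute_gain / fix_rate(before)  # percent of baseline

print(f"fix rate before: {fix_rate(before):.1f}%")
print(f"fix rate after:  {fix_rate(after):.1f}%")
print(f"absolute gain:   {absolute_gain:.1f} points")
print(f"relative gain:   {relative_gain:.1f}%")
```

The same absolute gain can correspond to very different relative gains depending on the baseline, which is why it matters that the article's figures are stated as absolute.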

In conclusion, the NExT methodology significantly improves the ability of large language models to understand and correct code by integrating execution traces into their training. The approach raised both fix rates and rationale quality in complex programming tasks, as demonstrated by substantial gains on the Mbpp-R and HumanEval Fix-Plus benchmarks. By improving the accuracy and reliability of automated program repair, NExT shows potential to change software development practices.

Review the Paper. All credit for this research goes to the researchers of this project.


Nikhil is an internal consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in materials science, he is exploring new advances and creating opportunities to contribute.
