Alignment has become a critical concern in the development of next-generation text-based assistants, particularly in ensuring that large language models (LLMs) align with human values. Alignment aims to improve the accuracy, consistency, and safety of the content LLMs generate in response to user queries. The process involves three key elements: feedback acquisition, alignment algorithms, and model evaluation. While previous efforts have focused on alignment algorithms, this study delves into the nuances of feedback acquisition, specifically comparing the ratings and rankings protocols, and sheds light on an important consistency challenge.

In the existing literature, alignment algorithms such as PPO, DPO, and PRO have been widely explored under specific feedback protocols and evaluation settings. Meanwhile, work on feedback acquisition has focused on developing dense, fine-grained protocols, which can be challenging and costly to collect. This study analyzes the impact of two sparse feedback protocols, ratings and rankings, on LLM alignment. Figure 1 illustrates the authors' pipeline.

Understanding Feedback Protocols: Ratings Versus Rankings

Ratings involve assigning an absolute value to a response on a predefined scale, while rankings require annotators to select their preferred response from a pair. Ratings quantify how good a response is but can be hard to assign for complex instructions, while rankings are easier for such instructions but do not quantify the gap between the two responses (see Table 1).

We now turn to the feedback inconsistency problem mentioned at the outset. The authors observe that the ratings of a pair of responses to a given instruction can be compared, which converts the ratings feedback data into its ranking form. Converting the ratings data dTO into the rankings data dRTO provides a unique opportunity to study the interplay between the absolute feedback dTO and the relative feedback dR, which are collected from annotators independently. They define consistency as the agreement between the ratings (converted to their ranking form) and the rankings received by a pair of responses to a given instruction, where the rankings are collected independently of the ratings data.
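The conversion and the consistency check described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' code: the function names, the label strings, and the choice to map equal ratings to a "tie" label are assumptions made here, and the data is a toy example.

```python
from typing import List


def ratings_to_ranking(rating_a: int, rating_b: int) -> str:
    """Convert a pair of absolute ratings into a relative preference label."""
    if rating_a > rating_b:
        return "a"
    if rating_b > rating_a:
        return "b"
    return "tie"  # assumption: equal ratings are treated as a tie


def consistency(converted: List[str], direct: List[str]) -> float:
    """Fraction of pairs where the converted preference matches the direct ranking."""
    if len(converted) != len(direct):
        raise ValueError("both lists must cover the same response pairs")
    if not converted:
        raise ValueError("need at least one response pair")
    matches = sum(c == d for c, d in zip(converted, direct))
    return matches / len(converted)


# Toy data for illustration only (not taken from the study).
rating_pairs = [(5, 3), (2, 4), (4, 4), (6, 1)]   # ratings for responses (a, b)
direct_rankings = ["a", "a", "tie", "b"]          # rankings collected separately

converted = [ratings_to_ranking(a, b) for a, b in rating_pairs]
score = consistency(converted, direct_rankings)
print(converted, score)
```

In this toy example two of the four pairs agree, so the consistency score is 0.5. A score around 0.4, as reported in the study, would mean that most pairs yield a different preference depending on the protocol.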

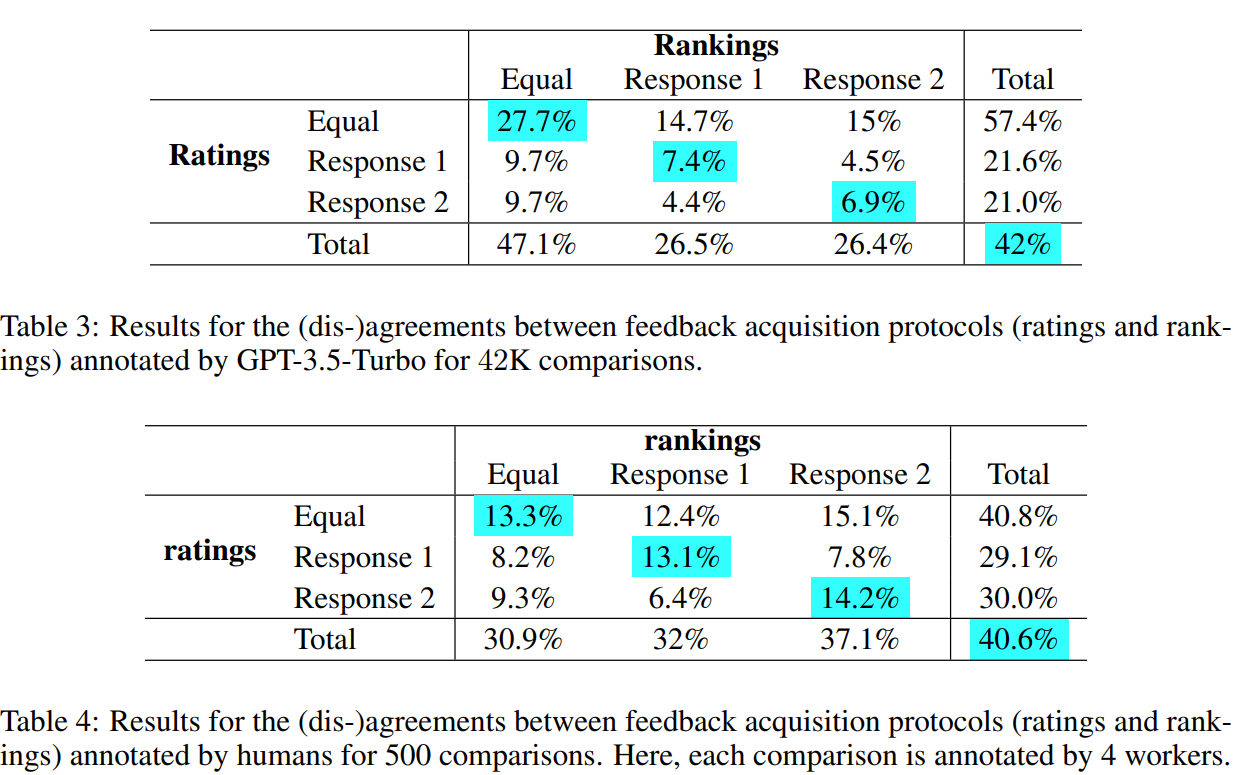

Tables 3 and 4 show clear consistency issues in both the human and the AI feedback data. Interestingly, the consistency score falls within a similar range of 40% to 42% for both humans and AI, suggesting that a substantial portion of the feedback data yields conflicting preferences depending on the feedback protocol employed. This consistency issue highlights several critical points: (a) the perceived quality of responses varies with the choice of feedback acquisition protocol, (b) the alignment process can vary significantly depending on whether ratings or rankings are used as sparse forms of feedback, and (c) meticulous data curation is needed when working with multiple feedback protocols to align LLMs.

Exploring feedback inconsistency

The study examines the identified feedback inconsistency problem by building on a simple observation. By comparing the ratings of a pair of responses, the authors convert the ratings feedback data (dTO) into rankings data (dRTO). This conversion offers a unique opportunity to study the interplay between the absolute feedback (dTO) and the relative feedback (dR), which annotators provide independently. Consistency, defined as the agreement between the converted ratings and the directly collected rankings, is then evaluated. Tables 3 and 4 reveal consistency issues in both human and AI feedback, with consistency scores ranging from 40% to 42%. This shows that perceived response quality varies across feedback acquisition protocols, with a significant impact on the alignment process, and it underlines the need for meticulous data curation when handling multiple feedback protocols in LLM alignment.

Feedback data acquisition

The study draws on a variety of instructions from sources such as Dolly, Self-Instruct, and Super-NI to gather feedback. Alpaca-7B serves as the base LLM, generating candidate responses for evaluation. The authors leverage GPT-3.5-Turbo to collect large-scale ratings and rankings data. They also collect human feedback data under the ratings and rankings protocols.

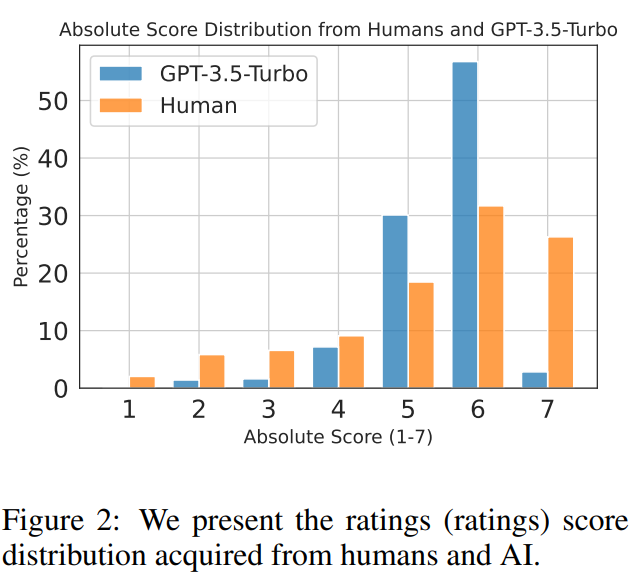

The ratings distribution analysis (shown in Figure 2) indicates that human annotators tend to give higher scores, while AI feedback is more balanced. The study also verifies that the feedback data is not biased towards longer or unique responses. The agreement analysis (shown in Table 2) between human-human and human-AI feedback shows reasonable agreement rates. In summary, the agreement results indicate that GPT-3.5-Turbo can provide ratings and rankings close to the human gold labels for responses to the instructions in the authors' dataset.
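As a rough illustration of what such an agreement analysis might compute, the sketch below measures agreement between two annotators under each protocol. The two metrics used here (fraction of identical ranking labels, and mean absolute difference between ratings) are assumptions chosen for illustration; the article does not spell out the exact metrics behind Table 2, and the data is a toy example.

```python
from typing import Sequence


def ranking_agreement(labels_x: Sequence[str], labels_y: Sequence[str]) -> float:
    """Fraction of response pairs on which two annotators chose the same label."""
    if len(labels_x) != len(labels_y) or not labels_x:
        raise ValueError("label sequences must be non-empty and of equal length")
    return sum(x == y for x, y in zip(labels_x, labels_y)) / len(labels_x)


def mean_rating_difference(ratings_x: Sequence[int], ratings_y: Sequence[int]) -> float:
    """Average absolute difference between two annotators' ratings."""
    if len(ratings_x) != len(ratings_y) or not ratings_x:
        raise ValueError("rating sequences must be non-empty and of equal length")
    return sum(abs(x - y) for x, y in zip(ratings_x, ratings_y)) / len(ratings_x)


# Toy annotations for illustration only (not taken from the study).
human_rankings = ["a", "b", "a", "tie"]
ai_rankings = ["a", "b", "b", "tie"]
human_ratings = [6, 4, 5, 7]
ai_ratings = [5, 4, 3, 7]

agreement = ranking_agreement(human_rankings, ai_rankings)
rating_gap = mean_rating_difference(human_ratings, ai_ratings)
print(agreement, rating_gap)
```

A higher ranking agreement and a smaller rating difference both indicate that the AI annotator tracks the human labels more closely.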

Impact on model alignment and evaluation

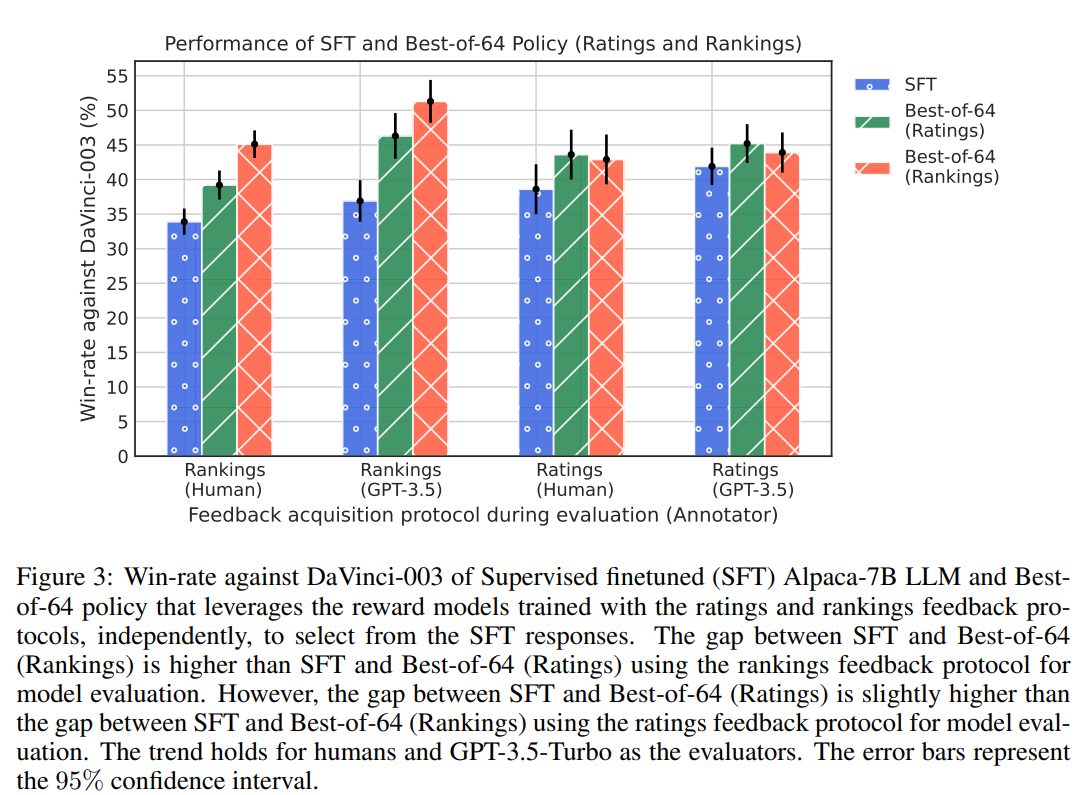

The study trains reward models on the ratings and rankings feedback and uses them to evaluate Best-of-n policies. Evaluation on unseen instructions shows that the Best-of-n policies, especially the one trained with rankings feedback, outperform the base LLM (SFT) and demonstrate improved alignment (shown in Figure 3).
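A Best-of-n policy can be sketched as follows: sample n candidate responses from the base model, score each with the reward model, and return the highest-scoring one. This is a minimal sketch under stated assumptions. The `generate` and `reward` callables are hypothetical stand-ins for the base LLM and a trained reward model, and the toy implementations exist only to make the example runnable.

```python
import itertools
from typing import Callable


def best_of_n(
    instruction: str,
    generate: Callable[[str], str],
    reward: Callable[[str, str], float],
    n: int = 4,
) -> str:
    """Sample n responses and return the one the reward model scores highest."""
    if n < 1:
        raise ValueError("n must be at least 1")
    candidates = [generate(instruction) for _ in range(n)]
    return max(candidates, key=lambda response: reward(instruction, response))


# Hypothetical stand-in for sampling from the base LLM: cycles through canned text.
_canned = itertools.cycle(
    ["Paris.", "The capital of France is Paris.", "I am not sure."]
)


def toy_generate(instruction: str) -> str:
    return next(_canned)


def toy_reward(instruction: str, response: str) -> float:
    """Placeholder reward: number of words shared with the instruction."""
    instruction_words = set(instruction.lower().replace("?", "").split())
    response_words = set(response.lower().replace(".", "").split())
    return float(len(instruction_words & response_words))


best = best_of_n("What is the capital of France?", toy_generate, toy_reward, n=3)
print(best)
```

In practice the reward callable would wrap a reward model trained on either ratings or rankings feedback, which is what distinguishes the two Best-of-n policies compared in the study.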

A surprising finding of the study is an evaluation inconsistency: the feedback protocol chosen for evaluation appears to favor the alignment algorithm trained with that same protocol. Under the rankings protocol, the gap in win rates between the Best-of-n (rankings) policy and SFT is more pronounced (11.2%) than the gap between the Best-of-n (ratings) policy and SFT (5.3%). Under the ratings protocol, in contrast, the gap between the Best-of-n (ratings) policy and SFT (5%) slightly exceeds the gap between the Best-of-n (rankings) policy and SFT (4.3%). This inconsistency also appears in evaluations that use GPT-3.5-Turbo, indicating that annotators (both human and AI) perceive the quality of policy responses differently under different feedback protocols. These findings have substantial implications for practitioners: the feedback acquisition protocol significantly influences each stage of the alignment process.
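Grouping the four reported gaps by evaluation protocol makes the flip easy to see. The short sketch below only restates the numbers quoted above. The dictionary keys and variable names are illustrative, and the assignment of each gap to a policy follows the stated finding that evaluation favors the policy trained with the matching protocol.

```python
# Win-rate gap over SFT (in percentage points), grouped by evaluation protocol.
reported_gaps = {
    "rankings": {"best_of_n_rankings": 11.2, "best_of_n_ratings": 5.3},
    "ratings": {"best_of_n_rankings": 4.3, "best_of_n_ratings": 5.0},
}

# Which policy looks best under each evaluation protocol?
favored = {
    protocol: max(gaps, key=gaps.get) for protocol, gaps in reported_gaps.items()
}

# How large is the lead of the favored policy over the other one?
margins = {
    protocol: round(max(gaps.values()) - min(gaps.values()), 1)
    for protocol, gaps in reported_gaps.items()
}

print(favored)
print(margins)
```

The favored policy changes with the evaluation protocol, and the lead is much larger under the rankings protocol (5.9 points) than under the ratings protocol (0.7 points).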

In conclusion, the study highlights the importance of meticulous data curation when working with sparse feedback protocols, and it sheds light on how the choice of feedback protocol can affect evaluation outcomes. Future research on model alignment can examine the cognitive aspects of the identified consistency problem, with the aim of improving alignment strategies. Exploring richer forms of feedback beyond absolute and relative preferences is crucial for a more comprehensive understanding and for better alignment across diverse application domains. Despite its valuable insights, the study acknowledges limitations, including its focus on specific types of feedback, potential subjectivity in human annotations, and the need to explore the impact on different demographic groups and specialized domains. Addressing these limitations will help develop more robust and broadly applicable alignment methodologies in the changing AI landscape.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.

Vineet Kumar is a Consulting Intern at MarktechPost. He is currently pursuing his bachelor's degree at the Indian Institute of Technology (IIT), Kanpur. He is a machine learning enthusiast who is passionate about research and the latest advances in Deep Learning, Computer Vision, and related fields.
