In the fast-paced world of AI, efficient code generation is a challenge that can't be overlooked. As models grow more complex, the demand for accurate code generation has surged, and so have concerns about energy consumption and operational costs. To address this efficiency gap, Deci, an AI efficiency company, has introduced DeciCoder, a 1-billion-parameter open-source large language model (LLM) that aims to set a new standard for efficient and accurate code generation.

Existing code generation models have struggled to balance accuracy and efficiency. SantaCoder, a widely used model in this space, has shown limitations in throughput and memory consumption. DeciCoder is designed to address these limitations. Drawing on Deci's work in AI efficiency, it uses an architecture generated by AutoNAC™, Deci's proprietary Neural Architecture Search (NAS) technology. Instead of relying on manual, labor-intensive architecture design, which often falls short, AutoNAC™ automates the search for an optimal architecture. The result is an architecture optimized for NVIDIA's A10 GPU that not only boosts throughput but also rivals SantaCoder's accuracy.

DeciCoder's architecture uses Grouped Query Attention (GQA) with eight key-value heads: instead of giving every query head its own key and value projections, groups of query heads share a single key-value head. With 32 query heads and 8 key-value heads, each key-value head serves 4 query heads, which reduces computation and memory usage while preserving accuracy. Compared with SantaCoder, DeciCoder has fewer layers (20 vs. 24), more attention heads (32 vs. 16), and the same embedding size. These design choices, produced by AutoNAC™, underpin DeciCoder's performance.
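To make the head-sharing idea concrete, here is a minimal, dependency-free sketch of grouped query attention, plus a back-of-the-envelope estimate of key-value cache size. It is a generic illustration of the technique, not Deci's implementation. The function names (`kv_group_for_head`, `gqa_attention`, `kv_cache_bytes`) are hypothetical, and the head dimension of 64 and 2-byte (fp16) cache entries are assumptions not stated in the article; only the layer and head counts come from the text above.

```python
import math
from typing import List

# Shapes used below (plain nested lists, no external libraries):
#   q:    [n_q_heads][seq_len][head_dim]
#   k, v: [n_kv_heads][seq_len][head_dim]
Tensor3 = List[List[List[float]]]


def kv_group_for_head(q_head: int, n_q_heads: int, n_kv_heads: int) -> int:
    """Return the index of the key/value head shared by a given query head."""
    if n_q_heads % n_kv_heads != 0:
        raise ValueError("n_q_heads must be a multiple of n_kv_heads")
    group_size = n_q_heads // n_kv_heads  # query heads per shared K/V head
    return q_head // group_size


def gqa_attention(q: Tensor3, k: Tensor3, v: Tensor3) -> Tensor3:
    """Scaled dot-product attention where groups of query heads share K/V heads.

    No causal mask or batching; this only illustrates the head-sharing pattern.
    """
    n_q_heads, n_kv_heads = len(q), len(k)
    out: Tensor3 = []
    for h in range(n_q_heads):
        g = kv_group_for_head(h, n_q_heads, n_kv_heads)
        head_out = []
        for query in q[h]:
            scale = 1.0 / math.sqrt(len(query))
            scores = [scale * sum(a * b for a, b in zip(query, key)) for key in k[g]]
            m = max(scores)  # subtract the max for numerical stability
            weights = [math.exp(s - m) for s in scores]
            total = sum(weights)
            weights = [w / total for w in weights]
            dim = len(v[g][0])
            # Weighted sum of the shared value vectors, one output channel at a time.
            head_out.append(
                [sum(w * value[c] for w, value in zip(weights, v[g])) for c in range(dim)]
            )
        out.append(head_out)
    return out


def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   seq_len: int, batch: int = 1, bytes_per_elem: int = 2) -> int:
    """Size of the key/value cache; the leading 2 counts keys plus values."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * batch * bytes_per_elem


if __name__ == "__main__":
    # Layer and head counts from the article; head_dim=64 and fp16 are assumptions.
    gqa = kv_cache_bytes(n_layers=20, n_kv_heads=8, head_dim=64, seq_len=2048)
    mha = kv_cache_bytes(n_layers=20, n_kv_heads=32, head_dim=64, seq_len=2048)
    print(f"GQA (8 KV heads): {gqa / 2**20:.0f} MiB")
    print(f"MHA (32 KV heads): {mha / 2**20:.0f} MiB")
```

Run as a script, this prints 80 MiB for the 8-key-value-head layout and 320 MiB for a hypothetical variant with one key-value head per query head. The 4x ratio follows directly from 32 / 8; actual savings depend on DeciCoder's real configuration and inference setup.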

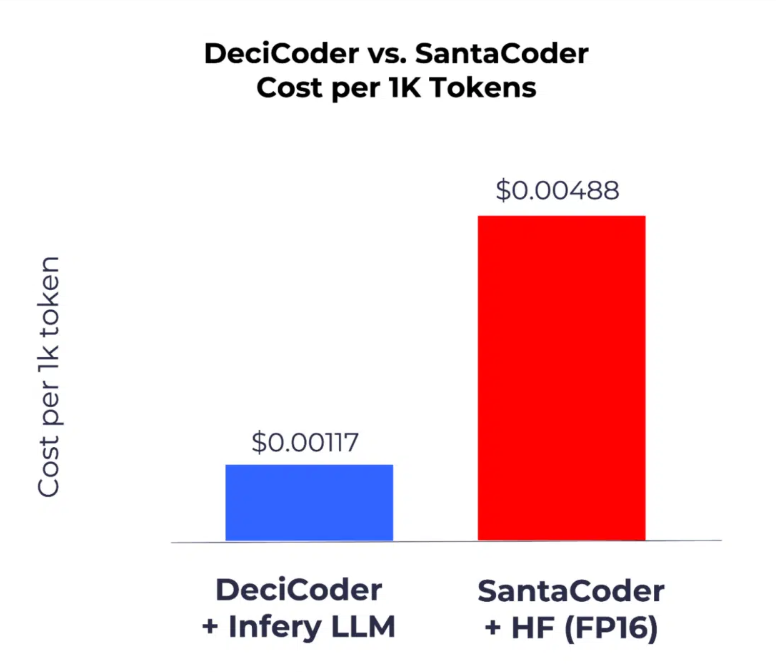

DeciCoder's development reflects a consistent focus on efficiency, and the practical gains are significant. By pairing DeciCoder with Infery LLM, Deci's dedicated inference engine, users can achieve throughput 3.5 times higher than SantaCoder's. The benefits go beyond speed to sustainability: according to Deci, DeciCoder reduces carbon emissions by 324 kg CO2 per model instance on an A10G GPU, a promising step toward environmentally conscious AI.

DeciCoder is not an isolated effort; it is part of Deci's broader approach to AI efficiency. As the company rolls out high-efficiency foundation LLMs and text-to-image models, developers can expect an upcoming generative AI SDK for fine-tuning, optimizing, and deploying these models. The suite aims to extend these efficiency benefits to large enterprises and smaller players alike, making advanced AI more accessible.

DeciCoder's story isn't confined to its architecture and benchmarks; it is also about access. DeciCoder is released under a permissive license, so developers and businesses can integrate it into their projects, including commercial applications, with minimal constraints. This openness aligns with Deci's mission to accelerate innovation and growth across industries.

Overall, DeciCoder demonstrates what an efficiency-first approach to AI can deliver. By combining AutoNAC™, Grouped Query Attention, and a dedicated inference engine, it offers a high-performing and more environmentally conscious code generation model. Its release signals to the AI community that models can become faster and cheaper to run while using fewer resources. It's not just code; it's a step toward a more sustainable and efficient AI future.

Check out the Reference Article and Project. All credit for this research goes to the researchers on this project.


Madhur Garg is a consulting intern at MarktechPost. He is currently pursuing his B.Tech in Civil and Environmental Engineering at the Indian Institute of Technology (IIT), Patna. He has a strong passion for machine learning and enjoys exploring the latest technological advancements and their practical applications. With a keen interest in artificial intelligence and its diverse applications, Madhur is determined to contribute to the field of data science and its impact across industries.