Reinforcement learning (RL) continues to evolve as researchers refine algorithms that learn from human feedback. This line of work addresses the challenge of defining and optimizing reward functions so that models can be trained on tasks ranging from gaming to language processing.

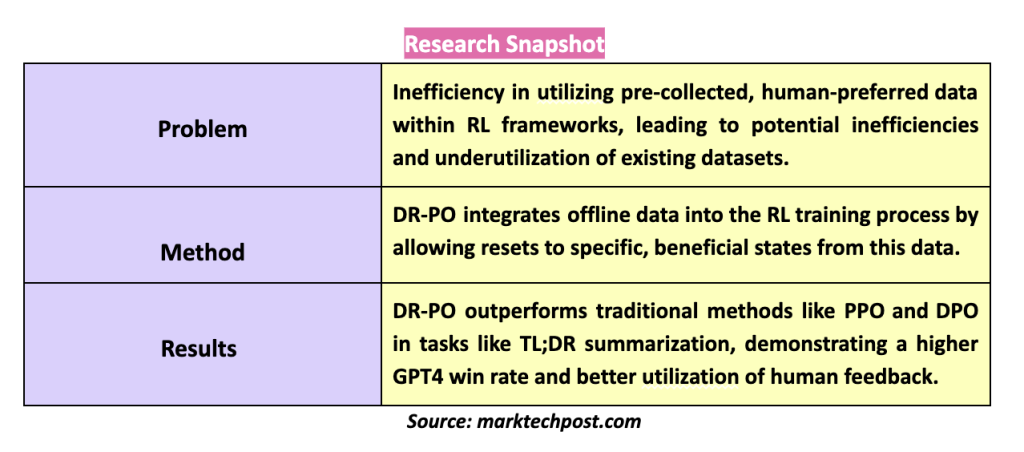

A common problem in this area is the inefficient use of previously collected human preference datasets, which are often overlooked during RL training. Traditionally, these models are trained from scratch, ignoring the rich information in existing datasets. This disconnect leads to inefficiency and leaves valuable pre-existing knowledge unused. Recent work has introduced methods that integrate offline data into the RL training process to address this inefficiency.

Researchers from Cornell University, Princeton University, and Microsoft Research introduced a new algorithm, Dataset Reset Policy Optimization (DR-PO). The method incorporates pre-existing data into the training procedure and is distinguished by its ability to reset directly to specific states from an offline dataset during policy optimization. This contrasts with traditional methods, which start each training episode from a generic initial state.

The DR-PO method makes better use of offline data by allowing the model to “reset” to specific states already identified as useful in that data. This mirrors real-world conditions, where scenarios rarely start from scratch and are instead shaped by previous events or states. By leveraging this data, DR-PO improves the efficiency of the learning process and broadens the range of applications for the trained models.
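To make the idea of a dataset reset concrete, here is a minimal sketch. It assumes, for illustration only, that a "state" in text generation is a prompt followed by a partial response, and that a reset picks a random-length prefix of a response stored in the offline data. The function name `sample_reset_state` and the toy tokens are hypothetical and are not taken from the authors' code.

```python
import random
from typing import List, Sequence


def sample_reset_state(
    prompt: Sequence[str],
    offline_response: Sequence[str],
    rng: random.Random,
) -> List[str]:
    """Return the prompt plus a random-length prefix of an offline response.

    Assumption: a state is (prompt + partial response), so resetting means
    starting generation from somewhere inside a response in the dataset.
    """
    # randint is inclusive on both ends: 0 keeps only the prompt,
    # len(offline_response) keeps the whole stored response.
    cut = rng.randint(0, len(offline_response))
    return list(prompt) + list(offline_response[:cut])


rng = random.Random(0)
prompt = ["Summarize", ":", "the", "cat", "sat", "on", "the", "mat"]
response = ["A", "cat", "sat", "on", "a", "mat", "."]

state = sample_reset_state(prompt, response, rng)
print(state)  # the prompt followed by some prefix of the offline response
```

The policy would then continue generating from `state` rather than from the bare prompt, which is the contrast with starting every episode from a generic initial state.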

DR-PO employs a hybrid strategy that combines online and offline data. It takes advantage of the informative offline dataset by resetting the policy to states from responses that human labelers previously identified as valuable, then collecting new online data from those states. This approach has shown promising improvements over traditional techniques, which often ignore the insights available in previously collected data.
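One way to picture the hybrid of online and offline data is a rule that decides, per episode, whether to start from the plain prompt or from a state taken from the offline dataset. The sketch below is an illustration under stated assumptions: the mixing probability `reset_prob`, the helper `choose_start_state`, and the toy data are hypothetical, and the article does not specify how DR-PO actually mixes the two.

```python
import random
from typing import List, Sequence, Tuple


def choose_start_state(
    prompt: Sequence[str],
    offline_response: Sequence[str],
    reset_prob: float,
    rng: random.Random,
) -> Tuple[List[str], bool]:
    """Pick the start state for one episode.

    With probability `reset_prob` (a hypothetical knob), reset to a prefix
    of the offline response; otherwise start from the prompt alone.
    Returns the start state and a flag saying whether a reset happened.
    """
    if rng.random() < reset_prob:
        cut = rng.randint(0, len(offline_response))
        return list(prompt) + list(offline_response[:cut]), True
    return list(prompt), False


rng = random.Random(0)
prompt = ["Summarize", ":", "long", "post"]
response = ["Short", "summary", "."]

# Count how often episodes begin from a dataset state.
num_episodes = 1000
num_resets = sum(
    choose_start_state(prompt, response, 0.5, rng)[1]
    for _ in range(num_episodes)
)
print(f"{num_resets} of {num_episodes} episodes started from a dataset state")
```

Setting `reset_prob` to 0 recovers the conventional setup in which every episode starts from the initial state, and setting it to 1 always starts from the offline data.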

DR-PO has shown strong results in studies involving tasks such as TL;DR summarization and the Anthropic Helpful and Harmless (HH) dataset, outperforming established methods such as Proximal Policy Optimization (PPO) and Direct Preference Optimization (DPO). In the TL;DR summarization task, DR-PO achieved a higher GPT-4 win rate, indicating better quality in the generated summaries. In direct comparisons, DR-PO's approach of integrating resets and offline data consistently performed better on the reported metrics.
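For readers unfamiliar with the metric, a win rate is simply the fraction of pairwise comparisons in which a judge prefers the candidate model's output. The sketch below assumes each comparison yields a single label naming the winner. The labels and the example list are made up for illustration and are not results from the paper.

```python
from typing import Iterable


def win_rate(judgments: Iterable[str], candidate: str = "candidate") -> float:
    """Fraction of pairwise comparisons won by `candidate`.

    Each judgment is a label naming the preferred output, for example as
    returned by a judge model. Ties are not modeled in this sketch.
    """
    judgments = list(judgments)
    if not judgments:
        raise ValueError("need at least one judgment")
    wins = sum(1 for label in judgments if label == candidate)
    return wins / len(judgments)


# Made-up judgments: the candidate wins 3 of 4 comparisons.
example = ["candidate", "reference", "candidate", "candidate"]
rate = win_rate(example)
print(rate)  # 0.75
```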

In conclusion, DR-PO represents a significant advance in RL. It overcomes traditional inefficiencies by integrating previously collected human preference data into the RL training process, and it improves learning efficiency by resetting to specific states identified in offline datasets. Empirical evidence shows that DR-PO outperforms conventional approaches such as Proximal Policy Optimization and Direct Preference Optimization on tasks such as TL;DR summarization, achieving higher GPT-4 win rates. The approach streamlines the training process and makes fuller use of existing human feedback, setting a new benchmark for using offline data in model optimization.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.

Hello, my name is Adnan Hassan. I'm a consulting intern at Marktechpost and will soon be a management trainee at American Express. I am currently pursuing a dual degree at the Indian Institute of Technology, Kharagpur. I am passionate about technology and want to create new products that make a difference.
