In the modern world, most companies rely on the power of big data and analytics to fuel their growth, strategic investments, and customer engagement. Big data is the underlying constant in targeted advertising, personalized marketing, product recommendations, insight generation, pricing optimizations, sentiment analysis, predictive analytics, and much more.

Data is often collected from multiple sources, transformed, stored, and processed in on-premises or cloud data lakes. While initial data ingestion is relatively trivial and can be accomplished through custom scripts developed in-house or traditional Extract Transform Load (ETL) tools, the problem quickly becomes prohibitively complex and costly to resolve as companies must:

- Manage the entire data lifecycle, for cleansing and compliance purposes

- Optimize storage – to reduce associated costs

- Simplify architecture: by reusing IT infrastructure

- Process data incrementally, through powerful state management

- Apply the same policies across batches and data streams, without duplicating efforts

- Migrate between on-premises and the cloud, with minimal effort

is where apache goblinan open source data management and integration system. Apache Gobblin provides unmatched capabilities that can be used in whole or in parts, depending on business needs.

In this section, we’ll dive into the various Apache Gobblin capabilities that help address the challenges described above.

Full data lifecycle management

Apache Gobblin provides a range of capabilities for building data pipelines that support the full set of data lifecycle operations on data sets.

- Ingest data – from multiple sources to sinks ranging from databases, rest APIs, FTP/SFTP servers, filing cabinets, CRMs like Salesforce and Dynamics, and more.

- Replicate Data – Across multiple data lakes with specialized capabilities for the Hadoop Distributed File System via Distcp-NG.

- Purge data: Using retention policies such as time-based, latest K, versioned, or a combination of policies.

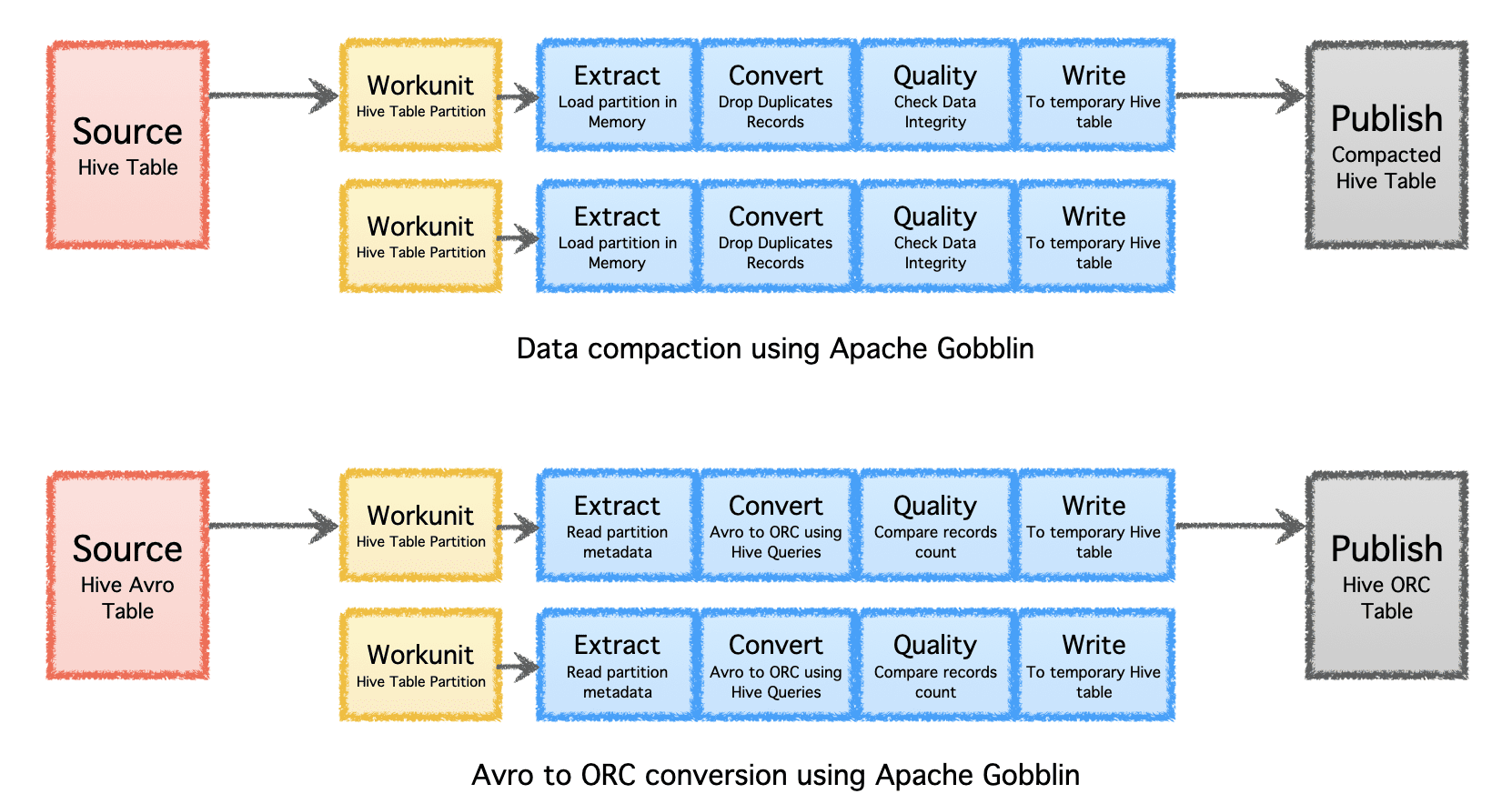

The Gobblin Logical Pipeline consists of a ‘Source’ which determines the distribution of work and creates ‘Work Units’. These ‘units of work’ are then selected for execution as ‘tasks’, which include extracting, converting, QA and writing data to the destination. The final step, ‘Publish Data’, validates the successful execution of the pipeline and atomically commits the output data, if supported by the target.

Image by author

Optimize storage

Apache Gobblin can help reduce the amount of storage required for data by post-processing the data after ingestion or replication using compaction or format conversion.

- Compaction – Post-processing of data to deduplicate based on all fields or key fields of the records, trimming the data to keep only one record with the last timestamp with the same key.

- Avro to ORC – As a specialized format conversion mechanism to convert the popular row-based Avro format into a hyper-optimized column-based ORC format.

Image by author

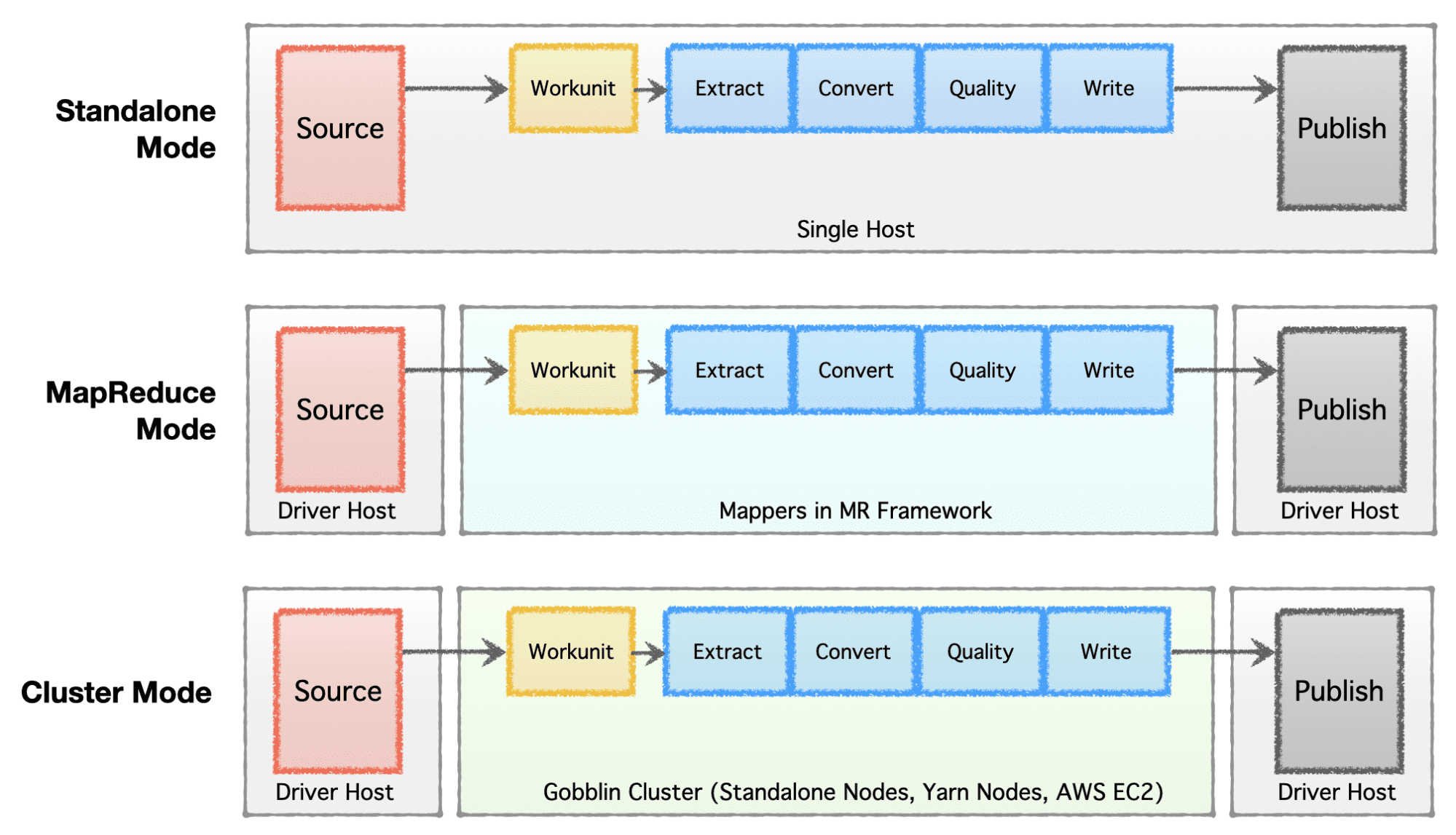

Simplify architecture

Depending on the stage of the company (startup to company), scale requirements, and their respective architecture, companies prefer to configure or evolve their data infrastructure. Apache Gobblin is very flexible and supports multiple execution models.

- Standalone Mode – To run as a standalone process on a full box, i.e. a single host for simple use cases and low demand situations.

- MapReduce mode – to run as a MapReduce job on the Hadoop infrastructure for big data scenarios to handle data sets that range in scale to Petabytes.

- Cluster mode: Standalone – To run as a cluster backed by Apache Helix and Apache Zookeeper on a set of machines or bare metal hosts to handle large scale independent of the Hadoop MR framework.

- Cluster Mode: Yarn – To run as a cluster on native Yarn without the Hadoop MR framework.

- Cluster Mode: AWS – To run as a cluster on Amazon’s public cloud offering, ie. AWS for infrastructures hosted on AWS.

Image by author

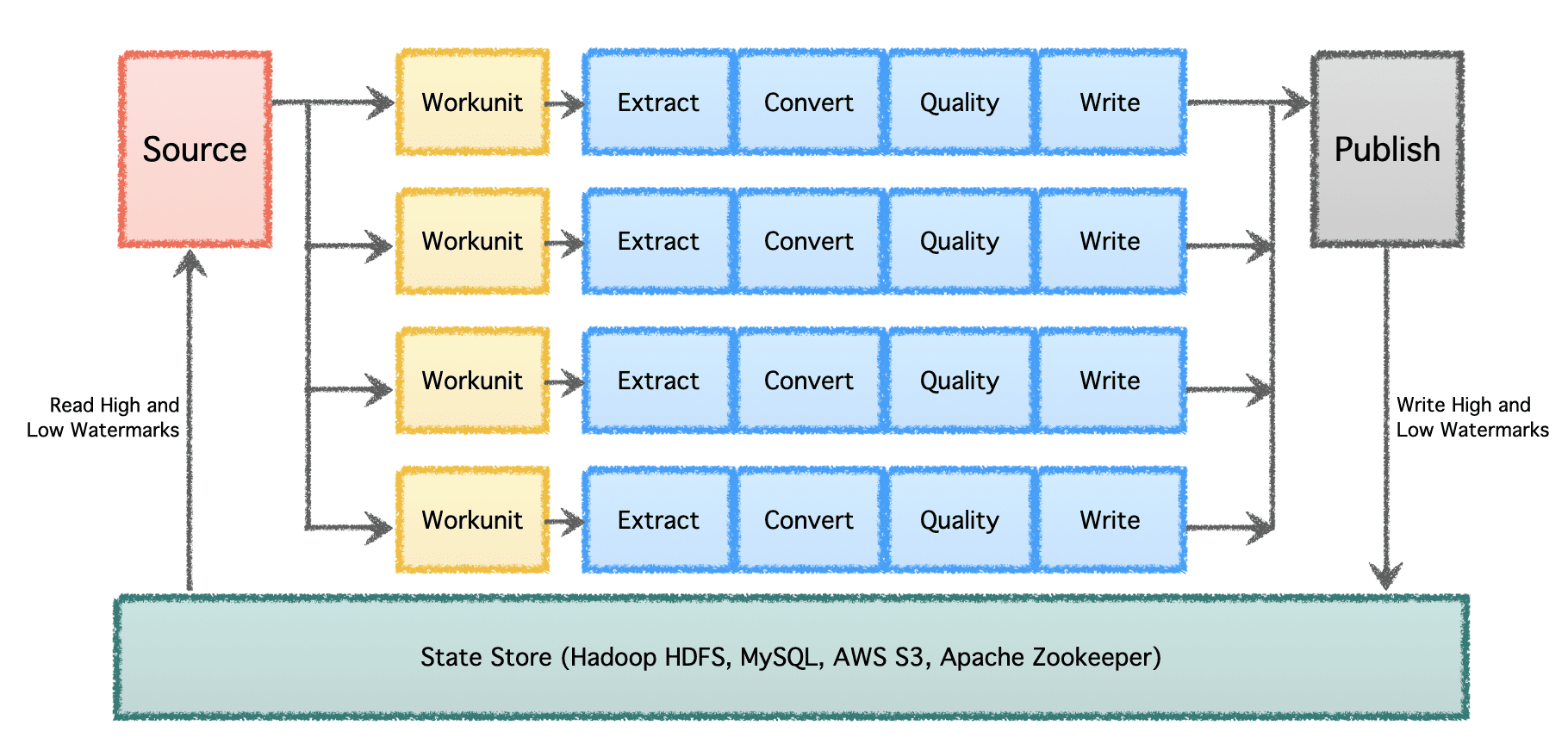

Process data incrementally

At significant scale with multiple data pipelines and high volume, data must be processed in batches and over time. Therefore, it is necessary to establish checkpoints so that data pipelines can pick up from where they last left off and continue. Apache Gobblin supports low and high watermarks and supports strong state management semantics via the State Store on HDFS, AWS S3, MySQL, and more transparently.

Image by author

Same policies on batch and stream data

Most of today’s data pipelines must be written twice, once for batch data and once for near-line or streaming data. It duplicates the effort and introduces inconsistencies in the policies and algorithms applied to different types of pipelines. Apache Gobblin solves this by allowing users to create a pipeline once and run it on both batch and stream data whether used in Gobblin Cluster mode, Gobblin in AWS mode, or Gobblin in Yarn mode.

Migrate between on-premises and cloud

Due to its versatile modes that can run locally on a single box, a node pool, or the cloud, Apache Gobblin can be deployed and used on-premises and in the cloud. Thus, allowing users to write their data pipelines once and migrate them along with Gobblin deployments easily between on-premises and cloud based on specific needs.

Due to its highly flexible architecture, powerful features, and the extreme scale of data volumes it can support and process, Apache Gobblin is used in the production infrastructure of major technology companies and is a must for any big data infrastructure implementation today.

More details about Apache Gobblin and how to use it can be found at https://gobblin.apache.org

Abhishek Tiwari is a senior manager at LinkedIn and leads the company’s Big Data Pipelines organization. He is also Vice President of Apache Gobblin at the Apache Software Foundation and a Fellow of the British Computer Society.

NEWSLETTER

NEWSLETTER