Image of DALL-E 3

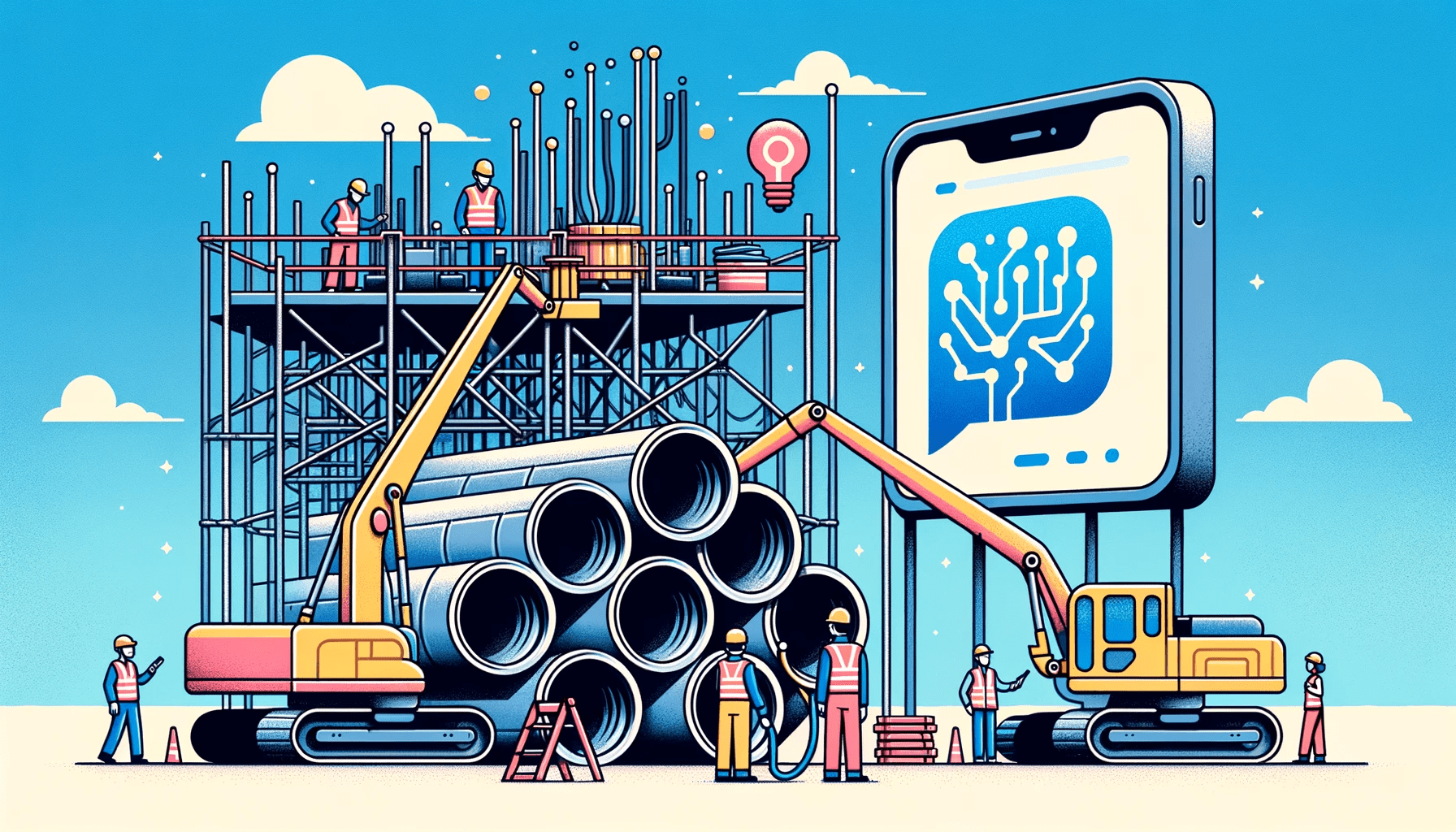

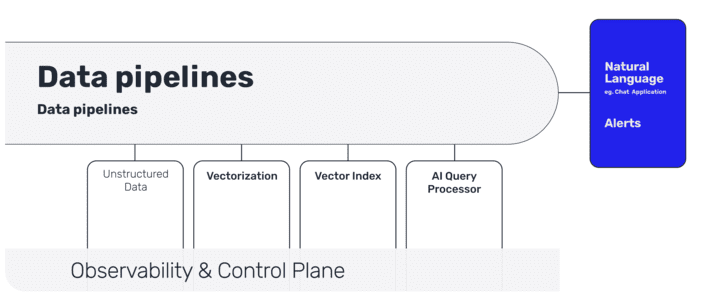

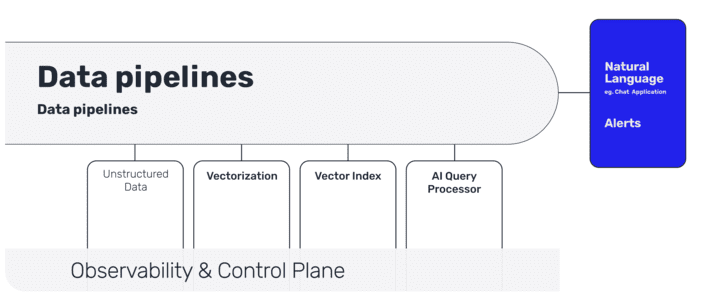

Currently, companies follow two approaches for LLM-driven applications: Fine tuning and Recovery Augmented Generation (RAG). At a very high level, RAG takes an input and retrieves a set of relevant/supporting documents given a source (e.g. company wiki). The documents are concatenated as context with the original input message and sent to the LLM model that produces the final response. RAG seems to be the most popular approach to bringing LLMs to market, especially in real time processing scenarios. The LLM architecture to support that most of the time includes creating an effective data pipeline.

In this post, we’ll explore different stages in the LLM data pipeline to help developers implement production-grade systems that work with their data. Follow the instructions to learn how to ingest, prepare, enrich, and serve data to power GenAI applications.

These are the different stages of an LLM process:

Data ingestion of unstructured data.

Vectorization with enrichment (with metadata)

Vector indexing (with real-time synchronization)

ai query processor

User interaction in natural language (with chat or API)

Data ingestion of unstructured data.

The first step is to collect the right data to help with business objectives. If you are creating a consumer-facing chatbot, you need to pay special attention to the data that will be used. Data sources can range from a company portal (e.g. Sharepoint, Confluent, document storage) to internal APIs. Ideally, you would want to have a mechanism for inserting these sources into the index so that your LLM application is up-to-date for your end consumer.

Organizations should implement data governance policies and protocols when extracting text data for in-context LLM training. Organizations can start by auditing document data sources to catalog sensitivity levels, license terms, and origin. Identify restricted data that needs redaction or exclusion from data sets.

The quality of these data sources must also be evaluated: diversity, size, noise levels, redundancy. Lower quality data sets will dilute the responses of Master of Laws applications. You may even need a document classification mechanism early to help with the right type of storage later in the process.

Adhering to data governance guardrails, even in accelerated LLM development, reduces risks. Establishing upfront governance mitigates many issues down the road and enables robust, scalable extraction of text data for contextual learning.

Pulling messages through Slack, Telegram, or Discord APIs provides access to real-time data is what helps RAG, but raw conversational data contains noise: typos, encoding issues, strange characters. Filtering real-time messages with offensive content or sensitive personal details that could be PII is an important part of data cleansing.

Vectorization with metadata

Metadata such as the author, date, and context of the conversation further enrich the data. This incorporation of external knowledge into vectors helps in smarter and more specific retrieval.

Some of the metadata related to the documents may be found in the portal or in the metadata of the document itself; However, if the document is attached to a business object (e.g. case information, customer, employee), then you will need to obtain that information from a relational database. . If there are security concerns around data access, this is a place where you can add security metadata that also helps with the recovery stage later.

A critical step here is to convert text and images into vector representations using the LLM embedding models. For documents, you should first perform fragmentation and then encode, preferably using local zero-shot embedding models.

Vector indexing

Vector representations must be stored somewhere. This is where vector databases or vector indexes are used to efficiently store and index this information as embeddings.

This becomes your “LLM source of truth” and should be in sync with your data sources and documents. Real-time indexing It becomes important if your LLM application serves clients or generates business-related information. You want to prevent your LLM application from being out of sync with your data sources.

Fast recovery with a query processor

When you have millions of business documents, getting the right content based on the user’s query becomes a challenge.

This is where the early stages of the process begin to add value: cleaning and enriching data by adding metadata and, most importantly, indexing data. This addition in context helps strengthen rapid engineering.

User interaction

In a traditional pipeline environment, you send data to a data warehouse and the analytics tool will pull reports from the warehouse. In an LLM process, an end-user interface is typically a chat interface that, at the simplest level, takes a query from the user and responds to the query.

The challenge with this new type of pipe is not only getting a prototype, but also putting it into production. This is where an enterprise-grade monitoring solution becomes important to track your vector pipelines and warehouses. The ability to obtain business data from structured and unstructured data sources becomes an important architectural decision. LLMs represent the state-of-the-art in natural language processing, and creating enterprise-grade data pipelines for LLM-powered applications keeps it ahead of the curve.

Here you have access to an available source. real-time stream processing framework.

Anup Surendran is vice president of products and product marketing and specializes in bringing ai products to market. She has worked with startups that have had two successful exits (to SAP and Kroll) and enjoys teaching others about how ai products can improve productivity within an organization.

NEWSLETTER

NEWSLETTER