Introduction

In today's fast-paced world of local food delivery, ensuring customer satisfaction is key for businesses. Big players like Zomato and Swiggy dominate this industry. Customers expect fresh food; if they receive damaged or spoiled items, they expect a refund or a discount voucher. However, manually verifying the freshness of the food in each complaint is cumbersome for both customers and company staff. One solution is to automate this check using deep learning models. These models can predict food freshness, so employees only need to review flagged complaints for final validation. If the model confirms that the food is fresh, the complaint can be dismissed automatically. In this article, we will build a food quality detector using deep learning.

Deep learning, a subset of artificial intelligence, is well suited to this task. Specifically, convolutional neural networks (CNNs) can be trained on images of food to recognize whether it is fresh. The accuracy of our model depends heavily on the quality of the dataset. Ideally, training on real food images that users submit through complaint chatbots in hyperlocal food delivery apps would greatly improve accuracy. Since we lack access to such data, we rely on a widely used Kaggle dataset, the “Fresh and Stale Classification” dataset. To explore the complete deep learning code, simply click the “Copy and Edit” button provided here.

Learning objectives

- Learn the importance of food quality in customer satisfaction and business growth.

- Find out how deep learning helps build a food quality detector.

- Gain hands-on experience through a step-by-step implementation of this model.

- Understand the challenges and solutions involved in its implementation.

This article was published as part of the Data Science Blogathon.

Understanding the use of deep learning in food quality detection

Deep learning, a subset of artificial intelligence, builds models from large amounts of data using neural networks. These networks are loosely inspired by the way neurons in the human brain are connected. Convolutional neural networks in particular are designed for spatial data such as images.

In the context of food quality detection, training deep learning models on large sets of food images is essential for accurately distinguishing between good-quality and poor-quality food. We can then tune hyperparameters (such as the learning rate, batch size, or number of epochs) against a validation set to make the model more accurate.
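Hyperparameter tuning can be as simple as trying every combination from a small grid and keeping the one with the best validation score. The sketch below is a generic, framework-agnostic grid search, not the author's code. 'toy_evaluate' is a hypothetical stand-in for a function that would build, train, and score the CNN for one set of hyperparameters.

```python
import itertools


def grid_search(evaluate, grid):
    """Try every combination in `grid`; return (best_params, best_score).

    `evaluate` should train a model with the given keyword arguments and return
    a validation score where higher is better (e.g. validation accuracy).
    """
    keys = sorted(grid)
    best_params, best_score = None, float("-inf")
    for values in itertools.product(*(grid[k] for k in keys)):
        params = dict(zip(keys, values))
        score = evaluate(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_evaluate(learning_rate, dropout):
    # Hypothetical stand-in: a real version would build, train, and validate the CNN.
    return -abs(learning_rate - 1e-3) - abs(dropout - 0.5)


grid = {"learning_rate": [1e-2, 1e-3, 1e-4], "dropout": [0.3, 0.5]}
best_params, best_score = grid_search(toy_evaluate, grid)
print(best_params)  # {'dropout': 0.5, 'learning_rate': 0.001}
```

Each combination means a full training run, so the grid should stay small. Scoring on the validation split rather than the Test folder keeps the test images unseen for the final evaluation.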

Importance of food quality in hyperlocal delivery

Integrating this feature into hyperlocal food delivery offers several benefits. The model applies the same criteria to every complaint regardless of the customer, and it resolves clear-cut cases automatically, which reduces complaint resolution time. We can also use the same model during order packaging to inspect food quality before delivery, which helps ensure that customers consistently receive fresh food.
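The complaint workflow from the introduction boils down to a threshold rule on the model's output: flag complaints for staff review, and dismiss them automatically when the model says the food is fresh. The sketch below assumes the model returns a probability that the food is rotten, as the sigmoid output used later in this article does. The 0.2 cutoff and the function name are hypothetical choices.

```python
def triage_complaint(rotten_probability: float, dismiss_below: float = 0.2) -> str:
    """Route a complaint using the model's estimated probability that the food is rotten.

    `dismiss_below` is a hypothetical cutoff; it should be tuned on real complaints.
    """
    if not 0.0 <= rotten_probability <= 1.0:
        raise ValueError("rotten_probability must be between 0 and 1")
    # Auto-dismiss only when the model is confident the food is fresh;
    # everything else goes to a staff member for final validation.
    if rotten_probability < dismiss_below:
        return "auto_dismiss"
    return "flag_for_review"


for p in (0.05, 0.5, 0.95):
    print(p, triage_complaint(p))
```

The cutoff is deliberately stricter than 0.5. Wrongly dismissing a genuine complaint costs more customer goodwill than sending an extra case to manual review.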

Development of a food quality detector

Building this feature end to end involves several steps:

- obtaining and cleaning the dataset;
- training the deep learning model;
- evaluating its performance and tuning hyperparameters;
- saving the model in HDF5 (.h5) format.

After that, we can implement the frontend using React and the backend using the Python framework Django. Django will handle image upload and processing.

About the data set

Before delving into data preprocessing and model building, it is essential to understand the dataset. As discussed above, we will use the Kaggle dataset “Fresh and Stale Classification”. It is divided into two main folders, Train and Test, used for training and testing respectively. The Train folder contains 9 subfolders of fresh fruits and vegetables and 9 subfolders of rotten fruits and vegetables.
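The Train folder has 18 produce-specific subfolders, but the model in this article makes a single fresh-versus-rotten decision with class_mode="binary". Its labels therefore only mean fresh/rotten if the images sit in exactly two class folders. One possible way to arrange that is to copy every image into a 'fresh' or 'rotten' folder based on the prefix of its subfolder's name. This is an assumption on our part, not necessarily what the original notebook does, and the function name and output path are hypothetical.

```python
import os
import shutil


def regroup_fresh_rotten(src_dir, dst_dir):
    """Copy images from per-produce folders (e.g. 'freshapples', 'rottenbanana')
    into dst_dir/fresh and dst_dir/rotten; return how many files went where."""
    counts = {"fresh": 0, "rotten": 0}
    for folder in sorted(os.listdir(src_dir)):
        folder_path = os.path.join(src_dir, folder)
        if not os.path.isdir(folder_path):
            continue
        # Assumes every subfolder name starts with 'fresh' or 'rotten'.
        if folder.startswith("fresh"):
            label = "fresh"
        elif folder.startswith("rotten"):
            label = "rotten"
        else:
            raise ValueError(f"unexpected folder name: {folder}")
        target_dir = os.path.join(dst_dir, label)
        os.makedirs(target_dir, exist_ok=True)
        for fname in sorted(os.listdir(folder_path)):
            src_file = os.path.join(folder_path, fname)
            if not os.path.isfile(src_file):
                continue
            # Prefix with the source folder so same-named files cannot collide.
            shutil.copy2(src_file, os.path.join(target_dir, f"{folder}_{fname}"))
            counts[label] += 1
    return counts
```

On Kaggle the input folder is read-only, so a call such as regroup_fresh_rotten(train_dir, "/kaggle/working/train_binary") would write to the working directory instead (an assumed path). Pointing train_dir at that folder then gives flow_from_directory exactly two classes, which Keras indexes alphanumerically as fresh = 0 and rotten = 1. Copying does double the disk space used.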

Key features of the data set

- Variety of images: The dataset contains many food images that vary in angle, background, and lighting conditions. This variety helps the model generalize instead of latching onto one particular setting, which makes it more accurate.

- High-quality images: The dataset contains good-quality images captured with several professional cameras.

Data loading and preparation

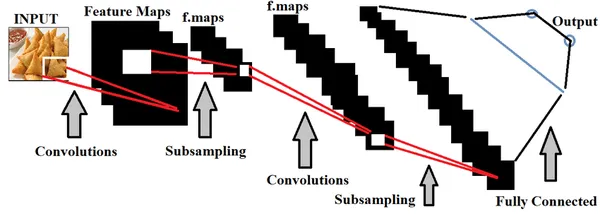

In this section, we first load the images using 'tensorflow.keras.preprocessing.image.load_img' and display them with the matplotlib library. Preprocessing these images before model training is important: it involves cleaning and organizing them so that they are suitable for the model.

```python
import os

import matplotlib.pyplot as plt
from tensorflow.keras.preprocessing.image import load_img


def visualize_sample_images(dataset_dir, categories):
    """Show the first image from each category folder side by side."""
    n = len(categories)
    fig, axs = plt.subplots(1, n, figsize=(20, 5))
    for i, category in enumerate(categories):
        folder = os.path.join(dataset_dir, category)
        # sorted() makes the choice of "first" image deterministic
        image_file = sorted(os.listdir(folder))[0]
        img_path = os.path.join(folder, image_file)
        img = load_img(img_path)
        axs[i].imshow(img)
        axs[i].set_title(category)
    plt.tight_layout()
    plt.show()


dataset_base_dir = "/kaggle/input/fresh-and-stale-classification/dataset"
train_dir = os.path.join(dataset_base_dir, 'Train')
categories = ['freshapples', 'rottenapples', 'freshbanana', 'rottenbanana']
visualize_sample_images(train_dir, categories)
```

Now let's load the training and validation images. We will resize all images to the same height and width of 180 pixels, rescale their pixel values, and apply random augmentation to them.

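```python
from tensorflow.keras.preprocessing.image import ImageDataGenerator

batch_size = 32
img_height = 180
img_width = 180

train_datagen = ImageDataGenerator(
    rescale=1./255,          # scale pixel values from [0, 255] to [0, 1]
    rotation_range=40,       # random rotations of up to 40 degrees
    width_shift_range=0.2,   # random horizontal shifts (fraction of width)
    height_shift_range=0.2,  # random vertical shifts (fraction of height)
    shear_range=0.2,
    zoom_range=0.2,
    horizontal_flip=True,
    fill_mode="nearest",     # fill pixels exposed by shifts and rotations
    validation_split=0.2)    # hold out 20% of the images for validation
```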

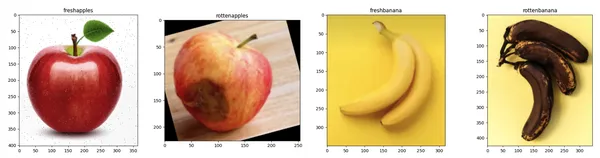

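```python
# Both generators read from the same folder; `subset` selects the training
# or validation portion defined by validation_split above.
# Note: class_mode="binary" uses each image's class index as its label, so the
# labels mean fresh/rotten only when train_dir has exactly two class subfolders.
train_generator = train_datagen.flow_from_directory(
    train_dir,
    target_size=(img_height, img_width),
    batch_size=batch_size,
    class_mode="binary",
    subset="training")

validation_generator = train_datagen.flow_from_directory(
    train_dir,
    target_size=(img_height, img_width),
    batch_size=batch_size,
    class_mode="binary",
    subset="validation")
```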
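Both generators read the same folder with validation_split=0.2, so each class folder is divided into a training part and a validation part. The arithmetic below assumes that the held-out count per class is int(0.2 × n), which is our understanding of how ImageDataGenerator splits each folder. The folder sizes are made-up numbers for illustration.

```python
def split_counts(class_sizes, validation_split=0.2):
    """Return {class: (train_count, validation_count)} for a per-folder split."""
    counts = {}
    for name, n in class_sizes.items():
        n_val = int(validation_split * n)  # assumed per-class truncation
        counts[name] = (n - n_val, n_val)
    return counts


# Hypothetical folder sizes, for illustration only.
print(split_counts({"fresh": 1000, "rotten": 1203}))
# {'fresh': (800, 200), 'rotten': (963, 240)}
```

Because validation_generator is created from train_datagen, the validation images also receive the random rotations, shifts, and flips. A separate ImageDataGenerator(rescale=1./255, validation_split=0.2) used only for the validation subset would evaluate on unaugmented images instead.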

Model construction

Now let's build the deep learning model using the Sequential API of 'tensorflow.keras'. We will stack 3 convolutional layers and compile the model with the Adam optimizer. Before moving on to the code, let us first understand what the terms 'Sequential model', 'Adam Optimizer', and 'Convolutional layer' mean.

Sequential model

The Sequential model is a linear stack of layers and the most basic way to build a model in Keras. It is ideal when the network has a single input tensor and a single output tensor. Layers run in the order in which they are added. This makes the Sequential model well suited to simple stacked architectures and easy to implement.

Adam Optimizer

Adam stands for “Adaptive Moment Estimation”. It is an optimization algorithm used as an alternative to classic stochastic gradient descent for updating the network weights iteratively. Instead of using one fixed learning rate, Adam adapts the step size for each network weight. It bases each step on running averages of that weight's gradients and squared gradients, which helps it cope with noisy gradients.
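To make the idea of per-weight adaptive steps concrete, here is the textbook Adam update for a single scalar weight, written in plain Python. It is an illustrative sketch rather than Keras' internal implementation. The defaults mirror the documented Keras defaults (learning rate 0.001, beta_1 = 0.9, beta_2 = 0.999, epsilon = 1e-7), and the toy objective (w - 3)^2 is chosen only for demonstration.

```python
import math


def adam_step(param, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-7):
    """Apply one Adam update to a scalar weight; return (param, m, v).

    t is the 1-based step count, needed for bias correction.
    """
    m = beta1 * m + (1 - beta1) * grad         # running mean of gradients (1st moment)
    v = beta2 * v + (1 - beta2) * grad * grad  # running mean of squared gradients (2nd moment)
    m_hat = m / (1 - beta1 ** t)               # correct the bias from starting m at 0
    v_hat = v / (1 - beta2 ** t)               # correct the bias from starting v at 0
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v


# Toy example: minimize f(w) = (w - 3)^2, whose gradient is 2 * (w - 3).
w, m, v = 0.0, 0.0, 0.0
for t in range(1, 1001):
    grad = 2 * (w - 3)
    w, m, v = adam_step(w, grad, m, v, t, lr=0.1)
print(round(w, 3))
```

The step divides the first-moment estimate by the square root of the second-moment estimate. As a result, the very first update has a size of roughly lr whether the gradient is -6 or -600. This scale invariance is what gives every weight a sensible step size.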

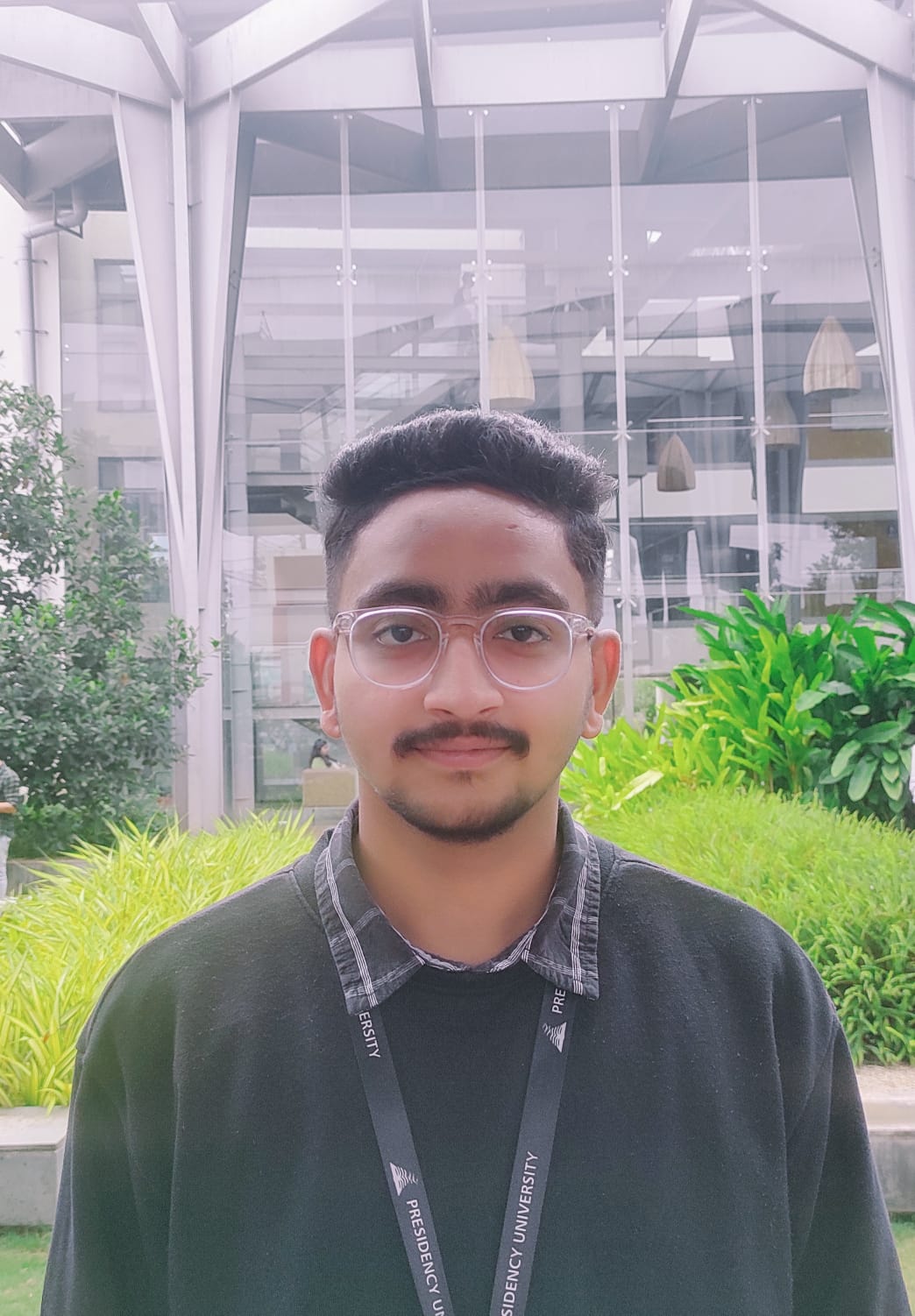

Convolutional layer (Conv2D)

The convolutional layer is the main building block of convolutional neural networks (CNNs) and is mainly used to process spatial data such as images. It slides a set of learnable filters (kernels) over its input, applying the convolution operation at each position. It then passes the resulting feature maps to the next layer.
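To see what the three Conv2D/MaxPooling2D pairs in the model below do to a 180 × 180 RGB input, the feature-map size and parameter count can be traced by hand. The sketch assumes Keras' default 'valid' padding and stride 1 for Conv2D, and non-overlapping 2 × 2 pooling, matching MaxPooling2D(2, 2).

```python
def conv_stack_summary(size=180, channels=3, filters=(32, 64, 128),
                       kernel=3, pool=2, dense_units=512):
    """Trace the spatial size and parameter count through the model's layers.

    Assumes 'valid' padding and stride 1 for each Conv2D, and 2x2 pooling with
    stride 2 for each MaxPooling2D, as in the model definition.
    """
    params = 0
    for f in filters:
        params += kernel * kernel * channels * f + f  # kernel weights + one bias per filter
        size = size - kernel + 1                      # 'valid' conv shrinks by kernel - 1
        size = size // pool                           # 2x2 pooling halves the size (rounding down)
        channels = f
    flat = size * size * channels                     # length of the Flatten() output
    params += flat * dense_units + dense_units        # Dense(512)
    params += dense_units + 1                         # Dense(1, sigmoid); Dropout has no weights
    return size, flat, params


print(conv_stack_summary())  # (20, 51200, 26308673)
```

The feature maps shrink from 180 to 20 pixels per side. About 99.6% of the roughly 26.3 million parameters sit in the Dense(512) layer after Flatten(), and model.summary() should report the same total.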

```python
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Conv2D, MaxPooling2D, Flatten, Dense, Dropout

model = Sequential([
    # Three convolution + pooling blocks extract increasingly abstract features
    Conv2D(32, (3, 3), activation='relu', input_shape=(img_height, img_width, 3)),
    MaxPooling2D(2, 2),
    Conv2D(64, (3, 3), activation='relu'),
    MaxPooling2D(2, 2),
    Conv2D(128, (3, 3), activation='relu'),
    MaxPooling2D(2, 2),
    # Classifier head: flatten the feature maps, then a dense layer with dropout
    Flatten(),
    Dense(512, activation='relu'),
    Dropout(0.5),
    # A single sigmoid unit outputs the probability of label 1
    Dense(1, activation='sigmoid')
])

model.compile(optimizer="adam",
              loss="binary_crossentropy",
              metrics=['accuracy'])

epochs = 10
history = model.fit(
    train_generator,
    steps_per_epoch=train_generator.samples // batch_size,
    epochs=epochs,
    validation_data=validation_generator,
    validation_steps=validation_generator.samples // batch_size)
```
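The fit call above sets steps_per_epoch=train_generator.samples // batch_size. Integer division drops any final partial batch, so each epoch processes steps × batch_size images rather than all of them. The numbers below are hypothetical and only illustrate the arithmetic; math.ceil would count the smaller last batch as well.

```python
import math


def steps_per_epoch(samples, batch_size, drop_last=True):
    """Batches per epoch: floor division drops the last partial batch, ceil keeps it."""
    if drop_last:
        return samples // batch_size
    return math.ceil(samples / batch_size)


samples, batch_size = 1000, 32  # hypothetical dataset size
steps = steps_per_epoch(samples, batch_size)
print(steps, steps * batch_size, samples - steps * batch_size)  # 31 992 8
print(steps_per_epoch(samples, batch_size, drop_last=False))    # 32
```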

Food Quality Detector Test

Now let's test the model on a single food image and check whether it correctly classifies the image as fresh or rotten.

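```python
import numpy as np
from tensorflow.keras.preprocessing import image


def classify_image(image_path, model):
    """Print and return 'Fresh' or 'Rotten' for a single image file."""
    # Apply the same resizing and rescaling that was used during training
    img = image.load_img(image_path, target_size=(img_height, img_width))
    img_array = image.img_to_array(img)
    img_array = np.expand_dims(img_array, axis=0)  # add a batch dimension: (1, 180, 180, 3)
    img_array /= 255.0

    predictions = model.predict(img_array)  # shape (1, 1): the sigmoid output
    # Assumes label 1 means rotten; check train_generator.class_indices to confirm
    label = "Rotten" if predictions[0][0] > 0.5 else "Fresh"
    print(label)
    return label


image_path = ("/kaggle/input/fresh-and-stale-classification/dataset/Train/"
              "rottenoranges/Screen Shot 2018-06-12 at 11.18.28 PM.png")
classify_image(image_path, model)
```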

Given an image of a rotten orange as input, the model correctly predicts Rotten. Note that this image comes from the Train folder, so the model may have seen it during training. Images from the Test folder give a fairer check on unseen data.
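A single image says little about overall quality. Given the model's sigmoid outputs for a labeled held-out set (for example, images from the Test folder), accuracy, precision, and recall can be computed as below. The probabilities and labels here are made up. Treating 1 as 'rotten' is an assumption that should match train_generator.class_indices.

```python
def binary_metrics(probabilities, labels, threshold=0.5):
    """Accuracy, plus precision and recall for the 'rotten' class (label 1)."""
    preds = [1 if p > threshold else 0 for p in probabilities]  # same rule as classify_image
    tp = sum(1 for p, y in zip(preds, labels) if p == 1 and y == 1)
    tn = sum(1 for p, y in zip(preds, labels) if p == 0 and y == 0)
    fp = sum(1 for p, y in zip(preds, labels) if p == 1 and y == 0)
    fn = sum(1 for p, y in zip(preds, labels) if p == 0 and y == 1)
    accuracy = (tp + tn) / len(labels)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall}


# Hypothetical model outputs and ground-truth labels (1 = rotten, 0 = fresh).
probs = [0.9, 0.7, 0.4, 0.2, 0.6]
labels = [1, 1, 1, 0, 0]
print(binary_metrics(probs, labels))
# {'accuracy': 0.6, 'precision': 0.6666666666666666, 'recall': 0.6666666666666666}
```

For complaint handling, recall on the rotten class deserves special attention. Every rotten item predicted as fresh is a genuine complaint that would be dismissed.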

For the frontend (React) and backend (Django) code, you can see my full code on GitHub here: Link

Conclusion

In conclusion, to automate the handling of food quality complaints in hyperlocal delivery applications, we propose a deep learning model integrated with a web application. However, because the training data is limited, the model may not classify every food image accurately. This implementation is a first step toward a broader solution. Access to real-time, user-uploaded images within these applications would significantly improve the model's accuracy.

Key takeaways

- Food quality plays a critical role in achieving customer satisfaction in the hyperlocal food delivery market.

- You can use deep learning technology to train an accurate food quality predictor.

- You gained hands-on experience with a step-by-step guide to building the model behind the web application.

- You have seen why dataset quality matters for building an accurate model.

The media shown in this article is not the property of Analytics Vidhya and is used at the author's discretion.