Meeting notes are a crucial part of collaboration, but they often fall through the cracks. Between leading discussions, listening closely, and typing notes, it's easy for key information to slip away. Even when notes are captured, they can be disorganized or illegible, which makes them useless.

In this post, we explore how to use Amazon Transcribe and Amazon Bedrock to automatically generate clean, concise summaries of video or audio recordings. Whether it's an internal team meeting, a conference session, or an earnings call, this approach can help you distill hours of content into bullet points.

We walk through a solution that transcribes a project team meeting and summarizes the key takeaways with Amazon Bedrock. We also discuss how you can customize this solution for other common scenarios, such as course lectures, interviews, and sales calls. Read on to simplify and automate your note-taking process.

Solution Overview

By combining Amazon Transcribe and Amazon Bedrock, you can save time, capture insights, and improve collaboration. Amazon Transcribe is an automatic speech recognition (ASR) service that makes it easy to add speech-to-text capability to applications. It uses advanced deep learning technologies to accurately transcribe audio into text. Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies such as AI21 Labs, Anthropic, Cohere, Meta, Stability AI, and Amazon through a single API, along with a broad set of capabilities you need to build generative AI applications. With Amazon Bedrock, you can easily experiment with a variety of top FMs and privately customize them with your data using techniques such as fine-tuning and Retrieval Augmented Generation (RAG).

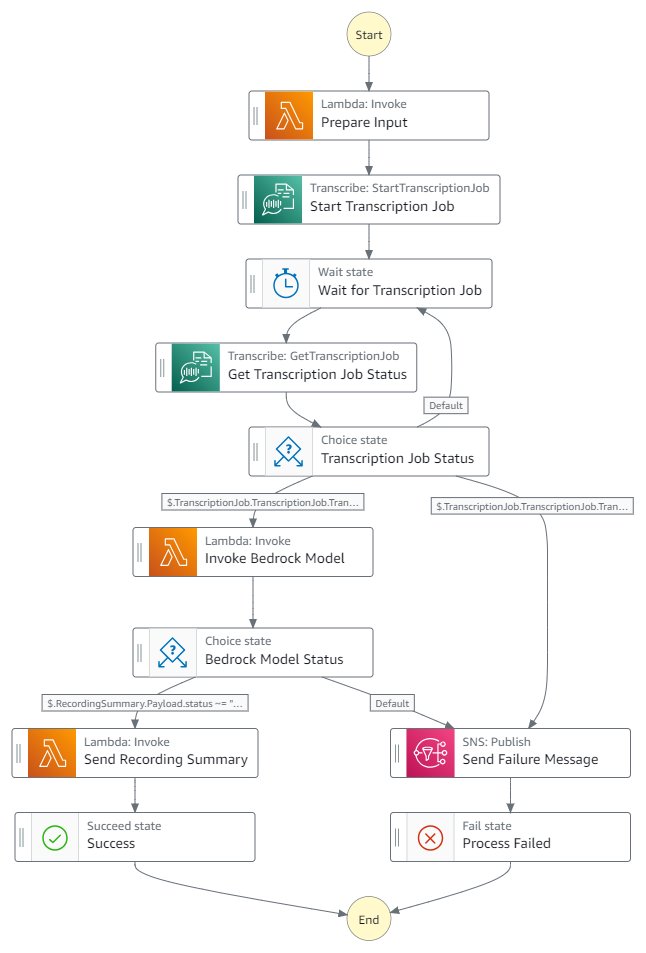

The solution presented in this post is orchestrated by an AWS Step Functions state machine that is triggered when you upload a recording to the designated Amazon Simple Storage Service (Amazon S3) bucket. Step Functions lets you create serverless workflows to orchestrate and connect components across AWS services. It handles the underlying complexity so you can focus on the application logic. It is useful for coordinating tasks, distributed processing, ETL (extract, transform, load), and business process automation.

The following diagram illustrates the high-level solution architecture.

The solution workflow includes the following steps:

- A user stores a recording in the S3 asset bucket.

- This action activates the Step Functions transcription and summary state machine.

- As part of the state machine, an AWS Lambda function is triggered, which transcribes the recording using Amazon Transcribe and stores the transcript in the asset bucket.

- A second Lambda function retrieves the transcript and generates a summary using the Anthropic Claude model in Amazon Bedrock.

- A final Lambda function uses Amazon Simple Notification Service (Amazon SNS) to send a summary of the recording to the recipient.
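As a rough illustration of the transcription step, the following sketch builds the arguments for the Amazon Transcribe `start_transcription_job` API. The function names, the use of automatic language identification, and the output key naming are assumptions made for this example and are not taken from the solution's Lambda code.

```python
import os

# File formats the post lists as valid uploads, in the lowercase form
# that Amazon Transcribe expects for MediaFormat.
SUPPORTED_FORMATS = {"mp3", "mp4", "wav", "flac", "amr", "ogg", "webm"}


def build_transcription_request(bucket: str, key: str, job_name: str) -> dict:
    """Build keyword arguments for Transcribe's start_transcription_job call."""
    extension = os.path.splitext(key)[1].lstrip(".").lower()
    if extension not in SUPPORTED_FORMATS:
        raise ValueError(f"Unsupported media format: {extension!r}")
    return {
        "TranscriptionJobName": job_name,
        "Media": {"MediaFileUri": f"s3://{bucket}/{key}"},
        "MediaFormat": extension,
        # Assumption: let the service detect the spoken language.
        "IdentifyLanguage": True,
        "OutputBucketName": bucket,
        # Assumption: transcripts are written under a transcripts/ prefix.
        "OutputKey": f"transcripts/{job_name}.json",
    }


def start_transcription(transcribe_client, bucket: str, key: str, job_name: str) -> str:
    """Start the job with a boto3 Transcribe client and return its initial status."""
    response = transcribe_client.start_transcription_job(
        **build_transcription_request(bucket, key, job_name)
    )
    return response["TranscriptionJob"]["TranscriptionJobStatus"]
```

In a Lambda function, `transcribe_client` would typically be created with `boto3.client("transcribe")`. Transcription is asynchronous, so a later step has to check the job status before reading the transcript.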

This solution is supported in AWS Regions where Anthropic Claude on Amazon Bedrock is available.

The state machine orchestrates the steps to perform the specific tasks. The following diagram illustrates the detailed process.
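To make the orchestration more concrete, here is a deliberately simplified sketch of an Amazon States Language definition that chains three Lambda tasks. The state names and the function ARNs are hypothetical, and the state machine shipped with the solution may contain additional states (for example, to wait for the transcription job to finish).

```python
import json


def build_state_machine_definition(
    transcribe_arn: str, summarize_arn: str, notify_arn: str
) -> str:
    """Return an Amazon States Language document chaining three Lambda tasks."""
    definition = {
        "Comment": "Transcribe a recording, summarize it, then notify the recipient",
        "StartAt": "TranscribeRecording",
        "States": {
            # Hypothetical state names; each Task invokes one Lambda function.
            "TranscribeRecording": {
                "Type": "Task",
                "Resource": transcribe_arn,
                "Next": "SummarizeTranscript",
            },
            "SummarizeTranscript": {
                "Type": "Task",
                "Resource": summarize_arn,
                "Next": "SendSummary",
            },
            "SendSummary": {
                "Type": "Task",
                "Resource": notify_arn,
                "End": True,
            },
        },
    }
    return json.dumps(definition, indent=2)
```

Each task receives the output of the previous one as its input, which is how the recording location and transcript location could be passed along the chain.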

Prerequisites

Amazon Bedrock users must request access to models before they are available for use. This is a one-time action. For this solution, you need to enable access to the Anthropic Claude model (not Anthropic Claude Instant) in Amazon Bedrock. For more information, see Model access.
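Once model access is granted, a summarization call could look roughly like the following sketch. It assumes Claude's Text Completions request format (the one that takes `max_tokens_to_sample`), and the model ID, the `<transcript>` tags, the temperature, and the function names are assumptions for illustration, not details confirmed by the solution.

```python
import json

# Assumption: a Claude model ID that is enabled in your account and Region.
MODEL_ID = "anthropic.claude-v2"


def build_claude_body(transcript: str, instructions: str, max_tokens: int = 1000) -> str:
    """Build a JSON request body in Claude's Text Completions format."""
    prompt = (
        f"\n\nHuman: {instructions}\n\n"
        f"<transcript>\n{transcript}\n</transcript>"
        "\n\nAssistant:"
    )
    return json.dumps(
        {"prompt": prompt, "max_tokens_to_sample": max_tokens, "temperature": 0}
    )


def summarize(bedrock_runtime, transcript: str, instructions: str, max_tokens: int = 1000) -> str:
    """Invoke the model through a boto3 bedrock-runtime client and return the summary text."""
    response = bedrock_runtime.invoke_model(
        modelId=MODEL_ID,
        body=build_claude_body(transcript, instructions, max_tokens),
        contentType="application/json",
        accept="application/json",
    )
    payload = json.loads(response["body"].read())
    return payload["completion"].strip()
```

The client would be created with `boto3.client("bedrock-runtime")`. If access to the model has not been enabled, the call is expected to fail with an access error rather than return a completion.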

Deploy solution resources

The solution is deployed using an AWS CloudFormation template, located in the GitHub repository (path ending in ai-solutions/recordings-summary-generator), which automatically provisions the necessary resources in your AWS account. The template requires the following parameters:

- Email address used to send the summary – The summary will be sent to this address. You must acknowledge the initial Amazon SNS confirmation email before you can receive additional notifications.

- Summary instructions – These are the instructions given to the Amazon Bedrock model to generate the summary.
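If you prefer to script the deployment, the two parameters could be passed to the CloudFormation `create_stack` API along these lines. This is a minimal sketch: the parameter keys `EmailAddress` and `SummaryInstructions` are hypothetical placeholders (check the template for the real names), and the `CAPABILITY_IAM` acknowledgement assumes the template creates IAM roles.

```python
def build_stack_parameters(email: str, summary_instructions: str) -> list:
    """Build the Parameters list for CloudFormation's create_stack call."""
    if "@" not in email:
        raise ValueError("email must be a valid address")
    return [
        # Hypothetical parameter keys; use the names defined in the template.
        {"ParameterKey": "EmailAddress", "ParameterValue": email},
        {"ParameterKey": "SummaryInstructions", "ParameterValue": summary_instructions},
    ]


def deploy_stack(cfn_client, stack_name: str, template_body: str,
                 email: str, summary_instructions: str) -> str:
    """Create the stack with a boto3 CloudFormation client and return its stack ID."""
    response = cfn_client.create_stack(
        StackName=stack_name,
        TemplateBody=template_body,
        Parameters=build_stack_parameters(email, summary_instructions),
        # Assumption: the template creates IAM roles for the Lambda functions.
        Capabilities=["CAPABILITY_IAM"],
    )
    return response["StackId"]
```

The client would be created with `boto3.client("cloudformation")`, and `template_body` would hold the contents of the template file downloaded from the repository.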

Run the solution

After you deploy your solution using AWS CloudFormation, complete the following steps:

- Acknowledge the Amazon SNS confirmation email, which you should receive moments after creating the CloudFormation stack.

- In the AWS CloudFormation console, navigate to the stack you just created.

- On the stack's Outputs tab, find the value associated with AssetBucketName; it will look something like summary-generator-assetbucket-xxxxxxxxxxxxx.

- In the Amazon S3 console, navigate to your asset bucket.

This is where you will upload your recordings. Valid file formats are MP3, MP4, WAV, FLAC, AMR, OGG and WebM.

- Upload your recording to the recordings folder.

Uploading a recording automatically triggers the Step Functions state machine. For this example, we use a sample team meeting recording from the sample-recording directory of the GitHub repository.

- In the Step Functions console, navigate to the summary generator state machine.

- Choose the name of the state machine execution with the status Running.

Here you can observe the progress of the state machine as it processes the recording.

- After the execution reaches the Succeeded status, you should receive a summary of the recording by email.

Alternatively, you can navigate to the S3 asset bucket and view the transcript there in the transcripts folder.
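The upload step above can also be scripted instead of using the console. The following sketch places a local file under the recordings folder of the asset bucket; the helper names are made up for this example, and the bucket name is whatever your stack reports for AssetBucketName.

```python
import os


def recording_key(local_path: str) -> str:
    """Return the S3 object key for a local recording, under the recordings folder."""
    return f"recordings/{os.path.basename(local_path)}"


def upload_recording(s3_client, local_path: str, bucket: str) -> str:
    """Upload the file with a boto3 S3 client and return its S3 URI."""
    key = recording_key(local_path)
    s3_client.upload_file(local_path, bucket, key)
    return f"s3://{bucket}/{key}"
```

With `s3_client = boto3.client("s3")`, a call such as `upload_recording(s3_client, "standup.mp4", "summary-generator-assetbucket-xxxxxxxxxxxxx")` should start a new state machine execution in the same way as a console upload.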

Review the summary

You will receive the recording summary by email at the address you provided when you created the CloudFormation stack. If you don't receive the email within a few moments, make sure you have acknowledged the Amazon SNS confirmation email that you should have received after creating the stack, and then upload the recording again, which triggers the summary process.
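If the email does not arrive, one way to check for an unacknowledged subscription is to list the topic's subscriptions: Amazon SNS reports `PendingConfirmation` in place of a subscription ARN until the recipient confirms. The sketch below assumes you know the ARN of the topic created by the stack; the function name is made up for this example.

```python
def pending_subscriptions(sns_client, topic_arn: str) -> list:
    """Return the endpoints on a topic whose subscription is still unconfirmed."""
    pending = []
    kwargs = {"TopicArn": topic_arn}
    while True:
        page = sns_client.list_subscriptions_by_topic(**kwargs)
        for subscription in page.get("Subscriptions", []):
            # Unconfirmed subscriptions carry this literal value instead of an ARN.
            if subscription["SubscriptionArn"] == "PendingConfirmation":
                pending.append(subscription["Endpoint"])
        token = page.get("NextToken")
        if not token:
            return pending
        kwargs["NextToken"] = token
```

An empty result suggests the subscription is confirmed, in which case the state machine execution history is the next place to look.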

This solution includes a mock recording of a team meeting that you can use to test it. The summary will be similar to the following example. Because of the nature of generative AI, your output will look slightly different, but the content should be close.

Here are the key points from the standup:

- Joe finished reviewing the current state of the EDU1 task and created a new task to develop the future state. That new task is in the backlog and must be prioritized. He is now starting EDU2 but is stuck on resource selection.

- Rob created a tagging strategy for SLG1 based on best practices, but he may need to coordinate with other teams who have created their own strategies to align on a uniform approach. A new task was created to coordinate tagging strategies.

- Rob has made progress debugging SLG2, but may need additional help. This task will be moved to Sprint 2 to allow time to obtain additional resources.

Next steps:

- Joe will continue to work on EDU2 as he can until resource selection is decided.

- A new task will be prioritized to coordinate tagging strategies between teams

- SLG2 moved to Sprint 2

- Standups will move to Mondays starting next week

Expand the solution

Now that you have a working solution, here are some potential ideas for customizing the solution for your specific use cases:

- Try modifying the process to fit the available source content and desired results:

  - For situations where transcripts are available, create an alternative Step Functions workflow to ingest existing text-based or PDF transcripts.

  - Instead of using Amazon SNS to notify recipients via email, you can use it to send the result to a different endpoint, such as a team collaboration site or the team chat channel.

- Try changing the summary instructions CloudFormation stack parameter provided to Amazon Bedrock to produce results specific to your use case (this is the generative AI prompt):

  - When summarizing a company's earnings call, you can have the model focus on potentially promising opportunities, areas of concern, and things you should continue to monitor.

  - If you are using this to summarize a course lecture, the model could identify upcoming assignments, summarize key concepts, list facts, and filter out any small talk from the recording.

- For the same recording, create different summaries for different audiences:

  - Engineers' summaries focus on design decisions, technical challenges, and upcoming deliverables.

  - Project managers' summaries focus on schedules, costs, deliverables, and action items.

  - Project sponsors receive a brief update on project status and escalations.

- For longer recordings, try generating summaries for different levels of interest and time commitment. For example, create a single sentence, a single paragraph, a single page, or a detailed summary. In addition to the prompt, you may want to adjust the max_tokens_to_sample parameter to accommodate different content lengths.
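The audience and length ideas above can be sketched as a small table of prompt variants, each paired with its own max_tokens_to_sample budget. The variant names, instruction wording, and token values below are illustrative assumptions rather than settings from the solution, and the prompt layout assumes Claude's Text Completions format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SummaryVariant:
    name: str
    instructions: str
    max_tokens_to_sample: int


# Illustrative variants only; tune the wording and budgets for your content.
VARIANTS = [
    SummaryVariant("one_sentence", "Summarize the recording in a single sentence.", 100),
    SummaryVariant("one_paragraph", "Summarize the recording in a single paragraph.", 300),
    SummaryVariant(
        "engineers",
        "Summarize design decisions, technical challenges, and upcoming deliverables.",
        1000,
    ),
    SummaryVariant(
        "project_managers",
        "Summarize schedules, costs, deliverables, and action items.",
        1000,
    ),
]


def build_requests(transcript: str, variants=VARIANTS) -> dict:
    """Map each variant name to a Claude Text Completions request body (as a dict)."""
    return {
        variant.name: {
            "prompt": f"\n\nHuman: {variant.instructions}\n\n{transcript}\n\nAssistant:",
            "max_tokens_to_sample": variant.max_tokens_to_sample,
        }
        for variant in variants
    }
```

Each resulting dictionary can be serialized with `json.dumps` and sent as the body of a separate model invocation, so one transcript yields several summaries.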

Clean up

To clean up the solution, delete the CloudFormation stack you created earlier. Note that deleting the stack does not delete the asset bucket. If you no longer need the recordings or transcripts, you can delete this bucket separately. Amazon Transcribe automatically deletes transcription jobs after 90 days, but you can delete them manually before then.
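For the manual deletion of transcription jobs, a sketch along the following lines could be used. It assumes the jobs you want to remove share a recognizable substring in their names (the `name_contains` value is hypothetical), so review the matched names before running it against a real account.

```python
def delete_transcription_jobs(transcribe_client, name_contains: str) -> list:
    """Delete every transcription job whose name contains the given text.

    Returns the names of the deleted jobs.
    """
    names = []
    kwargs = {"JobNameContains": name_contains}
    # Collect all matching job names first, following pagination tokens.
    while True:
        page = transcribe_client.list_transcription_jobs(**kwargs)
        names.extend(
            job["TranscriptionJobName"]
            for job in page.get("TranscriptionJobSummaries", [])
        )
        token = page.get("NextToken")
        if not token:
            break
        kwargs["NextToken"] = token
    for name in names:
        transcribe_client.delete_transcription_job(TranscriptionJobName=name)
    return names
```

The client would be created with `boto3.client("transcribe")`. Deleting a job does not remove a transcript that was written to your own S3 bucket, so the asset bucket still needs to be emptied and deleted separately.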

Conclusion

In this post, we explored how to use Amazon Transcribe and Amazon Bedrock to automatically generate clean, concise summaries of video or audio recordings. We encourage you to continue evaluating Amazon Bedrock, Amazon Transcribe, and other AWS AI services, such as Amazon Textract, Amazon Translate, and Amazon Rekognition, to see how they can help you achieve your business goals.

About the authors

Rob Barnes is a Principal Consultant for AWS Professional Services. He works with our customers to address security and compliance requirements at scale in complex, multi-account AWS environments through automation.

Jason Stehle is a Senior Solutions Architect at AWS, based in the New England area. He works with customers to align AWS capabilities with their biggest business challenges. Outside of work, he spends his time building things and watching comic book movies with his family.
