Today, we are excited to announce that the Jina Embeddings v2 model, developed by Jina AI, is available to customers through Amazon SageMaker JumpStart, where you can deploy it with one click to run model inference. This state-of-the-art model supports an impressive context length of 8192 tokens. You can deploy this model with SageMaker JumpStart, a machine learning (ML) hub with foundation models, built-in algorithms, and pre-built ML solutions that you can deploy with just a few clicks.

Text embedding refers to the process of transforming text into numerical representations that reside in a high-dimensional vector space. Text embeddings have a wide range of applications in enterprise artificial intelligence (AI), including:

- Multimodal search for e-commerce

- Content personalization

- Recommendation systems

- Data analytics

Jina Embeddings v2 is a state-of-the-art collection of text embedding models, trained by Berlin-based Jina AI, that achieve high performance on various public benchmarks.

In this post, we explain how to discover and deploy the jina-embeddings-v2 model as part of a Retrieval Augmented Generation (RAG)-based question answering system in SageMaker JumpStart. You can use this tutorial as a starting point for a variety of chatbot-based solutions for customer service, internal support, and question answering systems based on internal and private documents.

What is RAG?

RAG is the process of optimizing the output of a large language model (LLM) so that it references an authoritative knowledge base outside of its training data sources before generating a response.

LLMs are trained on large volumes of data and use billions of parameters to generate original output for tasks such as answering questions, translating languages, and completing sentences. RAG extends the already powerful capabilities of LLMs to specific domains or an organization's internal knowledge base, all without the need to retrain the model. It is a cost-effective approach to improving LLM output so that it remains relevant, accurate, and useful in various contexts.

What does Jina Embeddings v2 bring to RAG applications?

A RAG system uses a vector database as its knowledge retriever. It must therefore extract a query from a user's message and send it to the vector database to reliably find as much semantic information as possible. The following diagram illustrates the architecture of a RAG application with Jina AI and Amazon SageMaker.

Jina Embeddings v2 is the preferred choice of experienced machine learning scientists for the following reasons:

- State-of-the-art performance – We have demonstrated on several text embedding benchmarks that Jina Embeddings v2 models excel at tasks such as classification, reranking, summarization, and retrieval. Benchmarks that demonstrate this performance include MTEB, an independent study of combining embedding models with reranking models, and the LoCo benchmark by a group at Stanford University.

- Long input context length – Jina Embeddings v2 models support 8192 input tokens. This makes the models especially powerful at tasks such as clustering long documents like legal texts or product documentation.

- Support for bilingual text input – Recent research shows that multilingual models without specific language training show strong biases towards English grammatical structures in their embeddings. Jina AI's bilingual embedding models include jina-embeddings-v2-base-de, jina-embeddings-v2-base-zh, jina-embeddings-v2-base-es, and jina-embeddings-v2-base-code. They were trained to encode texts in a combination of English-German, English-Chinese, English-Spanish, and English-Code, respectively, allowing the use of either language as the query or target document in retrieval applications.

- Cost-effective operation – Jina Embeddings v2 provides high performance on information retrieval tasks with relatively small models and compact embedding vectors. For example, jina-embeddings-v2-base-de has a size of 322 MB with a performance score of 60.1%. A smaller vector size means significant cost savings when storing the vectors in a vector database.

What is SageMaker JumpStart?

With SageMaker JumpStart, ML practitioners can choose from a growing list of best-performing foundation models. Developers can deploy foundation models to dedicated SageMaker instances within a network-isolated environment, and customize models using SageMaker for model training and deployment.

You can now discover and deploy a Jina Embeddings v2 model with a few clicks in Amazon SageMaker Studio or programmatically through the SageMaker Python SDK, allowing you to derive model performance and MLOps controls with SageMaker features such as Amazon SageMaker Pipelines and Amazon SageMaker Debugger. With SageMaker JumpStart, the model is deployed in a secure AWS environment and under your VPC controls, helping to provide data security.

Jina Embeddings models are available on AWS Marketplace so you can integrate them directly into your deployments when working in SageMaker.

AWS Marketplace allows you to find third-party software, data, and services that run on AWS and manage them from a centralized location. AWS Marketplace includes thousands of software listings and simplifies software licensing and procurement with flexible pricing options and multiple deployment methods.

Solution Overview

We have prepared a notebook (rag_jina_embeddings_aws_sagemaker_jumpstart.ipynb) that builds and runs a RAG question answering system using Jina Embeddings and the Mixtral 8x7B LLM in SageMaker JumpStart.

In the following sections, we give you an overview of the main steps required to bring a RAG application to life using generative AI models in SageMaker JumpStart. Although we omit some of the boilerplate code and installation steps in this post for readability, you can access the complete Python notebook to run yourself.

Connecting to a Jina Embeddings v2 endpoint

To start using Jina Embeddings v2 models, complete the following steps:

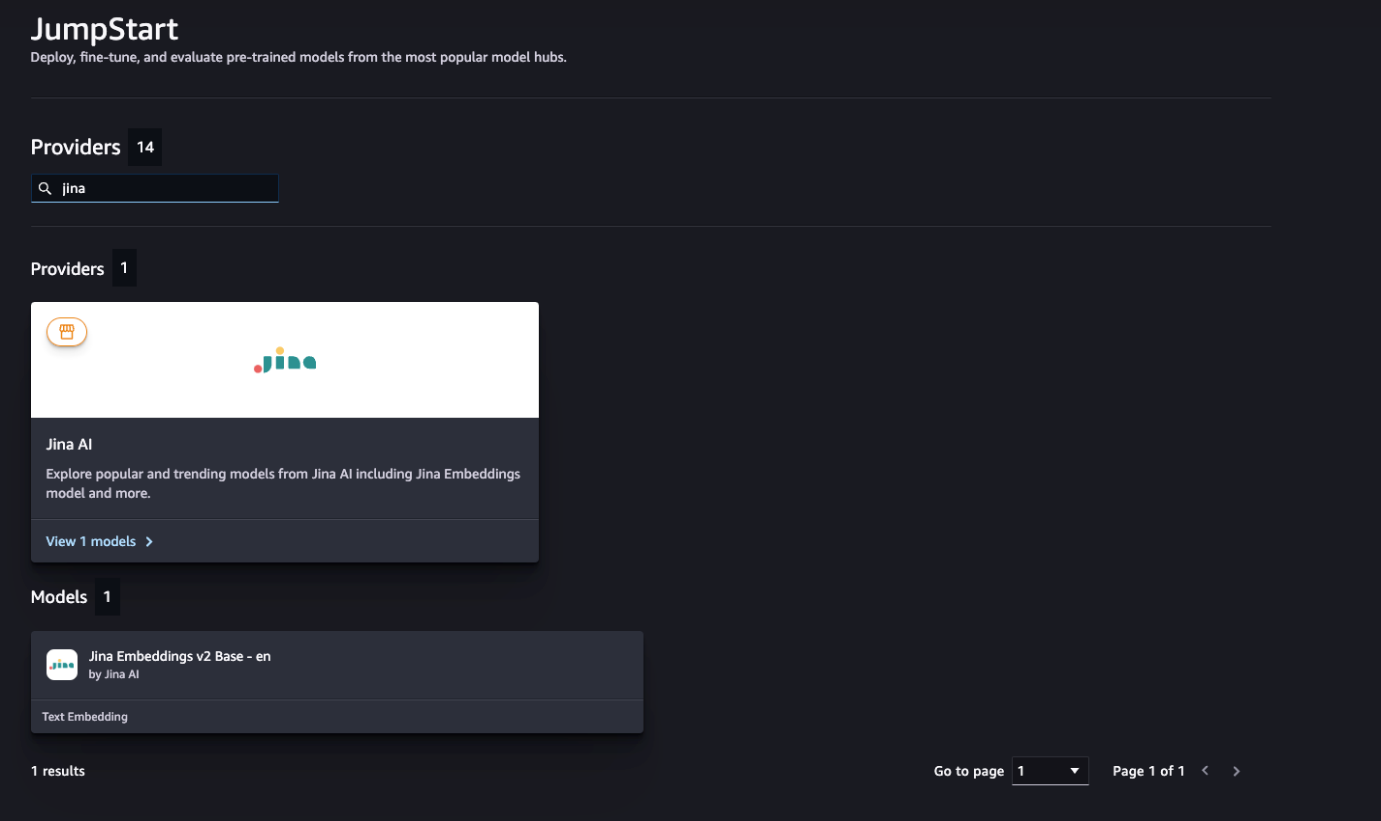

- In SageMaker Studio, choose JumpStart in the navigation pane.

- Search for “jina” and you will see the link to the provider page and the models available from Jina AI.

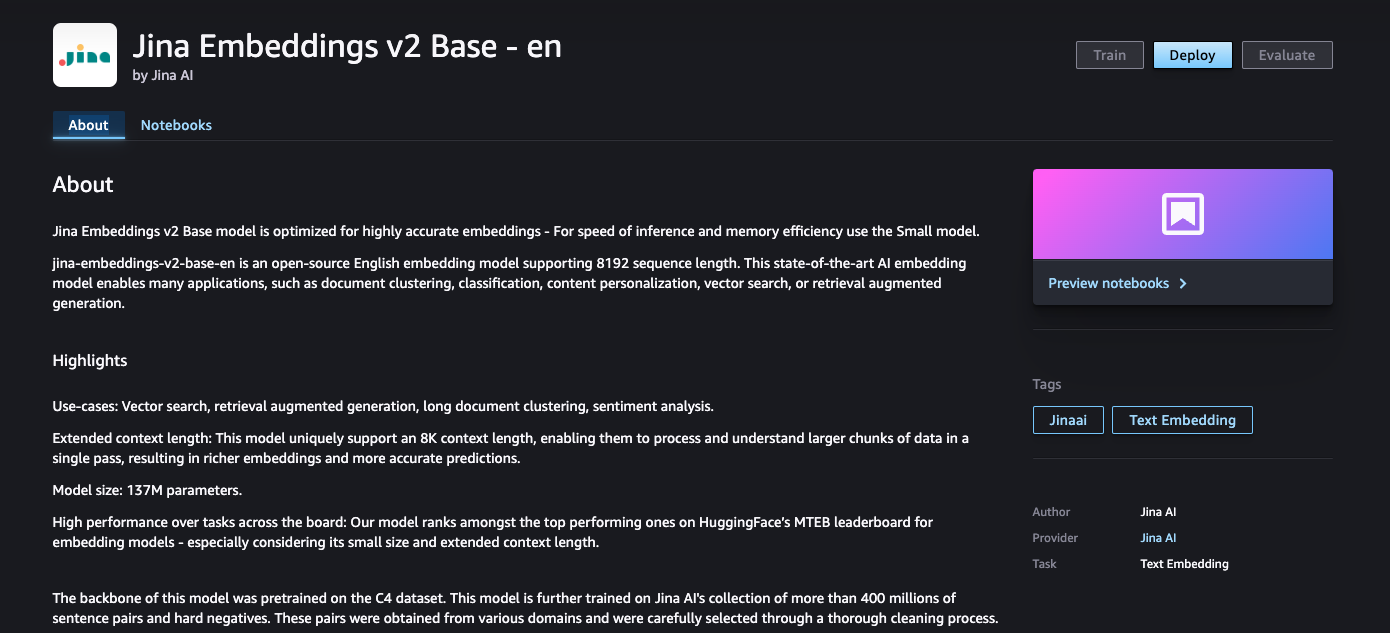

- Choose Jina Embeddings v2 Base - en, which is Jina AI's English language embedding model.

- Choose Deploy.

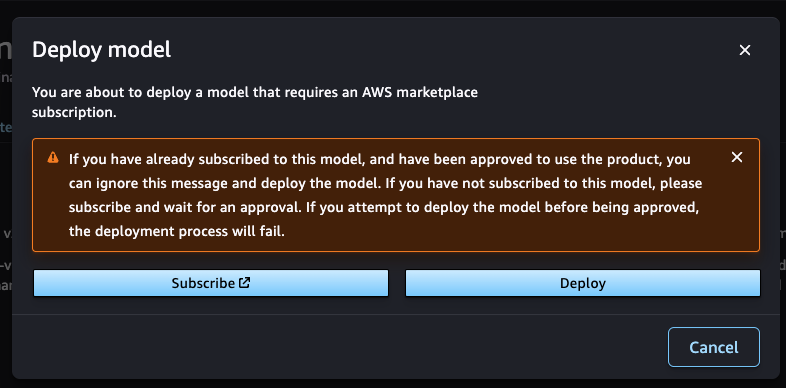

- In the dialog box that appears, choose Subscribe, which will redirect you to the model's AWS Marketplace listing, where you can subscribe to the model after accepting the terms of use.

- After you subscribe, return to SageMaker Studio and choose Deploy.

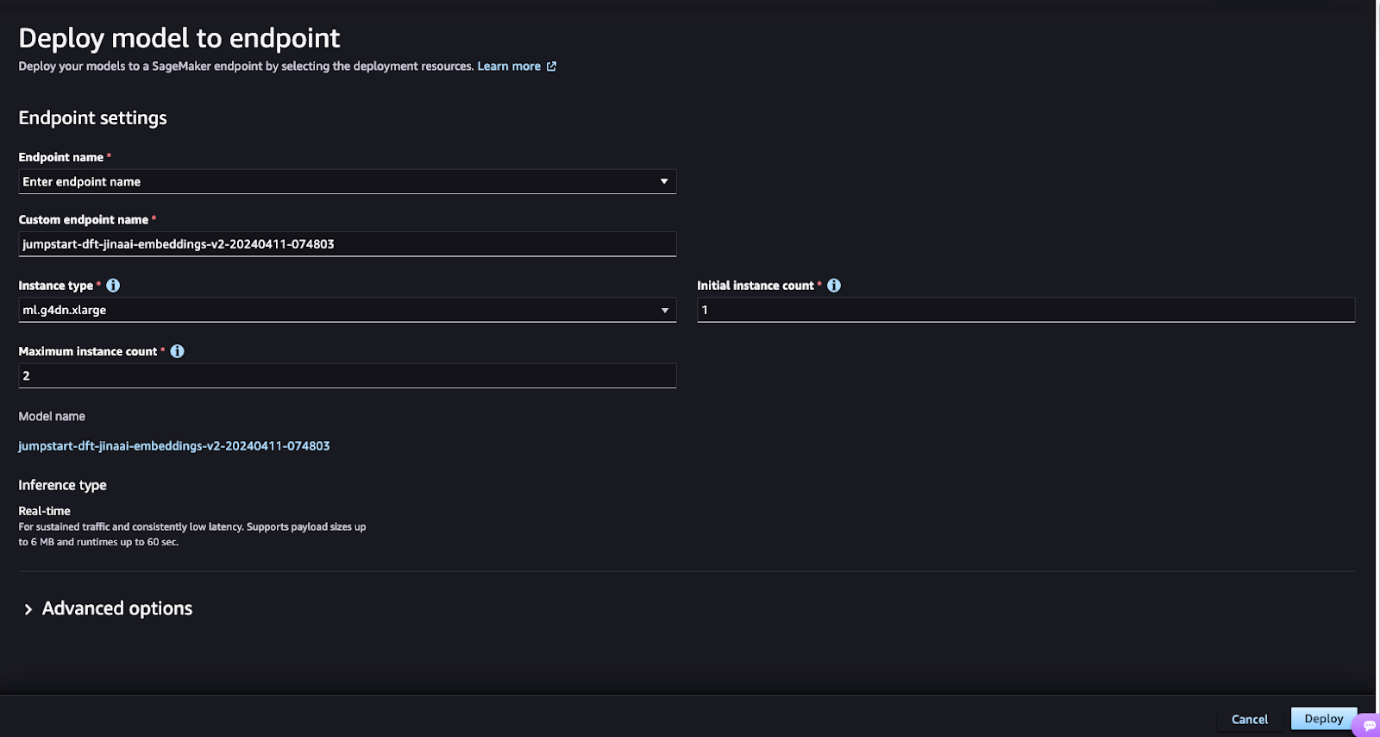

- You will be redirected to the endpoint configuration page, where you can select the most appropriate instance for your use case and provide a name for the endpoint.

- Choose Deploy.

After creating the endpoint, you can connect to it with the following code snippet:
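The snippet itself did not survive in this copy of the post, so what follows is a minimal sketch rather than the notebook's code. It calls the endpoint through the boto3 `sagemaker-runtime` client. The endpoint name you pass in, the request schema (`{"data": [{"text": ...}]}`), and the response schema (`{"data": [{"embedding": ...}]}`) are assumptions; check them against the model's AWS Marketplace listing before relying on them.

```python
import json


def build_embedding_request(texts):
    """Serialize texts into the JSON body assumed for the embeddings endpoint."""
    # Assumed request schema: {"data": [{"text": "..."}, ...]}
    return json.dumps({"data": [{"text": text} for text in texts]})


def parse_embedding_response(raw_body):
    """Return one embedding vector per input text."""
    # Assumed response schema: {"data": [{"embedding": [...]}, ...]}
    payload = json.loads(raw_body)
    return [item["embedding"] for item in payload["data"]]


def embed_texts(texts, endpoint_name, runtime_client=None):
    """Invoke the deployed endpoint and return a list of embedding vectors."""
    if runtime_client is None:
        # Imported lazily so the helpers above can be used without AWS access.
        import boto3

        runtime_client = boto3.client("sagemaker-runtime")
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Accept="application/json",
        Body=build_embedding_request(texts),
    )
    return parse_embedding_response(response["Body"].read())
```

With an endpoint named, for example, my-jina-embeddings-endpoint (a hypothetical name), `embed_texts(["How is the weather today?"], "my-jina-embeddings-endpoint")` would return a list with one vector.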

Prepare a data set for indexing

In this post, we use a public dataset from Kaggle (CC0: Public Domain) that contains audio transcripts from the popular YouTube channel Kurzgesagt – In a Nutshell, which has more than 20 million subscribers.

Each row in this data set contains the title of a video, its URL, and the corresponding text transcript.

Enter the following code:
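The loading code is missing from this copy of the post. As a rough sketch, the dataset can be read with Python's standard `csv` module. The column names `title`, `url`, and `transcript` and the file path you pass in are assumptions, and a plain list of dicts stands in here for the data frame used in the notebook.

```python
import csv

# Hypothetical column names; adjust them to the header of your copy of the dataset.
REQUIRED_COLUMNS = ("title", "url", "transcript")


def read_transcripts(lines):
    """Parse CSV lines into one dict per video, keeping only the needed columns."""
    reader = csv.DictReader(lines)
    videos = []
    for row in reader:
        # Skip rows without a transcript, because there is nothing to index.
        if not (row.get("transcript") or "").strip():
            continue
        videos.append({column: row[column] for column in REQUIRED_COLUMNS})
    return videos


def load_transcripts(csv_path):
    """Open the dataset file and parse it with read_transcripts."""
    with open(csv_path, newline="", encoding="utf-8") as handle:
        return read_transcripts(handle)
```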

Because the transcripts of these videos can be quite long (the videos run around 10 minutes), you can chunk each transcript before indexing it. This way, a search returns only the content relevant to a user's question and not unrelated parts of the transcript:
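A minimal sketch of word-limit chunking, assuming whitespace-separated words and an arbitrary default of 128 words per chunk (the notebook's actual value is not shown in this post):

```python
def chunk_text(text, max_words=128):
    """Split text into consecutive chunks of at most max_words whole words."""
    if max_words < 1:
        raise ValueError("max_words must be at least 1")
    words = text.split()
    # Step through the word list in strides of max_words; the last chunk may be shorter.
    return [
        " ".join(words[start:start + max_words])
        for start in range(0, len(words), max_words)
    ]
```

Splitting on whitespace never cuts a word in half, but it ignores sentence and paragraph boundaries, so a chunk can start or end mid-sentence.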

The parameter max_words defines the maximum number of whole words that an indexed chunk of text can contain. Many chunking strategies in the academic and non-peer-reviewed literature are more sophisticated than a simple word limit. However, for simplicity, we use this technique in this post.

Index text embeddings for vector search

After chunking the transcript text, you obtain embeddings for each chunk and link each chunk back to the original transcript and video title:
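The indexing code is not included in this copy, so the following is only a sketch of the idea. It assumes each video is a dict with `title` and `transcript` keys, and that `embed_fn` is any callable that maps a list of strings to a list of vectors (for example, a wrapper around the Jina Embeddings endpoint). The names `build_index`, `embed_fn`, and `batch_size` are hypothetical, and a list of dicts stands in for the data frame.

```python
def build_index(videos, embed_fn, max_words=128, batch_size=16):
    """Chunk each transcript, embed the chunks, and keep a link to the video title."""
    rows = []
    for video in videos:
        words = video["transcript"].split()
        chunks = [
            " ".join(words[start:start + max_words])
            for start in range(0, len(words), max_words)
        ]
        # Embed in batches to limit the size of each request to the endpoint.
        for start in range(0, len(chunks), batch_size):
            batch = chunks[start:start + batch_size]
            vectors = embed_fn(batch)
            for chunk, vector in zip(batch, vectors):
                rows.append(
                    {"title": video["title"], "chunk": chunk, "embeddings": vector}
                )
    return rows
```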

The data frame df contains a column titled embeddings, which can be stored in any vector database of your choice. The embeddings can then be retrieved from the vector database using a function such as find_most_similar_transcript_segment(query, n), which retrieves the n documents closest to the input query given by a user.
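As an illustration of what such a function might do without a vector database, here is a brute-force sketch that ranks rows by cosine similarity. The two extra parameters (`rows`, a list of dicts with an `embeddings` key, and `embed_fn`, a callable that embeds a list of strings) are assumptions added to keep the example self-contained; a real vector database would replace the linear scan.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def find_most_similar_transcript_segment(query, n, rows, embed_fn):
    """Return the n rows whose embeddings are closest to the query embedding."""
    query_vector = embed_fn([query])[0]
    ranked = sorted(
        rows,
        key=lambda row: cosine_similarity(query_vector, row["embeddings"]),
        reverse=True,
    )
    return ranked[:n]
```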

Prompt a generative LLM endpoint

For LLM-based question answering, you can use the Mistral 7B-Instruct model in SageMaker JumpStart:
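The deployment code is missing from this copy. A sketch using the SageMaker Python SDK's `JumpStartModel` class might look like the following. The model ID, the payload fields, and the response shape (`[{"generated_text": ...}]`) are assumptions to verify against the model card in SageMaker JumpStart, and the generation parameters are arbitrary.

```python
def deploy_llm(model_id="huggingface-llm-mistral-7b-instruct"):
    """Deploy the LLM from SageMaker JumpStart and return a predictor."""
    # Imported lazily so the payload helpers below work without the SageMaker SDK.
    from sagemaker.jumpstart.model import JumpStartModel

    model = JumpStartModel(model_id=model_id)  # assumed JumpStart model ID
    return model.deploy()


def build_llm_payload(prompt, max_new_tokens=256, temperature=0.1):
    """Build the request body assumed for a text-generation endpoint."""
    return {
        "inputs": prompt,
        "parameters": {
            "max_new_tokens": max_new_tokens,
            "temperature": temperature,
            "do_sample": True,
        },
    }


def ask_llm(predictor, prompt):
    """Send the prompt to the endpoint and return the generated text."""
    response = predictor.predict(build_llm_payload(prompt))
    # Assumed response shape: [{"generated_text": "..."}]
    return response[0]["generated_text"]
```

Deploying the model starts a billable instance, so call `deploy_llm()` only when you are ready to run queries.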

Query the LLM

Now, for a query submitted by a user, you first find the n semantically closest transcript chunks from any Kurzgesagt video (using the vector distance between the chunk embeddings and the user's query), and then provide those chunks as context to the LLM for answering the user's query:
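The query code is not shown in this copy. The retrieve-then-generate flow can be sketched as follows, assuming `retrieve_fn(question, n)` returns dicts with a `chunk` key and `generate_fn(prompt)` returns the LLM's text. Both callables, the function names, and the prompt wording are hypothetical. The `[INST] ... [/INST]` wrapper follows the Mistral instruct prompt convention.

```python
def build_rag_prompt(question, context_chunks):
    """Combine retrieved chunks and the user's question into one instruct prompt."""
    context = "\n\n".join(context_chunks)
    return (
        "<s>[INST] Answer the question using only the context below. "
        "If the context is not sufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question} [/INST]"
    )


def answer_question(question, n, retrieve_fn, generate_fn):
    """Retrieve the n closest chunks, then ask the LLM to answer from them."""
    segments = retrieve_fn(question, n)
    prompt = build_rag_prompt(question, [segment["chunk"] for segment in segments])
    return generate_fn(prompt)
```

Keeping retrieval and generation as injected callables makes it straightforward to swap the vector database or the LLM without touching the prompt logic.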

Based on the previous question, the LLM could respond with an answer like the following:

Based on the provided context, it does not seem that individuals can solve climate change solely through their personal actions. While personal actions such as using renewable energy sources and reducing consumption can contribute to mitigating climate change, the context suggests that larger systemic changes are necessary to address the issue fully.

Clean up

Once you have finished running the notebook, be sure to delete all the resources you created in the process so that your billing stops. Use the following code:
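The cleanup code is missing from this copy. One way to remove an endpoint together with its endpoint configuration and models is sketched below using the boto3 `sagemaker` client. The function name is hypothetical, and you would call it once for each endpoint you created (embeddings and LLM).

```python
def delete_endpoint_resources(endpoint_name, sagemaker_client=None):
    """Delete an endpoint, its endpoint configuration, and the models behind it."""
    if sagemaker_client is None:
        # Imported lazily so the function can be exercised with a stub client.
        import boto3

        sagemaker_client = boto3.client("sagemaker")

    # Look up the dependent resources before deleting the endpoint itself.
    endpoint = sagemaker_client.describe_endpoint(EndpointName=endpoint_name)
    config_name = endpoint["EndpointConfigName"]
    config = sagemaker_client.describe_endpoint_config(
        EndpointConfigName=config_name
    )
    model_names = [
        variant["ModelName"]
        for variant in config["ProductionVariants"]
        if "ModelName" in variant
    ]

    sagemaker_client.delete_endpoint(EndpointName=endpoint_name)
    sagemaker_client.delete_endpoint_config(EndpointConfigName=config_name)
    for model_name in model_names:
        sagemaker_client.delete_model(ModelName=model_name)
    return {"endpoint": endpoint_name, "config": config_name, "models": model_names}
```

If you subscribed to the model only for this walkthrough, you may also want to cancel the AWS Marketplace subscription.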

Conclusion

By leveraging the features of Jina Embeddings v2 to develop RAG applications, along with streamlined access to state-of-the-art models in SageMaker JumpStart, developers and businesses can now create sophisticated AI solutions with ease.

Jina Embeddings v2's extended context length, support for bilingual documents, and small model size enable companies to quickly create natural language processing use cases based on their internal data sets without relying on external APIs.

Get started today with SageMaker JumpStart and check out the GitHub repository for the complete code to run this example.

Connect with Jina AI

Jina AI remains committed to leading the way in bringing affordable and accessible AI embedding technology to the world. Our state-of-the-art text embedding models support English and Chinese and will soon support German, with other languages to follow.

To learn more about Jina AI's offerings, check out the Jina AI website or join our community on Discord.

About the authors

Francesco Kruk is a product management intern at Jina AI and is completing his master's degree at ETH Zurich in Management, Technology, and Economics. With strong business experience and machine learning expertise, Francesco helps clients implement RAG solutions using Jina Embeddings in an impactful way.

Saahil Ognawala is a Product Manager at Jina AI based in Munich, Germany. He leads the development of core search models and collaborates with clients around the world to enable rapid and efficient deployment of state-of-the-art generative AI products. With an academic background in machine learning, Saahil is now interested in scaled applications of generative AI in the knowledge economy.

Roy Allela is a Senior Solutions Architect specializing in AI/ML at AWS, based in Munich, Germany. Roy helps AWS customers (from small startups to large enterprises) efficiently train and deploy large language models on AWS. Roy is passionate about computational optimization problems and improving the performance of AI workloads.
