Introduction

Understanding the meaning of a word in a text is crucial for analyzing and interpreting large volumes of data. This is where the Inverse Document Frequency (TF-IDF) technique in Natural Language Processing (NLP) comes into play. By overcoming the limitations of the traditional bag-of-words approach, TF-IDF improves text classification and strengthens the ability of machine learning models to understand and analyze textual information effectively. This article will show you how to build a TF-IDF model from scratch in Python and how to compute it numerically.

General description

- TF-IDF is a key NLP technique that improves text classification by assigning importance to words based on their frequency and rarity.

- Key terms are defined, including term frequency (TF), document frequency (DF), and inverse document frequency (IDF).

- The article details the step-by-step numerical calculation of TF-IDF scores, as documents.

- A practical guide to the use

TfidfVectorizerfrom scikit-learn to convert text documents into a TF-IDF matrix. - It is used in search engines, text classification, clustering and summarization, but does not take into account word order or context.

Terminology: Key Terms Used in TF-IDF

Before we dive into the calculations and code, it is essential to understand the key terms:

- to: term (word)

- d: document (set of words)

- north: corpus count

- body:the complete set of documents

What is Term Frequency (TF)?

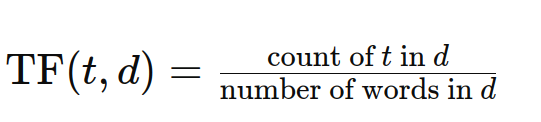

The frequency with which a term appears in a document is measured by term frequency (TF). The weight of a term in a document is directly related to its frequency of appearance. The TF formula is:

What is Document Frequency (DF)?

The importance of a document within a corpus is measured by its document frequency (DF). DF counts the number of documents that contain the phrase at least once, unlike TF, which counts the instances of a term in a document. The DF formula is:

DF

What is Inverse Document Frequency (IDF)?

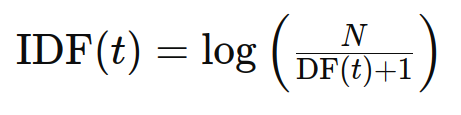

The informativeness of a word is measured by its inverse document frequency or IDF. All terms are assigned equal weight in calculating TF, although IDF helps to scale up uncommon terms and scale down common ones (such as stop words). The IDF formula is:

where N is the total number of documents and DF

What is TF-IDF?

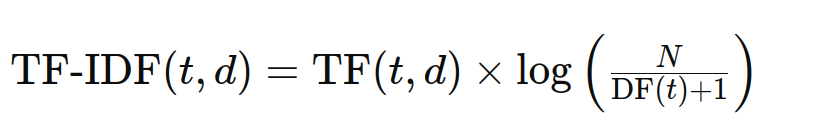

TF-IDF stands for Term Frequency-Inverse Document Frequency, a statistical measure used to assess the importance of a word in a document in a collection or corpus. It combines the importance of a term in a document (TF) with the rarity of the term in the corpus (IDF). The formula is:

Numerical calculation of TF-IDF

Let us analyze the numerical calculation of TF-IDF for the given documents:

Documents:

- “The sky is blue.”

- “The sun is shining today.”

- “The sun in the sky is bright.”

- “We can see the bright sun, the shining sun.”

Step 1: Calculate the term frequency (TF)

Document 1: “The sky is blue.”

| Term | Count | Thesis |

| he | 1 | 1/4 |

| darling | 1 | 1/4 |

| is | 1 | 1/4 |

| blue | 1 | 1/4 |

Document 2: “The sun is shining today.”

| Term | Count | Thesis |

| he | 1 | 1/5 |

| sun | 1 | 1/5 |

| is | 1 | 1/5 |

| bright | 1 | 1/5 |

| today | 1 | 1/5 |

Document 3: “The sun in the sky is bright.”

| Term | Count | Thesis |

| he | 2 | 2/7 |

| sun | 1 | 1/7 |

| in | 1 | 1/7 |

| darling | 1 | 1/7 |

| is | 1 | 1/7 |

| bright | 1 | 1/7 |

Document 4: “We can see the bright sun, the bright sun.”

| Term | Count | Thesis |

| us | 1 | 1/9 |

| can | 1 | 1/9 |

| see | 1 | 1/9 |

| he | 2 | 2/9 |

| bright | 1 | 1/9 |

| sun | 2 | 2/9 |

| bright | 1 | 1/9 |

Step 2: Calculate the Inverse Document Frequency (IDF)

Using N=4N = 4N=4:

| Term | DF | Israel Defense Forces |

| he | 4 | log(4/4+1)=log(0.8)≈−0.223 |

| darling | 2 | log(4/2+1)=log(1.333)≈0.287 |

| is | 3 | log(4/3+1)=log(1)=0 |

| blue | 1 | log(4/1+1)=log(2)≈0.693 |

| sun | 3 | log(4/3+1)=log(1)=0 |

| bright | 3 | log(4/3+1)=log(1)=0 |

| today | 1 | log(4/1+1)=log(2)≈0.693 |

| in | 1 | log(4/1+1)=log(2)≈0.693 |

| us | 1 | log(4/1+1)=log(2)≈0.693 |

| can | 1 | log(4/1+1)=log(2)≈0.693 |

| see | 1 | log(4/1+1)=log(2)≈0.693 |

| bright | 1 | log(4/1+1)=log(2)≈0.693 |

Step 3: Calculate TF-IDF

Now, let's calculate the TF-IDF values for each term in each document.

Document 1: “The sky is blue.”

| Term | Thesis | Israel Defense Forces | Israeli Security Forces Defense Forces |

| he | 0.25 | -0.223 | 0.25 * -0.223 ≈-0.056 |

| darling | 0.25 | 0.287 | 0.25 * 0.287 ≈ 0.072 |

| is | 0.25 | 0 | 0.25 * 0 = 0 |

| blue | 0.25 | 0.693 | 0.25 * 0.693 ≈ 0.173 |

Document 2: “The sun is shining today.”

| Term | Thesis | Israel Defense Forces | Israeli Security Forces Defense Forces |

| he | 0.2 | -0.223 | 0.2 * -0.223 ≈ -0.045 |

| sun | 0.2 | 0 | 0.2 * 0 = 0 |

| is | 0.2 | 0 | 0.2 * 0 = 0 |

| bright | 0.2 | 0 | 0.2 * 0 = 0 |

| today | 0.2 | 0.693 | 0.2 * 0.693 ≈0.139 |

Document 3: “The sun in the sky is bright.”

| Term | Thesis | Israel Defense Forces | Israeli Security Forces Defense Forces |

| he | 0.285 | -0.223 | 0.285 * -0.223 ≈ -0.064 |

| sun | 0.142 | 0 | 0.142 * 0 = 0 |

| in | 0.142 | 0.693 | 0.142 * 0.693 ≈0.098 |

| darling | 0.142 | 0.287 | 0.142 * 0.287≈0.041 |

| is | 0.142 | 0 | 0.142 * 0 = 0 |

| bright | 0.142 | 0 | 0.142 * 0 = 0 |

Document 4: “We can see the bright sun, the bright sun.”

| Term | Thesis | Israel Defense Forces | Israeli Security Forces Defense Forces |

| us | 0.111 | 0.693 | 0.111 * 0.693 ≈0.077 |

| can | 0.111 | 0.693 | 0.111 * 0.693 ≈0.077 |

| see | 0.111 | 0.693 | 0.111 * 0.693≈0.077 |

| he | 0.222 | -0.223 | 0.222 * -0.223≈-0.049 |

| bright | 0.111 | 0.693 | 0.111 * 0.693 ≈0.077 |

| sun | 0.222 | 0 | 0.222 * 0 = 0 |

| bright | 0.111 | 0 | 0.111 * 0 = 0 |

Implementing TF-IDF in Python using an embedded dataset

Now let's apply the TF-IDF calculation using the Tfidf Vectorizer from scikit-learn with a built-in dataset.

Step 1: Install the necessary libraries

Make sure you have scikit-learn installed:

pip install scikit-learnStep 2: Import libraries

import pandas as pd

from sklearn.datasets import fetch_20newsgroups

from sklearn.feature_extraction.text import TfidfVectorizerStep 3: Load the dataset

Get the dataset of 20 newsgroups:

newsgroups = fetch_20newsgroups(subset="train")Step 4: Initialize Tfidf Vectorizer

vectorizer = TfidfVectorizer(stop_words="english", max_features=1000)Step 5: Adjust and transform documents

Convert text documents to a TF-IDF matrix:

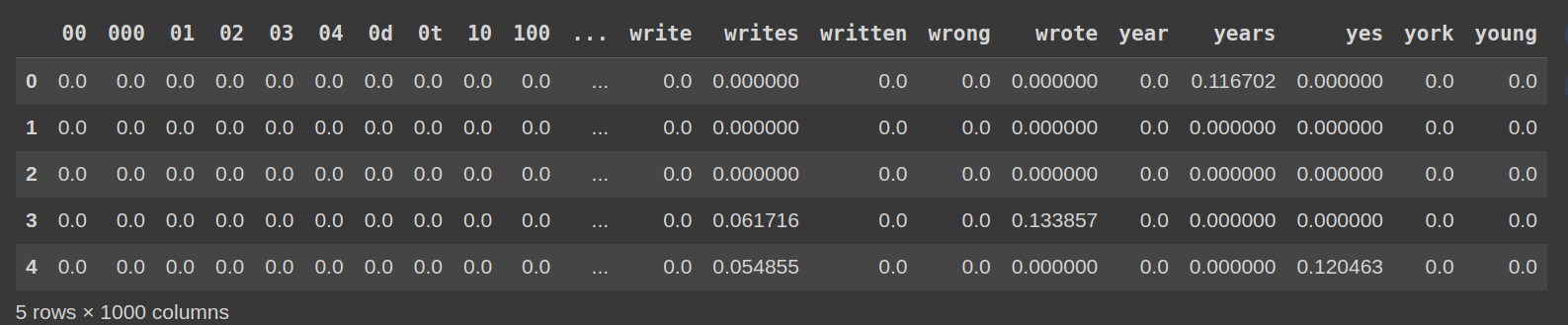

tfidf_matrix = vectorizer.fit_transform(newsgroups.data)Step 6: View the TF-IDF matrix

Convert the array to a DataFrame for better readability:

df_tfidf = pd.DataFrame(tfidf_matrix.toarray(), columns=vectorizer.get_feature_names_out())

df_tfidf.head()

Conclusion

Using the 20 Newsgroups dataset and TfidfVectorizer, you can convert a large collection of text documents into a TF-IDF matrix. This matrix numerically represents the importance of each term in each document, facilitating various natural language processing tasks such as text classification, clustering, and more advanced text analysis. scikit-learn's TfidfVectorizer provides an efficient and simple way to achieve this transformation.

Frequent questions

Answer: Calculating the log of IDF helps reduce the effect of extremely common words and prevents IDF values from skyrocketing, especially in large corpora. This ensures that IDF values remain manageable and reduces the impact of words that occur very frequently in documents.

Answer: Yes, TF-IDF can be used for large datasets. However, an efficient implementation and adequate computational resources are required to handle the large matrix calculations involved.

Answer: The limitation of TF-IDF is that it does not take into account word order or context, as it treats each term independently and therefore may miss the nuanced meaning of sentences or the relationship between words.

Answer: TF-IDF is used in a variety of applications including:

1. Search engines rank documents based on their relevance to a query.

2. Text classification to identify the most significant words to categorize documents

3. Clustering to group similar documents based on key terms

4. Text summarization to extract important sentences from a document

NEWSLETTER

NEWSLETTER