Image by Editor | Midjourney

Rapid technological development has recently taken the fields of artificial intelligence (AI) and large language models (LLMs) to new heights. Among the advances in this area, LangChain and LlamaIndex have emerged as major players. Each has its own set of capabilities and strengths.

This article compares these two technologies, looking at their features, strengths, and real-world applications. If you are an AI developer or enthusiast, this analysis will help you understand which tool best meets your needs.

LangChain

LangChain is a comprehensive framework designed for building LLM-powered applications. Its main objective is to simplify and improve the entire life cycle of LLM applications, making it easier for developers to create, optimize, and deploy AI-based solutions. LangChain achieves this by offering tools and components that streamline the development, productionization, and deployment processes.

Tools offered by LangChain

LangChain tools include model I/O, retrieval, chains, memory, and agents. All these tools are explained in detail below:

Model I/O: At the heart of LangChain's capabilities is the Model I/O (input/output) module, a crucial component for realizing the potential of LLMs. This feature offers developers a standardized, easy-to-use interface for interacting with LLMs, simplifying the creation of LLM-based applications that address real-world challenges.
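The idea of a standardized model interface can be sketched in plain Python. This is a minimal illustration, not LangChain's API: `ChatModel`, `EchoModel`, `ShoutModel`, and `ask` are hypothetical names, and the two "models" are stand-ins that never call a real LLM.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Hypothetical uniform interface: every backend exposes invoke()."""

    def invoke(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in backend that repeats the prompt (no real LLM involved)."""

    def invoke(self, prompt: str) -> str:
        return f"echo: {prompt}"


class ShoutModel:
    """A second stand-in backend with different behavior."""

    def invoke(self, prompt: str) -> str:
        return prompt.upper()


def ask(model: ChatModel, topic: str) -> str:
    # Input side: fill a prompt template. Output side: normalize the reply.
    prompt = "Explain {topic} in one sentence.".format(topic=topic)
    return model.invoke(prompt).strip()


# Application code stays the same when the backend is swapped.
for backend in (EchoModel(), ShoutModel()):
    print(ask(backend, "RAG"))
```

Because `ask` depends only on the shared `invoke` method, swapping one backend for another requires no change to the calling code, which is the benefit a standardized interface is meant to provide.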

Retrieval: In many LLM applications, custom data must be incorporated beyond the original training scope of the models. This is achieved through retrieval-augmented generation (RAG), which involves fetching external data and supplying it to the LLM during the generation process.
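The retrieve-then-generate flow can be sketched without any framework. The example below is an assumption-laden toy: `DOCS` is a hypothetical in-memory knowledge base, bag-of-words counts stand in for learned embeddings, and the final prompt is only printed rather than sent to a model.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory "knowledge base"; a real system would load documents.
DOCS = [
    "LangServe deploys chains as REST APIs.",
    "LangSmith helps trace and evaluate LLM applications.",
    "LlamaIndex focuses on indexing and retrieving private data.",
]


def vectorize(text: str) -> Counter:
    # Bag-of-words term counts stand in for learned embeddings.
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 1) -> list[str]:
    # Rank documents by similarity to the query and keep the top k.
    q = vectorize(query)
    ranked = sorted(DOCS, key=lambda d: cosine(q, vectorize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str) -> str:
    # Retrieved text is placed in the prompt so the model can ground its answer.
    context = "\n".join(retrieve(query))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


print(build_prompt("Which tool deploys chains as REST APIs?"))
```

In a production setup the scoring function would typically be replaced by an embedding model and a vector store, but the shape of the pipeline (retrieve, then inject into the prompt) stays the same.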

Chains: While stand-alone LLMs are sufficient for simple tasks, complex applications require chaining LLMs together, either with each other or with other essential components. LangChain offers two general frameworks for this process: the traditional Chain interface and the modern LangChain Expression Language (LCEL). While LCEL is the preferred way to compose chains in new applications, LangChain also provides valuable pre-built chains, and the two frameworks coexist seamlessly.
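The composition idea behind chaining can be illustrated with a small pipe operator. This is a conceptual sketch only, not LCEL itself: `Step` is a hypothetical class, and `fake_llm` is a stand-in function rather than a model call.

```python
from typing import Any, Callable


class Step:
    """Hypothetical wrapper that lets plain functions be piped with `|`."""

    def __init__(self, fn: Callable[[Any], Any]):
        self.fn = fn

    def __or__(self, other: "Step") -> "Step":
        # Composing two steps yields a new step that runs them in order.
        return Step(lambda x: other.fn(self.fn(x)))

    def invoke(self, value: Any) -> Any:
        return self.fn(value)


# Three stand-in stages: prompt formatting, a fake model, and output parsing.
prompt = Step(lambda topic: f"Write a slogan about {topic}.")
fake_llm = Step(lambda text: f"  [model reply to: {text}]  ")
parser = Step(lambda reply: reply.strip())

chain = prompt | fake_llm | parser
print(chain.invoke("coffee"))
```

Each stage only needs to accept the previous stage's output, so stages can be reordered or replaced independently.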

Memory: Memory in LangChain refers to storing and recalling past interactions. LangChain provides various tools to integrate memory into your systems, meeting both simple and complex needs. This memory can be seamlessly incorporated into chains, allowing them to read and write stored data. Information held in memory guides LangChain chains, improving their responses based on past interactions.
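The read and write sides of conversational memory can be sketched as a bounded buffer. The names `BufferMemory` and `respond` are hypothetical, and the reply is generated by a stand-in instead of an LLM, so this shows the mechanism rather than LangChain's own memory classes.

```python
from dataclasses import dataclass, field


@dataclass
class BufferMemory:
    """Hypothetical memory that keeps the last `max_turns` exchanges."""

    max_turns: int = 3
    turns: list[tuple[str, str]] = field(default_factory=list)

    def save(self, user: str, assistant: str) -> None:
        # Write side: record the exchange, dropping the oldest if over budget.
        self.turns.append((user, assistant))
        self.turns = self.turns[-self.max_turns:]

    def load(self) -> str:
        # Read side: render stored turns as text to prepend to the next prompt.
        return "\n".join(f"User: {u}\nAssistant: {a}" for u, a in self.turns)


def respond(memory: BufferMemory, user_input: str) -> str:
    history = memory.load()
    # Stand-in for an LLM call: report how many prior turns were visible.
    seen = history.count("User:")
    reply = f"(saw {seen} earlier turns) You said: {user_input}"
    memory.save(user_input, reply)
    return reply


memory = BufferMemory(max_turns=2)
for message in ["hi", "my name is Ada", "what is my name?"]:
    print(respond(memory, message))
```

Capping the buffer at `max_turns` is one simple way to keep the prompt within a model's context window; summarizing older turns is a common alternative.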

Agents: Agents are dynamic entities that use the reasoning capabilities of LLMs to determine the sequence of actions in real time. Unlike conventional chains, where the sequence is predefined in the code, agents use the intelligence of language models to decide the next steps and their order dynamically, making them highly adaptable and powerful in orchestrating complex tasks.
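The difference between a fixed chain and an agent loop can be sketched as follows. Everything here is hypothetical: `TOOLS`, `fake_llm_decide`, and `run_agent` are illustrative names, and the decision function is a hard-coded stand-in for the reasoning an LLM would perform.

```python
from typing import Callable

# Hypothetical tools the agent may call; each maps a string to a string.
TOOLS: dict[str, Callable[[str], str]] = {
    "calculator": lambda expr: str(sum(int(n) for n in expr.split("+"))),
    "upper": lambda text: text.upper(),
}


def fake_llm_decide(task: str, observations: list[str]) -> tuple[str, str]:
    """Stand-in for the LLM's reasoning: choose the next action from state."""
    if not observations:
        return "calculator", task  # first, compute the sum
    if len(observations) == 1:
        return "upper", f"total={observations[0]}"  # then format it
    return "finish", observations[-1]  # finally, stop with the answer


def run_agent(task: str, max_steps: int = 5) -> str:
    observations: list[str] = []
    for _ in range(max_steps):
        action, arg = fake_llm_decide(task, observations)
        if action == "finish":
            return arg
        # The next tool is picked at run time, not hard-coded in a fixed order.
        observations.append(TOOLS[action](arg))
    return "stopped: step limit reached"


print(run_agent("2+3+4"))
```

The `max_steps` guard is a design choice worth keeping in real agents too, since a model-driven loop has no built-in guarantee of terminating.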

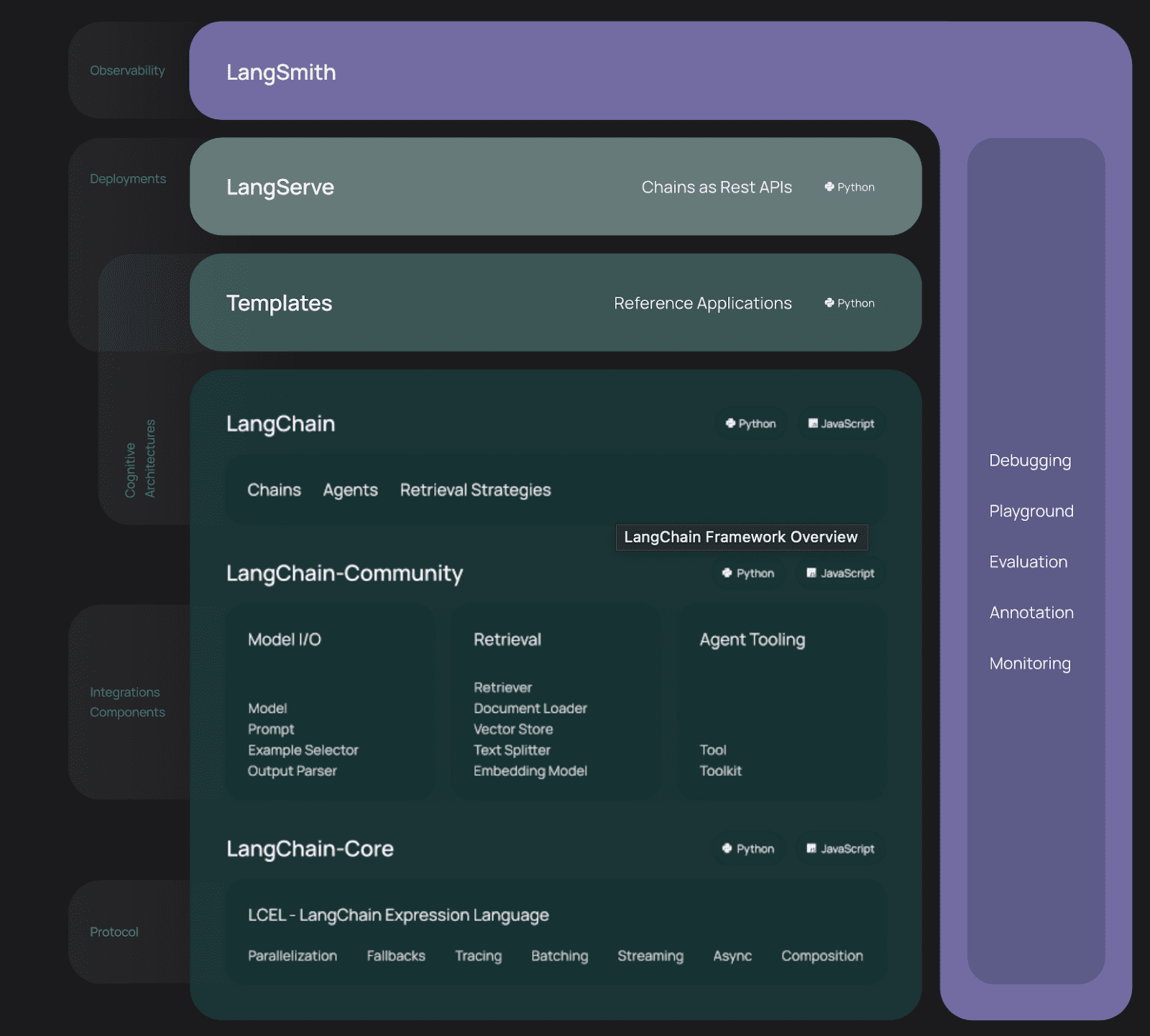

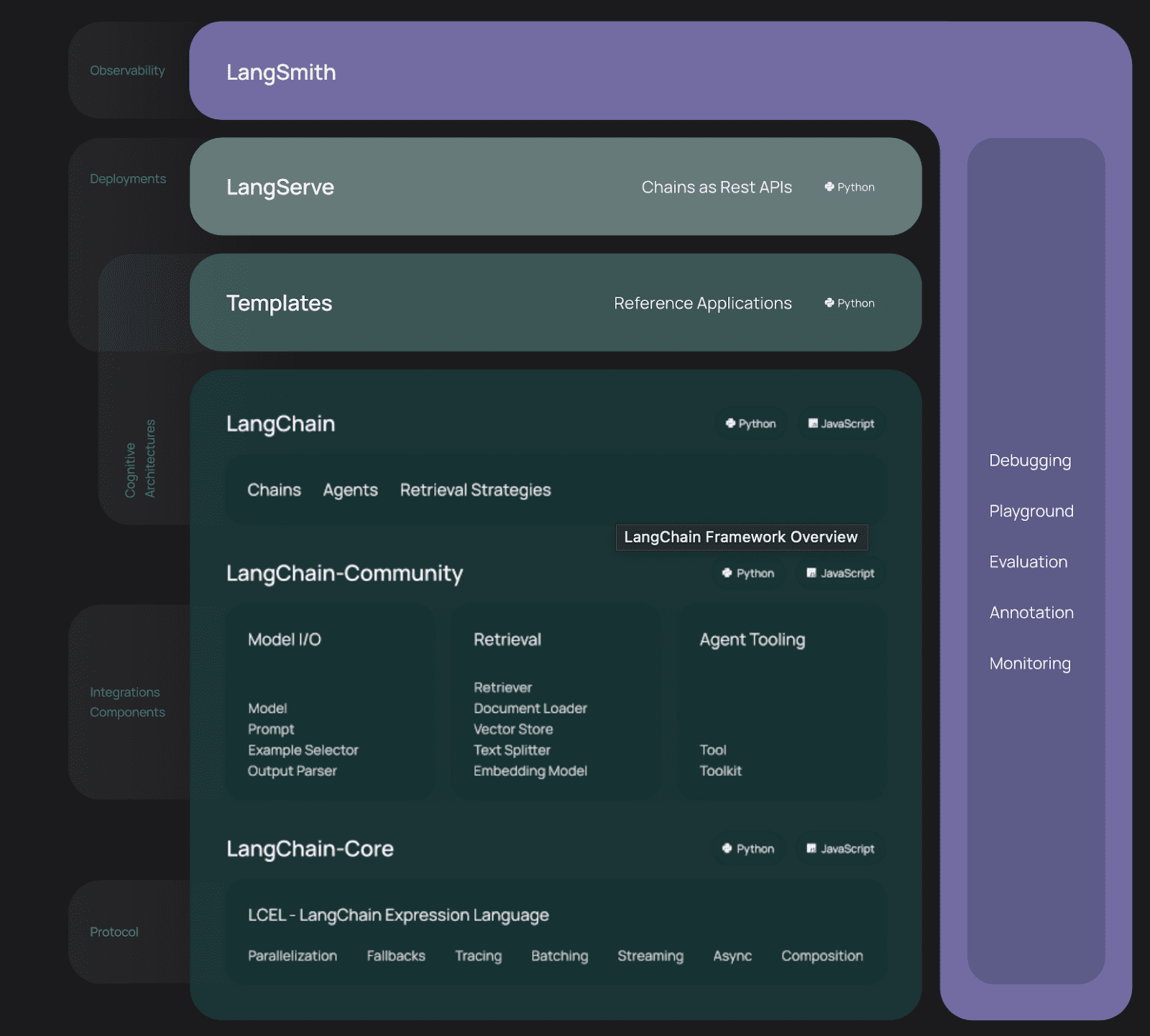

This image shows the architecture of the LangChain framework | Source: LangChain documentation

The LangChain ecosystem comprises the following:

- LangSmith: This helps you trace and evaluate your language model and intelligent agent applications, helping you move from prototype to production.

- LangGraph: A powerful tool for creating stateful, multi-actor applications with LLMs. It is built on top of (and designed for use with) LangChain primitives.

- LangServe: With this tool, you can deploy LangChain runnables and chains as REST APIs.
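To make "a chain as a REST API" concrete, here is a framework-free sketch of the request handling involved. It does not use LangServe: `slogan_chain` is a stand-in chain, `handle_invoke` is a hypothetical handler for a POST endpoint, and the `{"input": ...}` and `{"output": ...}` JSON shapes are assumptions chosen for illustration.

```python
import json


def slogan_chain(topic: str) -> str:
    # Stand-in for a chain; a real one would call an LLM.
    return f"{topic.title()}: built to last."


def handle_invoke(body: bytes) -> tuple[int, str]:
    """Hypothetical handler for POST /invoke: JSON in, JSON out."""
    try:
        payload = json.loads(body)
        topic = payload["input"]
    except (ValueError, KeyError, TypeError):
        # Malformed JSON or a missing "input" field is a client error.
        return 400, json.dumps({"error": "expected a JSON object with an 'input' field"})
    return 200, json.dumps({"output": slogan_chain(str(topic))})


print(handle_invoke(b'{"input": "solar panels"}'))
print(handle_invoke(b"not json"))
```

A serving layer adds routing, validation, and streaming around this core, but the essential contract is the same: deserialize the input, run the chain, serialize the output.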

LlamaIndex

LlamaIndex is a sophisticated framework designed to optimize the development and deployment of LLM-powered applications. It provides a structured approach to integrating LLMs into application software, improving their functionality and performance through a unique architectural design.

LlamaIndex, formerly known as GPT Index, emerged as a dedicated data framework designed to strengthen and elevate the functionalities of LLMs. It focuses on ingesting, structuring, and retrieving private or domain-specific data, presenting an optimized interface for indexing and accessing relevant information within vast textual data sets.

Tools offered by LlamaIndex

Some of the tools LlamaIndex offers include data connectors, engines, data agents, and application integrations. All these tools are explained in detail below:

Data connectors: Data connectors play a crucial role in data integration, simplifying the complex process of linking your data sources to your data repository. They reduce the need for manual data extraction, transformation, and loading (ETL), which can be cumbersome and error-prone. These connectors streamline the process by ingesting data directly from its native source and format, saving time on data conversion. Depending on the connector, they can also help improve data quality, protect data through encryption, increase performance through caching, and reduce the maintenance required for your data integration solution.
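The core job of a connector, turning differently shaped sources into one common record type, can be sketched in plain Python. This is not LlamaIndex's reader API: `Document`, `load_json`, and `load_csv` are hypothetical names, the `"body"` field is an assumed schema, and the inline strings stand in for real files.

```python
import csv
import io
import json
from dataclasses import dataclass, field


@dataclass
class Document:
    """Hypothetical common record that every connector produces."""

    text: str
    metadata: dict = field(default_factory=dict)


def load_json(raw: str, source: str) -> list[Document]:
    # Each JSON object becomes one document; "body" is an assumed field name.
    return [Document(item["body"], {"source": source}) for item in json.loads(raw)]


def load_csv(raw: str, source: str) -> list[Document]:
    # Each CSV row becomes one document; columns are joined as "key: value".
    rows = csv.DictReader(io.StringIO(raw))
    return [
        Document("; ".join(f"{k}: {v}" for k, v in row.items()), {"source": source})
        for row in rows
    ]


json_raw = '[{"body": "Refunds take 5 days."}, {"body": "Support is 24/7."}]'
csv_raw = "product,price\nwidget,9.99\n"

docs = load_json(json_raw, "faq.json") + load_csv(csv_raw, "prices.csv")
for doc in docs:
    print(doc.metadata["source"], "->", doc.text)
```

Once every source yields the same `Document` shape, downstream indexing and retrieval code no longer needs to know where the data came from.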

Engines: LlamaIndex engines enable seamless collaboration between data and LLMs. They provide a flexible framework that connects LLMs to various data sources, simplifying access to real-world information. These engines have an intuitive search system that understands natural-language queries, which makes interacting with data easier. They also organize data for faster access, enrich LLM applications with additional information, and help select the right LLM for specific tasks. LlamaIndex engines are essential for creating various LLM-based applications, bridging the gap between data and LLMs to address real-world challenges.
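How an engine "organizes data for faster access" and then answers a natural-language query can be sketched with a tiny inverted index. This is a simplified stand-in, not LlamaIndex's query engine: `CORPUS`, `build_index`, and `query` are hypothetical, and term overlap replaces the embedding-based ranking a real engine would typically use.

```python
import re
from collections import defaultdict

# Hypothetical corpus; a real application would index ingested documents.
CORPUS = {
    "doc1": "LangChain provides chains, memory, and agents.",
    "doc2": "LlamaIndex provides data connectors and query engines.",
    "doc3": "Query engines answer natural language questions over indexed data.",
}


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z]+", text.lower())


def build_index(corpus: dict[str, str]) -> dict[str, set[str]]:
    # Inverted index: each term maps to the ids of documents that contain it.
    index: dict[str, set[str]] = defaultdict(set)
    for doc_id, text in corpus.items():
        for term in tokenize(text):
            index[term].add(doc_id)
    return index


def query(index: dict[str, set[str]], question: str, k: int = 2) -> list[str]:
    # Score documents by how many question terms they share, then take top k.
    scores: dict[str, int] = defaultdict(int)
    for term in set(tokenize(question)):
        for doc_id in index.get(term, ()):
            scores[doc_id] += 1
    ranked = sorted(scores, key=lambda d: (-scores[d], d))
    return ranked[:k]


index = build_index(CORPUS)
print(query(index, "Which query engines work over indexed data?"))
```

Building the index once up front means each query only touches the documents that share a term with it, instead of scanning the whole corpus.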

Data agents: Data agents are intelligent, LLM-powered knowledge workers within LlamaIndex that are adept at managing your data. They can intelligently navigate structured, semi-structured, and unstructured data sources and interact with external service APIs in an organized manner, handling both "read" and "write" operations. This versatility makes them indispensable for automating data-related tasks. Unlike query engines, which are limited to reading data from static sources, data agents can dynamically ingest and modify data from various tools, making them highly adaptable to evolving data environments.
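The contrast between read-only querying and an agent that can also write can be shown with a toy example. All names are hypothetical: `STORE` stands in for an external service, `read_tool` and `write_tool` are illustrative tools, and `fake_planner` is a hard-coded substitute for the plan an LLM would produce.

```python
# Hypothetical in-memory data store standing in for an external service.
STORE: dict[str, str] = {"ticket-1": "open"}


def read_tool(key: str) -> str:
    return STORE.get(key, "missing")


def write_tool(key: str, value: str) -> str:
    STORE[key] = value
    return f"{key} set to {value}"


def fake_planner(request: str) -> list[tuple]:
    """Stand-in for LLM planning: map a request to a list of tool calls."""
    verb, key, *rest = request.split()
    if verb == "close":
        # A write followed by a read to confirm the change took effect.
        return [(write_tool, key, "closed"), (read_tool, key)]
    return [(read_tool, key)]


def run(request: str) -> list[str]:
    # Execute each planned call in order and collect the results.
    return [tool(*args) for tool, *args in fake_planner(request)]


print(run("status ticket-1"))
print(run("close ticket-1"))
```

The second request changes the store's state, which is exactly what a read-only query engine cannot do.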

App integrations: LlamaIndex excels at creating LLM-based applications, and achieves its full potential through extensive integrations with other tools and services. These integrations facilitate easy connections to a wide range of data sources, observability tools, and application frameworks, enabling the development of more powerful and versatile LLM-based applications.

Implementation comparison

These two technologies can be similar when it comes to building applications. Take a chatbot as an example. This is how you can create a local chatbot using LangChain:

    # Message classes live in langchain_core, which langchain_openai depends on.
    from langchain_core.messages import HumanMessage, SystemMessage
    from langchain_openai import ChatOpenAI

    # Point the client at a local OpenAI-compatible server.
    llm = ChatOpenAI(
        openai_api_base="http://localhost:5000",
        openai_api_key="SK******",
        max_tokens=1600,
        temperature=0.2,
        request_timeout=600,
    )

    chat_history = [
        SystemMessage(content="You are a copywriter."),
        HumanMessage(content="What is the meaning of Large language Evals?"),
    ]

    # invoke() sends the messages and returns the model's reply message.
    print(llm.invoke(chat_history))

This is how to build a local chatbot using LlamaIndex:

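    # Import paths for llama-index releases before 0.10; newer releases expose
    # these as llama_index.core.llms.ChatMessage and
    # llama_index.llms.openai_like.OpenAILike.
    from llama_index.llms import ChatMessage, OpenAILike

    # Point the client at a local OpenAI-compatible server.
    llm = OpenAILike(
        api_base="http://localhost:5000",
        api_key="******",
        is_chat_model=True,
        context_window=32768,
        timeout=600,
    )

    chat_history = [
        ChatMessage(role="system", content="You are a copywriter."),
        ChatMessage(role="user", content="What is the meaning of Large language Evals?"),
    ]

    output = llm.chat(chat_history)
    print(output)

Main differences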

While LangChain and LlamaIndex may exhibit certain similarities and complement each other in building resilient and adaptable LLM applications, they are quite different. Below are notable distinctions between the two platforms:

| Criteria | LangChain | LlamaIndex |
| --- | --- | --- |
| Framework type | Development and deployment framework. | Data framework to enhance LLM capabilities. |
| Main functionality | Provides building blocks for LLM applications. | Focuses on data ingestion, structuring, and access. |
| Modularity | Highly modular, with several independent packages. | Modular design for efficient data management. |
| Performance | Optimized for creating and deploying complex applications. | Excels in text-based search and data retrieval. |
| Development | Uses open-source components and templates. | Provides tools to integrate private or domain-specific data. |
| Productionization | LangSmith for monitoring, debugging, and optimization. | Emphasizes high-quality responses and accurate queries. |
| Deployment | LangServe to turn chains into APIs. | No specific deployment tool is mentioned. |
| Integration | Supports third-party integrations via langchain-community. | Integrates with LLMs to improve data management. |
| Real-world applications | Suitable for complex LLM applications in all industries. | Ideal for document management and accurate information retrieval. |
| Strengths | Versatile, supports multiple integrations, and has a strong community. | Accurate answers, efficient data management, robust tools. |

Final thoughts

Depending on your specific needs and project goals, any LLM-powered application can benefit from using LangChain or LlamaIndex. LangChain is known for its flexibility and advanced customization options, making it ideal for context-aware applications.

LlamaIndex excels at fast data retrieval and generating concise answers, making it perfect for knowledge-based applications such as chatbots, virtual assistants, content-based recommendation systems, and question answering systems. Combining the strengths of LangChain and LlamaIndex can help you create highly sophisticated LLM applications.


Shittu Olumide is a software engineer and technical writer passionate about leveraging cutting-edge technologies to create compelling narratives, with a keen eye for detail and a knack for simplifying complex concepts. You can also find Shittu on Twitter (twitter.com/Shittu_Olumide_).
