Text-to-video generation is advancing rapidly, driven by major advances in transformer architectures and diffusion models. These technologies make it possible to turn text prompts into coherent, dynamic video content, opening new possibilities in multimedia generation. Accurately translating textual descriptions into visual sequences requires sophisticated algorithms that manage the intricate balance between the text and video modalities. Work in this area focuses on improving the semantic alignment between text and generated video, so that results are both visually appealing and faithful to the input prompts.

A fundamental challenge in this field is achieving temporal coherence in long videos: generated sequences must stay consistent over many frames, especially when they depict complex, large-scale motion. Video data inherently contain a wealth of spatial and temporal information, which makes efficient modeling a major hurdle. Another critical issue is ensuring that generated videos accurately follow the text prompt, a task that becomes harder as video length and complexity increase. Solving these challenges is essential both for advancing the field and for building practical text-to-video applications.

Historically, methods addressing these challenges have used variational autoencoders (VAEs) for video compression and transformers to improve text-video alignment. While these methods have improved the quality of generated video, they often struggle to maintain temporal consistency over longer sequences and to align video content with text descriptions when handling intricate motion or large datasets. These limitations in generating high-quality, long-duration videos have driven the search for more advanced solutions.

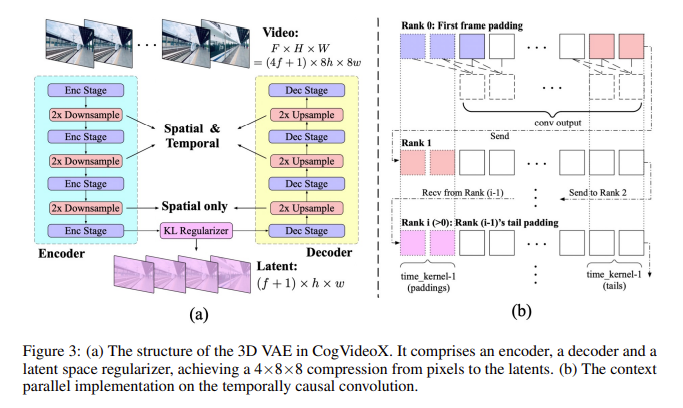

Researchers from Zhipu AI and Tsinghua University have presented CogVideoX, a novel approach that leverages cutting-edge techniques to improve text-to-video generation. CogVideoX employs a 3D causal VAE that compresses video data along both spatial and temporal dimensions, significantly reducing the computational burden while maintaining video quality. The model also integrates an expert transformer with adaptive LayerNorm, which improves the alignment between text and video and allows the two modalities to be integrated more seamlessly. This architecture enables the generation of high-quality, semantically accurate videos that span longer durations than earlier approaches.
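To make the idea of an expert transformer with adaptive LayerNorm more concrete, here is a minimal NumPy sketch. It assumes, for illustration only, that text and video tokens are concatenated into one sequence and that each modality gets its own scale and shift computed from a shared conditioning vector (for example, a diffusion timestep embedding). The names `expert_adaln`, `W_text`, and `W_video` are hypothetical and are not taken from the CogVideoX codebase.

```python
import numpy as np

def layer_norm(x, eps=1e-6):
    # Normalize each token over its feature dimension (no learned affine params).
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def expert_adaln(tokens, num_text, cond, W_text, W_video):
    """Hypothetical modality-specific ("expert") adaptive LayerNorm.

    tokens:   (num_text + num_video, d) concatenated text and video tokens
    num_text: number of leading text tokens in the sequence
    cond:     (c,) conditioning vector, e.g. a timestep embedding (assumption)
    W_text, W_video: (c, 2*d) projections giving [scale, shift] per modality
    """
    normed = layer_norm(tokens)
    # Each modality "expert" maps the same condition to its own scale and shift.
    scale_t, shift_t = np.split(cond @ W_text, 2)
    scale_v, shift_v = np.split(cond @ W_video, 2)
    out = np.empty_like(normed)
    out[:num_text] = normed[:num_text] * (1 + scale_t) + shift_t
    out[num_text:] = normed[num_text:] * (1 + scale_v) + shift_v
    return out

# Usage: 3 text tokens followed by 5 video tokens, feature size 8.
rng = np.random.default_rng(0)
d, c = 8, 4
tokens = rng.normal(size=(3 + 5, d))
cond = rng.normal(size=c)
W_text = rng.normal(size=(c, 2 * d))
W_video = np.zeros((c, 2 * d))  # zero weights: video tokens are only layer-normed
out = expert_adaln(tokens, 3, cond, W_text, W_video)
print(out.shape)  # (8, 8)
```

In this sketch the two modalities would still share the attention and feed-forward weights of the transformer block; only the normalization modulation differs per modality, which is one lightweight way to let a single network handle inputs with very different statistics.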

CogVideoX incorporates several techniques that set it apart from previous models. The 3D causal VAE achieves 4×8×8 pixel-to-latent compression (4× along time and 8× along each spatial dimension), a substantial reduction that preserves video continuity and quality. The expert transformer uses a 3D full attention mechanism that models the video data jointly across space and time, so that large-scale motions are represented accurately. The model also relies on a dedicated video captioning pipeline that generates new textual descriptions for the training videos, improving the semantic alignment between videos and input text. This pipeline includes video filtering to remove low-quality clips and a dense video captioning method that improves the model's understanding of video content.
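The effect of 4×8×8 compression on the sequence that 3D full attention must process can be worked through with a short calculation. The sketch below assumes a causal temporal scheme in which the first frame is encoded on its own and each later group of 4 frames maps to one latent frame, giving 1 + (T − 1)/4 latent frames; it also assumes a 2×2 spatial patch size in the transformer. The 49-frame, 480×720 input is an illustrative choice, and `latent_shape` and `num_video_tokens` are hypothetical helpers.

```python
def latent_shape(frames, height, width, t_ratio=4, s_ratio=8):
    """Latent (frames, height, width) under an assumed causal 4x8x8 compression."""
    if (frames - 1) % t_ratio != 0:
        raise ValueError("this sketch assumes frames = 1 + k * t_ratio")
    if height % s_ratio or width % s_ratio:
        raise ValueError("height and width must be divisible by s_ratio")
    # First frame kept on its own; every later block of t_ratio frames -> 1 latent frame.
    return 1 + (frames - 1) // t_ratio, height // s_ratio, width // s_ratio

def num_video_tokens(latent, patch=2):
    """Tokens seen by 3D full attention: every latent frame, cut into patch x patch tiles."""
    t, h, w = latent
    return t * (h // patch) * (w // patch)

lat = latent_shape(49, 480, 720)
tokens = num_video_tokens(lat)
print(lat)          # (13, 60, 90)
print(tokens)       # 17550  (13 * 30 * 45)
print(tokens ** 2)  # 308002500 entries in one full attention map

# For comparison: spatial compression only, all 49 frames kept.
print(num_video_tokens((49, 60, 90)))  # 66150
```

Because full attention compares every token with every other token, its cost grows with the square of the sequence length. Under these assumptions, temporal compression alone gives about 3.8× fewer tokens (17550 versus 66150) and roughly 14× fewer attention entries.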

CogVideoX is available in two variants, CogVideoX-2B and CogVideoX-5B, each with different capabilities. The 2B variant is designed for settings with limited computational resources, offering a balance between generation quality and model size. The 5B variant is the high-end option: a larger model that performs better in more complex scenarios. It excels at handling intricate video dynamics and producing videos with a higher level of detail, which makes it suitable for more demanding applications. Both variants are publicly available.
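A rough way to see why the 2B variant suits tighter budgets is to estimate the memory taken by the transformer weights alone. This sketch assumes the nominal 2B and 5B parameter counts and 2 bytes per parameter (16-bit weights); it ignores the VAE, the text encoder, activations, and framework overhead, so real memory use will be higher. `weight_memory_gib` is a hypothetical helper.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}

def weight_memory_gib(num_params, dtype="bf16"):
    """Memory for the model weights alone, in GiB (1 GiB = 2**30 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30

# Nominal parameter counts (assumption: real counts differ slightly).
for name, params in [("CogVideoX-2B", 2e9), ("CogVideoX-5B", 5e9)]:
    print(f"{name}: {weight_memory_gib(params):.2f} GiB (16-bit weights only)")
# CogVideoX-2B: 3.73 GiB (16-bit weights only)
# CogVideoX-5B: 9.31 GiB (16-bit weights only)
```

Memory for the weights scales linearly with both parameter count and bytes per parameter, so the 5B model needs about 2.5× the weight memory of the 2B model at the same precision.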

CogVideoX has been evaluated on a range of metrics, and the results show that it outperforms existing models on several of them. In particular, it performs strongly in human action recognition, scene representation, and dynamic quality, scoring 95.2, 54.65, and 2.74, respectively. Its ability to generate consistent, detailed videos from text prompts marks a significant advance in the field. Radar-chart comparisons show CogVideoX's lead most clearly in complex dynamic scenes, where it outperforms previous models.

In conclusion, CogVideoX addresses key challenges in text-to-video generation with a robust framework that combines efficient video data modeling with improved text-video alignment. A 3D causal VAE and an expert transformer, together with progressive training techniques such as mixed-resolution and mixed-length training, enable CogVideoX to produce long, semantically accurate videos with meaningful motion. The two variants, CogVideoX-2B and CogVideoX-5B, offer flexibility across use cases, so the model can be applied in a variety of scenarios.

Check out the Paper, Model Card, GitHub, and Demo. All credit for this research goes to the researchers of this project.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary engineer and entrepreneur, Asif is committed to harnessing the potential of AI for social good. His most recent initiative is the launch of an AI media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is technically sound and easily understandable to a wide audience. The platform has over 2 million monthly views, illustrating its popularity among the public.
