One of the main challenges of Retrieval Augmented Generation (RAG) models is the efficient management of extensive contextual inputs. While RAG models improve upon extensive language models (LLMs) by incorporating external information, this extension significantly increases the input length, leading to longer decoding times. This issue is critical as it directly impacts user experience by prolonging response times, particularly in real-time applications such as complex question-answering systems and large-scale information retrieval tasks. Addressing this challenge is crucial to advancing ai research as it makes LLMs more practical and efficient for real-world applications.

Current methods to address this challenge mainly involve context compression techniques, which can be divided into lexicon-based and embedding-based approaches. Lexicon-based methods filter out unimportant tokens or terms to reduce the input size, but often overlook nuanced contextual information. Embedding-based methods transform context into fewer embedding tokens, but suffer from limitations such as large model sizes, low effectiveness due to untuned decoder components, fixed compression ratios, and inefficiencies in handling multiple context documents. These limitations restrict their performance and applicability, particularly in real-time processing scenarios.

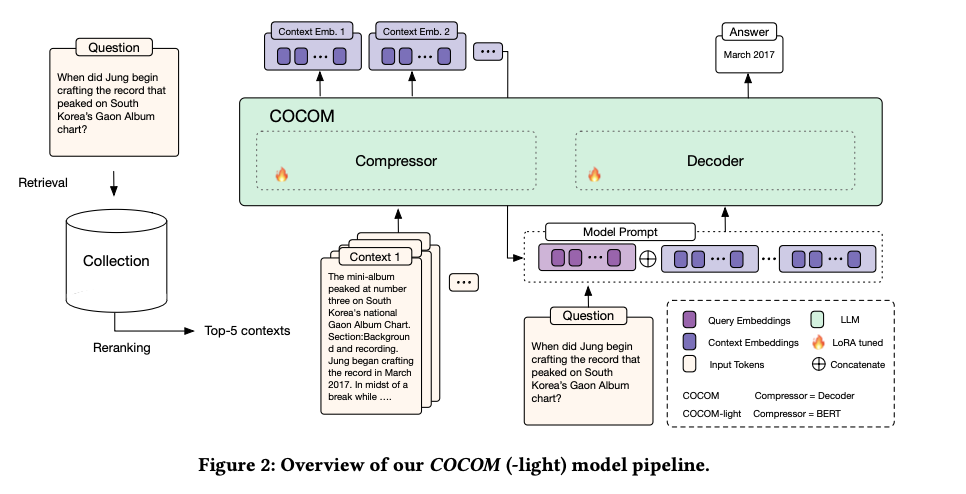

A team of researchers from the University of Amsterdam, the University of Queensland, and Naver Labs Europe present COCOM (COntext COmpression Model), a novel and efficient context compression method that overcomes the limitations of existing techniques. COCOM compresses long contexts into a small number of context embeddings, significantly speeding up generation time while maintaining high performance. This method offers various compression rates, allowing a trade-off between decoding time and response quality. The innovation lies in its ability to effectively handle multiple contexts, unlike previous methods that struggled with multi-document contexts. By using a single model for both context compression and response generation, COCOM demonstrates substantial improvements in speed and performance, providing a more efficient and accurate solution compared to existing methods.

COCOM involves compressing contexts into a set of context embeddings, which significantly reduces the input size for the LLM. The approach includes pre-training tasks such as auto-encoding and language modeling from context embeddings. The method uses the same model for both compression and response generation, ensuring efficient utilization of the compressed context embeddings by the LLM. The dataset used for training includes several QA datasets such as Natural Questions, MS MARCO, HotpotQA, WikiQA, and others. Evaluation metrics focus on exact match (EM) and matching (M) scores to evaluate the effectiveness of the generated responses. Key technical aspects include parameter-efficient tuning of LoRA and use of SPLADE-v3 for retrieval.

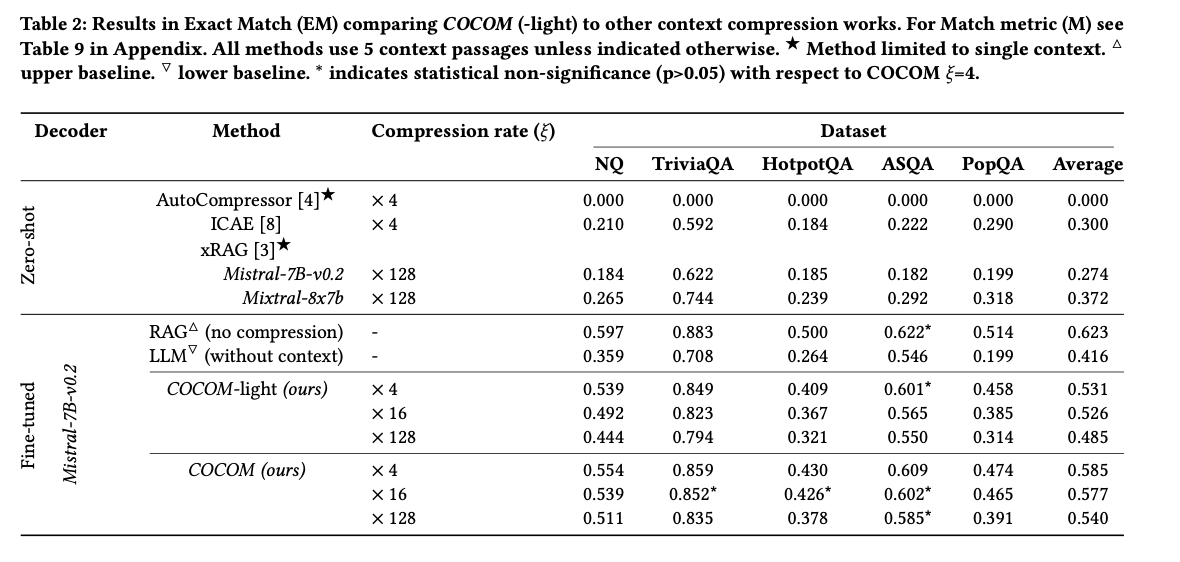

COCOM achieves significant improvements in decoding efficiency and performance metrics. It demonstrates up to a 5.69x speedup in decoding time while maintaining high performance compared to existing context compression methods. For example, COCOM achieved an exact match (EM) score of 0.554 on the Natural Questions dataset with a compression ratio of 4 and 0.859 on TriviaQA, significantly outperforming other methods such as AutoCompressor, ICAE, and xRAG. These improvements highlight COCOM’s superior ability to handle longer contexts more effectively while maintaining high response quality, demonstrating the method’s efficiency and robustness across multiple datasets.

In conclusion, COCOM represents a significant advancement in context compression for RAG models by reducing decoding time while maintaining high performance. Its ability to handle multiple contexts and offer adaptive compression rates makes it a critical development for improving the scalability and efficiency of RAG systems. This innovation has the potential to greatly improve the practical application of LLMs in real-world scenarios, overcoming critical challenges and paving the way for more efficient and responsive ai applications.

Review the Paper. All credit for this research goes to the researchers of this project. Also, don't forget to follow us on twitter.com/Marktechpost”>twitter.

Join our Telegram Channel and LinkedIn GrAbove!.

If you like our work, you will love our Newsletter..

Don't forget to join our Subreddit with over 46 billion users

Aswin AK is a Consulting Intern at MarkTechPost. He is pursuing his dual degree from Indian Institute of technology, Kharagpur. He is passionate about Data Science and Machine Learning and has a strong academic background and practical experience in solving real-world interdisciplinary challenges.

<script async src="//platform.twitter.com/widgets.js” charset=”utf-8″>

NEWSLETTER

NEWSLETTER