The problem of a mediator learning to coordinate a group of strategic agents is posed through recommendations of actions without knowing their underlying utility functions, such as the route that drivers should follow through a road network. The challenge lies in the difficulty of manually specifying the quality of these recommendations, making it necessary to provide the mediator with data on the desired coordination behavior. This transforms the problem into one of multi-agent imitation learning (MAIL). A fundamental issue in MAIL is identifying the right goal for the learner, explored through the development of personalized route recommendations for users.

Current research to solve the challenges of multi-agent imitation learning includes several methodologies. Single-agent imitation learning techniques, such as behavioral cloning, reduce imitation to supervised learning but suffer from covariate shifts, leading to compound errors. Interactive approaches, such as inverse reinforcement learning (RL), allow learners to observe the consequences of their actions, which avoids compound errors, but are sample-inefficient. The next approach is multi-agent imitation learning, where the concept of the regret gap has been explored but not fully utilized in Markov games. The third approach, inverse game theory, focuses on recovering utility functions rather than learning coordination from demonstrations.

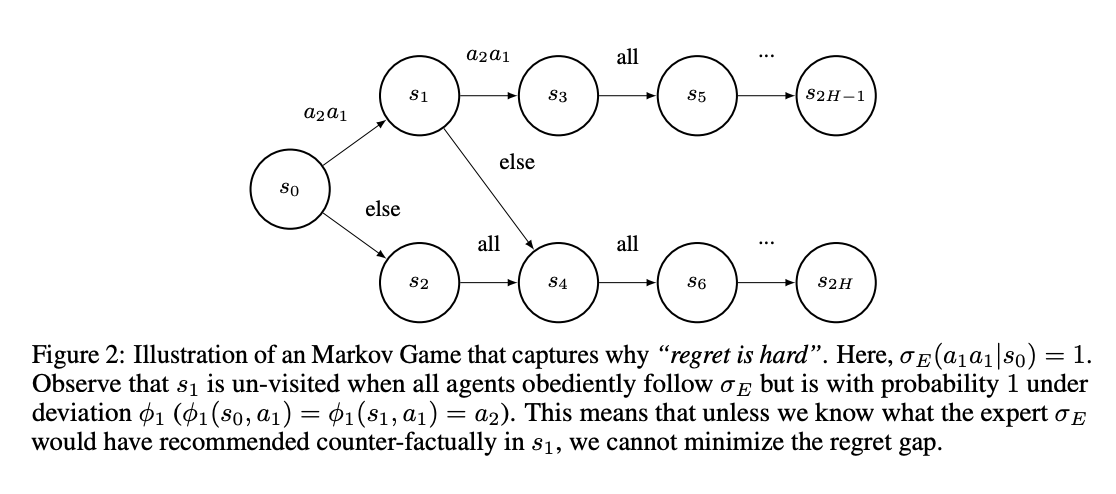

Researchers at Carnegie Mellon University have proposed an alternative objective for multi-agent imitation learning (MAIL) in Markov games, called the regret gap, which explicitly takes into account possible deviations of agents in the group. They investigated the relationship between value and regret gaps, and showed that while the value gap can be minimized using single-agent imitation learning (IL) algorithms, this does not prevent the regret gap from becoming arbitrarily large. This finding indicates that achieving regret equivalence is harder than achieving value equivalence in MAIL. To address this, two efficient reductions for regret-free online convex optimization are developed: (a) MALICE, under an expert coverage assumption, and (b) BLADES, with access to a queryable expert.

Although the value gap is considered a “weaker” objective, it can be a reasonable learning objective in real-world applications where agents are not strategic. The natural multi-agent generalization of single-agent imitation learning algorithms can efficiently minimize the value gap, making it relatively easy to achieve in MAIL. Two such single-agent IL algorithms, behavior cloning (BC) and inverse reinforcement learning (IRL), are used to minimize the value gap. These algorithms run on top of joint policies where BC and IRL are applied to the multi-agent environment, becoming joint behavior cloning (J-BC) and joint inverse reinforcement learning (J-IRL). These adaptations result in the same value gap bounds as in the single-agent environment.

Multi-Agent Loss Aggregation to Mimic Cache Experts (MALICE) is an efficient algorithm that extends the ALICE algorithm to the multi-agent setting. ALICE is an interactive algorithm that uses importance sampling to reweight the BC loss based on the density ratio between the learner's current policy and that of the expert. It requires full proof coverage to ensure finite importance weights. ALICE uses a regret-free algorithm to learn a policy that minimizes the reweighted policy error, ensuring a linear bound in H on the value gap under a recoverability assumption. MALICE adapts these principles to multi-agent settings, providing a robust solution to minimize the regret gap.

In conclusion, researchers at Carnegie Mellon University have introduced an alternative objective for MAIL in Markov games called the regret gap. For strategic agents that are not mere puppets, another source of distributional change arises from agents’ deviations within the population. This change cannot be efficiently controlled through interaction with the environment, like inverse RL. Therefore, it requires estimating the expert’s actions in counterfactual states. Using this insight, the researchers derived two reductions that can minimize the regret gap under a coverage or consultable expert assumption. Future work includes developing and implementing practical approximations of these idealized algorithms.

Review the Paper. All credit for this research goes to the researchers of this project. Also, don't forget to follow us on twitter.com/Marktechpost”>twitter and join our Telegram Channel and LinkedIn GrAbove!. If you like our work, you will love our Newsletter..

Don't forget to join our Over 47,000 ML subscribers on Reddit

Find upcoming ai webinars here

Sajjad Ansari is a final year student from IIT Kharagpur. As a technology enthusiast, he delves into practical applications of ai, focusing on understanding the impact of ai technologies and their real-world implications. He aims to articulate complex ai concepts in a clear and accessible manner.

<script async src="//platform.twitter.com/widgets.js” charset=”utf-8″>