Introduction

Function calling in large language models (LLMs) has transformed the way AI agents interact with external systems, APIs, and tools, enabling structured decision making based on natural language cues. Given functions described by JSON schemas, these models can select external operations and generate the arguments needed to execute them, offering new levels of automation. This article demonstrates how function calling can be implemented using Mistral 7B, a model designed for instruction-following tasks.

Learning outcomes

- Understand the role and types of AI agents in generative AI.

- Learn how function calls enhance LLM capabilities using JSON schemas.

- Configure and load the Mistral 7B model for text generation.

- Implement function calls with an LLM to execute external operations.

- Extract function arguments from user queries and generate responses using Mistral 7B.

- Run real-time functions like weather queries with structured results.

- Extend AI agent functionality across multiple domains using multiple tools.

This article was published as part of the Data Science Blogathon.

What are AI agents?

In the realm of generative AI (GenAI), AI agents represent a significant evolution in artificial intelligence capabilities. These agents use models, such as large language models (LLMs), to create content, simulate interactions, and perform complex tasks autonomously. This extends their functionality and applicability across domains such as customer service, education, and medicine.

They can be of various types (as shown in the figure below), including:

- Humans in the loop (e.g. to provide feedback)

- Code executors (e.g. IPython kernel)

- Tool executors (for example, function or API executions)

- Models (LLM, VLM, etc.)

Function calling combines code execution, tool execution, and model inference. While the LLM handles natural language understanding and generation, the code executor can run any code fragment needed to satisfy the user's request.

We can also use humans in the loop to get feedback during the process or to decide when to end it.

What are function calls in large language models?

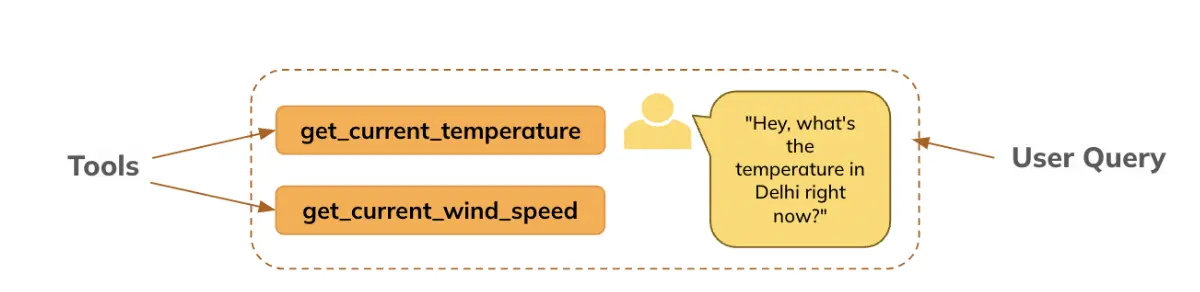

Developers define functions using JSON schemas (which are passed to the model), and the model generates the necessary arguments for these functions based on user input. For example, you can call weather APIs to provide real-time weather updates based on user queries (we'll look at a similar example in this article). With function calling, LLMs can intelligently select which functions or tools to use in response to a user request. This capability allows agents to make autonomous decisions about how best to accomplish a task, improving their efficiency and responsiveness.
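As a rough illustration of what such a schema can look like, the sketch below writes one by hand for a temperature-lookup function, using the commonly seen "function" tool layout. The exact fields a given model expects may differ, and the helper `missing_required_args` is a hypothetical name introduced here only to show how generated arguments can be checked against the schema.

```python
import json

# Hand-written JSON schema describing one callable tool (illustrative only).
temperature_tool = {
    "type": "function",
    "function": {
        "name": "get_current_temperature",
        "description": "Get the current temperature at a location.",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {
                    "type": "string",
                    "description": 'The location, in the format "City, Country".',
                },
                "unit": {
                    "type": "string",
                    "enum": ["celsius", "fahrenheit"],
                    "description": "The unit to return the temperature in.",
                },
            },
            "required": ["location", "unit"],
        },
    },
}


def missing_required_args(tool: dict, arguments: dict) -> list:
    """Return the required parameter names that are absent from `arguments`."""
    required = tool["function"]["parameters"]["required"]
    return [name for name in required if name not in arguments]


# Arguments as a model might generate them for a question about Delhi.
generated = {"location": "Delhi, India", "unit": "celsius"}

print(json.dumps(temperature_tool, indent=2))
print(missing_required_args(temperature_tool, generated))
```

An empty list from the check means every required argument was supplied; anything else names the arguments the model left out.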

This article demonstrates how to use the LLM (here, Mistral) to generate arguments for a defined function based on the user's question. Specifically, the user asks about the temperature in Delhi, and the model extracts the arguments. The function then uses those arguments to get the information in real time (here, we have configured it to return a default value for demonstration purposes). Finally, the LLM generates a response in simple language for the user.

Building a pipeline for Mistral 7B: model and text generation

Let's import the necessary libraries and load the Hugging Face model and tokenizer to set up inference. The model is available on the Hugging Face Hub.

Importing required libraries

from transformers import pipeline  # For sequential text generation
from transformers import AutoModelForCausalLM, AutoTokenizer  # For loading the model and tokenizer from the Hugging Face repository
import warnings

warnings.filterwarnings("ignore")  # Suppress warning messages in the output

Provide the name of the Hugging Face model repository for Mistral 7B

model_name = "mistralai/Mistral-7B-Instruct-v0.3"

Downloading the model and tokenizer

- Since this LLM is a gated model, you will first need to register with Hugging Face and agree to the model's terms and conditions. After registering, you can generate a user access token from your account settings to download this model to your machine.

- After generating the token, pass it (in hf_token) to load the model.
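One way to avoid pasting the token directly into the notebook is to read it from an environment variable. The sketch below assumes the token has been exported as `HF_TOKEN`; the helper name `load_hf_token` is hypothetical and not part of any library.

```python
import os


def load_hf_token(env_var: str = "HF_TOKEN") -> str:
    """Read the access token from an environment variable, failing loudly if unset."""
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(
            f"Set the {env_var} environment variable to your access token."
        )
    return token
```

With the variable set, `hf_token = load_hf_token()` provides the value used when loading the model and tokenizer.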

# hf_token must hold your Hugging Face user access token (a string)
model = AutoModelForCausalLM.from_pretrained(model_name, token=hf_token, device_map="auto")
tokenizer = AutoTokenizer.from_pretrained(model_name, token=hf_token)

Implementation of function calls with Mistral 7B

Implementing function calling with Mistral 7B lets developers build agents that interact with external systems and return accurate, context-aware responses.

Step 1: Tool specification (function) and query (initial message)

Here, we define the tools (functions) that the model will be told about, so that it can generate the function arguments from the user's query.

The tool is defined below:

def get_current_temperature(location: str, unit: str) -> float:
    """
    Get the current temperature at a location.

    Args:
        location: The location to get the temperature for, in the format "City, Country".
        unit: The unit to return the temperature in. (choices: ["celsius", "fahrenheit"])

    Returns:
        The current temperature at the specified location in the specified units, as a float.
    """
    # Fixed output for demonstration purposes; in real life this would be a working function.
    return 30.0 if unit == "celsius" else 86.0

The prompt template for Mistral must follow the specific format below.

Query (the message) to be passed to the model

messages = (

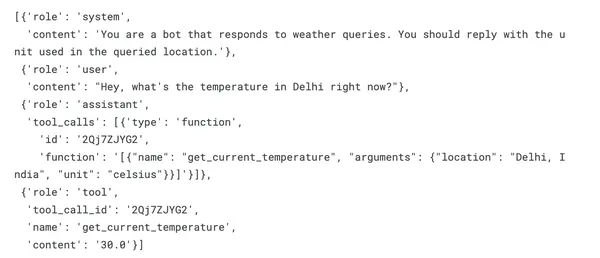

{"role": "system", "content": "You are a bot that responds to weather queries. You should reply with the unit used in the queried location."},

{"role": "user", "content": "Hey, what's the temperature in Delhi right now?"}

)Step 2: Model generates function arguments, if applicable

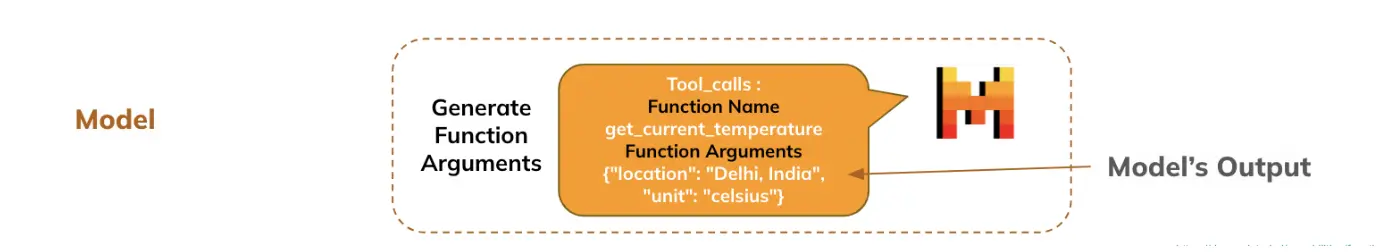

Generally, the user's query is passed to the LLM along with information about the available functions. Based on this, the LLM extracts the arguments for the function to be executed from the user's query.

- Apply the Mistral-specific chat template for function calling.

- The model generates a response stating which function to call and with which arguments.

- The LLM chooses which function to execute and extracts the arguments from the natural language provided by the user.

inputs = tokenizer.apply_chat_template(
    messages,  # The initial prompt or conversation context, as a list of messages.
    tools=[get_current_temperature],  # The tools (functions) available during the conversation, e.g. APIs or helpers for fetching temperature or wind speed.
    add_generation_prompt=True,  # Append the tokens that mark the start of the assistant's reply, so the model generates a response.
    return_dict=True,  # Return a dictionary, which allows easier access to the tokenized inputs.
    return_tensors="pt"  # Return PyTorch tensors, as expected by the model.
)
inputs = {k: v.to(model.device) for k, v in inputs.items()}  # Move all input tensors to the same device (CPU/GPU) as the model.
outputs = model.generate(**inputs, max_new_tokens=128)
response = tokenizer.decode(outputs[0][len(inputs["input_ids"][0]):], skip_special_tokens=True)  # Decode only the newly generated tokens into human-readable text.
print(response)

Output: [{"name": "get_current_temperature", "arguments": {"location": "Delhi, India", "unit": "celsius"}}]

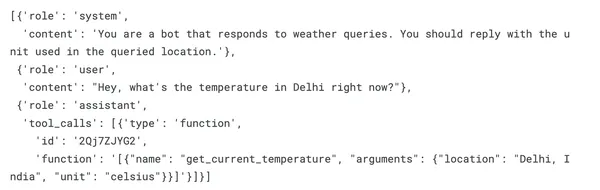
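To make the chat-template step more concrete, the sketch below approximates how a single-turn query with tools might be laid out as text for Mistral-style instruct models. It is a simplified illustration, not the real template: the function name `sketch_mistral_tool_prompt` is hypothetical, the spacing is an assumption, and the authoritative layout is whatever the tokenizer's own chat template produces.

```python
import json


def sketch_mistral_tool_prompt(system: str, user: str, tool_schemas: list) -> str:
    """Approximate the prompt layout for one user turn with tools attached.

    Simplified illustration only: exact spacing and special-token handling
    are defined by the chat template shipped with the tokenizer.
    """
    tools_block = "[AVAILABLE_TOOLS] " + json.dumps(tool_schemas) + "[/AVAILABLE_TOOLS]"
    instruction = "[INST] " + system + "\n\n" + user + "[/INST]"
    return tools_block + instruction


# A deliberately tiny schema; a real one would also describe the parameters.
schema = {"type": "function", "function": {"name": "get_current_temperature"}}

prompt = sketch_mistral_tool_prompt(
    "You are a bot that responds to weather queries.",
    "Hey, what's the temperature in Delhi right now?",
    [schema],
)
print(prompt)
```

To see the actual prompt rather than this approximation, `tokenizer.apply_chat_template` can be called with `tokenize=False`, which returns the formatted string instead of token IDs.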

Step 3: Generate a unique tool caller ID (Mistral specific)

The ID is used to uniquely identify tool calls and match them with their corresponding responses, ensuring consistency and supporting error handling in complex interactions with external tools.

import json
import random
import string
import re

Generate a random tool_call_id

tool_call_id = "".join(random.choices(string.ascii_letters + string.digits, k=9))

Add the tool call to the conversation

messages.append({"role": "assistant", "tool_calls": [{"type": "function", "id": tool_call_id, "function": response}]})
print(messages)  # The conversation now includes the assistant's tool call
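The ID handling in this step can be wrapped in small helpers, sketched below. The helper names are hypothetical; the nine-character alphanumeric format mirrors what this article generates, and the example messages only illustrate how the same ID links a tool call to its result.

```python
import random
import string

ID_ALPHABET = string.ascii_letters + string.digits
ID_LENGTH = 9  # the length used in this article


def new_tool_call_id() -> str:
    """Generate a random alphanumeric ID of the expected length."""
    return "".join(random.choices(ID_ALPHABET, k=ID_LENGTH))


def is_valid_tool_call_id(value: str) -> bool:
    """Check that an ID has the expected length and only ASCII letters or digits."""
    return len(value) == ID_LENGTH and all(ch in ID_ALPHABET for ch in value)


call_id = new_tool_call_id()

# The same ID appears in the assistant's tool call and in the tool's reply.
assistant_message = {
    "role": "assistant",
    "tool_calls": [{"type": "function", "id": call_id, "function": {"name": "get_current_temperature"}}],
}
tool_message = {"role": "tool", "tool_call_id": call_id, "content": "30.0"}

print(is_valid_tool_call_id(call_id))  # True
```

Validating the ID before building the messages surfaces a malformed value early, instead of at template-rendering time.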

Step 4: Parse the response in JSON format

try:
    tool_call = json.loads(response)[0]
except json.JSONDecodeError:
    # Step 1: Extract the JSON-like part using regex
    json_part = re.search(r"\[.*\]", response, re.DOTALL).group(0)
    # Step 2: Convert it to a list of dictionaries
    tool_call = json.loads(json_part)[0]

print(tool_call)

Output: {'name': 'get_current_temperature', 'arguments': {'location': 'Delhi, India', 'unit': 'celsius'}}

Note: In some cases, the model may also produce extra text along with the function information and arguments. The except block is responsible for extracting the JSON portion from that output.
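A slightly more defensive version of this parsing step is sketched below. It is not the article's original code: `extract_tool_calls` is a hypothetical helper that returns an empty list instead of raising when no tool call can be recovered, which makes it easier to fall back to treating the output as a plain-text answer.

```python
import json
import re


def extract_tool_calls(text: str) -> list:
    """Return the tool calls found in `text`, or an empty list if none parse."""
    try:
        parsed = json.loads(text)
    except json.JSONDecodeError:
        # Fall back to the first "[" through the last "]" in the text.
        match = re.search(r"\[.*\]", text, re.DOTALL)
        if match is None:
            return []
        try:
            parsed = json.loads(match.group(0))
        except json.JSONDecodeError:
            return []
    if isinstance(parsed, dict):
        parsed = [parsed]  # tolerate a single call that is not wrapped in a list
    if not isinstance(parsed, list):
        return []
    # Keep only entries that look like tool calls.
    return [
        call for call in parsed
        if isinstance(call, dict) and "name" in call and "arguments" in call
    ]


noisy = (
    'Sure, calling the tool: [{"name": "get_current_temperature", '
    '"arguments": {"location": "Delhi, India", "unit": "celsius"}}] Done.'
)
print(extract_tool_calls(noisy))
print(extract_tool_calls("It is sunny today."))
```

The first call recovers the tool call despite the surrounding text; the second returns an empty list because the text contains no JSON.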

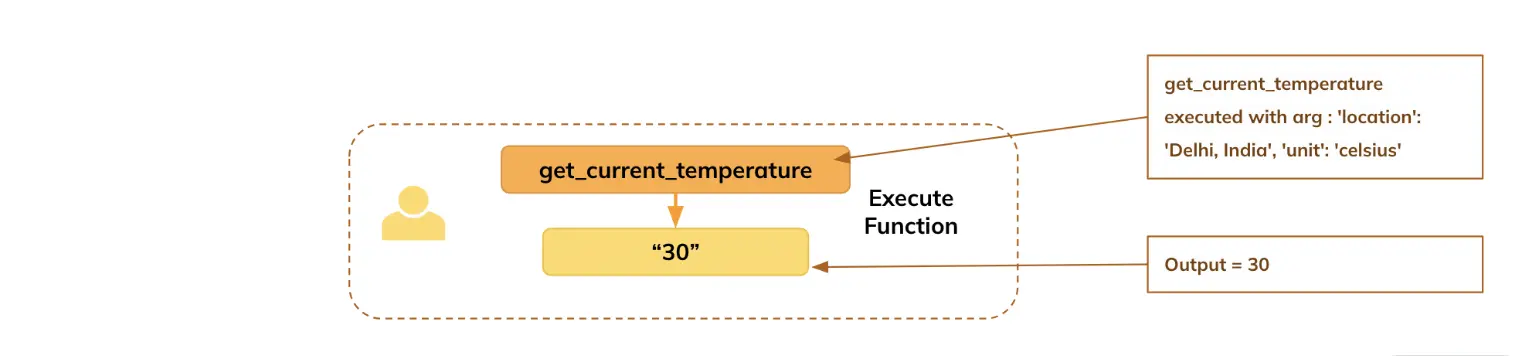

Step 5: Run functions and get results

The arguments generated by the model are passed to the corresponding function, which is executed to obtain the result.

function_name = tool_call["name"]  # Name of the tool (function) from the tool_call dictionary.
arguments = tool_call["arguments"]  # Arguments for the function from the tool_call dictionary.
temperature = get_current_temperature(**arguments)  # Call the "get_current_temperature" function with the extracted arguments.
messages.append({"role": "tool", "tool_call_id": tool_call_id, "name": "get_current_temperature", "content": str(temperature)})

Step 6: Generate the final response based on the result of the function

# The list now contains everything: the query, the tool call details, and the output of the function
print(messages)

Prepare the message to pass complete information to the model

inputs = tokenizer.apply_chat_template(
    messages,
    add_generation_prompt=True,
    return_dict=True,
    return_tensors="pt"
)
inputs = {k: v.to(model.device) for k, v in inputs.items()}

The model generates the final answer

Finally, the model generates the final response based on the entire conversation, starting from the user's query, and that response is shown to the user.

- **inputs: Unpacks the input dictionary, which contains the tokenized data that the model needs to generate text.

- max_new_tokens=128: Limits the generated response to a maximum of 128 new tokens, preventing the model from generating excessively long responses.

outputs = model.generate(**inputs, max_new_tokens=128)
final_response = tokenizer.decode(outputs[0][len(inputs["input_ids"][0]):], skip_special_tokens=True)

# Final response
print(final_response)

Output: The current temperature in Delhi is 30 degrees Celsius.
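The decode step drops the prompt tokens before decoding, because for decoder-only models the generated sequence starts with the prompt and continues with the new tokens. The toy example below shows the same slicing on plain Python lists; the token IDs are made up and stand in for the real tensors.

```python
# Toy token IDs standing in for real tensors (values are made up).
prompt_ids = [1, 733, 16289, 28793]          # what was fed to the model
generated_ids = prompt_ids + [415, 1868, 2]  # prompt followed by the new tokens

prompt_length = len(prompt_ids)
new_token_ids = generated_ids[prompt_length:]  # keep only the continuation

print(new_token_ids)  # [415, 1868, 2]
```

Without the slice, the decoded text would repeat the whole conversation before the model's answer.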

Conclusion

We created our first agent that can give us real-time temperature statistics around the world! Of course, we used a fixed temperature as the default, but you can connect it to weather APIs that return real-time data.

Technically speaking, based on the user's natural language query, we were able to obtain the necessary arguments from the LLM to execute the function, obtain the results, and then generate a natural language response from the LLM.

What if we wanted to know other factors such as wind speed, humidity, and UV index? We just need to define functions for those factors and pass them in the tools argument of the chat template. In this way, we can create a complete weather agent that has access to real-time weather information.
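A minimal sketch of that extension is shown below, assuming a second stub tool and a name-to-function registry. `get_current_wind_speed`, its fixed return value, and the `run_tool_call` helper are all hypothetical and exist only to illustrate dispatching by the function name the model returns.

```python
def get_current_temperature(location: str, unit: str) -> float:
    """Stub, as in this article: returns a fixed value for demonstration."""
    return 30.0 if unit == "celsius" else 86.0


def get_current_wind_speed(location: str) -> float:
    """Hypothetical second tool: returns a fixed wind speed in km/h."""
    return 6.0


# Registry mapping each tool's name to the callable that implements it.
TOOLS = {fn.__name__: fn for fn in (get_current_temperature, get_current_wind_speed)}


def run_tool_call(tool_call: dict) -> str:
    """Look up the requested tool by name and call it with the generated arguments."""
    name = tool_call["name"]
    if name not in TOOLS:
        raise ValueError(f"Unknown tool: {name}")
    return str(TOOLS[name](**tool_call["arguments"]))


print(run_tool_call({"name": "get_current_wind_speed", "arguments": {"location": "Delhi, India"}}))
print(run_tool_call({
    "name": "get_current_temperature",
    "arguments": {"location": "Delhi, India", "unit": "celsius"},
}))
```

The same registry can supply the tool list for the chat template, for example `tools=list(TOOLS.values())`, so the functions the model is told about and the functions that can be executed stay in sync.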

Key takeaways

- AI agents leverage LLMs to autonomously perform tasks in various fields.

- Integrating function calls with LLMs enables automation and structured decision making.

- Mistral 7B is an effective model for implementing function calls in real-world applications.

- Developers can define functions using JSON schemas, allowing LLMs to generate the necessary arguments efficiently.

- AI agents can obtain real-time information, such as weather updates, improving user interactions.

- You can easily add new functions to expand the capabilities of AI agents across multiple domains.

Frequently asked questions

A. Function calling in LLMs lets the model invoke predefined functions based on user prompts, enabling structured interactions with external systems or APIs.

A. Mistral 7B excels at instruction-following tasks and can generate function arguments autonomously, making it suitable for applications requiring real-time data retrieval.

A. JSON schemas define the structure of functions used by LLMs, allowing models to understand and generate the necessary arguments for those functions based on user input.

The media shown in this article is not the property of Analytics Vidhya and is used at the author's discretion.
