Text-to-image (T2I) and text-to-video (T2V) generation have advanced significantly with recent generative models. While T2I models handle subject identity well, extending this capability to T2V remains a challenge. Existing T2V methods lack fine-grained control over the generated content, particularly when generating specific identities in human-centric scenarios. Efforts to carry T2I advances over to video generation struggle to maintain consistent identities and stable backgrounds across frames. These challenges stem from the varied reference images that influence the identity tokens, and from motion modules that struggle to ensure temporal coherence across different identity inputs.

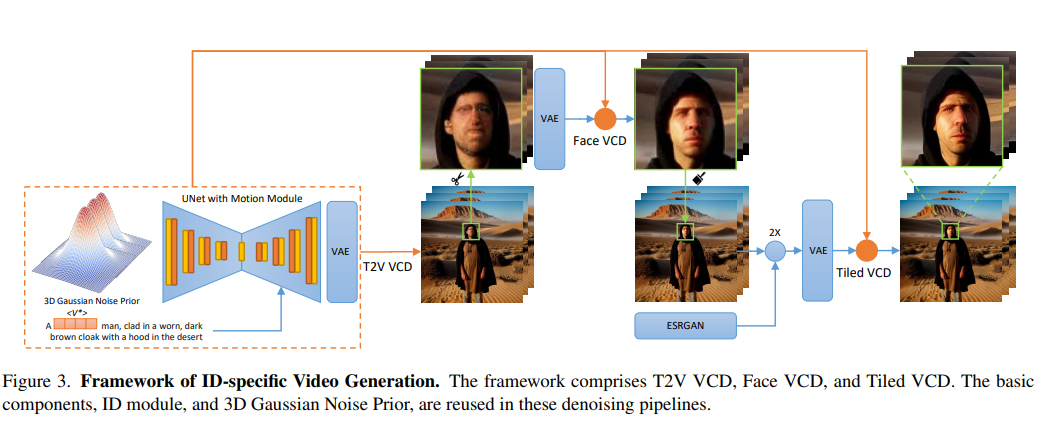

Researchers at ByteDance Inc. and UC Berkeley have developed Video Custom Diffusion (VCD), a simple yet powerful framework for generating videos whose subject identity can be controlled. VCD employs three key components: an ID module for accurate identity extraction, a 3D Gaussian Noise Prior for frame-to-frame coherence, and V2V modules to improve video quality. By disentangling identity information from background noise, VCD aligns identities precisely and produces stable videos. The framework is flexible enough to work with a variety of AI-generated content (AIGC) models. Its contributions include advances in ID-specific video generation, robust denoising, resolution enhancement, and a training approach that mitigates noise in the ID tokens.
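The idea behind a noise prior that couples frames can be illustrated with a small sketch. Instead of drawing independent noise for every frame, each frame's initial noise shares a common component, so the frames start denoising from related latents. This is an illustration under stated assumptions, not the paper's formulation: the function `correlated_frame_noise`, the single correlation coefficient `gamma` applied to every frame pair, and the flat-list representation of latents are all hypothetical; a real pipeline would operate on latent tensors.

```python
import math
import random


def correlated_frame_noise(num_frames, dim, gamma, seed=None):
    """Sample per-frame Gaussian noise whose frames are pairwise correlated.

    Hypothetical sketch (assumption): one shared correlation `gamma` for all
    frame pairs. Each frame is eps_i = sqrt(gamma) * z_shared + sqrt(1 - gamma) * z_i,
    so every element has unit variance and any two frames have correlation gamma.
    gamma = 0 recovers independent noise per frame.
    """
    if not 0.0 <= gamma <= 1.0:
        raise ValueError("gamma must lie in [0, 1]")
    rng = random.Random(seed)
    # Component shared by every frame.
    shared = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    a, b = math.sqrt(gamma), math.sqrt(1.0 - gamma)
    frames = []
    for _ in range(num_frames):
        # Component private to this frame.
        own = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        frames.append([a * s + b * o for s, o in zip(shared, own)])
    return frames


def correlation(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((u - mx) * (v - my) for u, v in zip(x, y))
    vx = sum((u - mx) ** 2 for u in x)
    vy = sum((v - my) ** 2 for v in y)
    return cov / math.sqrt(vx * vy)


if __name__ == "__main__":
    noise = correlated_frame_noise(num_frames=16, dim=4096, gamma=0.2, seed=0)
    # The empirical correlation between two frames should be close to gamma.
    print(round(correlation(noise[0], noise[1]), 2))
```

With `gamma=0` each frame gets independent noise, as a plain image model would; larger values trade per-frame diversity for stronger frame-to-frame coherence. Writing each frame as a mix of a shared and a private Gaussian keeps every element at unit variance, so the result is still a valid starting point for a standard diffusion sampler.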

In generative modeling, advances in T2I generation have given rise to customizable models capable of creating realistic portraits and imaginative compositions. Techniques such as Textual Inversion and DreamBooth adapt pre-trained models using a handful of images of a specific subject, binding that subject to a unique identifier in the prompt. This progress extends to multi-subject generation, where models learn to compose multiple subjects into a single image. Moving to T2V generation brings new challenges because frames must be coherent both spatially and temporally. While early methods used GANs and VAEs to produce low-resolution videos, recent approaches employ diffusion models for higher-quality results.

The VCD framework consists of a preprocessing module, an ID module, and a motion module, plus an optional ControlNet Tile module that upsamples videos to higher resolution. VCD reinforces an off-the-shelf motion module with a 3D Gaussian Noise Prior to mitigate exposure bias during inference. The ID module is trained with extended ID tokens using a masked loss and prompt-to-segmentation, which effectively removes background noise. The study also describes two V2V VCD pipelines: Face VCD, which enhances facial features and resolution, and Tiled VCD, which further upscales the video while preserving identity details. Together, these modules ensure high-quality, identity-preserving video generation.
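The masked loss can be sketched in a few lines. Diffusion fine-tuning normally minimizes the mean squared error between the predicted and the true noise over every pixel; a masked variant averages the error only over pixels inside a subject mask, assumed here to come from the segmentation step. The function name `masked_mse` and the flat-list inputs are hypothetical, and this is a minimal sketch rather than the authors' code.

```python
def masked_mse(pred, target, mask):
    """Mean squared error restricted to pixels where mask == 1.

    Hypothetical sketch (assumption): pred and target are flat lists of
    predicted and true noise values; mask is a flat 0/1 list marking the
    subject (foreground). Background pixels contribute nothing, so the ID
    tokens are not pushed to memorize background content.
    """
    if not (len(pred) == len(target) == len(mask)):
        raise ValueError("pred, target and mask must have the same length")
    area = sum(mask)
    if area == 0:
        # No foreground pixels: nothing to learn from this sample.
        return 0.0
    sq = sum(m * (p - t) ** 2 for p, t, m in zip(pred, target, mask))
    # Normalize by the mask area, not the full image size.
    return sq / area


if __name__ == "__main__":
    # The third pixel is background (mask 0), so its squared error of 25 is ignored.
    print(masked_mse([1.0, 2.0, 5.0], [1.0, 0.0, 0.0], [1, 1, 0]))  # prints 2.0
```

Normalizing by the mask area rather than the image size keeps the loss on a comparable scale whether the subject fills most of the frame or only a small part of it.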

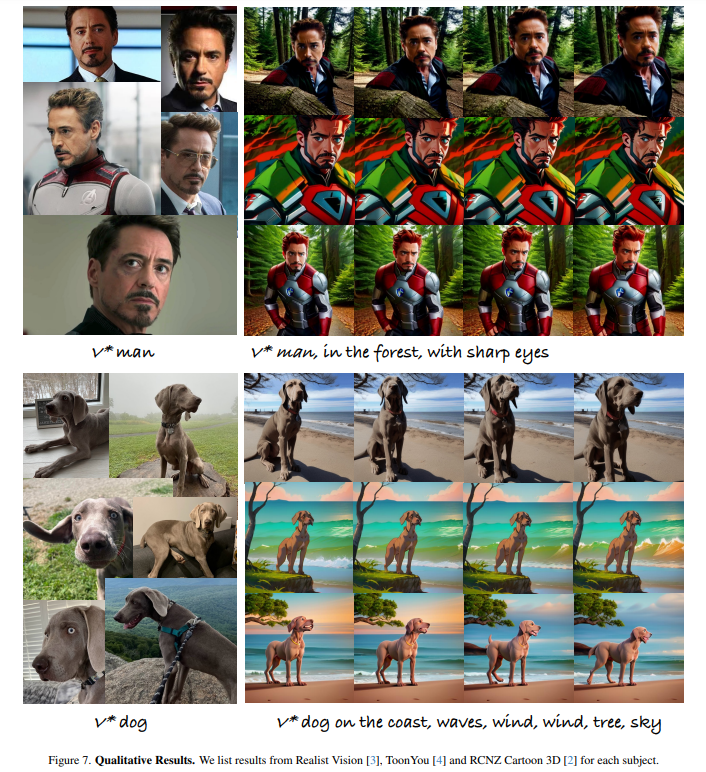

VCD preserves character identity across a variety of realistic and stylized models. The researchers carefully selected subjects from diverse datasets, drawing on DreamBooth and CustomConcept101, and evaluated the method against multiple baselines on identity alignment (measured with CLIP-I and DINO), text alignment, and temporal smoothness. The ID module was trained on Stable Diffusion 1.5, with learning rates and batch sizes adjusted accordingly. The study highlighted the critical role of the 3D Gaussian Noise Prior and the segmentation module in improving video smoothness and image alignment. Using Realistic Vision as the base model generally outperformed Stable Diffusion, underscoring the importance of model selection.
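One common way to compute identity-alignment scores such as CLIP-I and DINO is the average cosine similarity between image embeddings of generated frames and of the reference images, using a CLIP or DINO image encoder respectively; temporal smoothness is often measured as the similarity between embeddings of consecutive frames. The sketch below assumes that protocol, which may differ in detail from the one the authors used. The encoders are not included: the plain vectors stand in for their outputs, and the function names are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two embedding vectors."""
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    if nu == 0.0 or nv == 0.0:
        raise ValueError("embeddings must be non-zero")
    return sum(a * b for a, b in zip(u, v)) / (nu * nv)


def identity_alignment(frame_embs, ref_embs):
    """Mean cosine similarity over all (generated frame, reference image) pairs.

    Assumption: embeddings come from an image encoder such as CLIP (CLIP-I)
    or DINO; the encoder itself is not shown here.
    """
    scores = [cosine(f, r) for f in frame_embs for r in ref_embs]
    return sum(scores) / len(scores)


def temporal_consistency(frame_embs):
    """Mean cosine similarity between consecutive frame embeddings."""
    if len(frame_embs) < 2:
        raise ValueError("need at least two frames")
    scores = [cosine(a, b) for a, b in zip(frame_embs, frame_embs[1:])]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    frames = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
    refs = [[1.0, 0.0]]
    print(identity_alignment(frames, refs))  # mean of 1, 1, 0
    print(temporal_consistency(frames))      # mean of 1, 0
```

Both scores lie in [-1, 1] and reward different things: a video can match the reference identity in every frame yet still flicker, which is why identity alignment and temporal smoothness are reported separately.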

In conclusion, VCD advances subject-identity-controllable video generation by integrating identity information with frame correlation. Its components, including the ID module, trained with prompt-to-segmentation to disentangle identity accurately, and the T2V module, which improves frame consistency, set a new benchmark for identity preservation in video. Its compatibility with existing text-to-image models makes it practical. With the 3D Gaussian Noise Prior and the Face and Tiled VCD modules, VCD delivers stability, clarity, and higher resolution. Extensive experiments confirm its advantage over existing methods, making it a strong tool for generating stable, high-quality, identity-preserving videos.

Check out the Paper, GitHub, and Project. All credit for this research goes to the researchers of this project.


Sana Hassan, a consulting intern at Marktechpost and a dual-degree student at IIT Madras, is passionate about applying technology and AI to real-world challenges. With a strong interest in solving practical problems, she brings a fresh perspective to the intersection of AI and real-life solutions.
