Retrieval Augmented Generation systems, better known as RAG systems have become the de-facto standard to build Customized Intelligent ai Assistants answering questions on custom enterprise data without the hassles of expensive fine-tuning of Large Language Models (LLMs). One of the major challenges of naive RAG systems is getting the right retrieved context information to answer user queries. Chunking breaks down documents into smaller context pieces or chunks which can often end up losing the overall context information of the whole document. In this guide, we will discuss and build a Contextual RAG System inspired by Anthropic’s famous Contextual Retrieval approach and couple it with Hybrid Search and Reranking using a complete step-by-step hands-on example. Let’s get started!

Naive RAG System Architecture

A standard Naive Retrieval Augmented Generation (RAG) system architecture typically consists of two major steps:

- Data Processing and Indexing

- Retrieval and Response Generation

In Step 1, Data Processing and Indexing, we focus on getting our custom enterprise data into a more consumable format by loading typically the text content from these documents, splitting large text elements into smaller chunks (which are usually independent and isolated), converting them into embeddings using an embedder model and then storing these chunks and embeddings into a vector database as depicted in the following figure.

In Step 2, the workflow starts with the user asking a question, relevant text document chunks which are similar to the input question are retrieved from the vector database and then the question and the context document chunks are sent to an LLM to generate a human-like response as depicted in the following figure.

This two-step workflow is commonly used in the industry to build a standard naive RAG system, however it does come with its own set of limitations, some of which we discuss below in detail.

Naive RAG System limitations

Naive RAG systems have several limitations, some of which are mentioned as follows:

- Large documents are broken down into independent isolated chunks

- Loses contextual information and overall theme of the document in smaller independent chunks

- Retrieval performance and quality can get affected because of the above issues

- Standard semantic similarity based search is often not enough

In this article we will focus particularly on solving the limitations of naive RAG systems in terms of adding contextual information to document chunks and enhancing standard semantic search with hybrid search and reranking.

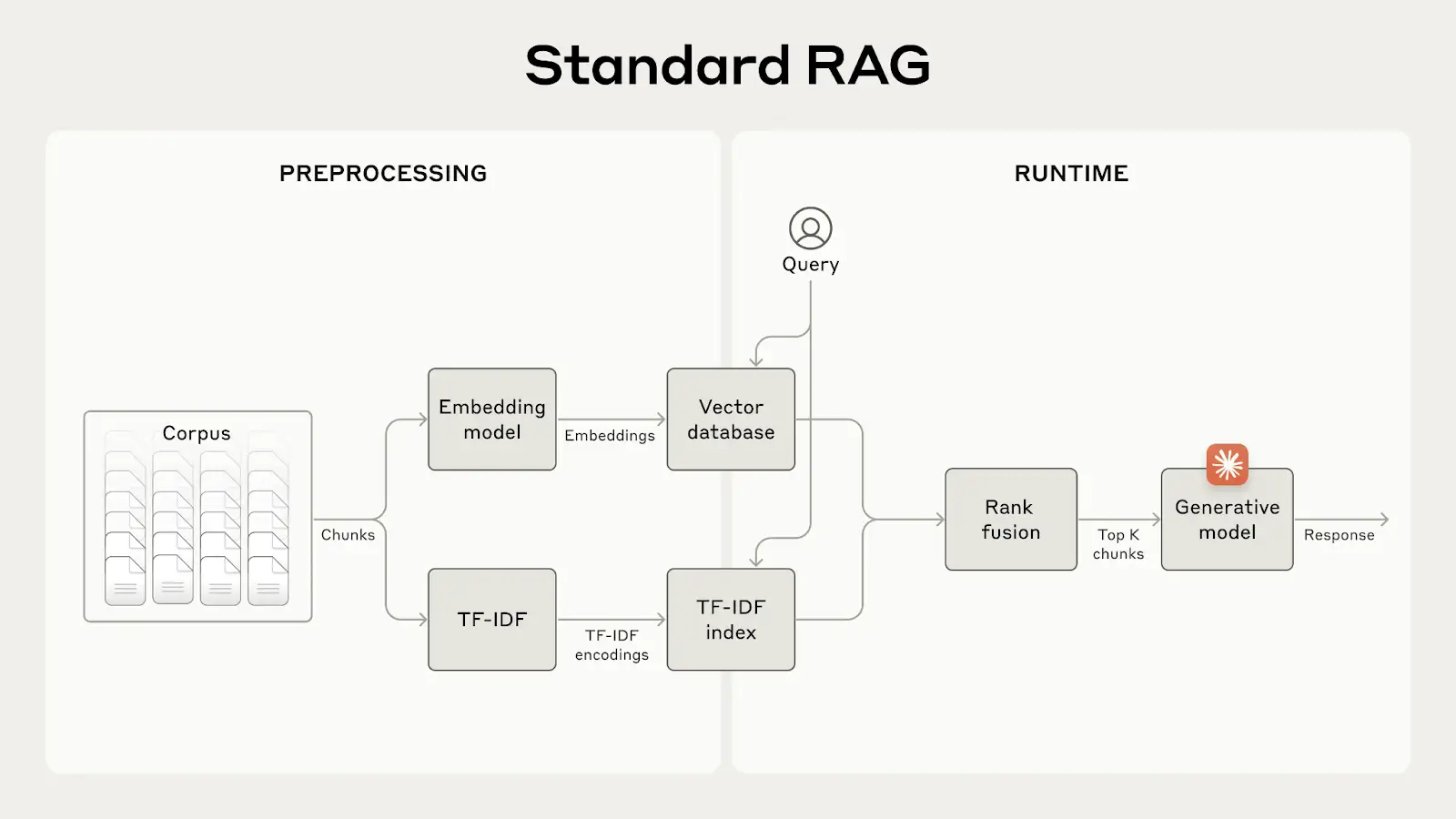

Standard Hybrid RAG Workflow

One way of improving the performance of standard naive RAG systems is to use a Hybrid RAG approach. This is basically a RAG system powered by Hybrid search, using a combination of semantic and keyword search as depicted in the following figure.

The idea as showcased in the above figure is to take your documents, chunk them using any standard chunking mechanism like recursive character text splitting and then create embeddings out of these chunks and store it in a vector database to focus on semantic search. Also we extract the words out of these chunks, count their frequencies and normalize it to get TF-IDF vectors and store it in a TF-IDF index. We could also use BM25 to represent these chunk vectors focusing more on keyword search. BM25 works by building upon the TF-IDF (Term Frequency-Inverse Document Frequency) vector space model. TF-IDF is usually a value measuring how important a word is to a document in a corpus of documents. BM25 refines this using the following mathematical representation.

Thus, BM25, considers document length and applies a saturation function to term frequency, which helps prevent common words from dominating the results.

Once the vector database and BM25 vector index is created, the hybrid RAG system operates as follows:

- User query comes in and goes into the vector database embedder model to get a query embedding and the vector DB uses embedding semantic similarity to find top-K similar document chunks

- User query also goes into the BM25 vector index, a query vector representation is created and top-K similar document chunks are retrieved using BM25 similarity

- We combine and deduplicate results from the above two retrievals using Reciprocal Rank Fusion (RRF)

- These document chunks are sent as the context along with the user query in an instruction prompt to the LLM to generate a response

While Hybrid RAG is better than Naive RAG, it still has some problems as highlighted also in the Anthropic research on Contextual RAG. The main problem is because documents are broken into independent and isolated chunks. It works in many cases but often because these chunks lack sufficient context, the quality of retrieval and responses may not be good enough. This is highlighted clearly in the example given by Anthropic in their research.

They also mention that this problem could be solved by Contextual Retrieval and they have run several experiments on the same.

Understanding Contextual Retrieval

The main focus of contextual retrieval is to improve the quality of contextual information in each document chunk. This is done by prepending chunk-specific explanatory context information in each chunk with respect to the overall document. Only then do we send these chunks for creating embeddings and TF-IDF vectors. The following is an example from Anthropic showing how a chunk might be transformed into a contextual chunk.

There have been other approaches also to improve context in the past which include, adding generic document summaries to chunks , hypothetical document embedding, and <a target="_blank" href="https://www.llamaindex.ai/blog/a-new-document-summary-index-for-llm-powered-qa-systems-9a32ece2f9ec” target=”_blank” rel=”noreferrer noopener nofollow”>summary-based indexing. Based on experiments, Anthropic found them to not perform as well as contextual retrieval. However feel free to explore, experiment and even combine approaches!

Implementing Contextual Retrieval

One ideal way to infuse context into each chunk is to have humans read through each document, understand it and then add relevant context information into each chunk. However, that can take forever especially if you have a lot of documents and thousands or even millions of document chunks! Thus, we can leverage the power of long-context LLMs like GPT-4o, Gemini 1.5 or Claude 3.5 and do this automatically with some clever prompting. The following is an example of the prompt used by Anthropic to prompt Claude 3.5 to help get context information for each chunk with respect to its overall document.

The entire document would be put in the WHOLE_DOCUMENT placeholder variable and each chunk would be put in the CHUNK_CONTENT placeholder variable. The resulting contextual text, usually 50-100 tokens (you can control the length via the prompt), is prepended to the chunk before creating the vector database and BM25 indices.

Remember that depending on your use-case, domain and requirements, you can modify the above prompt as necessary. For example, in this guide we will be adding context to chunks belonging to research papers so I used the following customized prompt to generate the context for each chunk which would then be prepended to the chunk.

You can clearly mention what should or should not be there in the context information of each chunk and also specific constraints like number of lines, words and so on.

Contextual Retrieval Pre-Processing Architecture

The following figure shows the pre-processing architectural flow for implementing contextual retrieval. Remember that you are free to choose your own document loaders and splitters as you desire depending on your experiments and use-case.

In our use-case we will be building a RAG system on a mixture of documents from different sources and formats. We have short 1-2 paragraph Wikipedia articles available as JSON documents and we have some popular ai research papers, available as PDFs.

Workflow with Pre-processing pipeline

The following workflow is followed in the pre-processing pipeline.

- We use a JSON Document loader to extract the text content from the JSON Wikipedia articles. Since they are not very large, we keep them as is and do not chunk them further.

- We use a PDF Document loader like PyMuPDF to extract the text content from each PDF file.

- Then, we use a document chunking technique, like Recursive Character Text Splitting, to chunk the PDF document text into smaller document chunks

- Next, we pass in each chunk along with the whole document to an instruction prompt template (depicted as the Context Generator Prompt in the above figure)

- This prompt is then sent to a long-context LLM like GPT-4o to generate contextual information for each chunk

- The context information for each chunk is then prepended to the chunk content

- We collect all the processed chunks which are then ready to be embedded and indexed

Remember creating context for each chunk is costly because the prompt will have the whole document information being sent every time along with the chunk and you are charged based on number of tokens especially if you are using commercial LLMs. There are a few ways you can tackle this:

- Leverage the prompt caching feature of most popular LLMs like Claude and GPT-4o which enables you to save on costs

- Don’t send the whole document but maybe the specific page where the chunk is present or a few pages near to the chunk

- Sent a summary of the document instead of the whole document

Experiment with what works best for your situation always, remember there is no one single best method for contextual preprocessing. Let’s now plug in this pipeline to the overall RAG pipeline and talk about the overall Contextual RAG architecture.

Contextual RAG with Hybrid Search and Reranking Architecture

The following figure depicts the end-to-end architecture flow for our Contextual RAG system which also implements hybrid search and reranking to improve the quality of retrieved document chunks before response generation.

Contextual Pre-processing workflow

The left side of the figure above depicts the Contextual Pre-processing workflow which we just discussed in the previous section. Here we assume that this pre-processing from the previous step has already taken place and now we have the processed document chunks (with added contextual information) ready to be indexed.

First Step

The first step here involves taking these document chunks and passing them through a relevant embedding model like OpenAI’s text-embedding-3-small embedder model and creating chunk embeddings. These are then indexed into a vector database like the Chroma Vector DB which is a lightweight, open-source vector database enabling super-fast semantic retrieval (usually using embedding cosine similarity) to retrieve similar document chunks to user queries.

Second Step

The next step is to take the same document chunks and create sparse keyword frequency vectors (TF-IDF) and index them into a BM25 index which will use BM25 similarity as we described earlier to retrieve similar document chunks to user queries.

Now based on a user query coming into the system, as depicted in the above figure on the right, we first retrieve similar document chunks from the Vector DB and BM25 index. Then, we use an ensemble retriever to enable hybrid search where we take the documents retrieved from both semantic and keyword search from the Vector DB and BM25 index and take unique document chunks (deduplication) and then use Reciprocal Rank Fusion (RRF) to rerank the documents further to try and rank more relevant document chunks higher.

Third Step

Next, we pass in the query and document chunks into a reranker to focus on relevancy-based ranking rather than just similarity-based ranking. The reranker we use in our implementation is the popular BGE Reranker from BAAI which is hosted on Hugging Face and is open-source. Do note that you need a GPU to run this faster (or you can use API-based rerankers also which are usually commercial and have a cost). In this step, the context document chunks are reranked based on their relevancy to the input query.

Final Step

Finally, we send the user query and the reranked context document chunks to an instruction prompt template which instructs the LLM to use the context information only to answer the user query. This is then sent to the LLM (in our case we use GPT-4o) for response generation.

Finally, we get the relevant contextual response to the user query from the LLM and that completes the overall flow. Let’s implement this end-to-end workflow now in the next section!

Hands-on Implementation of our Contextual RAG System

We will now implement the end-to-end workflow for our Contextual RAG system based on the architecture we discussed in detail in the previous section step-by-step with detailed explanations, code and outputs.

Install Dependencies

We start by installing the necessary dependencies which are going to be the libraries we will be using to build our system. This includes langchain, pymupdf, jq, as well as necessary dependencies like openai, chroma and bm25.

!pip install langchain==0.3.4

!pip install langchain-openai==0.2.3

!pip install langchain-community==0.3.3

!pip install jq==1.8.0

!pip install pymupdf==1.24.12

!pip install httpx==0.27.2

# install vectordb and bm25 utils

!pip install langchain-chroma==0.1.4

!pip install rank_bm25==0.2.2<h3 class="wp-block-heading" id="h-enter-open-ai-api-key”>Enter Open ai API Key

We enter our Open ai key using the getpass() function so we don’t accidentally expose our key in the code.

from getpass import getpass

OPENAI_KEY = getpass('Enter Open ai API Key: ')Setup Environment Variables

Next, we setup some system environment variables which will be used later when authenticating our LLM.

import os

os.environ('OPENAI_API_KEY') = OPENAI_KEYGet the Dataset

We downloaded our dataset which consists of some Wikipedia articles in JSON format and a few research paper PDFs from our Google Drive as follows

!gdown 1aZxZejfteVuofISodUrY2CDoyuPLYDGZOutput:

Downloading...

From: https://drive.google.com/uc?id=1aZxZejfteVuofISodUrY2CDoyuPLYDGZ

To: /content/rag_docs.zip

100% 5.92M/5.92M (00:00<00:00, 134MB/s)

Then we unzip and extract the documents from the zipped file.

!unzip rag_docs.zipOutput:

Archive: rag_docs.zip

creating: rag_docs/

inflating: rag_docs/attention_paper.pdf

inflating: rag_docs/cnn_paper.pdf

inflating: rag_docs/resnet_paper.pdf

inflating: rag_docs/vision_transformer.pdf

inflating: rag_docs/wikidata_rag_demo.jsonl

We will now preprocess the documents based on their types.

Load and Process JSON Wikipedia Documents

We will now load up the Wikipedia documents from the JSON file and process them.

from langchain.document_loaders import JSONLoader

loader = JSONLoader(file_path="./rag_docs/wikidata_rag_demo.jsonl",

jq_schema=".",

text_content=False,

json_lines=True)

wiki_docs = loader.load()

wiki_docs(3)Output:

Document(metadata={'source': '/content/rag_docs/wikidata_rag_demo.jsonl',

'seq_num': 4}, page_content="{"id": "71548", "title": "Chi-square

distribution", "paragraphs": ("In probability theory and statistics, the

chi-square distribution (also chi-squared or formula_1\\u00a0 distribution)

is one of the most widely used theoretical probability distributions. Chi-

square distribution with formula_2 degrees of freedom is written as

formula_3. ... Another one is that the different random variables (or

observations) must be independent of each other.")}")

We now convert these into LangChain Documents as it becomes easier to process and index them later on and even add additional metadata fields if necessary.

import json

from langchain.docstore.document import Document

wiki_docs_processed = ()

for doc in wiki_docs:

doc = json.loads(doc.page_content)

metadata = {

"title": doc('title'),

"id": doc('id'),

"source": "Wikipedia",

"page": 1

}

data=" ".join(doc('paragraphs'))

wiki_docs_processed.append(Document(page_content=data, metadata=metadata))

wiki_docs_processed(3)Output

Document(metadata={'title': 'Chi-square distribution', 'id': '71548',

'source': 'Wikipedia', 'page': 1}, page_content="In probability theory and

statistics, the chi-square distribution (also chi-squared or formula_1\xa0

distribution) is one of the most widely used theoretical probability

distributions. Chi-square distribution with formula_2 degrees of freedom is

written as formula_3. ... Another one is that the different random variables

(or observations) must be independent of each other.")

Load and Process PDF Research Papers with Contextual Information

We will now load up the research paper PDFs, process them and also add in contextual information to each chunk to enable contextual retrieval as we discussed earlier. We start by creating a LangChain chain to generate context information for chunks as follows.

# create chunk context generation chain

from langchain.prompts import ChatPromptTemplate

from langchain.schema import StrOutputParser

from langchain_openai import ChatOpenAI

chatgpt = ChatOpenAI(model_name="gpt-4o-mini", temperature=0)

def generate_chunk_context(document, chunk):

chunk_process_prompt = """You are an ai assistant specializing in research

paper analysis. Your task is to provide brief,

relevant context for a chunk of text based on the

following research paper.

Here is the research paper:

{paper}

Here is the chunk we want to situate within the whole

document:

{chunk}

Provide a concise context (3-4 sentences max) for this

chunk, considering the following guidelines:

- Give a short succinct context to situate this chunk

within the overall document for the purposes of

improving search retrieval of the chunk.

- Answer only with the succinct context and nothing

else.

- Context should be mentioned like 'Focuses on ....'

do not mention 'this chunk or section focuses on...'

Context:

"""

prompt_template = ChatPromptTemplate.from_template(chunk_process_prompt)

agentic_chunk_chain = (prompt_template

|

chatgpt

|

StrOutputParser())

context = agentic_chunk_chain.invoke({'paper': document, 'chunk': chunk})

return contextWe use this to generate context information for chunks of our research papers using LangChain.

Here’s a brief explanation:

- ChatGPT Model: Initializes ChatOpenAI with 0 temperature for consistent outputs and uses the GPT-4o-mini LLM.

- generate_chunk_context Function:

- Inputs: document (full paper) and chunk (specific section).

- Constructs a prompt to instruct the ai to summarize the chunk’s context in relation to the document.

- Prompt: Guides the LLM to create a short (3-4 sentences) context focused on improving search retrieval, and avoiding repetitive phrasing.

- Chain Setup: Combines the prompt, chatgpt model, and StrOutputParser() for structured processing.

- Execution: Generates and returns a succinct context for the chunk.

Next, we define a preprocessing function to load each PDF document, chunk it using recursive character text splitting, generate context for each chunk using the above pipeline and add the context to the beginning (prepend) of each chunk.

from langchain.document_loaders import PyMuPDFLoader

from langchain.text_splitter import RecursiveCharacterTextSplitter

import uuid

def create_contextual_chunks(file_path, chunk_size=3500, chunk_overlap=0):

print('Loading pages:', file_path)

loader = PyMuPDFLoader(file_path)

doc_pages = loader.load()

print('Chunking pages:', file_path)

splitter = RecursiveCharacterTextSplitter(chunk_size=chunk_size,

chunk_overlap=chunk_overlap)

doc_chunks = splitter.split_documents(doc_pages)

print('Generating contextual chunks:', file_path)

original_doc="\n".join((doc.page_content for doc in doc_chunks))

contextual_chunks = ()

for chunk in doc_chunks:

chunk_content = chunk.page_content

chunk_metadata = chunk.metadata

chunk_metadata_upd = {

'id': str(uuid.uuid4()),

'page': chunk_metadata('page'),

'source': chunk_metadata('source'),

'title': chunk_metadata('source').split("https://www.analyticsvidhya.com/")(-1)

}

context = generate_chunk_context(original_doc, chunk_content)

contextual_chunks.append(Document(page_content=context+'\n'+chunk_content,

metadata=chunk_metadata_upd))

print('Finished processing:', file_path)

print()

return contextual_chunksThe above function processes PDF research papers into contextualized chunks for better analysis and retrieval. Here’s a brief explanation:

- Imports:

- Uses PyMuPDFLoader for PDF loading and RecursiveCharacterTextSplitter for chunking text.

- uuid generates unique IDs for each chunk.

- create_contextual_chunks Function:

- Inputs: File path, chunk size, and overlap size.

- Process:

- Loads the document pages using PyMuPDFLoader.

- Splits the document into smaller chunks using the RecursiveCharacterTextSplitter.

- For each chunk:

- Metadata is updated with a unique ID, page number, source, and title.

- Generates contextual information for the chunk using generate_chunk_context which we defined earlier.

- Prepends the context to the original chunk and then appends it to a list as a Document object.

- Output: Returns a list of processed chunks with contextual metadata and content.

This function loads our research paper PDFs, chunks them and adds in a meaningful context to each chunk. Now we execute this function on our PDFs as follows.

from glob import glob

pdf_files = glob('./rag_docs/*.pdf')

paper_docs = ()

for fp in pdf_files:

paper_docs.extend(create_contextual_chunks(file_path=fp,

chunk_size=3500))Output:

Loading pages: ./rag_docs/attention_paper.pdf

Chunking pages: ./rag_docs/attention_paper.pdf

Generating contextual chunks: ./rag_docs/attention_paper.pdf

Finished processing: ./rag_docs/attention_paper.pdfLoading pages: ./rag_docs/resnet_paper.pdf

Chunking pages: ./rag_docs/resnet_paper.pdf

Generating contextual chunks: ./rag_docs/resnet_paper.pdf

Finished processing: ./rag_docs/resnet_paper.pdf

...

paper_docs(0)Output:

Document(metadata={'id': 'd5c90113-2421-42c0-bf09-813faaf75ac7', 'page': 0,

'source': './rag_docs/resnet_paper.pdf', 'title': 'resnet_paper.pdf'},

page_content="Focuses on the introduction of a residual learning framework

designed to facilitate the training of significantly deeper neural networks,

addressing challenges such as vanishing gradients and degradation of

accuracy. It highlights the empirical success of residual networks,

particularly their performance on the ImageNet dataset and their

foundational role in winning multiple competitions in 2015.\nDeep Residual

Learning for Image Recognition\nKaiming He\nXiangyu Zhang\nShaoqing

Ren\nJian Sun\nMicrosoft Research\n{kahe, v-xiangz, v-shren,

jiansun}@microsoft.com\nAbstract\nDeeper neural networks are more difficult

to train. We\npresent a residual learning framework to ease the training\nof

networks that are substantially deeper than those used\npreviously...")

You can see in the above chunk that we have some LLM generated contextual information followed by the actual chunk content. Finally, we combine all our document chunks from our JSON and PDF documents into one single list.

total_docs = wiki_docs_processed + paper_docs

len(total_docs)Output:

1880

Create Vector Database Index and Setup Semantic Retrieval

We will now create embeddings for our document chunks and index them into our vector database using the following code:

from langchain_openai import OpenAIEmbeddings

from langchain_chroma import Chroma

openai_embed_model = OpenAIEmbeddings(model="text-embedding-3-small")

# create vector DB of docs and embeddings - takes < 30s on Colab

chroma_db = Chroma.from_documents(documents=total_docs,

collection_name="my_context_db",

embedding=openai_embed_model,

collection_metadata={"hnsw:space": "cosine"},

persist_directory="./my_context_db")We then setup a semantic retrieval strategy which uses cosine embedding similarity and retrieves the top 5 document chunks similar to user queries.

similarity_retriever = chroma_db.as_retriever(search_type="similarity",

search_kwargs={"k": 5})Create BM25 Index and Setup Keyword Retrieval

We will now create TF-IDF vectors for our document chunks and index them into our BM25 index and setup a retriever to use BM25 to return the top 5 document chunks similar to user queries using the following code.

from langchain.retrievers import BM25Retriever

bm25_retriever = BM25Retriever.from_documents(documents=total_docs,

k=5)Enable Hybrid Search with Ensemble Retrieval

We will now enable hybrid search to be executed during retrieval by using an ensemble retriever which combines the results from the semantic and keyword retrieval and uses Reciprocal Rank Fusion (RRF) as we have discussed earlier. We can give specific weights to each retriever also, and in this case we give equal weightage to each retriever.

from langchain.retrievers import EnsembleRetriever

# reciprocal rank fusion

ensemble_retriever = EnsembleRetriever(

retrievers=(bm25_retriever, similarity_retriever),

weights=(0.5, 0.5)

)Improving Retriever with Reranker

We will now plug in our reranker model we discussed earlier to rerank the context document chunks from the ensemble retriever based on their relevancy to the input query. We use an open-source cross-encoder reranker model here.

from langchain_community.cross_encoders import HuggingFaceCrossEncoder

from langchain.retrievers.document_compressors import CrossEncoderReranker

from langchain.retrievers import ContextualCompressionRetriever

# download an open-source reranker model - BAAI/bge-reranker-v2-m3

reranker = HuggingFaceCrossEncoder(model_name="BAAI/bge-reranker-v2-m3")

reranker_compressor = CrossEncoderReranker(model=reranker, top_n=5)

# Retriever 2 - Uses a Reranker model to rerank retrieval results from the previous retriever

final_retriever = ContextualCompressionRetriever(

base_compressor=reranker_compressor,

base_retriever=ensemble_retriever

)Testing our Retrieval Pipeline

We will now test our retrieval pipeline leveraging hybrid search and reranking to see how it works on some sample user queries.

from IPython.display import display, Markdown

def display_docs(docs):

for doc in docs:

print('Metadata:', doc.metadata)

print('Content Brief:')

display(Markdown(doc.page_content(:1000)))

print()

query = "what is machine learning?"

top_docs = final_retriever.invoke(query)

display_docs(top_docs)Output:

Metadata: {'id': '564928', 'page': 1, 'source': 'Wikipedia', 'title':

'Machine learning'}Content Brief:

Machine learning gives computers the ability to learn without being

explicitly programmed (Arthur Samuel, 1959). It is a subfield of computer

science. The idea came from work in artificial intelligence. Machine

learning explores the study and construction of algorithms ...

Metadata: {'id': '663523', 'page': 1, 'source': 'Wikipedia', 'title': 'Deep

learning'}

Content Brief:

Deep learning (also called deep structured learning or hierarchical learning)

is a kind of machine learning, which is mostly used with certain kinds of

neural networks...

...

query = "what is the difference between transformers and vision transformers?"

top_docs = final_retriever.invoke(query)

display_docs(top_docs)Output:

Metadata: {'id': '07117bc3-34c7-4883-aa9b-6f9888fc4441', 'page': 0, 'source':

'./rag_docs/vision_transformer.pdf', 'title': 'vision_transformer.pdf'}Content Brief:

Focuses on the introduction of the Vision Transformer (ViT) model, which

applies a pure Transformer architecture to image classification tasks by

treating image patches as tokens...

Metadata: {'id': 'b896c93d-6330-421c-a236-af9437e9c725', 'page': 1, 'source':

'./rag_docs/vision_transformer.pdf', 'title': 'vision_transformer.pdf'}

Content Brief:

Focuses on the performance of the Vision Transformer (ViT) in comparison to

convolutional neural networks (CNNs), highlighting the advantages of large-

scale training on datasets like ImageNet-21k and JFT-300M. It discusses how

ViT achieves state-of-the-art results in image recognition benchmarks despite

lacking certain inductive biases inherent to CNNs. Additionally, it

references related work on self-attention mechanisms...

...

Overall, it seems to be working pretty well and getting the right context chunks with added contextual information. Let’s build our RAG pipeline now.

Building our Contextual RAG Pipeline

We will now put all the components together and build our end-to-end Contextual RAG pipeline. We start by constructing a standard RAG instruction prompt template.

from langchain_core.prompts import ChatPromptTemplate

rag_prompt = """You are an assistant who is an expert in question-answering tasks.

Answer the following question using only the following pieces of

retrieved context.

If the answer is not in the context, do not make up answers, just

say that you don't know.

Keep the answer detailed and well formatted based on the

information from the context.

Question:

{question}

Context:

{context}

Answer:

"""

rag_prompt_template = ChatPromptTemplate.from_template(rag_prompt)The prompt template takes in retrieved context document chunks and instructs the LLM to use it to answer user queries. Finally, we create our RAG pipeline using LangChain’s LCEL declarative syntax which clearly showcases the flow of information in the pipeline step-by-step.

from langchain_core.runnables import RunnablePassthrough

from langchain_openai import ChatOpenAI

chatgpt = ChatOpenAI(model_name="gpt-4o-mini", temperature=0)

def format_docs(docs):

return "\n\n".join(doc.page_content for doc in docs)

qa_rag_chain = (

{

"context": (final_retriever

|

format_docs),

"question": RunnablePassthrough()

}

|

rag_prompt_template

|

chatgpt

)The chain is our Retrieval-Augmented Generation (RAG) pipeline that processes retrieved document chunks to answer user queries using LangChain. Here are they key components:

- Input Handling:

- “context”:

- Starts with our final_retriever (retrieves relevant documents using hybrid search + reranking).

- Passes the retrieved documents to the format_docs function, which formats the document content into a structured string.

- “question”:

- Uses RunnablePassthrough() to directly pass the user’s query without any modifications.

- “context”:

- Prompt Template:

- Combines the formatted context and the user question into the rag_prompt_template.

- This instructs the model to answer based only on the provided context.

- Model Execution:

- Passes the populated prompt to the chatgpt model (gpt-4o-mini) for response generation with 0 temperature for deterministic answers.

This chain ensures the LLM answers questions using only the relevant retrieved information, providing context-driven responses without hallucinations. The only thing left now is to test out our RAG System!

Testing our Contextual RAG System

Let’s now test our Contextual RAG System on some sample queries as depicted in the examples below.

from IPython.display import display, Markdown

query = "What is machine learning?"

result = qa_rag_chain.invoke(query)

display(Markdown(result.content))Output

Machine learning is a subfield of computer science that provides computers

the ability to learn without being explicitly programmed. The concept was

introduced by Arthur Samuel in 1959 and is rooted in artificial

intelligence. Machine learning focuses on the study and construction of

algorithms that can learn from data and make predictions or decisions based

on that data. These algorithms follow programmed instructions but can also

adapt and improve their performance by building models from sample inputs.Machine learning is particularly useful in scenarios where designing and

programming explicit algorithms is impractical. Some common applications of

machine learning include:1. Spam filtering

2. Detection of network intruders or malicious insiders

3. Optical character recognition (OCR)

4. Search engines

5. Computer visionWithin the realm of machine learning, there is a subset known as deep

learning, which primarily utilizes certain types of neural networks. Deep

learning involves learning sessions that can be unsupervised, semi-

supervised, or supervised, and it often includes multiple layers of

processing, allowing the model to learn increasingly abstract

representations of the data.Overall, machine learning represents a significant advancement in the ability

of computers to process information and make informed decisions based on

that information.

query = "How is a resnet better than a CNN?"

result = qa_rag_chain.invoke(query)

display(Markdown(result.content))Output

A ResNet (Residual Network) is considered better than a traditional CNN

(Convolutional Neural Network) for several reasons, particularly in the

context of training deeper architectures and achieving better performance in

various tasks. Here are the key advantages of ResNets over standard CNNs:1. Degradation Problem Mitigation: Traditional CNNs often face the

degradation problem, where increasing the depth of the network leads to

higher training error. ResNets address this issue by introducing shortcut

connections that allow gradients to flow more easily during backpropagation.

This makes it easier to optimize deeper networks, as the residual learning

framework allows the model to learn residual mappings instead of the

original unreferenced mappings.2. Higher Accuracy with Increased Depth: ResNets can be significantly deeper

than traditional CNNs without suffering from performance degradation. For

instance, ResNet architectures with 50, 101, or even 152 layers have been

shown to achieve better accuracy compared to shallower networks. The

empirical results demonstrate that deeper ResNets can produce substantially

better results on datasets like ImageNet and CIFAR-10.3. Generalization Performance: ResNets exhibit good generalization

performance across various recognition tasks. The context mentions that

replacing VGG-16 with ResNet-101 in the Faster R-CNN framework led to a

notable increase in detection metrics on challenging datasets like COCO,

indicating that ResNets can generalize better to unseen data.4. Architectural Efficiency: Despite being deeper, ResNets maintain lower

computational complexity compared to traditional architectures like VGG-16.

For example, a 152-layer ResNet has lower complexity (11.3 billion FLOPs)

than VGG-16 (15.3 billion FLOPs), allowing for more efficient training and

inference.5. Empirical Success in Competitions: ResNets have achieved top rankings in

various competitions, such as ILSVRC and COCO 2015, demonstrating their

effectiveness in real-world applications. The context highlights that models

based on deep residual networks won first places in several tracks,

showcasing their superior performance.In summary, ResNets improve upon traditional CNNs by effectively addressing

the degradation problem, enabling deeper architectures to be trained

successfully, achieving higher accuracy, and demonstrating strong

generalization capabilities across different tasks.

query = "How does a resnet work?"

result = qa_rag_chain.invoke(query)

display(Markdown(result.content))Output

A ResNet, or Residual Network, operates on the principle of residual learning

to address the challenges associated with training deep neural networks.

Here’s a detailed explanation of how it works:Key Concepts of ResNet

1. Residual Mapping:

Instead of learning the desired underlying mapping ( H(x) ) directly, ResNets

focus on learning a residual mapping ( F(x) = H(x) - x ). This means that

the network learns the difference between the desired output and the input,

which is often easier to optimize.2. Shortcut Connections:

ResNets utilize shortcut connections that skip one or more layers. These

connections perform identity mapping, allowing the input ( x ) to be added

directly to the output of the stacked layers. This can be mathematically

represented as: ( H(x) = F(x) + x )The addition of the input ( x ) helps in mitigating the vanishing gradient

problem, making it easier for the network to learn.3. Optimization Benefits:

The formulation of ( F(x) + x ) allows the network to push the residual (

F(x) ) towards zero if the identity mapping is optimal. This is generally

easier than fitting a complex mapping directly, especially as the depth of

the network increases.Architecture

1. ResNets can be constructed with various depths, such as 18, 34, 50, 101,

and even 152 layers. The architecture includes:Convolutional Layers: These layers extract features from the input images.

Batch Normalization: Applied after each convolution to stabilize and

accelerate training.Pooling Layers: Used for down-sampling the feature maps.

Fully Connected Layers: At the end of the network for classification tasks.

Performance

1. ResNets have shown significant improvements in accuracy as the depth

increases, unlike traditional plain networks, which suffer from higher

training errors with increased depth. For instance, a 34-layer ResNet

outperforms an 18-layer ResNet, demonstrating that deeper networks can be

effectively trained without degradation in performance.Empirical Results

1. Extensive experiments on datasets like ImageNet and CIFAR-10 have

validated the effectiveness of ResNets. They have achieved state-of-the-art

results, including winning the ILSVRC 2015 competition with a 152-layer

ResNet, which had lower complexity than previous models like VGG-16/19.In summary, ResNets leverage residual learning and shortcut connections to

facilitate the training of very deep networks, overcoming the optimization

difficulties that typically arise with in creased depth. This architecture

has proven to be highly effective in various image recognition tasks.

query = "What is the difference between ai, ML and DL?"

result = qa_rag_chain.invoke(query)

display(Markdown(result.content))Here’s the Output

The difference between ai, ML, and DL can be summarized as follows:1. Definition: ai refers to the ability of a computer program or machine to

think and learn, mimicking human cognition. It encompasses a broad range of

technologies and applications aimed at making machines "smart."2. Origin: The term "artificial intelligence" was coined by John McCarthy in

1955.3. Functionality: ai systems can interpret external data, learn from it, and

adapt to achieve specific goals. As technology advances, tasks once

considered to require intelligence, like optical character recognition, are

no longer classified as ai.Machine Learning (ML)

1. Definition: ML is a subfield of ai that focuses on the development of

algorithms that allow computers to learn from and make predictions based on

data without being explicitly programmed.2. Functionality: ML algorithms build models from sample inputs and can make

decisions or predictions based on data. It is particularly useful in

scenarios where traditional programming is impractical, such as spam

filtering and computer vision.Deep Learning (DL)

1. Definition: DL is a specialized subset of machine learning that primarily

uses neural networks with multiple layers (multi-layer neural networks) to

process data.2. Functionality: In deep learning, the information processed becomes

increasingly abstract with each added layer, making it particularly

effective for complex tasks like speech and image recognition. DL models are

inspired by the biological nervous system but differ significantly from the

structural and functional properties of human brains.In summary, ai is the overarching field that includes both ML and DL, with ML

being a specific approach within ai that enables learning from data, and DL

being a further specialization of ML that utilizes deep neural networks for

more complex data processing tasks.

query = "What is the difference between transformers and vision transformers?"

result = qa_rag_chain.invoke(query)

display(Markdown(result.content))Output

The primary difference between traditional Transformers and Vision

Transformers (ViT) lies in their application and input processing methods.1. Input Representation:

Transformers: In natural language processing (NLP), Transformers operate on

sequences of tokens (words) that are typically represented as embeddings.

The input is a 1D sequence of these token embeddings.Vision Transformers (ViT): ViT adapts the Transformer architecture for image

classification tasks by treating image patches as tokens. An image is

divided into fixed-size patches, which are then flattened and linearly

embedded into a sequence. This sequence of patch embeddings is fed into the

Transformer, similar to how word embeddings are processed in NLP.2. Architecture:

Transformers: The standard Transformer architecture consists of layers of

multi-headed self-attention and feed-forward neural networks, designed to

capture relationships and dependencies in sequential data.Vision Transformers (ViT): While ViT retains the core Transformer

architecture, it modifies the input to accommodate 2D image data. The model

includes additional components such as position embeddings to retain spatial

information about the patches, which is crucial for understanding the

structure of images.3. Performance and Efficiency:

Transformers: In NLP, Transformers have become the standard due to their

ability to scale and perform well on large datasets, often requiring

significant computational resources.Vision Transformers (ViT): ViT has shown that a pure Transformer can achieve

competitive results in image classification, often outperforming traditional

convolutional neural networks (CNNs) in terms of efficiency and scalability

when pre-trained on large datasets. ViT requires substantially fewer

computational resources to train compared to state-of-the-art CNNs, making

it a promising alternative for image recognition tasks.In summary, while both architectures utilize the Transformer framework,

Vision Transformers adapt the input and processing methods to effectively

handle image data, demonstrating significant advantages in performance and

resource efficiency in the realm of computer vision.

Overall you can see our Contextual RAG System does a pretty good job of generating high-quality responses for user queries.

Why Care about Contextual RAG?

We have implemented an end-to-end working prototype of a Contextual RAG System with Hybrid Search and Reranking. But why should you care about building such a system? Is it really worth the effort? While you should always test and benchmark the system on your own data, here are the results from Anthropic when they ran some benchmarks and found that Reranked Contextual Embedding and Contextual BM25 reduced the top-20-chunk retrieval failure rate by 67% (5.7% → 1.9%). This is depicted in the following figure.

It is quite evident that Hybrid Search and Rerankers are worth investing time into regardless of regular or contextual retrieval and if you have the time and effort, you should also definitely invest time into contextual retrieval!

Conclusion

If you are reading this, I commend your efforts in staying right till the end in this massive guide! Here, we went through an in-depth understanding of the current challenges in Naive RAG systems especially with regard to chunking and retrieval. We then discussed in detail what is hybrid search, reranking, contextual retrieval, the inspiration from Anthropic’s recent work and designed our own architecture to handle contextual generation, vector search, keyword search, hybrid search, ensemble retrieval, reranking and tie them together into building our own Contextual RAG System with in-build Hybrid Search and Reranking! Do check out this Colab notebook for easy access to the code and try customizing and improving this system even further!

Frequently Asked Questions

Ans. RAG systems combine information retrieval with language models to generate responses based on relevant context, often from custom datasets.

Ans. Naive RAG systems often break documents into independent chunks, losing context and affecting retrieval accuracy and response quality.

Ans. Hybrid search combines semantic (embedding-based) and keyword (BM25/TF-IDF) searches to improve retrieval accuracy and context relevance.

Ans. Contextual retrieval enriches document chunks with added explanatory context, enhancing relevance and coherence in search results.

Ans. Reranking prioritizes retrieved document chunks based on relevancy, improving the quality of responses generated by the language model.

NEWSLETTER

NEWSLETTER