SQL is one of the key languages widely used across businesses, and it requires an understanding of databases and table metadata. This can be overwhelming for nontechnical users who lack proficiency in SQL. Today, generative AI can help bridge this knowledge gap by letting nontechnical users generate SQL queries through a text-to-SQL application. Such an application lets users ask questions in natural language and then generates a SQL query for the request.

Large language models (LLMs) are trained to generate accurate SQL queries from natural language instructions. However, off-the-shelf LLMs can't be used without some modification. First, LLMs don't have access to enterprise databases, so the models need to be customized to understand a company's specific database. In addition, complexity increases because columns and internal metrics often have synonyms.

The limitation of LLMs in understanding enterprise datasets and human context can be addressed using Retrieval Augmented Generation (RAG). In this post, we explore using Amazon Bedrock to create a text-to-SQL application using RAG. We use Anthropic's Claude 3.5 Sonnet model to generate SQL queries, Amazon Titan in Amazon Bedrock for text embedding, and Amazon Bedrock to access these models.

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies such as AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon through a single API, along with a broad set of capabilities that you need to build generative AI applications with security, privacy, and responsible AI.

Solution overview

This solution is mainly based on the following services:

- Foundation model – We use Anthropic's Claude 3.5 Sonnet on Amazon Bedrock as our LLM to generate SQL queries for user inputs.

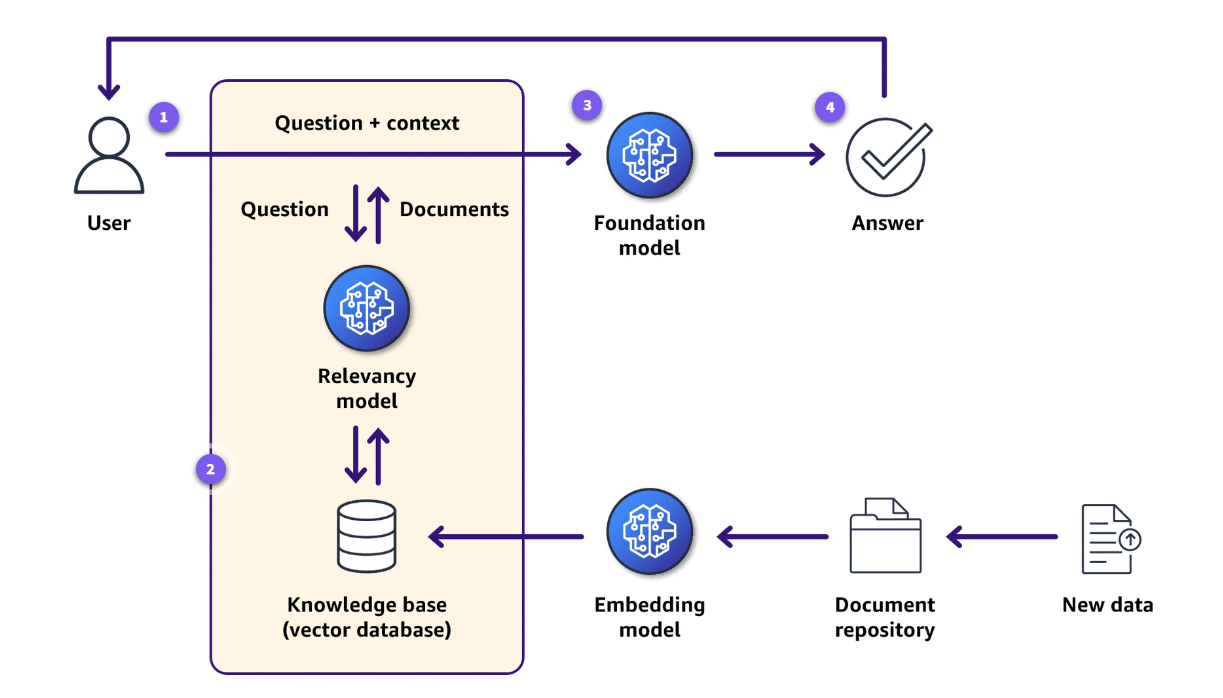

- Vector embeddings – We use Amazon Titan Text Embeddings V2 on Amazon Bedrock for embeddings. Embedding is the process by which text, images, and audio are given a numerical representation in a vector space. Embedding is usually performed by a machine learning (ML) model. The following diagram provides more details about embeddings.

- RAG – We use RAG to provide more context about the table schema, column synonyms, and sample queries to the FM. RAG is a framework for building generative AI applications that can use enterprise data sources and vector databases to overcome knowledge limitations. RAG works by using a retriever module to find relevant information from an external data store in response to a user's prompt. This retrieved data is used as context, combined with the original prompt, to create an expanded prompt that is passed to the LLM. The language model then generates a SQL query that incorporates the enterprise knowledge. The following diagram illustrates the RAG framework.

- Streamlit – This open source Python library makes it easy to create and share beautiful, custom web apps for ML and data science. In just a few minutes, you can build powerful data apps using only Python.

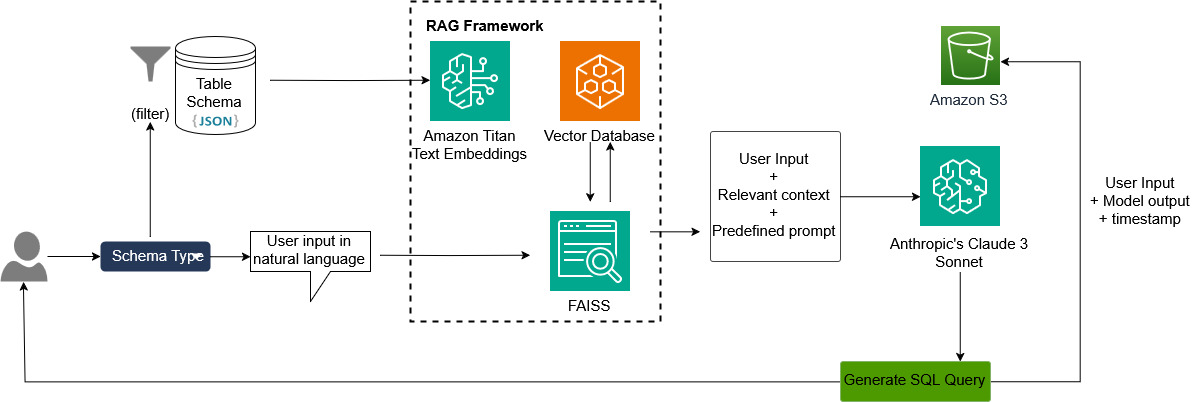
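To make the embedding and retrieval ideas above concrete, here is a minimal sketch of similarity-based retrieval. It is not how Amazon Titan Text Embeddings V2 or FAISS work internally: `toy_embed` is a hypothetical stand-in that uses word counts as the vector, and the table descriptions are shortened from the sample schema used later in this post.

```python
import math
import re
from collections import Counter


def toy_embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9_]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse vectors (0.0 if either is empty)."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k documents whose vectors are most similar to the question."""
    q = toy_embed(question)
    ranked = sorted(documents, key=lambda d: cosine_similarity(q, toy_embed(d)), reverse=True)
    return ranked[:k]


table_docs = [
    "schema_a.orders: orders placed by customers, order status, order date",
    "schema_a.customers: customer name, registration date, country",
    "schema_a.items: items listed in the catalog, item name, listing date",
]
top = retrieve("count of cancelled orders by order status", table_docs)
print(top)
```

A real embedding model captures meaning rather than exact word overlap, which is what lets synonyms such as "sign up time" and "registration_date" land close together.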

The following diagram shows the solution architecture.

We need to update the LLMs with an enterprise-specific database. This makes sure that the model can correctly understand the database and generate a response tailored to the enterprise's data schema and tables. There are multiple file formats available for storing this information, such as JSON, PDF, TXT, and YAML. In our case, we created JSON files to store the table schema, table descriptions, columns with synonyms, and sample queries. JSON's inherently structured format allows a clear and organized representation of complex data such as table schemas, column definitions, synonyms, and sample queries. This structure facilitates quick parsing and manipulation of data in most programming languages, reducing the need for custom parsing logic.

There might be multiple tables with similar information, which can lower the model's accuracy. To increase accuracy, we categorized the tables into four different types based on the schema and created four JSON files to store different tables. We added a dropdown menu with four choices. Each choice represents one of these four categories and is linked to an individual JSON file. After the user selects a value from the dropdown menu, the relevant JSON file is passed to Amazon Titan Text Embeddings V2, which converts the text into embeddings. These embeddings are stored in a vector database for faster retrieval.
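The link between a dropdown choice and its JSON file can be sketched as a simple lookup table. This is only an illustration: the type names and file names follow the ones used in the code later in this post, which lists three schema types, and `schema_file_for` is a hypothetical helper name.

```python
# Each dropdown choice maps to exactly one schema file.
SCHEMA_FILES = {
    "Schema_Type_A": "Table_Schema_A.json",
    "Schema_Type_B": "Table_Schema_B.json",
    "Schema_Type_C": "Table_Schema_C.json",
}


def schema_file_for(schema_type: str) -> str:
    """Return the JSON file for a dropdown choice, failing loudly on unknown values."""
    try:
        return SCHEMA_FILES[schema_type]
    except KeyError:
        raise ValueError(f"Unknown schema type: {schema_type!r}") from None


print(schema_file_for("Schema_Type_A"))
```

Adding another category then only requires one more dictionary entry, and the dropdown options can be derived from `SCHEMA_FILES.keys()`.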

We add the prompt template to the FM to define the role and responsibilities of the model. You can add additional information, such as which SQL engine should be used to generate the SQL queries.

When the user provides input through the chat prompt, we use similarity search to find the relevant table metadata in the vector database for the user's query. The user input is combined with the relevant table metadata and the prompt template, and the result is passed to the FM as a single input. The FM generates the SQL query based on this final input.
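The combination step described above can be pictured as plain string assembly. The sketch below is an assumption-laden illustration, not what the LangChain chain used later does internally: `PROMPT_TEMPLATE`, `build_prompt`, and the `---` chunk delimiter are all hypothetical.

```python
# Hypothetical template with named slots for the retrieved metadata and the question.
PROMPT_TEMPLATE = (
    "You are a SQL assistant. Use only the tables described in the context.\n\n"
    "Context:\n{context}\n\n"
    "Question: {question}\n"
)


def build_prompt(question: str, retrieved_chunks: list[str]) -> str:
    """Merge retrieved table metadata and the user question into one model input."""
    context = "\n---\n".join(chunk.strip() for chunk in retrieved_chunks)
    return PROMPT_TEMPLATE.format(context=context, question=question.strip())


chunks = ['{"name": "schema_a.orders", "description": "orders placed by customers"}']
prompt = build_prompt("  Count of orders cancelled last month ", chunks)
print(prompt)
```

Because the retrieved metadata is passed as a format argument rather than pasted into the template, the JSON braces in each chunk are inserted verbatim.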

To evaluate the model's accuracy and keep a record of its behavior, we store every user input and model output in Amazon Simple Storage Service (Amazon S3).

Prerequisites

To create this solution, complete the following prerequisites:

- Sign up for an AWS account if you don't already have one.

- Enable model access for Amazon Titan Text Embeddings V2 and Anthropic's Claude 3.5 Sonnet on Amazon Bedrock.

- Create an S3 bucket named 'simplesql-logs-****', replacing '****' with your unique identifier. Bucket names are globally unique across the entire Amazon S3 service.

- Choose your testing environment. We recommend that you test in Amazon SageMaker Studio, although you can use other available local environments.

- Install the following libraries to execute the code:

pip install streamlit

pip install jq

pip install openpyxl

pip install "faiss-cpu"

pip install langchain
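After installing, a quick check that the packages are importable can save a failed first run. The following is a minimal sketch using only the standard library; `missing_modules` is a hypothetical helper, and the import name `faiss` for the `faiss-cpu` package is stated here as an assumption about how that package is imported.

```python
import importlib.util


def missing_modules(module_names: list[str]) -> list[str]:
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in module_names if importlib.util.find_spec(name) is None]


# Import names for the packages installed above (faiss-cpu is imported as "faiss").
REQUIRED = ["streamlit", "jq", "openpyxl", "faiss", "langchain"]

not_installed = missing_modules(REQUIRED)
if not_installed:
    print("Missing modules:", ", ".join(not_installed))
else:
    print("All required modules are importable.")
```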

Procedure

There are three main components in this solution:

- JSON files to store the table schema and configure the LLM

- Vector indexing using Amazon Bedrock

- Streamlit for the front-end UI

You can download all three components and the code snippets provided in the following section.

Generate the table schema

We use the JSON format to store the table schema. To provide more inputs to the model, we add a table name and its description, columns and their synonyms, and sample queries in our JSON files. Create a JSON file named Table_Schema_A.json by copying the following code into it:

{
  "tables": [
    {
      "separator": "table_1",
      "name": "schema_a.orders",
      "schema": "CREATE TABLE schema_a.orders (order_id character varying(200), order_date timestamp without time zone, customer_id numeric(38,0), order_status character varying(200), item_id character varying(200) );",
      "description": "This table stores information about orders placed by customers.",
      "columns": [
        {
          "name": "order_id",
          "description": "unique identifier for orders.",
          "synonyms": ["order id"]
        },
        {
          "name": "order_date",
          "description": "timestamp when the order was placed",
          "synonyms": ["order time", "order day"]
        },
        {
          "name": "customer_id",
          "description": "Id of the customer associated with the order",
          "synonyms": ["customer id", "userid"]
        },
        {
          "name": "order_status",
          "description": "current status of the order, sample values are: shipped, delivered, cancelled",
          "synonyms": ["order status"]
        },
        {
          "name": "item_id",
          "description": "item associated with the order",
          "synonyms": ["item id"]
        }
      ],
      "sample_queries": [
        {
          "query": "select count(order_id) as total_orders from schema_a.orders where customer_id = '9782226' and order_status = 'cancelled'",
          "user_input": "Count of orders cancelled by customer id: 9782226"
        }
      ]
    },
    {
      "separator": "table_2",
      "name": "schema_a.customers",
      "schema": "CREATE TABLE schema_a.customers (customer_id numeric(38,0), customer_name character varying(200), registration_date timestamp without time zone, country character varying(200) );",
      "description": "This table stores the details of customers.",
      "columns": [
        {
          "name": "customer_id",
          "description": "Id of the customer, unique identifier for customers",
          "synonyms": ["customer id"]
        },
        {
          "name": "customer_name",
          "description": "name of the customer",
          "synonyms": ["name"]
        },
        {
          "name": "registration_date",
          "description": "registration timestamp when customer registered",
          "synonyms": ["sign up time", "registration time"]
        },
        {
          "name": "country",
          "description": "customer's original country",
          "synonyms": ["location", "customer's region"]
        }
      ],
      "sample_queries": [
        {
          "query": "select count(customer_id) as total_customers from schema_a.customers where country = 'India' and to_char(registration_date, 'YYYY') = '2024'",
          "user_input": "The number of customers registered from India in 2024"
        },
        {
          "query": "select count(o.order_id) as order_count from schema_a.orders o join schema_a.customers c on o.customer_id = c.customer_id where c.customer_name = 'john' and to_char(o.order_date, 'YYYY-MM') = '2024-01'",
          "user_input": "Total orders placed in January 2024 by customer name john"
        }
      ]
    },
    {
      "separator": "table_3",
      "name": "schema_a.items",
      "schema": "CREATE TABLE schema_a.items (item_id character varying(200), item_name character varying(200), listing_date timestamp without time zone );",
      "description": "This table stores the complete details of items listed in the catalog.",
      "columns": [
        {
          "name": "item_id",
          "description": "Id of the item, unique identifier for items",
          "synonyms": ["item id"]
        },
        {
          "name": "item_name",
          "description": "name of the item",
          "synonyms": ["name"]
        },
        {
          "name": "listing_date",
          "description": "listing timestamp when the item was registered",
          "synonyms": ["listing time", "registration time"]
        }
      ],
      "sample_queries": [
        {
          "query": "select count(item_id) as total_items from schema_a.items where to_char(listing_date, 'YYYY') = '2024'",
          "user_input": "how many items are listed in 2024"
        },
        {
          "query": "select count(o.order_id) as order_count from schema_a.orders o join schema_a.customers c on o.customer_id = c.customer_id join schema_a.items i on o.item_id = i.item_id where c.customer_name = 'john' and i.item_name = 'iphone'",
          "user_input": "how many orders are placed for item 'iphone' by customer name john"
        }
      ]
    }
  ]
}
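Before indexing a schema file, it can help to confirm that it parses as JSON and carries the keys the prompt relies on. The following is a minimal sketch under that assumption; `find_schema_problems` and `REQUIRED_TABLE_KEYS` are hypothetical names, and the inline sample is a shortened one-table version of the file above.

```python
import json

# Keys each table entry is expected to carry, following the structure shown above.
REQUIRED_TABLE_KEYS = {"separator", "name", "schema", "description", "columns", "sample_queries"}


def find_schema_problems(document: dict) -> list[str]:
    """Return human-readable problems found in a table-schema document."""
    tables = document.get("tables")
    if not isinstance(tables, list):
        return ["top-level 'tables' must be a list"]
    problems = []
    for i, table in enumerate(tables):
        label = table.get("name", f"tables[{i}]")
        missing = REQUIRED_TABLE_KEYS - table.keys()
        if missing:
            problems.append(f"{label}: missing keys {sorted(missing)}")
        for column in table.get("columns", []):
            if not isinstance(column.get("synonyms"), list):
                problems.append(f"{label}.{column.get('name')}: 'synonyms' must be a list")
    return problems


sample = json.loads("""
{
  "tables": [
    {
      "separator": "table_1",
      "name": "schema_a.orders",
      "schema": "CREATE TABLE schema_a.orders (order_id character varying(200));",
      "description": "This table stores information about orders placed by customers.",
      "columns": [
        {"name": "order_id", "description": "unique identifier for orders.", "synonyms": ["order id"]}
      ],
      "sample_queries": []
    }
  ]
}
""")
print(find_schema_problems(sample))
```

For a file on disk, the same function can be called on the result of `json.load(open("Table_Schema_A.json"))`; a `json.JSONDecodeError` at that point means the file itself is not valid JSON.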

Configure the LLM and initialize vector indexing using Amazon Bedrock

Create a Python file named library.py by following these steps:

- Add the following import statements to add the necessary libraries:

import boto3 # AWS SDK for Python

from langchain_community.document_loaders import JSONLoader # Utility to load JSON files

from langchain.llms import Bedrock # Large Language Model (LLM) from Anthropic

from langchain_community.chat_models import BedrockChat # Chat interface for Bedrock LLM

from langchain.embeddings import BedrockEmbeddings # Embeddings for Titan model

from langchain.memory import ConversationBufferWindowMemory # Memory to store chat conversations

from langchain.indexes import VectorstoreIndexCreator # Create vector indexes

from langchain.vectorstores import FAISS # Vector store using FAISS library

from langchain.text_splitter import RecursiveCharacterTextSplitter # Split text into chunks

from langchain.chains import ConversationalRetrievalChain # Conversational retrieval chain

from langchain.callbacks.manager import CallbackManager

- Initialize the Amazon Bedrock client and configure Anthropic's Claude 3.5 Sonnet. You can limit the number of output tokens to optimize cost:

# Create a Boto3 client for Bedrock Runtime
bedrock_runtime = boto3.client(
    service_name="bedrock-runtime",
    region_name="us-east-1"
)

# Function to get the LLM (Large Language Model)
def get_llm():
    model_kwargs = {  # Configuration for Anthropic model
        "max_tokens": 512,  # Maximum number of tokens to generate
        "temperature": 0.2,  # Sampling temperature for controlling randomness
        "top_k": 250,  # Consider the top k tokens for sampling
        "top_p": 1,  # Consider the top p probability tokens for sampling
        "stop_sequences": ["\n\nHuman:"]  # Stop sequences for generation
    }
    # Create a callback manager with no handlers
    callback_manager = CallbackManager([])
    llm = BedrockChat(
        model_id="anthropic.claude-3-5-sonnet-20240620-v1:0",  # Set the foundation model
        model_kwargs=model_kwargs,  # Pass the configuration to the model
        callback_manager=callback_manager
    )
    return llm

- Create and return an index for the given schema type. This approach is an efficient way to filter tables and provide relevant input to the model:

# Function to load the schema file based on the schema type
def load_schema_file(schema_type):
    if schema_type == 'Schema_Type_A':
        schema_file = "Table_Schema_A.json"  # Path to Schema Type A
    elif schema_type == 'Schema_Type_B':
        schema_file = "Table_Schema_B.json"  # Path to Schema Type B
    elif schema_type == 'Schema_Type_C':
        schema_file = "Table_Schema_C.json"  # Path to Schema Type C
    else:
        raise ValueError(f"Unknown schema type: {schema_type}")  # Fail clearly on an unexpected value
    return schema_file

# Function to get the vector index for the given schema type
def get_index(schema_type):
    embeddings = BedrockEmbeddings(model_id="amazon.titan-embed-text-v2:0",
                                   client=bedrock_runtime)  # Initialize embeddings
    db_schema_loader = JSONLoader(
        file_path=load_schema_file(schema_type),  # Load the schema file
        # file_path="Table_Schema_RP.json",  # Uncomment to use a different file
        jq_schema=".",  # Select the entire JSON content
        text_content=False)  # Allow non-string content (the whole JSON object)
    db_schema_text_splitter = RecursiveCharacterTextSplitter(  # Create a text splitter
        separators=["separator"],  # Split chunks at the "separator" string
        chunk_size=10000,  # Allow up to 10,000 characters per chunk
        chunk_overlap=100  # Allow 100 characters to overlap with previous chunk
    )
    db_schema_index_creator = VectorstoreIndexCreator(
        vectorstore_cls=FAISS,  # Use FAISS vector store
        embedding=embeddings,  # Use the initialized embeddings
        text_splitter=db_schema_text_splitter  # Use the text splitter
    )
    db_index_from_loader = db_schema_index_creator.from_loaders([db_schema_loader])  # Create index from loader
    return db_index_from_loader

- Use the following function to create and return the memory for the chat session:

# Function to get the memory for storing chat conversations
def get_memory():
    memory = ConversationBufferWindowMemory(memory_key="chat_history", return_messages=True)  # Create memory
    return memory

- Use the following prompt template to generate SQL queries based on user input:

# Template for the question prompt
template = """ Read table information from the context. Each table contains the following information:
- Name: The name of the table
- Description: A brief description of the table
- Columns: The columns of the table, listed under the 'columns' key. Each column contains:
    - Name: The name of the column
    - Description: A brief description of the column
    - Type: The data type of the column
    - Synonyms: Optional synonyms for the column name
- Sample Queries: Optional sample queries for the table, listed under the 'sample_queries' key

Given this structure, your task is to provide the SQL query using Amazon Redshift syntax that would retrieve the data for the following question. The produced query should be functional, efficient, and adhere to best practices in SQL query optimization.

Question: {}
"""

- Use the following function to get a response from the RAG chat model:

# Function to get the response from the conversational retrieval chain
def get_rag_chat_response(input_text, memory, index):
    llm = get_llm()  # Get the LLM
    conversation_with_retrieval = ConversationalRetrievalChain.from_llm(
        llm, index.vectorstore.as_retriever(), memory=memory, verbose=True)  # Create conversational retrieval chain
    chat_response = conversation_with_retrieval.invoke({"question": template.format(input_text)})  # Invoke the chain
    return chat_response['answer']  # Return the answer
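The `template.format(input_text)` call relies on the single positional `{}` placeholder in the template. The sketch below uses a shortened template to show how that substitution behaves; `json_template` is a hypothetical variant included only to illustrate the brace-escaping rule.

```python
# Positional placeholder, as in the post's template (shortened here for illustration).
template = """Provide the SQL query using Amazon Redshift syntax.

Question: {}
"""

prompt = template.format("how many items are listed in 2024")

# Braces inside the argument are inserted verbatim; format() does not re-parse them.
prompt_with_braces = template.format("orders where status in {shipped, delivered}")

# Hypothetical variant: literal braces inside the template itself must be doubled.
json_template = 'Reply as {{"sql": "<query>"}}.\n\nQuestion: {}\n'
json_prompt = json_template.format("count customers from India")

print(prompt)
print(json_prompt)
```

If the template ever gains literal braces, for example an inline JSON sample, leaving them undoubled makes `format` raise an error or substitute into the wrong slot.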

Configure Streamlit for the front-end UI

Create the app.py file by following these steps:

- Import the necessary libraries:

import streamlit as st

import library as lib

from io import StringIO

import boto3

from datetime import datetime

import csv

import pandas as pd

from io import BytesIO

- Initialize the S3 client:

s3_client = boto3.client('s3')

bucket_name="simplesql-logs-****"

# Replace 'simplesql-logs-****' with your S3 bucket name

log_file_key = 'logs.xlsx'

- Configure Streamlit for the UI:

st.set_page_config(page_title="Your App Name")
st.title("Your App Name")

# Define the available menu items for the sidebar
menu_items = ["Home", "How To", "Generate SQL Query"]

# Create a sidebar menu using radio buttons
selected_menu_item = st.sidebar.radio("Menu", menu_items)

# Home page content
if selected_menu_item == "Home":
    # Display introductory information about the application
    st.write("This application allows you to generate SQL queries from natural language input.")
    st.write("")
    st.write("**Get Started** by selecting the button Generate SQL Query !")
    st.write("")
    st.write("")
    st.write("**Disclaimer :**")
    st.write("- Model's response depends on user's input (prompt). Please visit How-to section for writing efficient prompts.")

# How-to page content
elif selected_menu_item == "How To":
    # Provide guidance on how to use the application effectively
    st.write("The model's output completely depends on the natural language input. Below are some examples which you can keep in mind while asking the questions.")
    st.write("")
    st.write("")
    st.write("")
    st.write("")
    st.write("**Case 1 :**")
    st.write("- **Bad Input :** Cancelled orders")
    st.write("- **Good Input :** Write a query to extract the cancelled order count for the items which were listed this year")
    st.write("- It is always recommended to add required attributes, filters in your prompt.")
    st.write("**Case 2 :**")
    st.write("- **Bad Input :** I am working on XYZ project. I am creating a new metric and need the sales data. Can you provide me the sales at country level for 2023 ?")
    st.write("- **Good Input :** Write a query to extract sales at country level for orders placed in 2023 ")
    st.write("- Every input is processed as tokens. Do not provide un-necessary details as there is a cost associated with every token processed. Provide inputs only relevant to your query requirement.")

- Generate the query:

# SQL-AI page content
elif selected_menu_item == "Generate SQL Query":
    # Define the available schema types for selection
    schema_types = ["Schema_Type_A", "Schema_Type_B", "Schema_Type_C"]
    schema_type = st.sidebar.selectbox("Select Schema Type", schema_types)

- Use the following for SQL generation:

    if schema_type:
        # Initialize or retrieve conversation memory from session state
        if 'memory' not in st.session_state:
            st.session_state.memory = lib.get_memory()

        # Initialize or retrieve chat history from session state
        if 'chat_history' not in st.session_state:
            st.session_state.chat_history = []

        # Initialize or update vector index based on selected schema type
        if 'vector_index' not in st.session_state or 'current_schema' not in st.session_state or st.session_state.current_schema != schema_type:
            with st.spinner("Indexing document..."):
                # Create a new index for the selected schema type
                st.session_state.vector_index = lib.get_index(schema_type)
                # Update the current schema in session state
                st.session_state.current_schema = schema_type

        # Display the chat history
        for message in st.session_state.chat_history:
            with st.chat_message(message["role"]):
                st.markdown(message["text"])

        # Get user input through the chat interface, set the max limit to control the input tokens.
        input_text = st.chat_input("Chat with your bot here", max_chars=100)

        if input_text:
            # Display user input in the chat interface
            with st.chat_message("user"):
                st.markdown(input_text)

            # Add user input to the chat history
            st.session_state.chat_history.append({"role": "user", "text": input_text})

            # Generate chatbot response using the RAG model
            chat_response = lib.get_rag_chat_response(
                input_text=input_text,
                memory=st.session_state.memory,
                index=st.session_state.vector_index
            )

            # Display chatbot response in the chat interface
            with st.chat_message("assistant"):
                st.markdown(chat_response)

            # Add chatbot response to the chat history
            st.session_state.chat_history.append({"role": "assistant", "text": chat_response})

- Log the conversations to the S3 bucket:

            timestamp = datetime.now().strftime('%Y-%m-%d %H:%M:%S')

            try:
                # Attempt to download the existing log file from S3
                log_file_obj = s3_client.get_object(Bucket=bucket_name, Key=log_file_key)
                log_file_content = log_file_obj['Body'].read()
                df = pd.read_excel(BytesIO(log_file_content))
            except s3_client.exceptions.NoSuchKey:
                # If the log file doesn't exist, create a new DataFrame
                df = pd.DataFrame(columns=["User Input", "Model Output", "Timestamp", "Schema Type"])

            # Create a new row with the current conversation data
            new_row = pd.DataFrame({
                "User Input": [input_text],
                "Model Output": [chat_response],
                "Timestamp": [timestamp],
                "Schema Type": [schema_type]
            })

            # Append the new row to the existing DataFrame
            df = pd.concat([df, new_row], ignore_index=True)

            # Prepare the updated DataFrame for S3 upload
            output = BytesIO()
            df.to_excel(output, index=False)
            output.seek(0)

            # Upload the updated log file to S3
            s3_client.put_object(Body=output.getvalue(), Bucket=bucket_name, Key=log_file_key)
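The shape of one log entry can be isolated into a small pure function, which makes the logging step easier to test without S3 or Streamlit. This is a sketch only: `build_log_row` and `LOG_COLUMNS` are hypothetical names, while the column names and timestamp format follow the logging code above.

```python
from datetime import datetime
from typing import Optional

# Column order used by the log file in the code above.
LOG_COLUMNS = ["User Input", "Model Output", "Timestamp", "Schema Type"]


def build_log_row(input_text: str, chat_response: str, schema_type: str,
                  now: Optional[datetime] = None) -> dict:
    """Build one log record; 'now' can be injected to make tests deterministic."""
    now = now or datetime.now()
    return {
        "User Input": input_text,
        "Model Output": chat_response,
        "Timestamp": now.strftime("%Y-%m-%d %H:%M:%S"),
        "Schema Type": schema_type,
    }


row = build_log_row(
    "how many items are listed in 2024",
    "select count(item_id) as total_items from schema_a.items",
    "Schema_Type_A",
    now=datetime(2024, 1, 15, 9, 30, 0),
)
print(row)
```

A list of such rows can be turned into a DataFrame with `pd.DataFrame(rows, columns=LOG_COLUMNS)` before writing it out.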

Test the solution

Open your terminal and run the following command to start the Streamlit application.

streamlit run app.py

To visit the application using your browser, navigate to the localhost.

To visit the application using SageMaker, copy your notebook URL and replace 'default/lab' in the URL with 'default/proxy/8501/'. It should look something like the following:

https://your_sagemaker_lab_url.studio.us-east-1.sagemaker.aws/jupyterlab/default/proxy/8501/
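The URL rewrite can also be done programmatically. The sketch below assumes the notebook URL contains `/default/lab`, as the example URL above suggests, and that Streamlit listens on its default port 8501; `streamlit_proxy_url` is a hypothetical helper name.

```python
def streamlit_proxy_url(notebook_url: str, port: int = 8501) -> str:
    """Rewrite a JupyterLab URL so it points at the proxied Streamlit port."""
    marker = "/default/lab"
    head, found, _tail = notebook_url.partition(marker)
    if not found:
        raise ValueError("URL does not contain '/default/lab'")
    # Anything after the marker (for example an open file path) is dropped.
    return f"{head}/default/proxy/{port}/"


lab_url = "https://your_sagemaker_lab_url.studio.us-east-1.sagemaker.aws/jupyterlab/default/lab"
print(streamlit_proxy_url(lab_url))
```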

Choose Generate SQL Query to open the chat window. Test your application by asking questions in natural language. We tested the application with the following questions, and it generated accurate SQL queries.

Count of orders placed from India last month?

Write a query to extract the canceled order count for the items that were listed this year.

Write a query to extract the top 10 item names having the highest order count for each country.

Troubleshooting tips

Use the following solutions to address errors:

Error – An error raised by the inference endpoint means that an error occurred (AccessDeniedException) when calling the InvokeModel operation. You don't have access to the model with the specified model ID.

Solution – Make sure you have access to the FMs in Amazon Bedrock: Amazon Titan Text Embeddings V2 and Anthropic's Claude 3.5 Sonnet.

Error – app.py does not exist

Solution – Make sure your JSON file and Python files are in the same folder and that you're invoking the command from that folder.

Error – No module named streamlit

Solution – Open the terminal and install the streamlit module by running the command pip install streamlit

Error – An error occurred (NoSuchBucket) when calling the GetObject operation. The specified bucket doesn't exist.

Solution – Verify the bucket name in the app.py file and update it to match your S3 bucket name.

Clean up

Clean up the resources you created to avoid incurring charges. To clean up your S3 bucket, refer to Emptying a bucket.

Conclusion

In this post, we showed how Amazon Bedrock can be used to create a text-to-SQL application based on enterprise-specific datasets. We used Amazon S3 to store the outputs generated by the model for the corresponding inputs. These logs can be used to test accuracy and to enhance the context by providing more details in the knowledge base. With the help of a tool like this, you can create automated solutions that are accessible to nontechnical users, letting them interact with data more efficiently.

Ready to get started with Amazon Bedrock? Start learning with [these interactive workshops](https://workshops.aws/categories/amazon%20Bedrock).

For more information on SQL generation, refer to these posts:

We recently launched a managed NL2SQL module to retrieve structured data in Amazon Bedrock Knowledge Bases. To learn more, visit Amazon Bedrock Knowledge Bases now supports structured data retrieval.

About the author

Rajendra Choudhary is a business analyst at Amazon. With 7 years of experience in developing data solutions, he has deep expertise in data visualization, data modeling, and data engineering. He is passionate about supporting customers by using generative AI-based solutions. Outside of work, Rajendra is an avid food and music enthusiast, and he enjoys swimming and walking.
