Retrieval Augmented Generation (RAG) is a state-of-the-art approach to building question answering systems that combines the strengths of retrieval models and foundation models (FMs). A RAG system first retrieves relevant information from a large corpus of text and then uses an FM to synthesize an answer based on the retrieved information.
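To make the retrieve-then-generate pattern concrete, here is a minimal, self-contained Python sketch. It is an illustration under simplifying assumptions, not how Knowledge Bases for Amazon Bedrock works internally: it swaps in bag-of-words cosine similarity where the real workflow uses embedding vectors stored in OpenSearch Serverless, and it stops at building the grounded prompt instead of calling an FM. The function names are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list:
    # Lowercase the text and split it into alphanumeric tokens.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two bag-of-words count vectors.
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, corpus: list, k: int = 2) -> list:
    # Retrieval step: rank passages by similarity to the query, keep the top k.
    q = Counter(tokenize(query))
    ranked = sorted(corpus, key=lambda doc: cosine(q, Counter(tokenize(doc))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, passages: list) -> str:
    # Generation step (stubbed): ground the FM's answer in the retrieved passages.
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = [
        "Amazon S3 stores objects in buckets.",
        "OpenSearch Serverless can store vector embeddings.",
    ]
    question = "Where are vector embeddings stored?"
    # In a real RAG system, this prompt would be sent to an FM.
    print(build_prompt(question, retrieve(question, docs, k=1)))
```

Keeping retrieval and prompt construction as separate functions mirrors the two stages described above, so either stage can be replaced (for example, with an embedding model and a vector store) without touching the other.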

An end-to-end RAG solution involves several components: a knowledge base, a retrieval system, and a generation system. Building and deploying these components can be complex and error-prone, especially when working with large-scale data and models.

This post demonstrates how to automate the deployment of an end-to-end RAG solution using Knowledge Bases for Amazon Bedrock and AWS CloudFormation, enabling organizations to quickly set up a working RAG system.

Solution Overview

The solution provides an automated end-to-end deployment of a RAG workflow using Knowledge Bases for Amazon Bedrock. We use AWS CloudFormation to set up the necessary resources, including:

- An AWS Identity and Access Management (IAM) role

- An Amazon OpenSearch Serverless collection and index

- A knowledge base with its associated data source

The RAG workflow lets you take documents stored in an Amazon Simple Storage Service (Amazon S3) bucket and combine them with the natural language processing capabilities of the FMs available in Amazon Bedrock. The solution simplifies the setup process, so you can quickly deploy it and start querying your data using the FM of your choice.

Prerequisites

To implement the solution provided in this post, you must have the following:

- An active AWS account and familiarity with FMs, Amazon Bedrock, and OpenSearch Serverless.

- An S3 bucket where your documents are stored in a supported format (.txt, .md, .html, .doc/.docx, .csv, .xls/.xlsx, .pdf).

- The Amazon Titan Embeddings G1-Text model enabled in Amazon Bedrock. You can confirm this on the Model access page of the Amazon Bedrock console. If the Amazon Titan Embeddings G1-Text model is enabled, its access status is displayed as Access granted, as shown in the following screenshot.
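Before deploying, it can help to check which documents in your bucket have one of the supported extensions listed above. The following Python sketch is a hypothetical helper (split_by_support is not part of the solution); it only inspects file name suffixes and assumes you already have a list of object keys, for example from listing the bucket with the AWS SDK. Check the current Amazon Bedrock documentation, because the supported formats may change.

```python
from pathlib import PurePosixPath
from typing import Iterable, List, Tuple

# Extensions listed as supported in this post (may change over time).
SUPPORTED_EXTENSIONS = {
    ".txt", ".md", ".html", ".doc", ".docx", ".csv", ".xls", ".xlsx", ".pdf",
}


def split_by_support(keys: Iterable[str]) -> Tuple[List[str], List[str]]:
    # Partition S3 object keys by whether their extension is supported.
    supported, unsupported = [], []
    for key in keys:
        suffix = PurePosixPath(key).suffix.lower()  # "" when there is no extension
        (supported if suffix in SUPPORTED_EXTENSIONS else unsupported).append(key)
    return supported, unsupported
```

Matching on the lowercase suffix treats report.PDF and report.pdf the same way; files without an extension are reported as unsupported.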

Configure the solution

Once you have completed the previous steps, you are ready to configure the solution:

- Clone the GitHub repository containing the solution files:

- Go to the solution directory:

- Run the deployment.sh script, which creates the deployment bucket, prepares the CloudFormation templates, and uploads the processed templates and required artifacts to the deployment bucket:

When you run deployment.sh, if you pass a bucket name as an argument, a deployment bucket with that name is created. Otherwise, the default name format is used: e2e-rag-deployment-${ACCOUNT_ID}-${AWS_REGION}
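The naming rule above can be expressed as a small Python function. This is an illustrative sketch, not the logic inside deployment.sh: the function name deployment_bucket_name is hypothetical, and the validation step is an extra check we add against a subset of the Amazon S3 general purpose bucket naming rules (3 to 63 characters; lowercase letters, digits, periods, and hyphens; starting and ending with a letter or digit).

```python
import re
from typing import Optional

# Subset of the S3 general purpose bucket naming rules (not exhaustive).
BUCKET_NAME_PATTERN = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")


def deployment_bucket_name(account_id: str, region: str,
                           bucket_name: Optional[str] = None) -> str:
    # Use the caller-supplied name if given, otherwise the default format.
    name = bucket_name or f"e2e-rag-deployment-{account_id}-{region}"
    if not BUCKET_NAME_PATTERN.fullmatch(name):
        raise ValueError(f"Invalid S3 bucket name: {name!r}")
    return name
```

For example, deployment_bucket_name("123456789012", "us-east-1") returns e2e-rag-deployment-123456789012-us-east-1, while a custom name such as Bad_Name is rejected before any AWS call is made.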

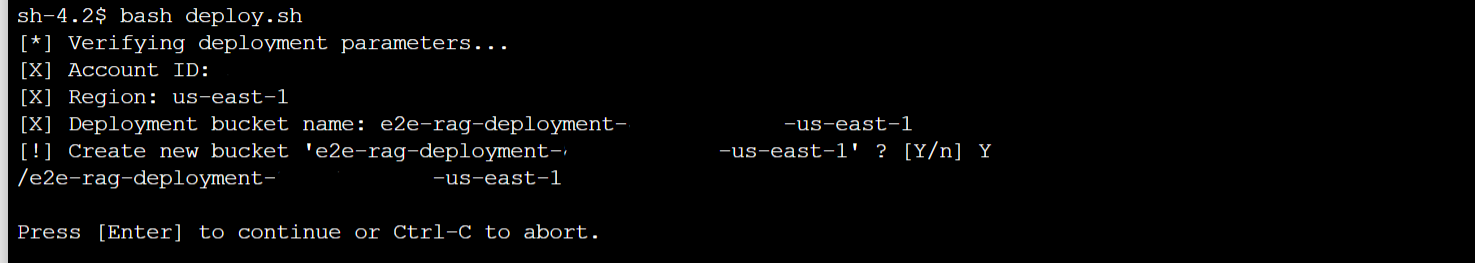

As shown in the following screenshot, if you complete the preceding steps on an Amazon SageMaker notebook instance, you can run bash deployment.sh in the terminal, which creates the deployment bucket in your account (account number redacted).

- After the script completes, note the S3 URL of main-template-out.yml.

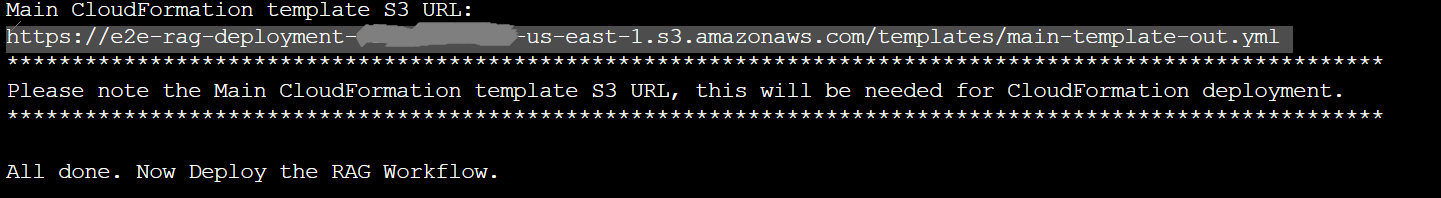
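If you prefer to assemble the template URL yourself, the following Python sketch builds a virtual-hosted-style Amazon S3 URL. It assumes, hypothetically, that main-template-out.yml sits at the root of the deployment bucket; the actual key prefix used by deployment.sh may differ, so copying the URL from the S3 console remains the safer option.

```python
from urllib.parse import quote


def template_url(bucket: str, region: str, key: str = "main-template-out.yml") -> str:
    # Virtual-hosted-style S3 URL; the default key location is an assumption.
    # quote() percent-encodes unsafe characters but keeps "/" as a path separator.
    return f"https://{bucket}.s3.{region}.amazonaws.com/{quote(key, safe='/')}"
```

Pass the real object key (including any prefix) as the key argument if the template is not at the bucket root.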

- In the AWS CloudFormation console, create a new stack.

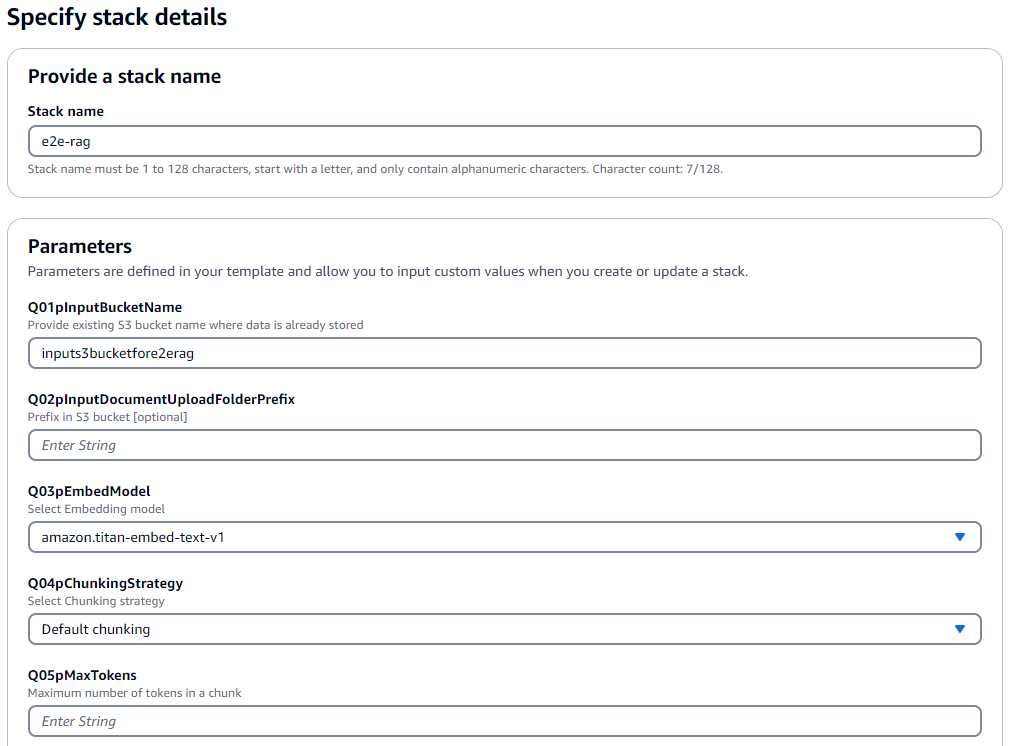

- For Template source, select Amazon S3 URL and enter the URL you noted earlier.

- Choose Next.

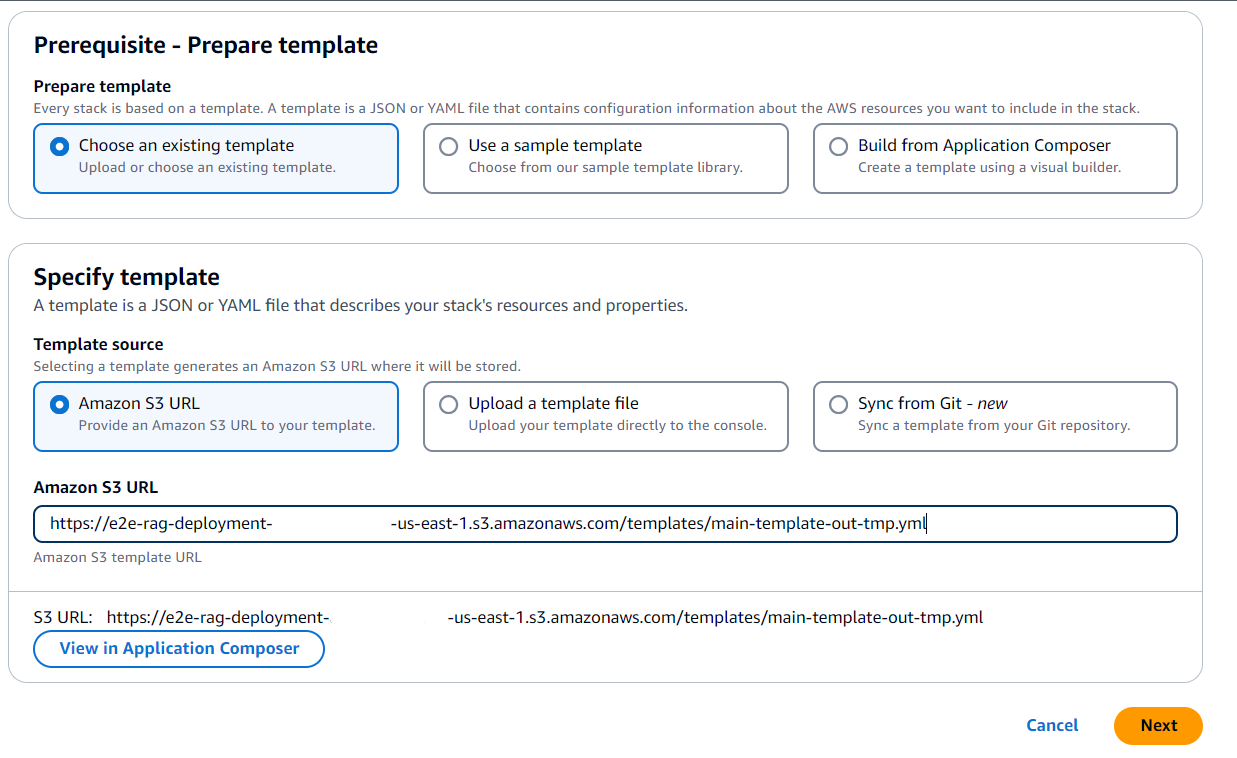

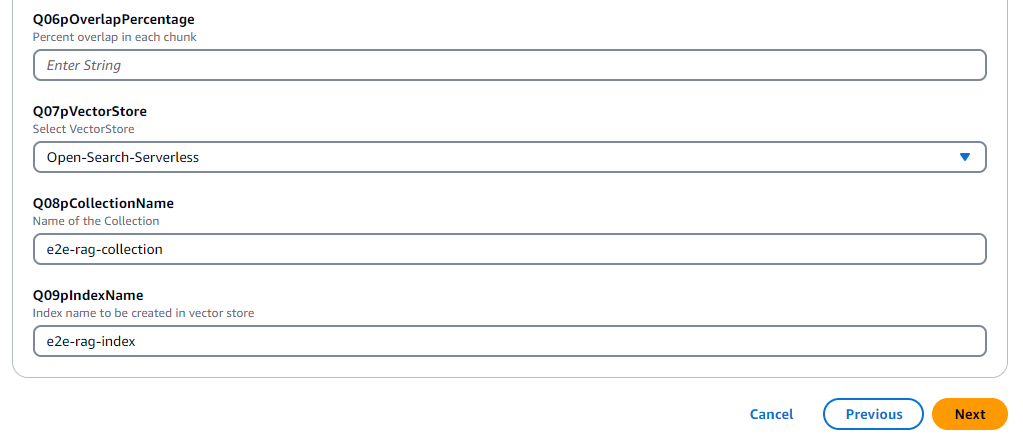

- Provide a stack name, specify the RAG workflow details based on your use case, and then choose Next.

- Leave everything else as default and choose Next on the following pages.

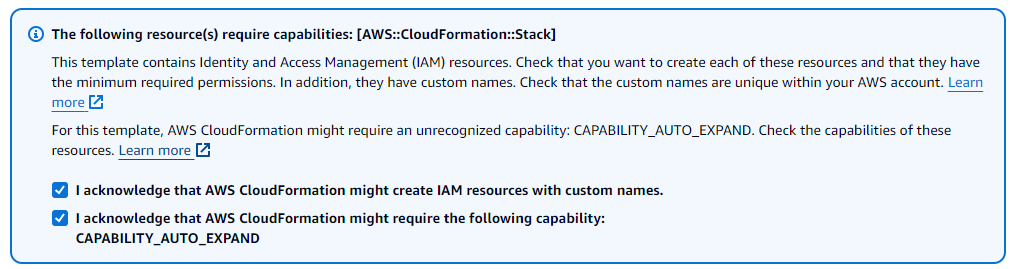
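CloudFormation rejects stack names that break its naming rules: a stack name can contain only letters, digits, and hyphens, must start with a letter, and can be at most 128 characters long. The following Python sketch (is_valid_stack_name is a hypothetical helper) checks a candidate name against those rules before you enter it in the console.

```python
import re

# Letters, digits, and hyphens only; must start with a letter; max 128 characters.
STACK_NAME_PATTERN = re.compile(r"[A-Za-z][A-Za-z0-9-]{0,127}")


def is_valid_stack_name(name: str) -> bool:
    # fullmatch ensures the whole string follows the pattern.
    return STACK_NAME_PATTERN.fullmatch(name) is not None
```

For instance, e2e-rag-stack passes, while names such as 1stack or my_stack do not.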

- Review the stack details and select the acknowledgement check boxes.

- Choose Submit to start the deployment process.

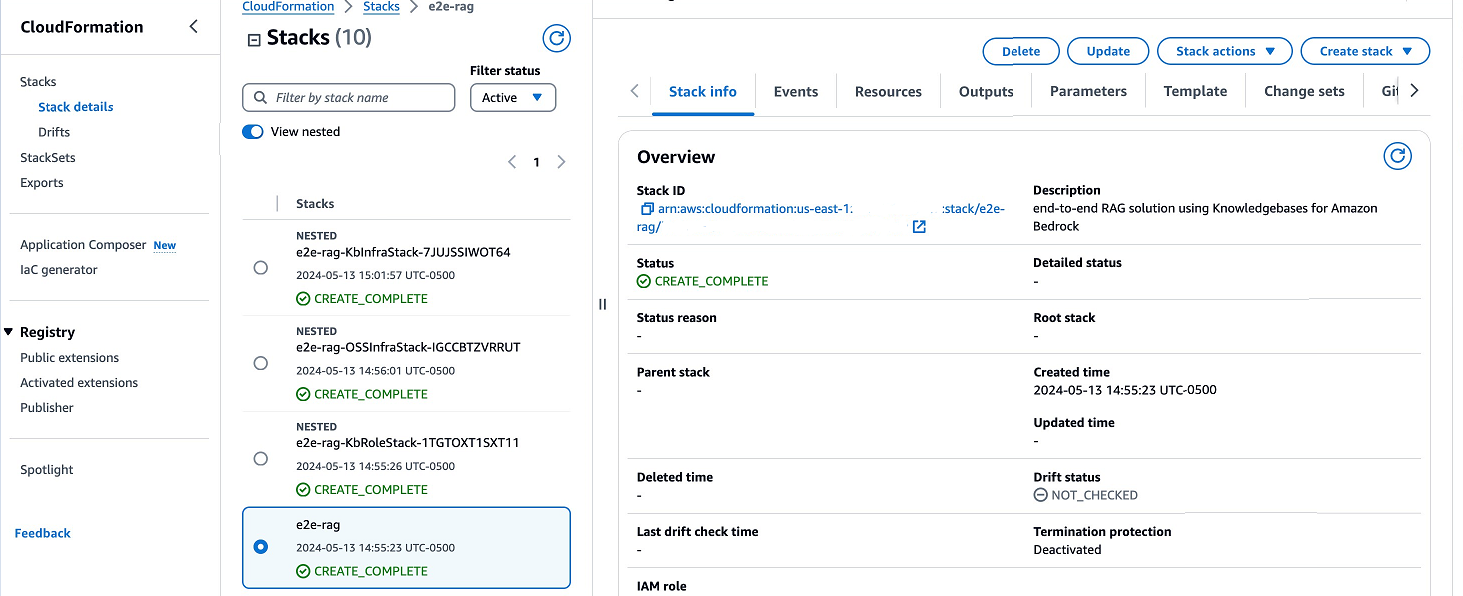

You can monitor the progress of your stack deployment in the AWS CloudFormation console.
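If you would rather monitor the deployment from a script than from the console, the following Python sketch polls a status function until the stack reaches a final state. The status getter is injected so the sketch stays self-contained; the function name wait_for_stack and the 30-minute default timeout are assumptions, not part of the solution.

```python
import time
from typing import Callable

SUCCESS_STATES = {"CREATE_COMPLETE"}
# ROLLBACK_IN_PROGRESS is not final, but it already signals a failed creation.
FAILURE_STATES = {"CREATE_FAILED", "ROLLBACK_IN_PROGRESS", "ROLLBACK_COMPLETE", "ROLLBACK_FAILED"}


def wait_for_stack(get_status: Callable[[], str], poll_seconds: float = 30.0,
                   timeout_seconds: float = 1800.0,
                   sleep: Callable[[float], None] = time.sleep) -> str:
    # Poll until the stack succeeds, fails, or the timeout is reached.
    waited = 0.0
    while True:
        status = get_status()
        if status in SUCCESS_STATES:
            return status
        if status in FAILURE_STATES:
            raise RuntimeError(f"Stack creation failed with status {status}")
        if waited >= timeout_seconds:
            raise TimeoutError(f"Stack still {status} after {waited:.0f}s")
        sleep(poll_seconds)
        waited += poll_seconds
```

With the AWS SDK for Python (Boto3), get_status could be lambda: cfn.describe_stacks(StackName=name)["Stacks"][0]["StackStatus"], where cfn is a CloudFormation client. Boto3 also offers a built-in waiter, cfn.get_waiter("stack_create_complete").wait(StackName=name), which is usually the simpler choice.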

Test the solution

After the deployment succeeds (which may take 7-10 minutes), you can begin testing the solution.

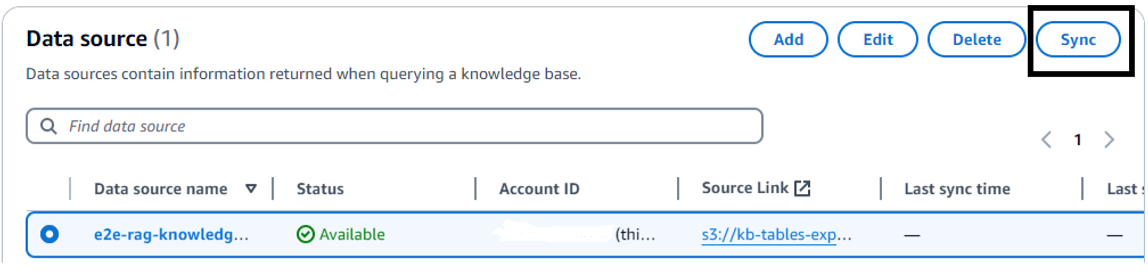

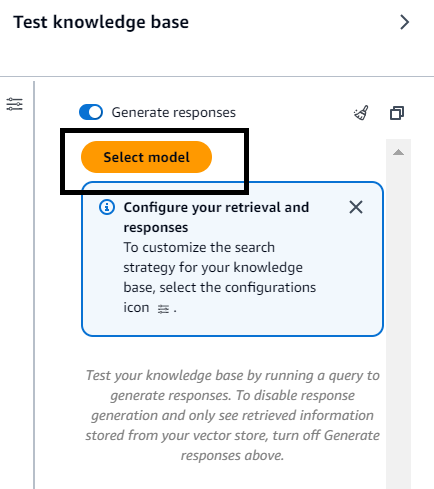

- In the Amazon Bedrock console, navigate to the knowledge base you created.

- Choose Sync to start the data ingestion job.

- After data synchronization is complete, select the FM you want to use for retrieval and generation (you must enable model access to this FM in Amazon Bedrock before you can use it).

- Start querying your data using natural language queries.
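Besides the console, you can query a knowledge base programmatically through the RetrieveAndGenerate API of the Amazon Bedrock agent runtime. The following Python sketch only builds the request and reads the answer from a response, so it runs without AWS credentials; the helper names are hypothetical, and the knowledge base ID and model ID you pass in must come from your own account.

```python
def foundation_model_arn(region: str, model_id: str) -> str:
    # ARN format for an Amazon Bedrock foundation model.
    return f"arn:aws:bedrock:{region}::foundation-model/{model_id}"


def build_retrieve_and_generate_request(question: str, knowledge_base_id: str,
                                        model_arn: str) -> dict:
    # Keyword arguments for retrieve_and_generate on a knowledge base.
    return {
        "input": {"text": question},
        "retrieveAndGenerateConfiguration": {
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,
                "modelArn": model_arn,
            },
        },
    }


def answer_text(response: dict) -> str:
    # The generated answer is returned under output.text.
    return response["output"]["text"]
```

With Boto3, you would pass the request as keyword arguments: boto3.client("bedrock-agent-runtime").retrieve_and_generate(**request). The same response also carries citations that point back to the retrieved source passages.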

That’s it! You can now interact with your documents using the RAG workflow powered by Amazon Bedrock.

Clean up

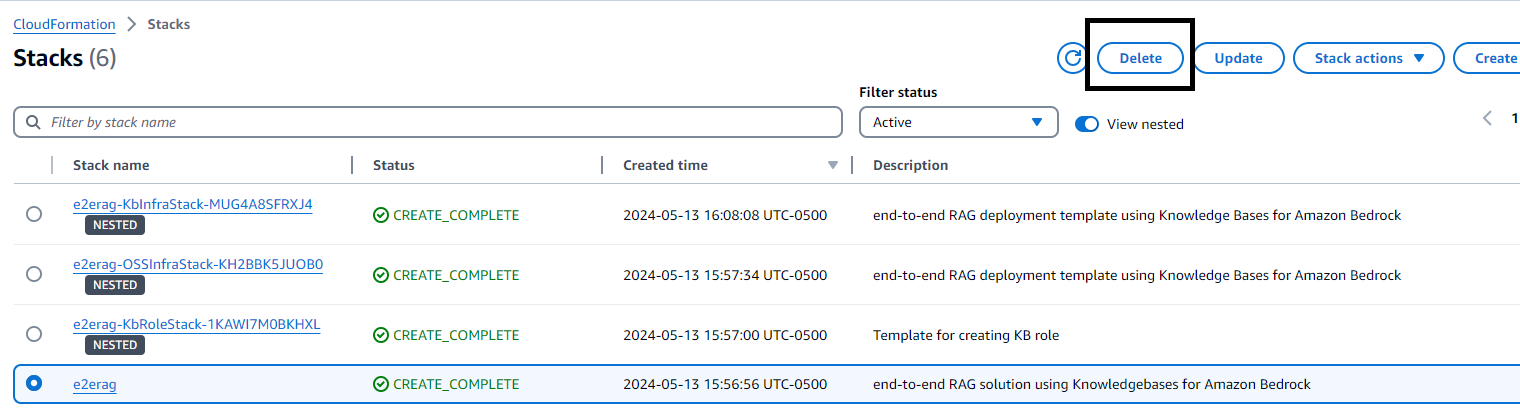

To avoid incurring future charges, delete the resources used in this solution:

- In the Amazon S3 console, manually delete the contents of the bucket you created for the template deployment, and then delete the bucket.

- In the AWS CloudFormation console, choose Stacks in the navigation pane, select the main stack, and choose Delete.

The created knowledge base will be deleted when you delete the stack.
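A bucket must be empty before it can be deleted. If the deployment bucket holds many objects, emptying it with the S3 DeleteObjects API is faster than deleting objects one at a time, but each request accepts at most 1,000 keys. The following Python sketch (delete_batches is a hypothetical helper) splits a list of keys into request payloads of that size.

```python
from typing import Iterable, Iterator

MAX_KEYS_PER_DELETE = 1000  # DeleteObjects accepts at most 1,000 keys per request


def delete_batches(keys: Iterable[str], batch_size: int = MAX_KEYS_PER_DELETE) -> Iterator[dict]:
    # Yield Delete payloads of at most batch_size keys each.
    if not 1 <= batch_size <= MAX_KEYS_PER_DELETE:
        raise ValueError("batch_size must be between 1 and 1000")
    batch = []
    for key in keys:
        batch.append({"Key": key})
        if len(batch) == batch_size:
            yield {"Objects": batch, "Quiet": True}
            batch = []
    if batch:  # remaining keys that did not fill a full batch
        yield {"Objects": batch, "Quiet": True}
```

With Boto3, each payload would be sent as s3.delete_objects(Bucket=bucket, Delete=payload). This sketch ignores object versions; a bucket with versioning enabled also needs its object versions removed before it can be deleted.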

Conclusion

In this post, we presented an automated solution to deploy an end-to-end RAG workflow using Knowledge Bases for Amazon Bedrock and AWS CloudFormation. By using AWS services and preconfigured CloudFormation templates, you can quickly set up a question answering system without the complexity of creating and deploying the individual components of a RAG application yourself. This automated deployment approach not only saves time and effort, but also provides a consistent and reproducible configuration, allowing you to focus on using the RAG workflow to extract valuable insights from your data.

Give it a try and see first-hand how it can streamline your RAG workflow implementation and improve efficiency. Share your feedback with us!

About the authors

Sandeep Singh is a Senior Data Scientist for Generative AI at Amazon Web Services, helping companies innovate with generative AI. He specializes in generative AI, machine learning, and system design. He has successfully delivered cutting-edge AI and ML-powered solutions to solve complex business problems for various industries, optimizing efficiency and scalability.

Yanyan Zhang is a Senior Generative AI Data Scientist at Amazon Web Services, where she has worked on cutting-edge AI/ML technologies as a Generative AI Specialist, helping customers use generative AI to achieve their desired outcomes. With a keen interest in exploring new frontiers in the field, she continually strives to push the boundaries. Outside of work, she loves traveling, exercising, and exploring new things.

Mani Khanuja is a technology leader and generative AI specialist, author of Applied Machine Learning and High Performance Computing on AWS, and a board member of the Women in Manufacturing Education Foundation. She leads machine learning projects across a variety of domains, including computer vision, natural language processing, and generative AI. She speaks at internal and external conferences, including AWS re:Invent, Women in Manufacturing West, YouTube webinars, and GHC 23. In her free time, she enjoys long runs on the beach.