Retrieval Augmented Generation (RAG) is a cutting-edge approach to building question answering systems that combines information retrieval with generative language models. A RAG system retrieves relevant information from a large corpus of text and then uses a generative language model to synthesize an answer grounded in the retrieved information.
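The following sketch makes the retrieve-then-generate flow concrete. It is deliberately simplified and self-contained, and it is not how Amazon Bedrock implements retrieval. It replaces embeddings and a vector index with bag-of-words cosine similarity, and it stops at building the grounded prompt instead of calling a model. The function names and the tiny corpus are hypothetical.

```python
"""Toy illustration of retrieve-then-generate (not Amazon Bedrock's implementation)."""
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and split on any non-alphanumeric character.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two bag-of-words term-count vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, corpus: list[str], k: int = 2) -> list[str]:
    # Retrieval step: rank passages by similarity to the query and keep the top k.
    q = Counter(tokenize(query))
    ranked = sorted(corpus, key=lambda doc: cosine(q, Counter(tokenize(doc))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, passages: list[str]) -> str:
    # Generation step (input side): ground the model by putting retrieved passages in the prompt.
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = [
        "Amazon S3 stores objects in buckets.",
        "OpenSearch Serverless can host vector indexes.",
        "The AWS CDK defines cloud infrastructure in code.",
    ]
    question = "Where are vector indexes stored?"
    print(build_prompt(question, retrieve(question, docs, k=1)))
```

In a real RAG system, the prompt produced by `build_prompt` would be sent to a generative model. Semantic embeddings would replace the word-overlap scoring.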

An end-to-end RAG solution involves several components, including a knowledge base, a retrieval system, and a generative language model. Building and deploying these components can be complex and error-prone, especially when working with large-scale data and models.

This post demonstrates how to automate the deployment of an end-to-end RAG solution using Knowledge Bases for Amazon Bedrock and the AWS Cloud Development Kit (AWS CDK), enabling organizations to quickly set up a powerful question answering system.

Solution Overview

The solution provides an automated, end-to-end implementation of a RAG workflow using Knowledge Bases for Amazon Bedrock. Using the AWS CDK, the solution configures the necessary resources, including an AWS Identity and Access Management (IAM) role, an Amazon OpenSearch Serverless collection and index, and a knowledge base with its associated data source.
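The vector index inside the OpenSearch Serverless collection stores one embedding per document chunk. Its vector field must therefore match the embedding model's output size. The following sketch builds such an index definition as a Python dictionary under these assumptions:

- Amazon Titan Embeddings V2 at its default 1,024 dimensions.
- The FAISS HNSW method.
- Field names commonly used with Amazon Bedrock knowledge bases.

The stacks in the solution repository may use different names or settings.

```python
import json

# Assumption: Amazon Titan Embeddings V2 at its default output size.
EMBEDDING_DIMENSION = 1024

# Assumed field names; the solution's stacks may use different ones.
VECTOR_FIELD = "bedrock-knowledge-base-default-vector"
TEXT_FIELD = "AMAZON_BEDROCK_TEXT_CHUNK"
METADATA_FIELD = "AMAZON_BEDROCK_METADATA"


def build_index_body(dimension: int = EMBEDDING_DIMENSION) -> dict:
    """Return an OpenSearch k-NN index definition for storing embedded chunks."""
    return {
        # Enable k-NN search on this index.
        "settings": {"index": {"knn": True}},
        "mappings": {
            "properties": {
                VECTOR_FIELD: {
                    "type": "knn_vector",
                    "dimension": dimension,
                    # HNSW graph built by the FAISS engine, using Euclidean (L2) distance.
                    "method": {"name": "hnsw", "engine": "faiss", "space_type": "l2"},
                },
                # Raw chunk text returned alongside search hits.
                TEXT_FIELD: {"type": "text"},
                # Metadata is stored but not indexed for search.
                METADATA_FIELD: {"type": "text", "index": False},
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_index_body(), indent=2))
```

If you configure a different embedding dimension, pass it to `build_index_body` so the index and the model stay consistent.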

The RAG workflow lets you combine document data stored in an Amazon Simple Storage Service (Amazon S3) bucket with the natural language processing (NLP) capabilities of the foundation models (FMs) available in Amazon Bedrock. The solution simplifies setup by letting you programmatically modify the infrastructure, deploy the model, and start querying your data using the selected FM.

Prerequisites

To implement the solution provided in this post, you must have the following:

- An active AWS account and familiarity with FMs, Amazon Bedrock, and Amazon OpenSearch Service.

- Model access enabled for the models you want to experiment with.

- The AWS CDK installed and configured. For installation instructions, see the AWS CDK Workshop.

- An S3 bucket containing your documents in a supported format (.txt, .md, .html, .doc/.docx, .csv, .xls/.xlsx, .pdf).

- The Amazon Titan Embeddings V2 model enabled in Amazon Bedrock. You can confirm this on the Model access page of the Amazon Bedrock console. If the model is enabled, its access status is displayed as Access granted, as shown in the following screenshot.
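Before syncing, it can help to check which objects in your bucket have one of the extensions listed above. The following minimal sketch classifies S3 object keys by extension using only the standard library. `partition_keys` is a hypothetical helper. It checks extensions only, not whether a file's contents are valid, and its case-insensitive comparison is a choice made in this sketch.

```python
from pathlib import PurePosixPath

# Extensions taken from the supported-format list in the prerequisites.
SUPPORTED_EXTENSIONS = {".txt", ".md", ".html", ".doc", ".docx", ".csv", ".xls", ".xlsx", ".pdf"}


def partition_keys(keys: list[str]) -> tuple[list[str], list[str]]:
    """Split S3 object keys into (supported, unsupported) lists by file extension."""
    supported, unsupported = [], []
    for key in keys:
        # This sketch compares extensions case-insensitively, so "Report.PDF" counts as .pdf.
        suffix = PurePosixPath(key).suffix.lower()
        (supported if suffix in SUPPORTED_EXTENSIONS else unsupported).append(key)
    return supported, unsupported


if __name__ == "__main__":
    # Hypothetical keys; in practice you might list them with Boto3's list_objects_v2.
    ok, skipped = partition_keys(["docs/guide.md", "docs/Report.PDF", "images/logo.png"])
    print("supported:", ok)
    print("unsupported:", skipped)
```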

Configure the solution

Once you have completed the previous steps, you are ready to configure the solution:

- Clone the GitHub repository containing the solution files:

- Go to the solution directory:

- Create and activate the virtual environment:

The activation command differs by operating system; see the AWS CDK Workshop for how to activate the virtual environment in other environments.

- Once the virtual environment is activated, you can install the necessary dependencies:
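Because activation differs by operating system, you can ask the interpreter itself to confirm that the dependencies went into the virtual environment rather than your system Python. This sketch relies on standard-library behavior: inside an environment created with `venv`, `sys.prefix` differs from `sys.base_prefix`. `in_virtualenv` is a hypothetical helper name. Run the check with the `python` from your activated shell.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when the running interpreter belongs to a venv-created environment."""
    # Inside a venv, sys.prefix points at the environment directory,
    # while sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    if in_virtualenv():
        print(f"Virtual environment active: {sys.prefix}")
    else:
        print("No virtual environment detected; activate it before installing dependencies.")
```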

You can now prepare the code .zip file and synthesize the AWS CloudFormation template for this code.

- In your terminal, export the AWS credentials for a role or user in ACCOUNT_ID. The role must have all the permissions required to deploy with the AWS CDK:

export AWS_REGION="" # Same Region as ACCOUNT_REGION above

export AWS_ACCESS_KEY_ID="" # Set the access key for your role/user

export AWS_SECRET_ACCESS_KEY="" # Set the secret key for your role/user

- Create the dependency:

- If this is your first time deploying with the AWS CDK in this account and Region, run the following command to bootstrap the environment:

- To synthesize the CloudFormation template, run the following command:

- Since this deployment contains multiple stacks, you must deploy them in a specific sequence. Deploy the stacks in the following order:

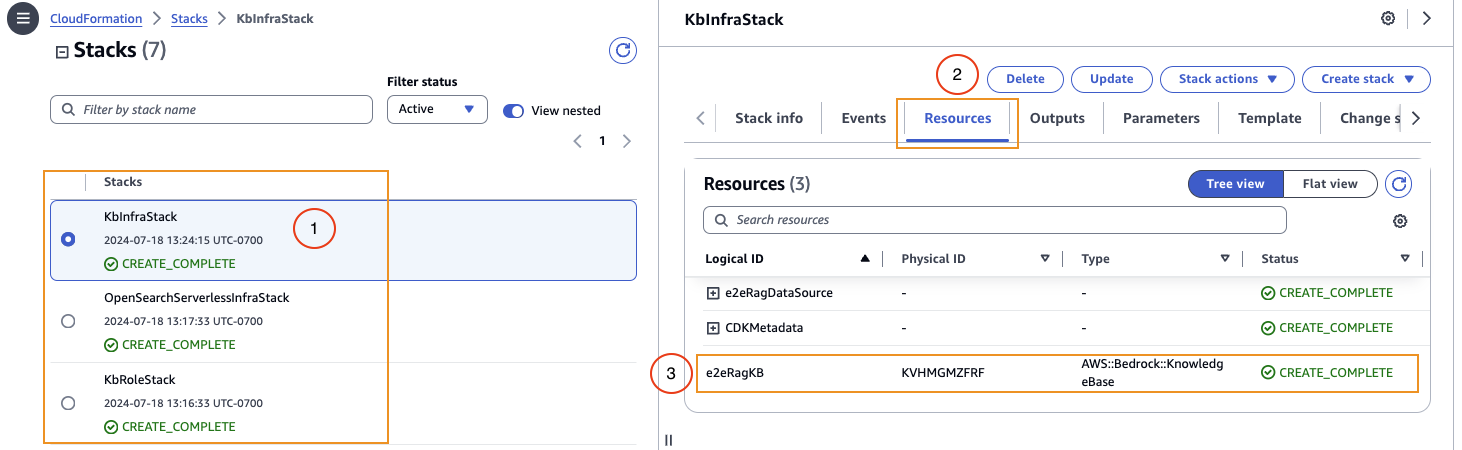

- Once the deployment is complete, you can view the deployed stacks on the AWS CloudFormation console, as shown below. You can also note the knowledge base details (for example, its name and ID) on the Resources tab.
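If you prefer not to use the console, you can list a stack's resources with the AWS SDK for Python (Boto3) `cloudformation` client's `describe_stack_resources` call. The following sketch filters that response by resource type. It assumes the knowledge base is declared as an `AWS::Bedrock::KnowledgeBase` resource in one of the deployed stacks. Compare the physical IDs it returns against the details on the Resources tab. Note that `describe_stack_resources` returns at most 100 resources; for larger stacks, use `list_stack_resources` instead.

```python
def find_resources(response: dict, resource_type: str) -> list[tuple[str, str]]:
    """Return (logical ID, physical ID) pairs for stack resources of the given type.

    `response` is the dictionary returned by Boto3's
    cloudformation.describe_stack_resources(StackName=...).
    """
    return [
        (r["LogicalResourceId"], r.get("PhysicalResourceId", ""))
        for r in response.get("StackResources", [])
        if r.get("ResourceType") == resource_type
    ]
```

For example, `find_resources(boto3.client("cloudformation").describe_stack_resources(StackName=stack_name), "AWS::Bedrock::KnowledgeBase")` lists the matching resources. Here, `stack_name` is whichever stack name your CloudFormation console shows.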

Test the solution

Now that you have deployed the solution using AWS CDK, you can test it with the following steps:

- On the Amazon Bedrock console, choose Knowledge bases in the navigation pane.

- Select the knowledge base you created.

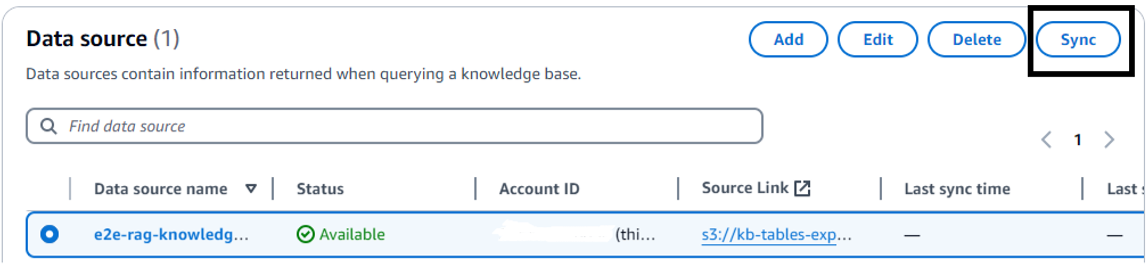

- Choose Sync to start the data ingestion job.

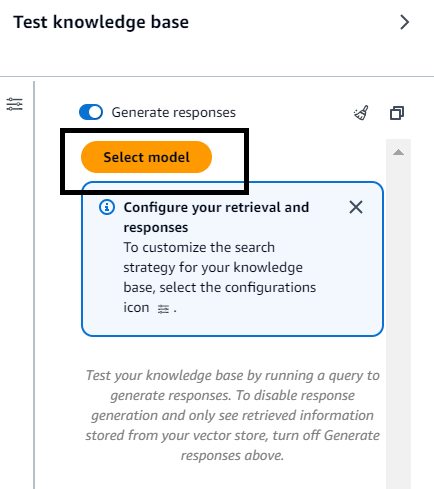

- After the data ingestion job is complete, select the FM you want to use for retrieval and generation. (You must have access to this FM in Amazon Bedrock before you can use it.)

- Start querying your data using natural language queries.
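You can also script the console's synchronization step with Boto3's `bedrock-agent` client, which provides `start_ingestion_job` and `get_ingestion_job`. The following sketch starts a job and polls until the job reaches a terminal status. The knowledge base ID and data source ID are values you would copy from the console. The 10-second polling interval is an arbitrary choice. `sync_data_source` is a hypothetical helper.

```python
import time

# Ingestion job statuses after which the job will not progress further.
TERMINAL_STATUSES = {"COMPLETE", "FAILED", "STOPPED"}


def sync_data_source(agent_client, kb_id: str, ds_id: str, poll_seconds: float = 10.0) -> str:
    """Start an ingestion job for one data source and wait for a terminal status.

    `agent_client` is expected to behave like boto3.client("bedrock-agent").
    """
    job = agent_client.start_ingestion_job(knowledgeBaseId=kb_id, dataSourceId=ds_id)["ingestionJob"]
    while job["status"] not in TERMINAL_STATUSES:
        time.sleep(poll_seconds)
        job = agent_client.get_ingestion_job(
            knowledgeBaseId=kb_id,
            dataSourceId=ds_id,
            ingestionJobId=job["ingestionJobId"],
        )["ingestionJob"]
    return job["status"]
```

For example, `sync_data_source(boto3.client("bedrock-agent"), kb_id, data_source_id)` returns `"COMPLETE"` when ingestion succeeds. It returns `"FAILED"` or `"STOPPED"` otherwise.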

That’s it! You can now interact with your documents using the RAG workflow powered by Amazon Bedrock.
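Besides the console, you can query the knowledge base from code through the `RetrieveAndGenerate` API of Boto3's `bedrock-agent-runtime` client. The following sketch builds the request and calls the API through an injected client. It then extracts the answer and the S3 URIs of the cited sources. `build_rag_request` and `ask` are hypothetical helper names. The knowledge base ID would be the one you noted from CloudFormation. The model ARN would be that of an FM you have access to.

```python
def build_rag_request(question: str, kb_id: str, model_arn: str) -> dict:
    """Build keyword arguments for the RetrieveAndGenerate API."""
    return {
        "input": {"text": question},
        "retrieveAndGenerateConfiguration": {
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": kb_id,
                "modelArn": model_arn,
            },
        },
    }


def ask(runtime_client, question: str, kb_id: str, model_arn: str) -> tuple[str, list[str]]:
    """Return the generated answer and the S3 URIs of the retrieved sources it cites.

    `runtime_client` is expected to behave like boto3.client("bedrock-agent-runtime").
    """
    response = runtime_client.retrieve_and_generate(**build_rag_request(question, kb_id, model_arn))
    sources = [
        ref["location"]["s3Location"]["uri"]
        for citation in response.get("citations", [])
        for ref in citation.get("retrievedReferences", [])
        if "s3Location" in ref.get("location", {})
    ]
    return response["output"]["text"], sources
```

For example, `ask(boto3.client("bedrock-agent-runtime"), "What topics do my documents cover?", kb_id, model_arn)` returns the answer text together with the source documents, so you can check what the answer was grounded in.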

Clean up

To avoid incurring future charges to your AWS account, complete the following steps:

- Delete all files inside the provisioned S3 bucket.

- Run the following command in the terminal to delete the CloudFormation stack provisioned using AWS CDK:
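You can also empty the bucket with Boto3 before deleting the stacks. S3 `DeleteObjects` accepts up to 1,000 keys per request. This sketch therefore lists the bucket with the `list_objects_v2` paginator and deletes keys in batches of that size. It assumes the bucket is not versioned. In a versioned bucket, this only adds delete markers, and you would also need to remove the object versions. `batches` and `empty_bucket` are hypothetical helper names.

```python
from collections.abc import Iterable, Iterator

# S3 DeleteObjects accepts at most 1,000 keys per request.
MAX_KEYS_PER_DELETE = 1000


def batches(keys: Iterable[str], size: int = MAX_KEYS_PER_DELETE) -> Iterator[list[str]]:
    """Yield consecutive lists of at most `size` keys."""
    batch: list[str] = []
    for key in keys:
        batch.append(key)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch


def empty_bucket(s3_client, bucket: str) -> int:
    """Delete every current object in `bucket` and return the number of keys submitted.

    `s3_client` is expected to behave like boto3.client("s3").
    """
    paginator = s3_client.get_paginator("list_objects_v2")
    # Collect all keys first so deletions don't interleave with listing.
    keys = [obj["Key"] for page in paginator.paginate(Bucket=bucket) for obj in page.get("Contents", [])]
    for batch in batches(keys):
        # Quiet mode reports only the keys that failed to delete.
        response = s3_client.delete_objects(
            Bucket=bucket,
            Delete={"Objects": [{"Key": k} for k in batch], "Quiet": True},
        )
        for error in response.get("Errors", []):
            print(f"Failed to delete {error['Key']}: {error['Message']}")
    return len(keys)
```

For example, `empty_bucket(boto3.client("s3"), bucket_name)` empties the bucket that holds your documents.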

Conclusion

In this post, we demonstrated how to quickly deploy an end-to-end RAG solution using Knowledge Bases for Amazon Bedrock and the AWS CDK.

This solution simplifies setting up the necessary infrastructure, including an IAM role, an OpenSearch Serverless collection and index, and a knowledge base with an associated data source. The automated deployment process enabled by the AWS CDK reduces the complexity and potential errors of manually configuring and deploying the components a RAG solution requires. By using the FMs offered by Amazon Bedrock, you can combine your document data with advanced NLP capabilities to retrieve relevant information and generate high-quality responses to natural language queries.

Beyond simplifying deployment, the solution provides a scalable and efficient way to use RAG capabilities in Q&A systems. Because the infrastructure can be modified programmatically, you can adapt the solution to the specific needs of your organization. This makes it useful for a wide range of applications that require retrieving and generating accurate, contextualized information.

About the authors

Sandeep Singh is a Senior Data Scientist for Generative AI at Amazon Web Services, helping companies innovate with generative AI. He specializes in generative AI, machine learning, and system design. He has successfully delivered cutting-edge AI and ML-powered solutions to solve complex business problems for various industries, optimizing efficiency and scalability.

Manoj Krishna Mohan is a Machine Learning Engineer at Amazon. He specializes in building AI and machine learning solutions using Amazon SageMaker. He is passionate about developing turnkey solutions for customers. Manoj holds a Master of Science in Computer Science with a concentration in Data Science from the University of North Carolina, Charlotte.

Mani Khanuja is a technology leader and generative AI specialist, author of Applied Machine Learning and High-Performance Computing on AWS, and a board member of the Women in Manufacturing Education Foundation. She leads machine learning projects across a variety of domains, including computer vision, natural language processing, and generative AI. She speaks at internal and external conferences, including AWS re:Invent, Women in Manufacturing West, YouTube webinars, and GHC 23. In her free time, she enjoys long runs on the beach.
