Organizations strive to implement efficient, scalable, cost-effective, and automated customer support solutions without compromising the customer experience. Generative artificial intelligence (AI)-powered chatbots play a crucial role in delivering human-like interactions by providing answers from a knowledge base without the involvement of live agents. These chatbots can efficiently handle generic inquiries, freeing up live agents to focus on more complex tasks.

Amazon Lex provides advanced conversational interfaces using voice and text channels. It features natural language understanding capabilities to recognize user intent more accurately and fulfill it faster.

Amazon Bedrock simplifies the process of developing and scaling generative AI applications powered by large language models (LLMs) and other foundation models (FMs). It offers access to a wide range of FMs from leading providers such as Anthropic, AI21 Labs, Cohere, and Stability AI, as well as Amazon's own Amazon Titan models. Additionally, Knowledge Bases for Amazon Bedrock enables you to develop applications that use Retrieval Augmented Generation (RAG), an approach in which retrieving relevant information from data sources improves the model's ability to generate contextually appropriate and informed responses.

The generative AI capability of QnAIntent in Amazon Lex lets you securely connect FMs to business data for RAG. QnAIntent provides an interface to use business data and FMs on Amazon Bedrock to generate relevant, accurate, and contextual responses. You can use QnAIntent with new or existing Amazon Lex bots to automate frequently asked questions over text and voice channels, such as Amazon Connect.

With this capability, you no longer need to create intent variations, sample utterances, slots, and messages to predict and handle a wide range of frequently asked questions. You can simply connect QnAIntent to your company’s knowledge sources and the bot can immediately handle the questions using the allowed content.

In this post, we demonstrate how you can build chatbots with QnAIntent that connect to a knowledge base in Amazon Bedrock (powered by Amazon OpenSearch Serverless as a vector database) and provide rich, self-service conversational experiences for your customers.

Solution overview

The solution uses Amazon Lex, Amazon Simple Storage Service (Amazon S3), and Amazon Bedrock in the following steps:

- Users interact with the chatbot through a prebuilt Amazon Lex web UI.

- Amazon Lex processes each user request to determine the user's intent through a process called intent recognition.

- Amazon Lex provides the built-in generative AI feature QnAIntent, which can connect directly to a knowledge base to fulfill user requests.

- Knowledge Bases for Amazon Bedrock uses the Amazon Titan embeddings model to convert the user's query into a vector, then queries the knowledge base to find chunks that are semantically similar to the query. The user's prompt is then augmented with the results returned from the knowledge base as additional context and sent to the LLM to generate a response.

- The generated response is returned through QnAIntent and sent to the user in the chat application through Amazon Lex.
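To make the retrieval and augmentation step more concrete, the following is a minimal, self-contained sketch of the idea in plain Python. It is not how Knowledge Bases for Amazon Bedrock is implemented: the three-dimensional vectors are hand-made stand-ins for real Amazon Titan embeddings, and the chunk texts, function names, and prompt wording are all hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def retrieve(query_vector, indexed_chunks, top_k=2):
    """Return the text of the top_k chunks most similar to the query vector."""
    ranked = sorted(
        indexed_chunks,
        key=lambda chunk: cosine_similarity(query_vector, chunk["vector"]),
        reverse=True,
    )
    return [chunk["text"] for chunk in ranked[:top_k]]


def augment_prompt(question, context_chunks):
    """Prepend the retrieved chunks to the user's question as context."""
    context = "\n".join(f"- {chunk}" for chunk in context_chunks)
    return (
        "Use only the context below to answer.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


# Hypothetical index: real embeddings have far more dimensions.
INDEXED_CHUNKS = [
    {"text": "Chunk about revenue growth.", "vector": [0.9, 0.1, 0.0]},
    {"text": "Chunk about logistics costs.", "vector": [0.1, 0.9, 0.0]},
    {"text": "Chunk about hiring plans.", "vector": [0.0, 0.1, 0.9]},
]

# Stand-in for the embedding of the user's question.
query_vector = [0.8, 0.2, 0.0]

top_chunks = retrieve(query_vector, INDEXED_CHUNKS, top_k=1)
prompt = augment_prompt("How did revenue change?", top_chunks)
print(prompt)
```

In the actual solution, the embedding, vector search, and prompt augmentation are handled by the managed services, so none of this code is needed to follow the steps in this post.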

The following diagram illustrates the solution architecture and workflow.

In the following sections, we discuss in more detail the key components of the solution and the high-level steps to implement the solution:

- Create a knowledge base in Amazon Bedrock backed by OpenSearch Serverless.

- Create an Amazon Lex bot.

- Create a new generative AI-powered intent in Amazon Lex using the built-in QnAIntent and point it to the knowledge base.

- Deploy the sample Amazon Lex web UI available in the GitHub repository. Use the provided AWS CloudFormation template in your preferred AWS Region and configure the bot.

Prerequisites

To implement this solution, you need the following:

- An AWS account with privileges to create AWS Identity and Access Management (IAM) roles and policies. For more information, see Access Management Overview: Permissions and Policies.

- Familiarity with AWS services such as Amazon S3, Amazon Lex, Amazon OpenSearch Service, and Amazon Bedrock.

- Access enabled for the Amazon Titan Embeddings G1 – Text model and the Anthropic Claude 3 Haiku model on Amazon Bedrock. For instructions, see Accessing the Model.

- A data source on Amazon S3. For this post, we use Amazon shareholder documents (Amazon Shareholder Letters – 2023 and 2022) as the data source to hydrate the knowledge base.

Create a knowledge base

To create a new knowledge base in Amazon Bedrock, complete the following steps. For more information, see Create a Knowledge Base.

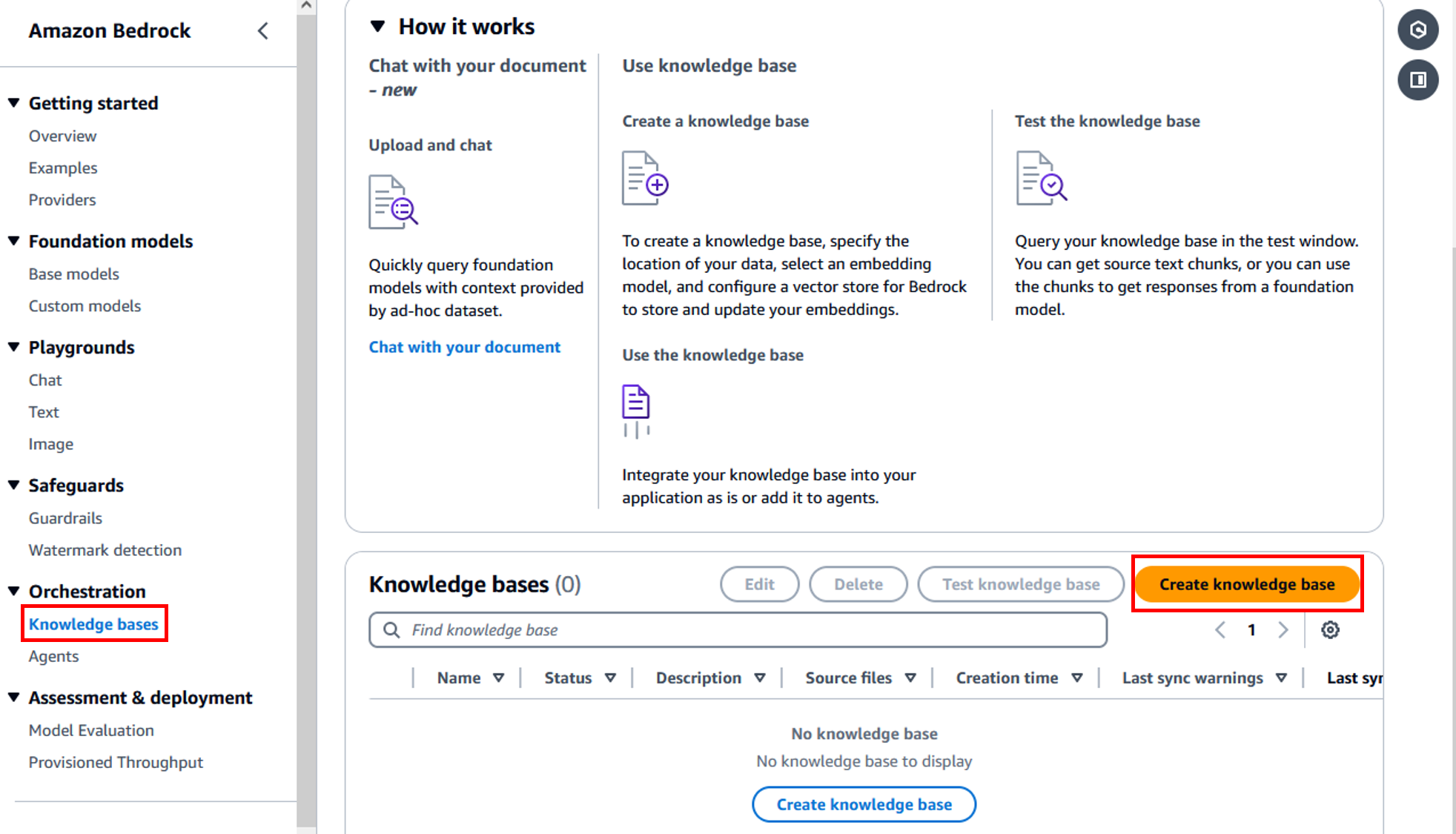

- On the Amazon Bedrock console, choose Knowledge bases in the navigation pane.

- Choose Create a knowledge base.

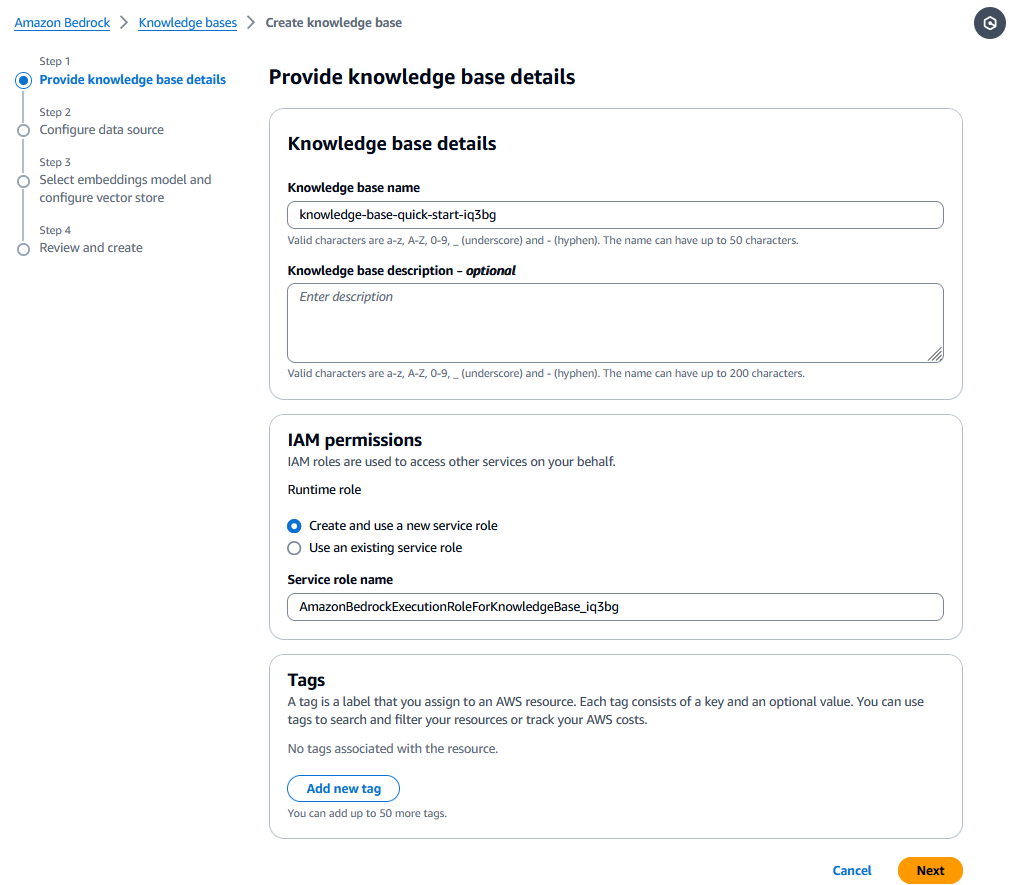

- On the Provide knowledge base details page, enter a knowledge base name, IAM permissions, and tags.

- Choose Next.

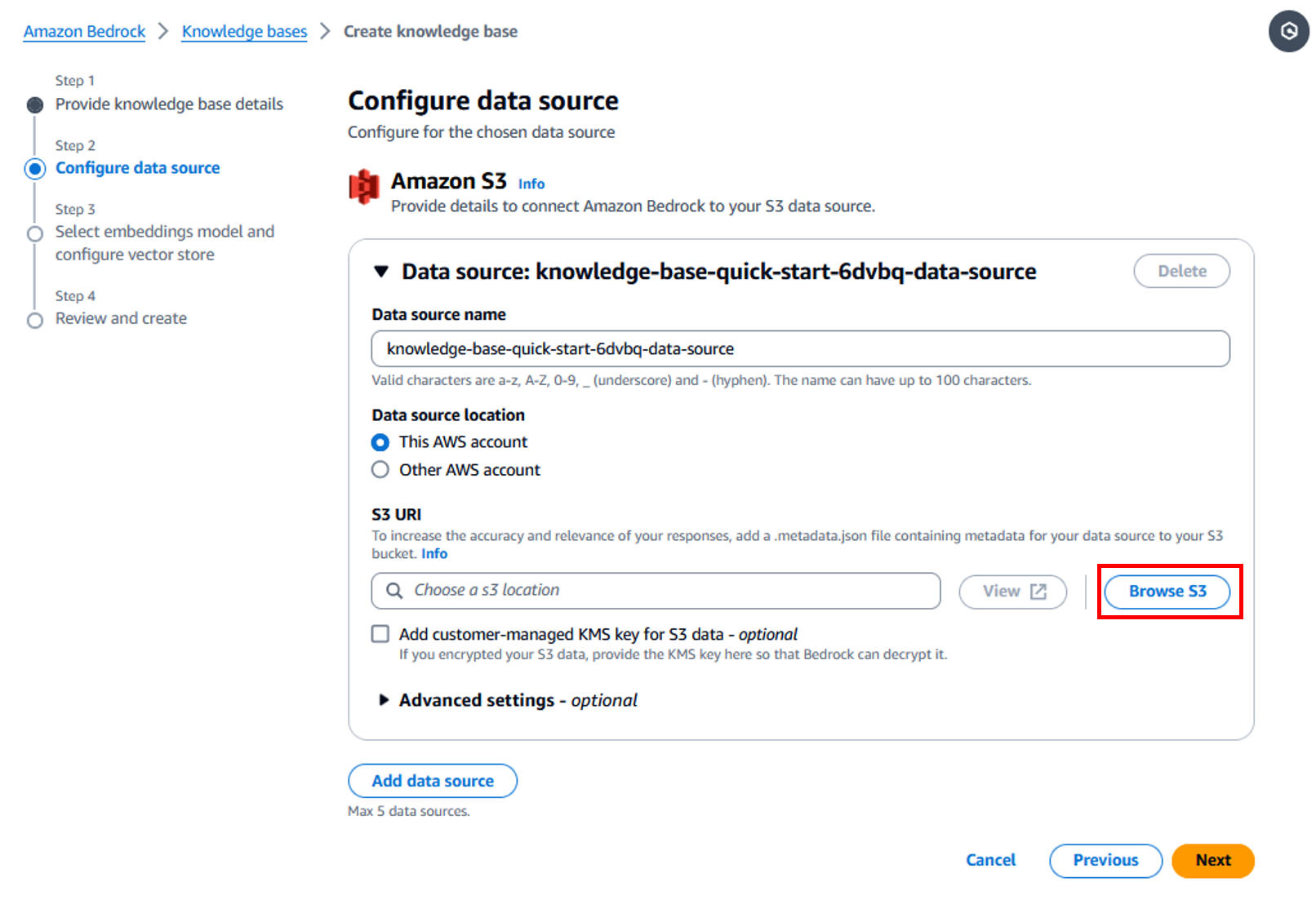

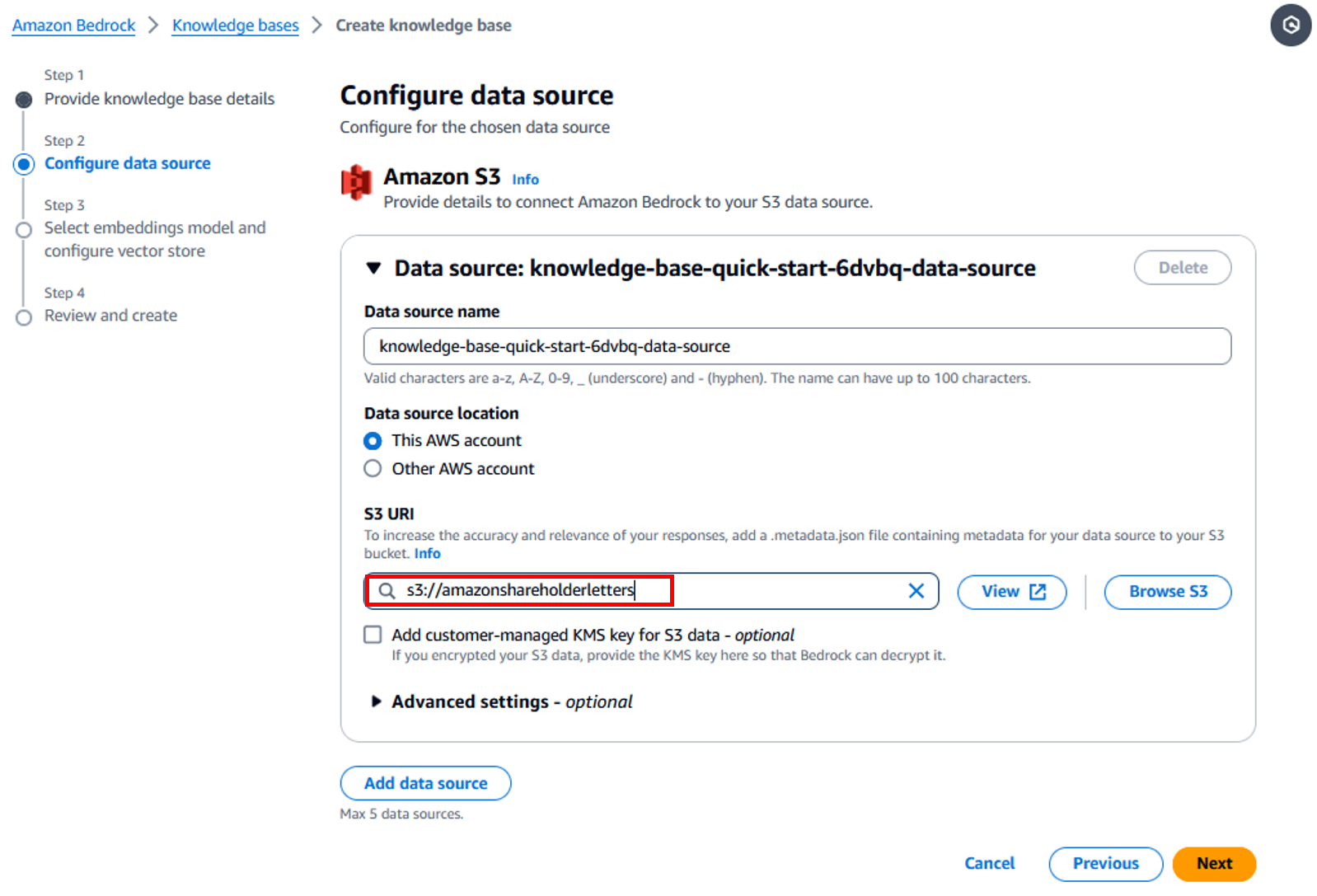

- For Data source name, Amazon Bedrock prepopulates an auto-generated data source name; you can change it to suit your requirements.

- Keep the data source location as the same AWS account and choose Explore S3.

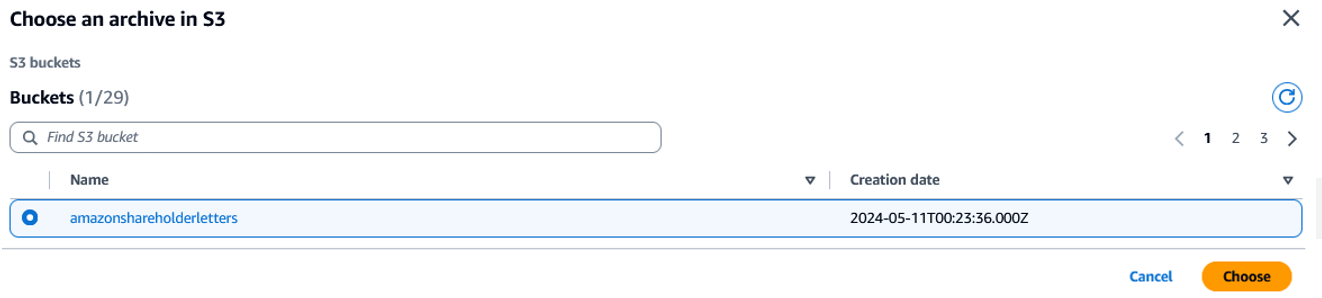

- Select the S3 bucket where you uploaded the Amazon shareholder documents and choose Choose.

This populates the S3 URI, as shown in the following screenshot.

- Choose Next.

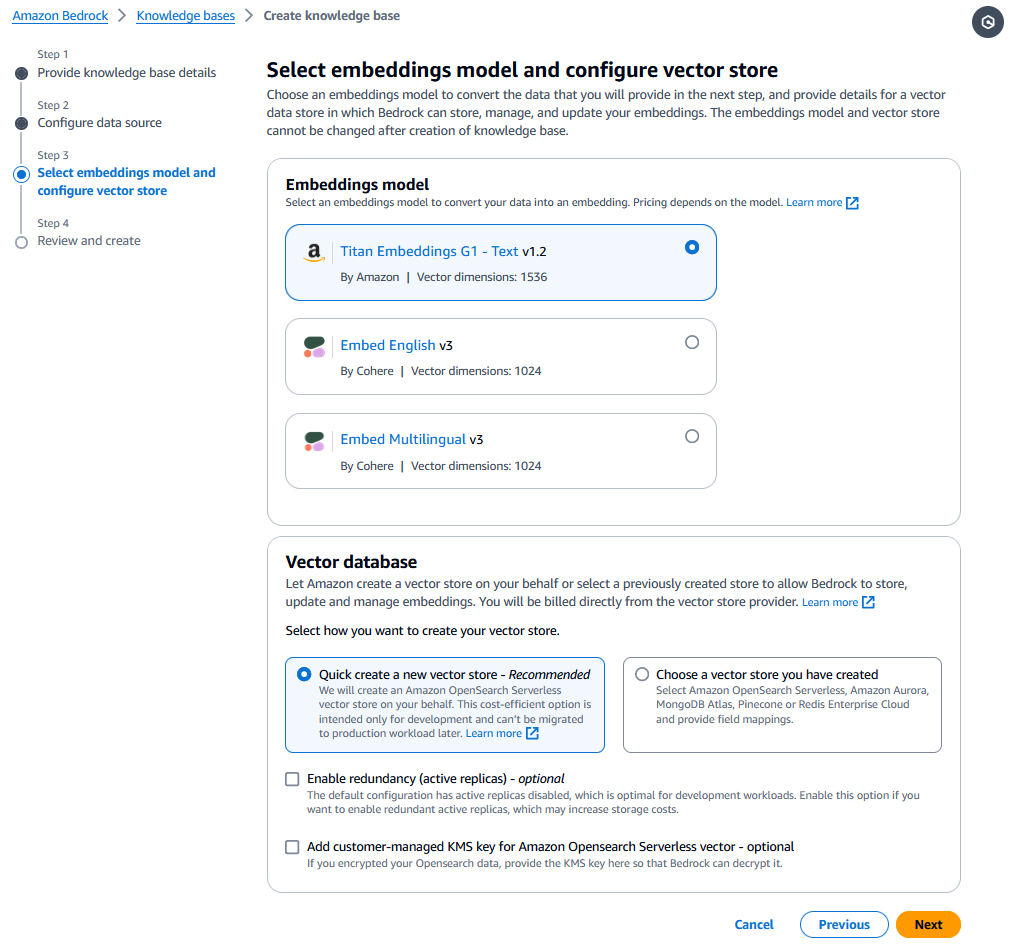

- Select the embeddings model to vectorize the documents. For this post, we select Titan Embeddings G1 – Text v1.2.

- Select Quick create a new vector store to create a default vector store with OpenSearch Serverless.

- Choose Next.

- Review your settings and create your knowledge base.

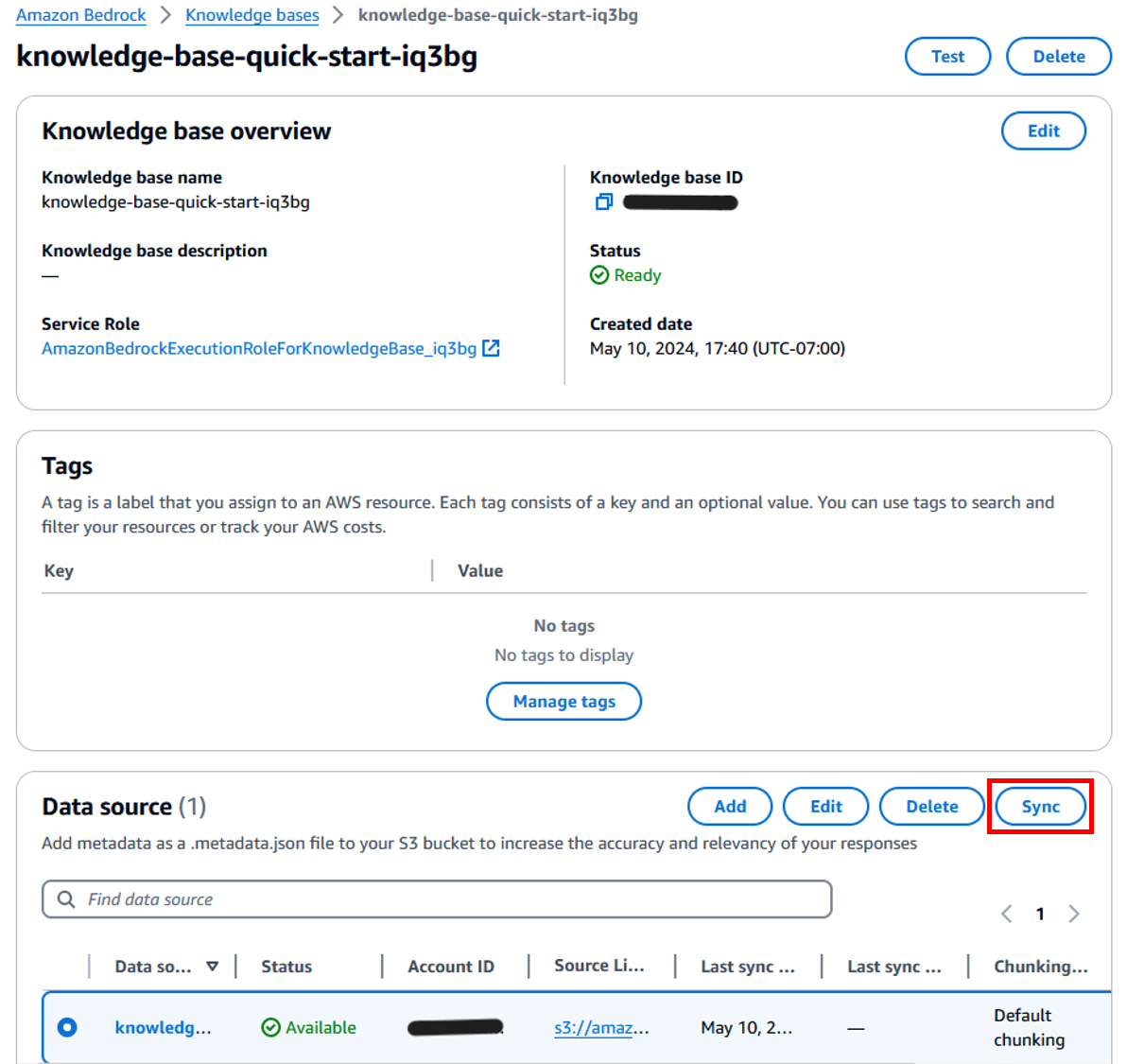

After your knowledge base is successfully created, you should see a knowledge base ID, which you need when creating your Amazon Lex bot.

- Choose Sync to index the documents.
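If you prefer to trigger the sync programmatically, the following minimal sketch shows one way to do it with the AWS SDK for Python (Boto3), assuming the `bedrock-agent` client and its `start_ingestion_job` operation. The helper name `sync_knowledge_base` is our own, and the IDs in the usage comment are placeholders you would replace with the values from your account.

```python
def sync_knowledge_base(bedrock_agent_client, knowledge_base_id, data_source_id):
    """Start an ingestion job that indexes the data source into the knowledge base.

    bedrock_agent_client is expected to behave like boto3.client("bedrock-agent").
    Returns the ingestion job ID and its initial status.
    """
    response = bedrock_agent_client.start_ingestion_job(
        knowledgeBaseId=knowledge_base_id,
        dataSourceId=data_source_id,
    )
    job = response["ingestionJob"]
    return job["ingestionJobId"], job["status"]


# Example usage (requires the boto3 package and AWS credentials):
#   import boto3
#   client = boto3.client("bedrock-agent")
#   print(sync_knowledge_base(client, "<knowledge-base-id>", "<data-source-id>"))
```

The client is passed in as an argument so the helper can be exercised with a stub before you run it against a real account.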

Create an Amazon Lex bot

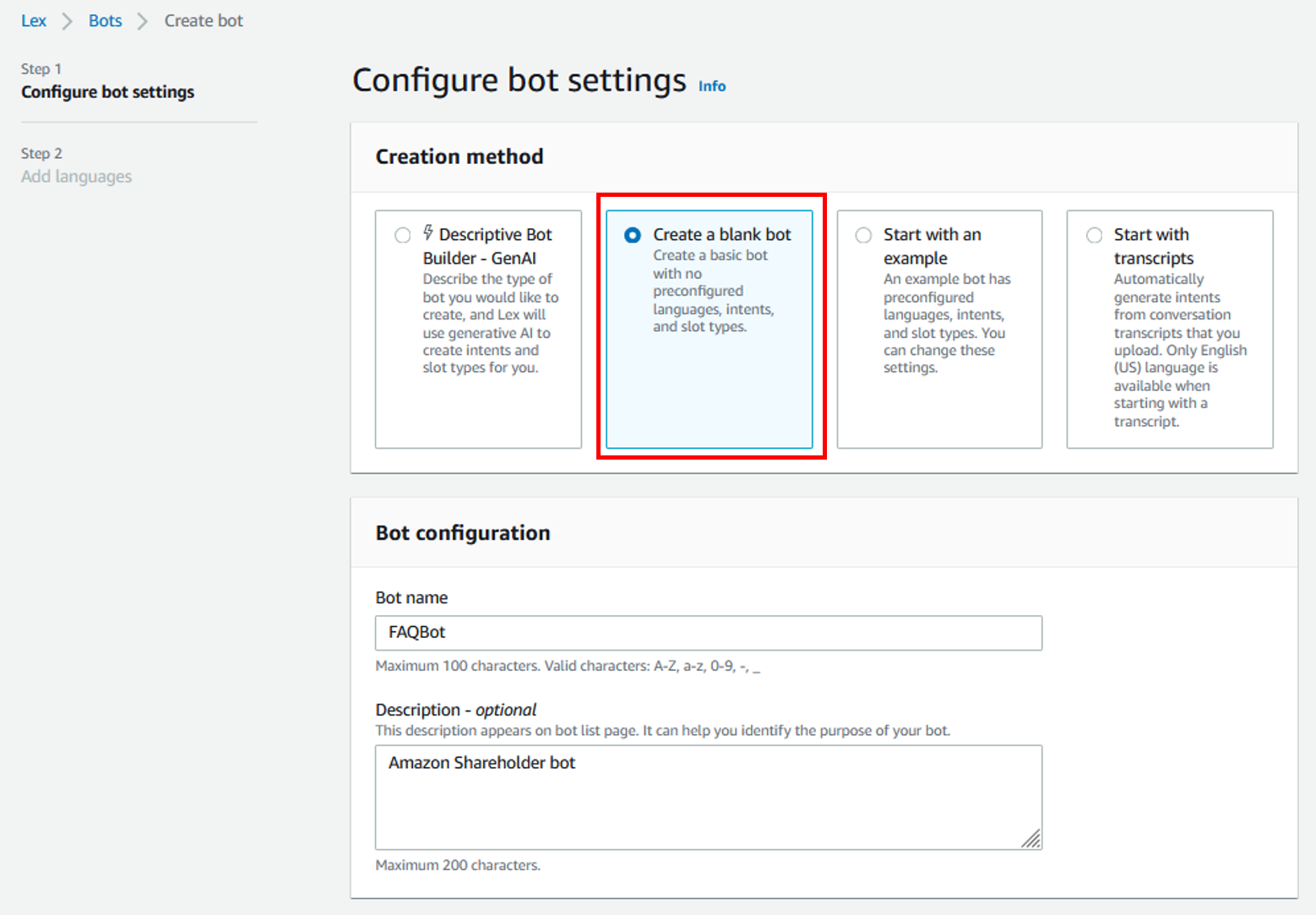

Complete the following steps to create your bot:

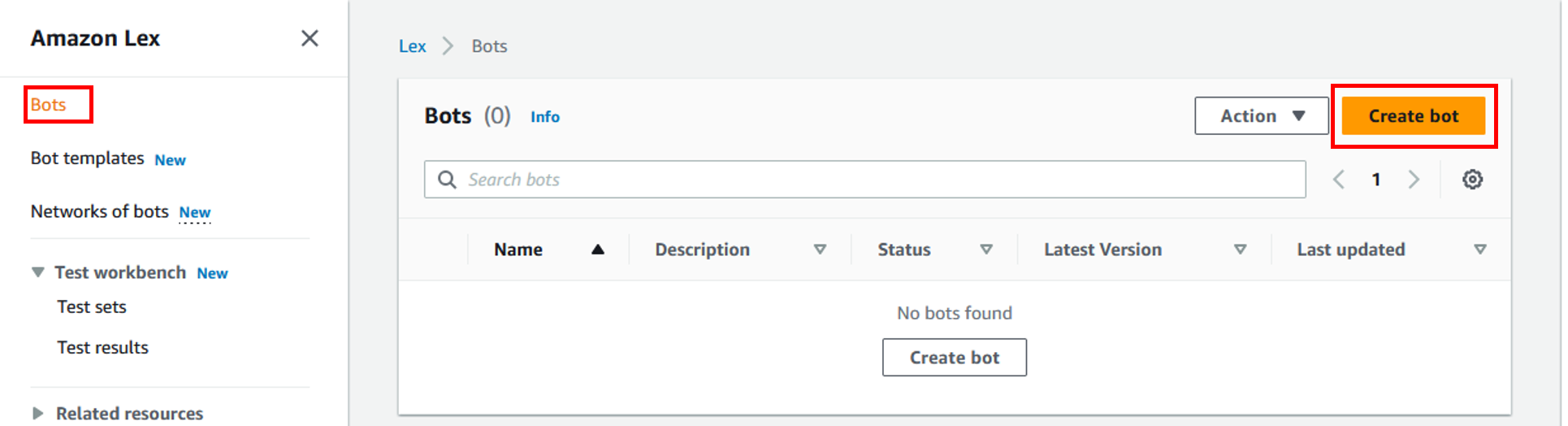

- On the Amazon Lex console, choose Bots in the navigation pane.

- Choose Create bot.

- For Creation method, select Create a blank bot.

- For Bot name, enter a name (for example, FAQBot).

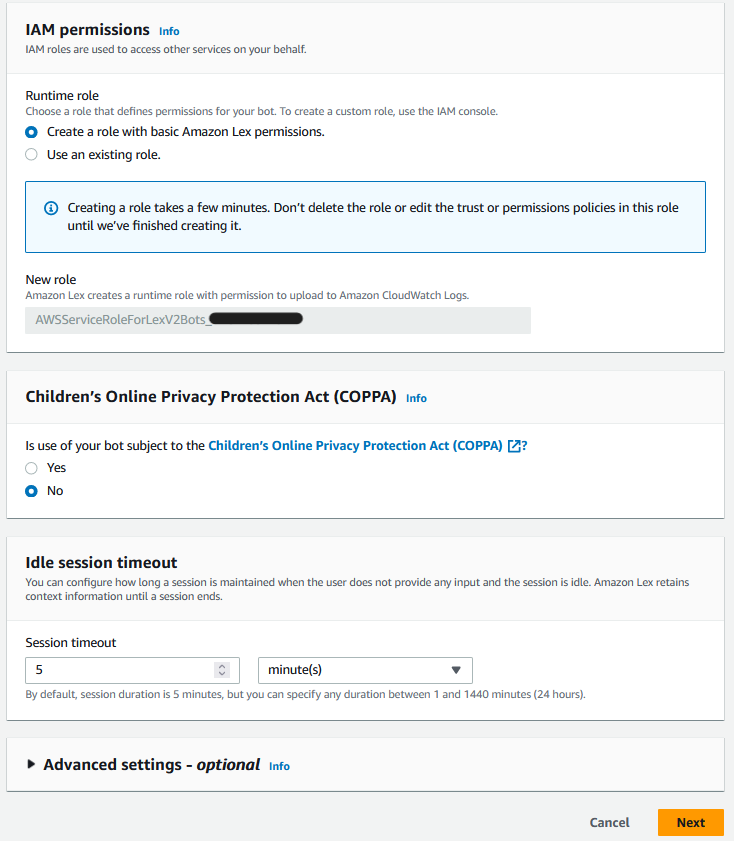

- For Runtime role, select Create a new IAM role with basic Amazon Lex permissions to access other services on your behalf.

- Configure the remaining settings as per your requirements and choose Next.

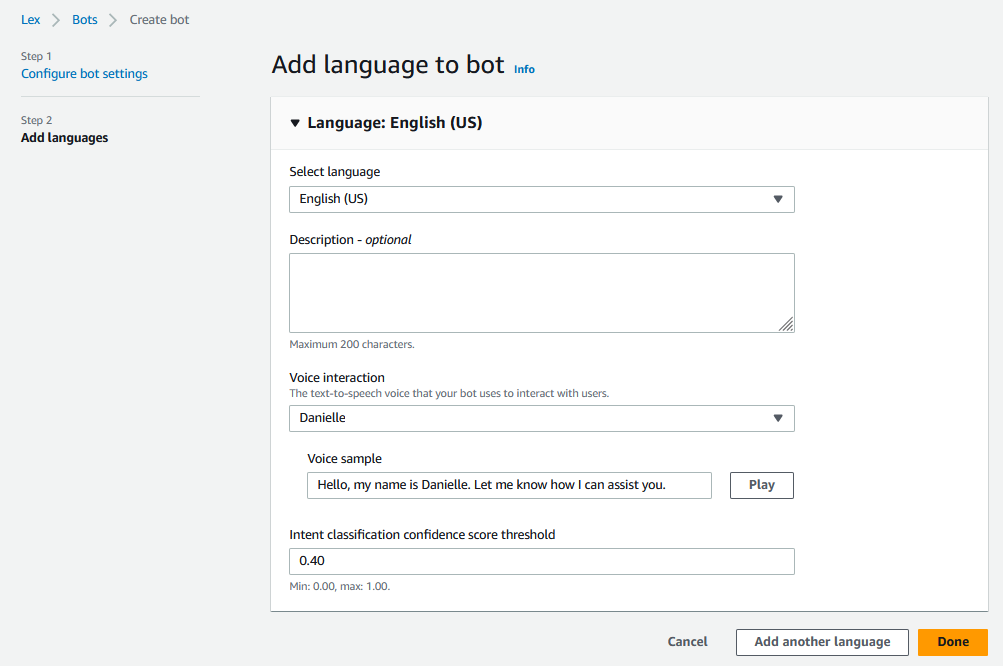

- On the Add language to bot page, you can choose from different supported languages.

For this post, we choose English (US).

- Choose Done.

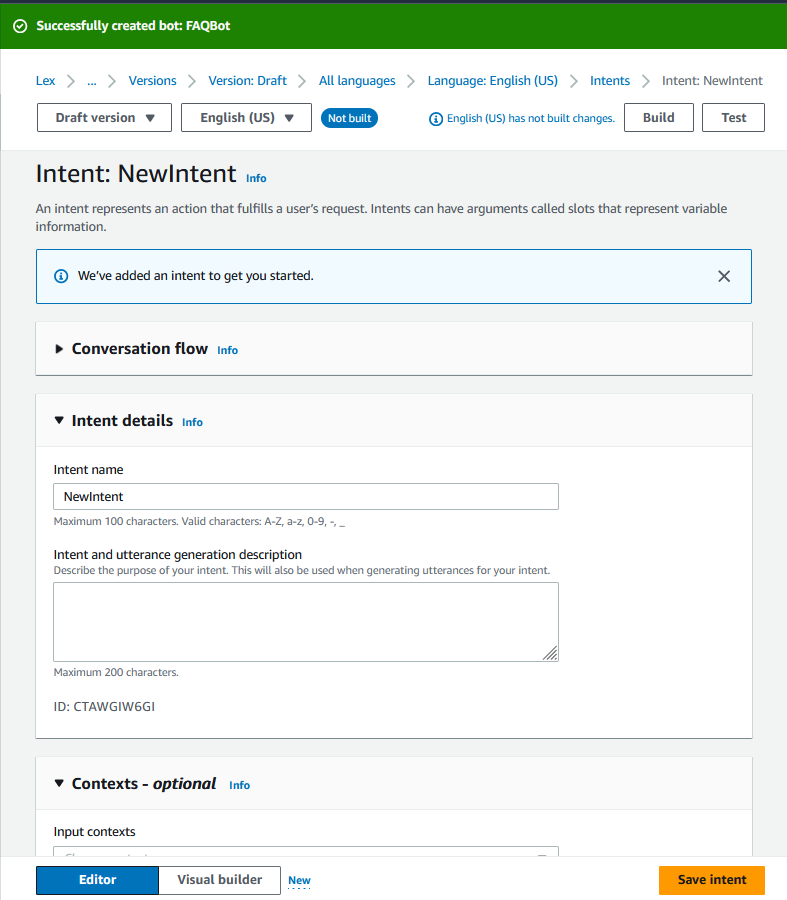

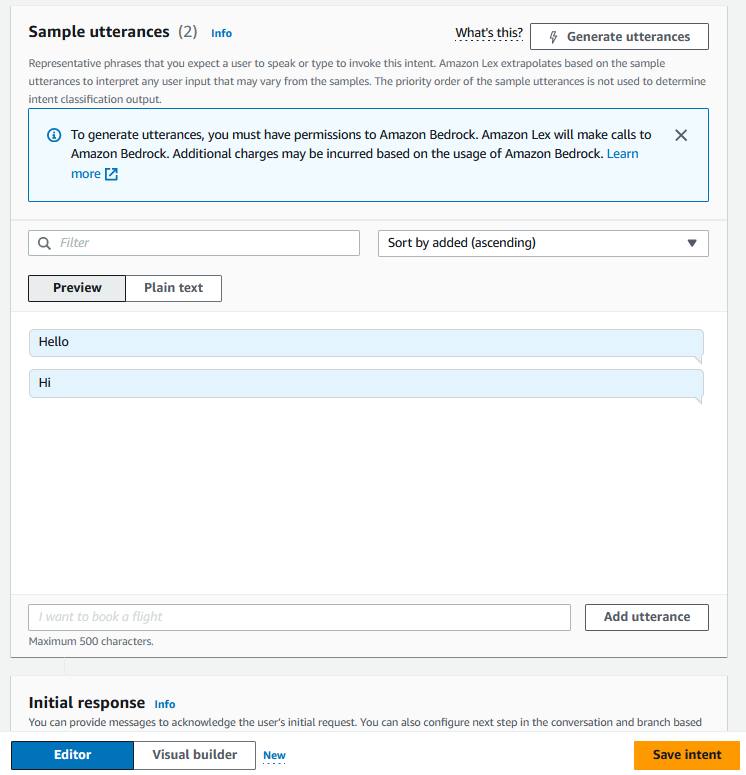

Once the bot is successfully created, you will be redirected to create a new intent.

- Add utterances for the new intent and choose Save intent.
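The console steps above can also be approximated with Boto3. The following sketch only builds the request payloads for the `create_bot` and `create_bot_locale` operations of the `lexv2-models` client; the helper names are hypothetical, and the session timeout and confidence threshold are illustrative values rather than settings taken from this post. Unlike the console flow, the API does not create the runtime role for you, so the sketch assumes you already have a role ARN.

```python
def build_create_bot_request(bot_name, role_arn, idle_session_ttl_seconds=300):
    """Build keyword arguments for lexv2-models create_bot."""
    return {
        "botName": bot_name,
        "roleArn": role_arn,  # assumption: an existing IAM role for Amazon Lex
        "dataPrivacy": {"childDirected": False},
        "idleSessionTTLInSeconds": idle_session_ttl_seconds,
    }


def build_create_locale_request(bot_id, locale_id="en_US", confidence_threshold=0.4):
    """Build keyword arguments for lexv2-models create_bot_locale on the draft version."""
    return {
        "botId": bot_id,
        "botVersion": "DRAFT",
        "localeId": locale_id,
        "nluIntentConfidenceThreshold": confidence_threshold,
    }


# Example usage (requires the boto3 package and AWS credentials):
#   import boto3
#   lex = boto3.client("lexv2-models")
#   bot = lex.create_bot(**build_create_bot_request("FAQBot", "<role-arn>"))
#   lex.get_waiter("bot_available").wait(botId=bot["botId"])
#   lex.create_bot_locale(**build_create_locale_request(bot["botId"]))
```

Bot creation is asynchronous, which is why the usage comment waits for the bot to become available before adding the English (US) locale.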

Add QnAIntent to your intent

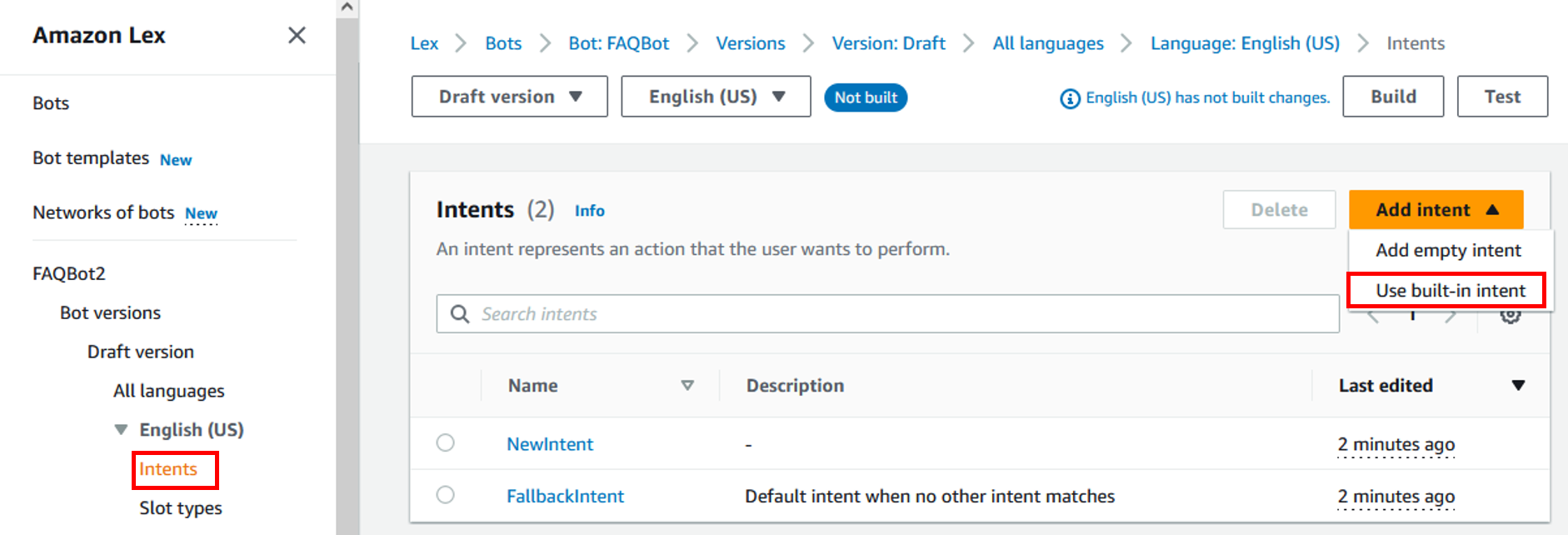

Complete the following steps to add QnAIntent:

- On the Amazon Lex console, navigate to the intent you created.

- On the Add intent dropdown menu, choose Use built-in intent.

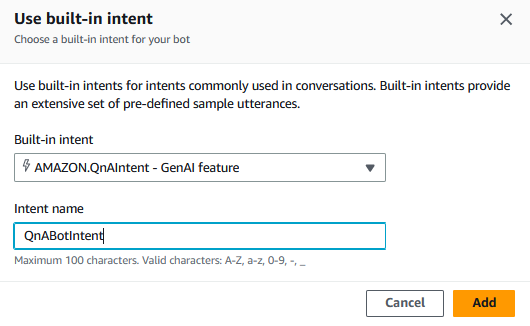

- For Built-in intent, choose AMAZON.QnAIntent - GenAI feature.

- For Intent name, enter a name (for example, QnABotIntent).

- Choose Add.

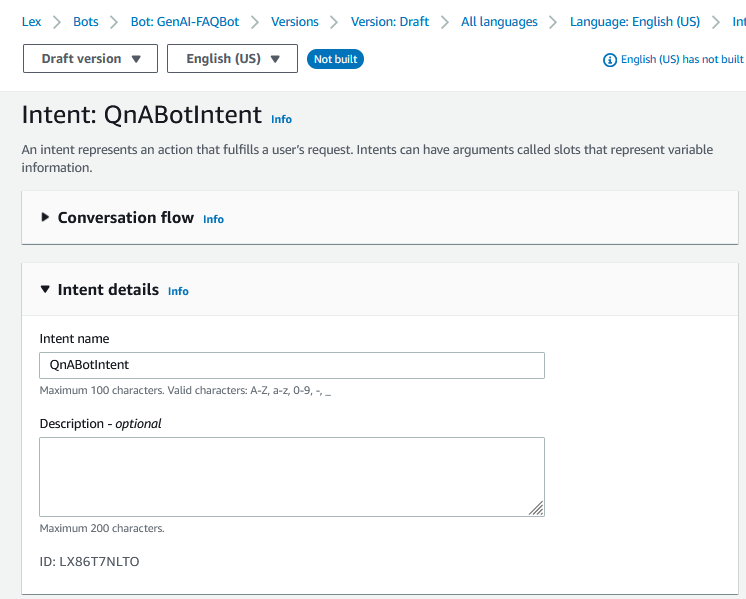

After adding QnAIntent, you will be redirected to configure the knowledge base.

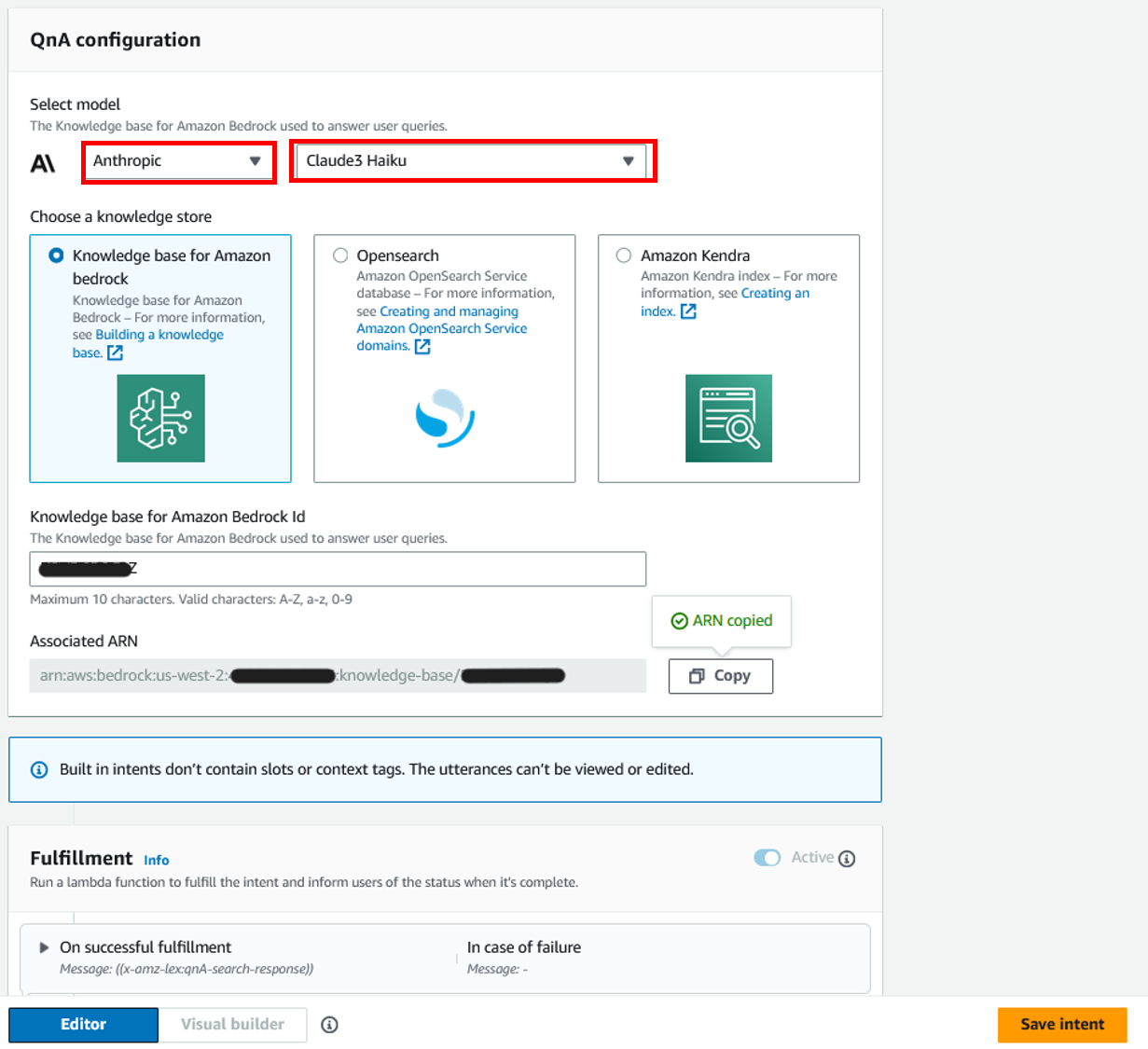

- For Select model, choose Anthropic and Claude 3 Haiku.

- For Choose a knowledge store, select Knowledge base for Amazon Bedrock and enter your knowledge base ID.

- Choose Save intent.

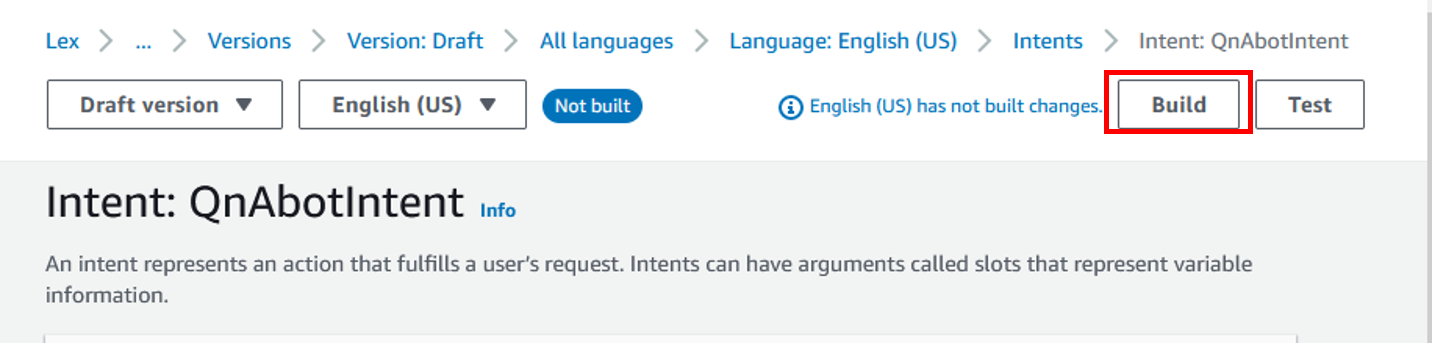

- After saving the intent, select Build to build the bot.

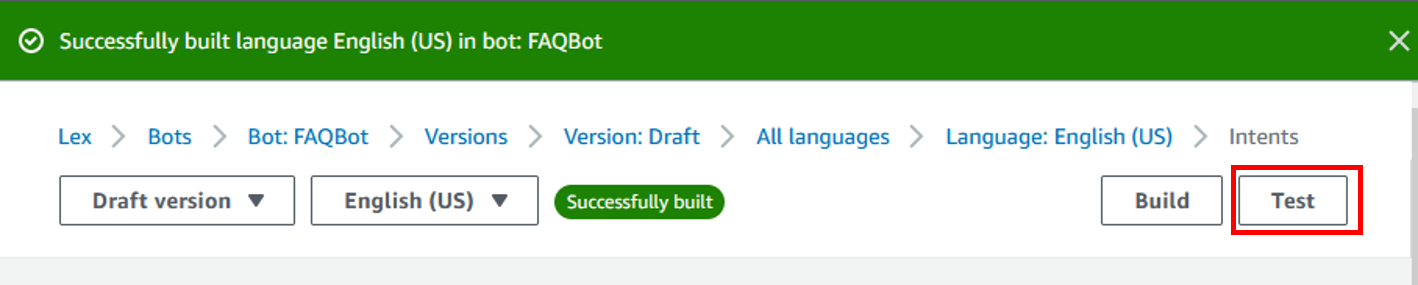

You should see a Successfully built message when the build is complete.
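For readers who script their bots, the following sketch shows how the same QnAIntent configuration might be expressed as a request for the `create_intent` operation of the `lexv2-models` client. The field names reflect our understanding of the Lex V2 `qnAIntentConfiguration` structure and should be checked against the current API reference. Note that the API expects ARNs, whereas the console asks for the knowledge base ID, so the sketch includes a small hypothetical helper to build the knowledge base ARN.

```python
def knowledge_base_arn(region, account_id, knowledge_base_id):
    """Build the ARN of an Amazon Bedrock knowledge base from its ID."""
    return f"arn:aws:bedrock:{region}:{account_id}:knowledge-base/{knowledge_base_id}"


def build_qna_intent_request(bot_id, kb_arn, model_arn,
                             intent_name="QnABotIntent", locale_id="en_US"):
    """Build keyword arguments for lexv2-models create_intent using AMAZON.QnAIntent."""
    return {
        "botId": bot_id,
        "botVersion": "DRAFT",
        "localeId": locale_id,
        "intentName": intent_name,
        "parentIntentSignature": "AMAZON.QnAIntent",
        "qnAIntentConfiguration": {
            # Where the answers come from: the Bedrock knowledge base.
            "dataSourceConfiguration": {
                "bedrockKnowledgeStoreConfiguration": {
                    "bedrockKnowledgeBaseArn": kb_arn,
                }
            },
            # Which FM generates the response.
            "bedrockModelConfiguration": {"modelArn": model_arn},
        },
    }


# Example usage (requires the boto3 package and AWS credentials):
#   import boto3
#   lex = boto3.client("lexv2-models")
#   request = build_qna_intent_request(
#       "<bot-id>",
#       knowledge_base_arn("<region>", "<account-id>", "<knowledge-base-id>"),
#       "arn:aws:bedrock:<region>::foundation-model/anthropic.claude-3-haiku-20240307-v1:0",
#   )
#   lex.create_intent(**request)
#   lex.build_bot_locale(botId="<bot-id>", botVersion="DRAFT", localeId="en_US")
```

The final `build_bot_locale` call in the usage comment corresponds to choosing Build on the console.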


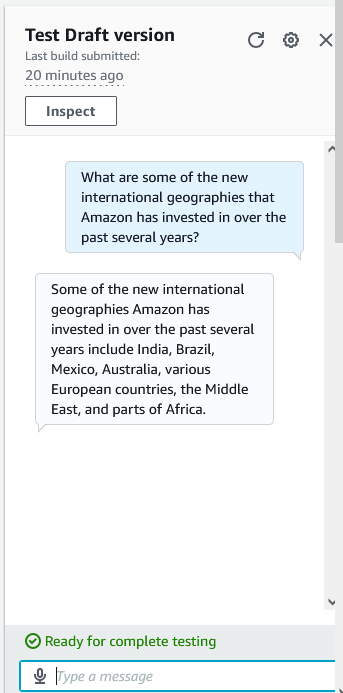

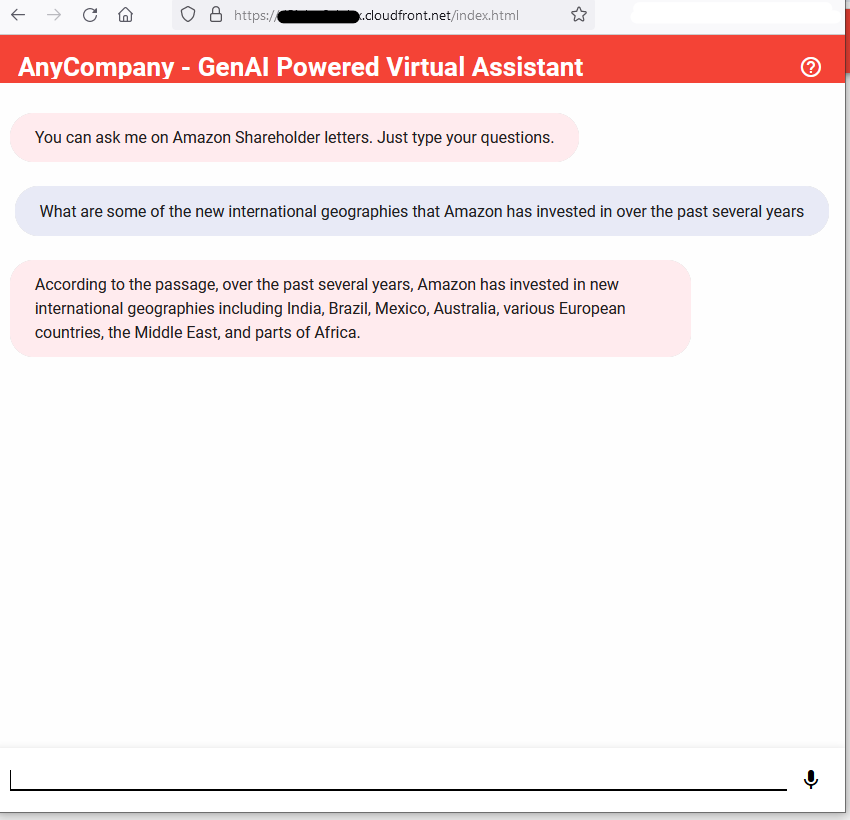

You can now test the bot on the Amazon Lex console.

- Choose Test to launch a draft version of your bot in a chat window within the console.

- Enter questions to get answers.
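You can also send a test question outside the console. The following is a minimal sketch that assumes the `recognize_text` operation of the Boto3 `lexv2-runtime` client; the helper name `ask_bot` is our own. For the draft version of a bot, the built-in test alias ID is typically `TSTALIASID`, but confirm the alias ID on the console for your bot.

```python
import uuid


def ask_bot(lex_runtime_client, bot_id, bot_alias_id, question,
            locale_id="en_US", session_id=None):
    """Send one text question to the bot and return the text of its replies.

    lex_runtime_client is expected to behave like boto3.client("lexv2-runtime").
    A random session ID is generated when none is supplied.
    """
    response = lex_runtime_client.recognize_text(
        botId=bot_id,
        botAliasId=bot_alias_id,
        localeId=locale_id,
        sessionId=session_id or str(uuid.uuid4()),
        text=question,
    )
    # Some message types (for example, response cards) carry no "content" field.
    return [m["content"] for m in response.get("messages", []) if "content" in m]


# Example usage (requires the boto3 package and AWS credentials):
#   import boto3
#   runtime = boto3.client("lexv2-runtime")
#   for answer in ask_bot(runtime, "<bot-id>", "TSTALIASID", "<your question>"):
#       print(answer)
```

Reusing the same session ID across calls keeps the questions in one conversation, which is closer to how the chat window on the console behaves.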

Deploy the Amazon Lex web UI

The Amazon Lex web UI is a prebuilt, fully featured web client for Amazon Lex chatbots. It eliminates the heavy lifting of recreating a chat UI from scratch. You can quickly deploy its features and minimize time to value for your chatbot-powered applications. Complete the following steps to deploy the UI:

- Follow the instructions on the GitHub repository.

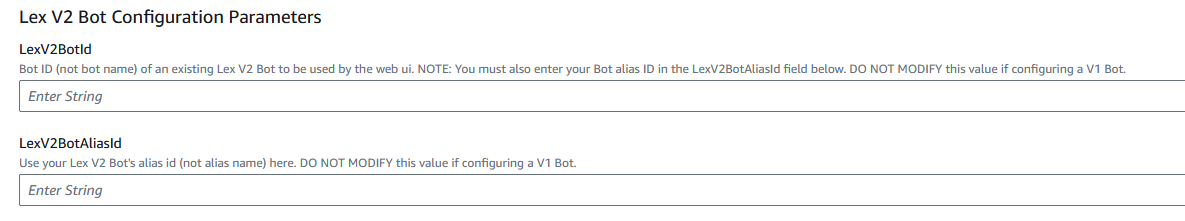

- Before you deploy the CloudFormation template, update the LexV2BotId and LexV2BotAliasId values in the template based on the chatbot you created in your account.

- After the CloudFormation stack is deployed successfully, copy the WebAppUrl value from the stack Outputs tab.

- Navigate to the web UI to test the solution in your browser.
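If you deploy the stack with Boto3 rather than the console, the two values and the output mentioned above can be handled as in the following sketch. It assumes that `LexV2BotId` and `LexV2BotAliasId` are exposed as template parameters and that `WebAppUrl` is a stack output, as the steps suggest; the helper names are hypothetical, and the template may require other parameters that are not shown here.

```python
def build_stack_parameters(bot_id, bot_alias_id):
    """Build the CloudFormation Parameters list for the two bot-specific values."""
    return [
        {"ParameterKey": "LexV2BotId", "ParameterValue": bot_id},
        {"ParameterKey": "LexV2BotAliasId", "ParameterValue": bot_alias_id},
    ]


def get_stack_output(describe_stacks_response, output_key="WebAppUrl"):
    """Return the value of one stack output, or None if it is not present.

    describe_stacks_response is the dict returned by the CloudFormation
    describe_stacks operation.
    """
    for stack in describe_stacks_response.get("Stacks", []):
        for output in stack.get("Outputs", []):
            if output["OutputKey"] == output_key:
                return output["OutputValue"]
    return None


# Example usage (requires the boto3 package and AWS credentials):
#   import boto3
#   cfn = boto3.client("cloudformation")
#   response = cfn.describe_stacks(StackName="<stack-name>")
#   print(get_stack_output(response))
```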

Clean up

To avoid incurring unnecessary charges in the future, clean up the resources you created as part of this solution:

- Delete the Amazon Bedrock knowledge base and the data in the S3 bucket if you created one specifically for this solution.

- Delete the Amazon Lex bot you created.

- Delete the CloudFormation stack.

- Delete the CloudFormation stack.
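The cleanup steps can be scripted as well. The following sketch assumes the Boto3 `bedrock-agent`, `lexv2-models`, and `cloudformation` clients and follows the order of the list above; the helper name `clean_up` is our own. It does not empty the S3 bucket, and to our knowledge deleting a knowledge base does not remove the underlying OpenSearch Serverless vector store, so check for those resources separately.

```python
def clean_up(bedrock_agent_client, lex_models_client, cloudformation_client,
             knowledge_base_id, bot_id, stack_name):
    """Delete the knowledge base, the Amazon Lex bot, and the web UI stack.

    The clients are expected to behave like boto3.client("bedrock-agent"),
    boto3.client("lexv2-models"), and boto3.client("cloudformation").
    Each call only starts the deletion; the resources are removed asynchronously.
    """
    bedrock_agent_client.delete_knowledge_base(knowledgeBaseId=knowledge_base_id)
    lex_models_client.delete_bot(botId=bot_id)
    cloudformation_client.delete_stack(StackName=stack_name)


# Example usage (requires the boto3 package and AWS credentials):
#   import boto3
#   clean_up(
#       boto3.client("bedrock-agent"),
#       boto3.client("lexv2-models"),
#       boto3.client("cloudformation"),
#       "<knowledge-base-id>", "<bot-id>", "<stack-name>",
#   )
```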

Conclusion

In this post, we discussed the importance of generative AI-powered chatbots in customer support systems. We then provided an overview of the new Amazon Lex feature, QnAIntent, which is designed to connect FMs with your company's data. Finally, we demonstrated a practical use case: setting up a Q&A chatbot to analyze Amazon shareholder documents. This implementation not only provides fast and consistent customer service, but also allows live agents to dedicate their expertise to solving more complex issues.

Stay up to date with the latest advancements in generative AI and start building on AWS. If you're looking for help getting started, check out the Generative AI Innovation Center.

About the authors

Supriya Puragundla is a Senior Solutions Architect at AWS. She has over 15 years of IT experience in software development, design, and architecture. She helps key customer accounts on their data, generative AI, and AI/ML journeys. She is passionate about data-driven AI and the area of depth in ML and generative AI.


Manjula Nagineni is a Senior Solutions Architect at AWS based in New York. She works with leading financial services institutions, designing and modernizing their large-scale applications as they adopt AWS Cloud services. She is passionate about designing cloud-centric big data workloads. She has over 20 years of IT experience in software development, analytics, and architecture across multiple domains including finance, retail, and telecom.


Mani Khanuja is a technology leader and generative AI specialist, author of Applied Machine Learning and High Performance Computing on AWS, and a board member of the Women in Manufacturing Education Foundation. She leads machine learning projects across a variety of domains, including computer vision, natural language processing, and generative AI. She speaks at internal and external conferences, including AWS re:Invent, Women in Manufacturing West, YouTube webinars, and GHC 23. In her free time, she enjoys long runs on the beach.

