Imagine an AI that not only thinks but also sees and acts, working with a Windows 11 interface like a professional. Microsoft OmniParser V2 and OmniTool aim to make this a reality by powering autonomous GUI agents that redefine task automation and user experience. This article dives into their capabilities and offers a practical guide to setting up your local environment and unlocking their potential. From streamlining workflows to tackling real-world challenges, let's explore how these tools can transform the way you work and play. Ready to build your own vision agent? Let's get started!

Learning objectives

- Understand the core functionality of OmniParser V2 and OmniTool in AI-driven GUI automation.

- Learn to install and configure OmniParser V2 and OmniTool for local use.

- Explore how AI agents interact with graphical user interfaces using vision models.

- Identify real-world applications of OmniParser V2 and OmniTool in automation and accessibility.

- Recognize Responsible AI considerations and risk mitigation strategies when deploying autonomous GUI agents.

What is Microsoft OmniParser V2?

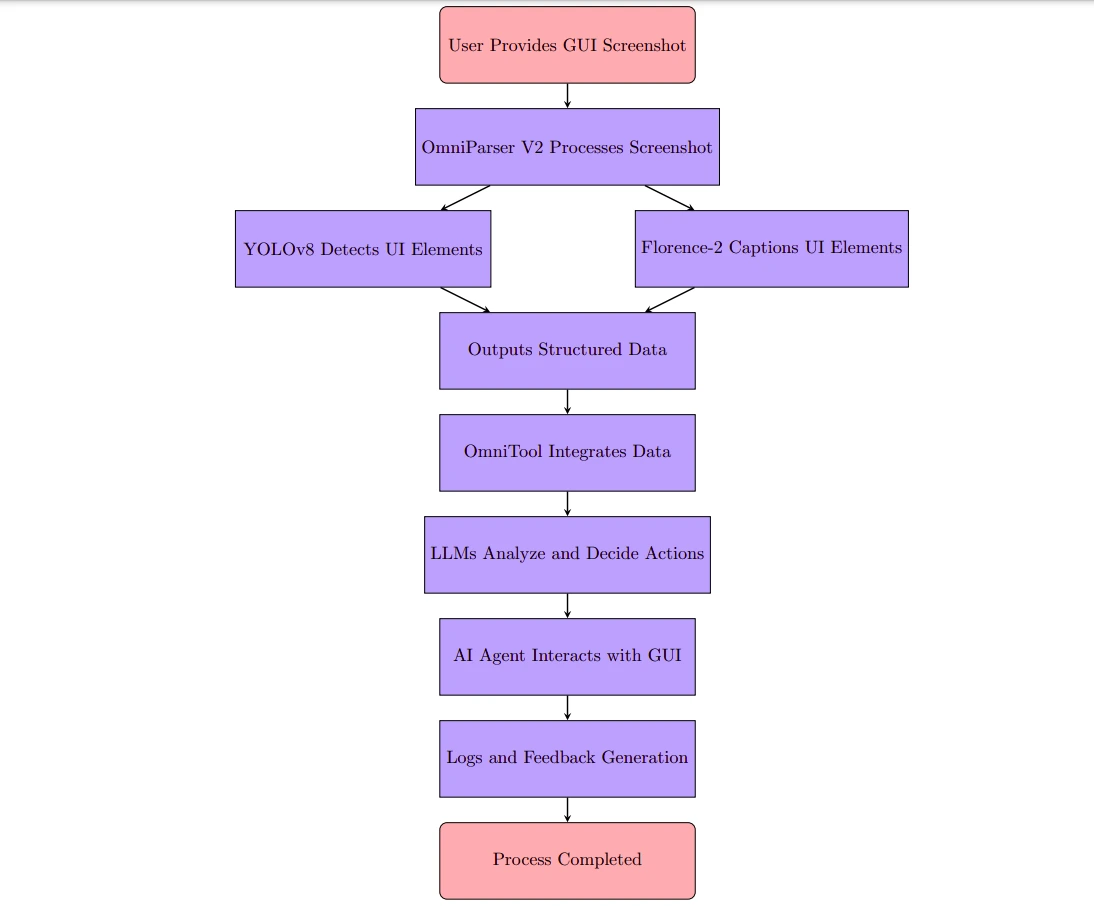

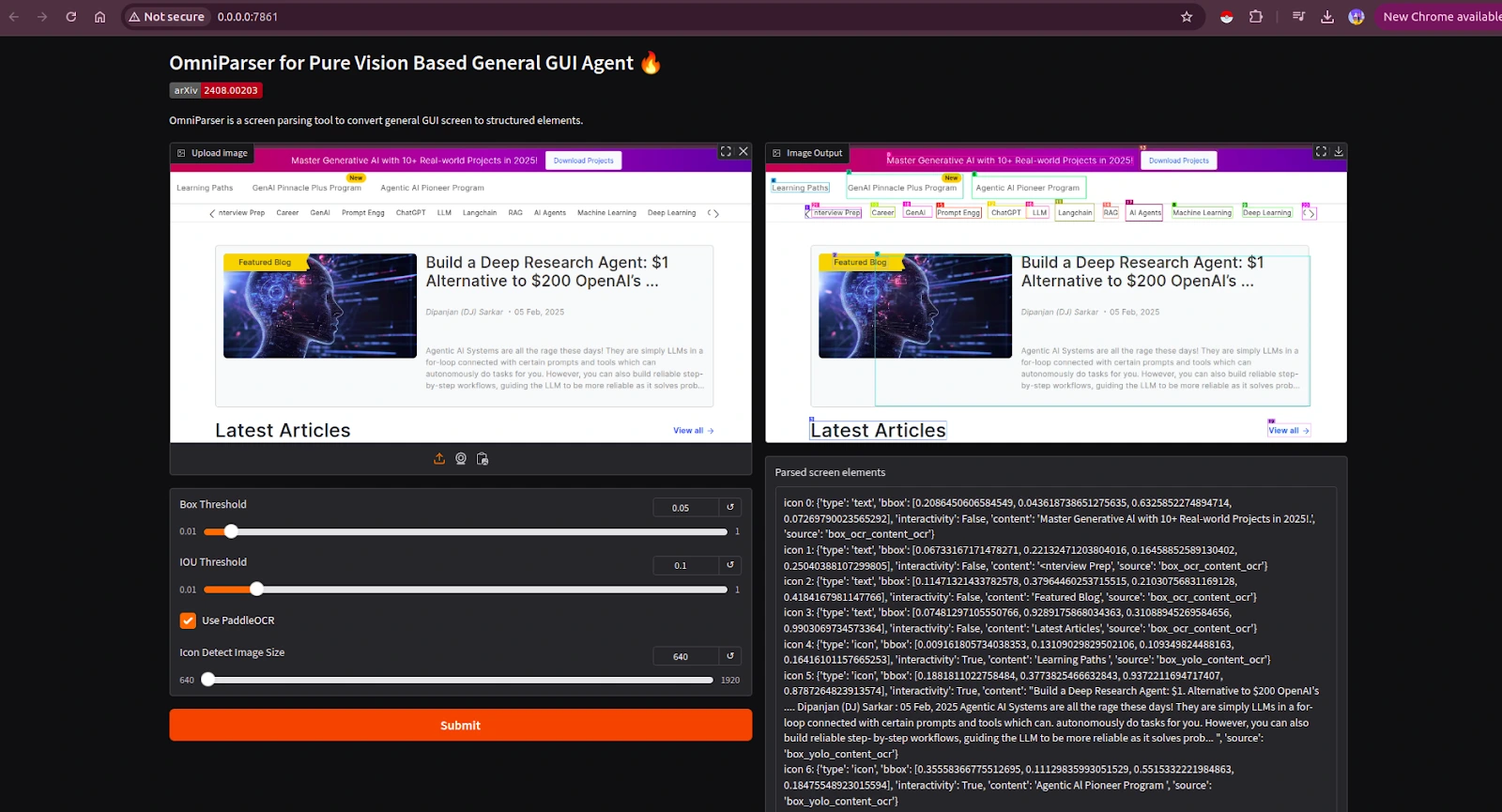

OmniParser V2 is an AI screen parser designed to extract detailed, structured data from graphical user interfaces. It works through a two-step process:

- Detection module: Uses a fine-tuned YOLOv8 model to identify interactive elements such as buttons, icons and menus within screenshots.

- Captioning module: Uses the Florence-2 foundation model to generate descriptive labels for these elements, clarifying their function within the interface.

This dual approach allows large language models (LLMs) to understand GUIs, which makes precise interaction and task execution possible. Compared to its predecessor, OmniParser V2 brings significant improvements, including a 60% reduction in latency and higher accuracy, particularly for smaller elements.
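To make the two-step idea concrete, here is a minimal Python sketch of how detection boxes and captions might be combined into a structured list that an LLM can read. It is not OmniParser's actual API: the `Detection` and `UIElement` classes, the `merge_stages` and `to_prompt_lines` functions, the 0.3 score threshold and the sample data are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One box from the detection step (hypothetical structure)."""
    bbox: tuple[float, float, float, float]  # (x1, y1, x2, y2), normalized to 0..1
    score: float


@dataclass
class UIElement:
    """A detected box paired with the caption generated for it."""
    element_id: int
    bbox: tuple[float, float, float, float]
    caption: str


def merge_stages(detections, captions, min_score=0.3):
    """Pair each sufficiently confident detection with its caption, in order."""
    if len(detections) != len(captions):
        raise ValueError("expected one caption per detection")
    kept = [(d, c) for d, c in zip(detections, captions) if d.score >= min_score]
    return [UIElement(i, d.bbox, c) for i, (d, c) in enumerate(kept)]


def to_prompt_lines(elements):
    """Render elements as plain text lines that an LLM prompt could include."""
    return [f"[{e.element_id}] {e.caption} @ {e.bbox}" for e in elements]


# Illustrative sample data, not real model output.
detections = [
    Detection((0.02, 0.95, 0.06, 0.99), 0.91),
    Detection((0.50, 0.50, 0.51, 0.51), 0.12),  # low confidence, dropped
    Detection((0.90, 0.01, 0.98, 0.05), 0.77),
]
captions = ["Start menu button", "unclear glyph", "Close window"]

elements = merge_stages(detections, captions)
for line in to_prompt_lines(elements):
    print(line)
```

The point of the sketch is the shape of the result: each interactive element ends up with an ID, a location and a human-readable label, which is the kind of structured input a language model can reason over when choosing what to click.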

OmniTool is a Dockerized Windows system that integrates OmniParser V2 with leading LLMs such as OpenAI, DeepSeek, Qwen and Anthropic. This integration enables fully autonomous action by AI agents, allowing them to perform tasks independently and streamline repetitive GUI interactions. OmniTool provides a sandbox environment for testing and deploying agents, which supports safety and efficiency in real-world applications.

Setting up OmniParser V2

To take advantage of OmniParser V2, follow these steps to configure your local environment:

Prerequisites

- Make sure Python is installed on your system.

- Install the required dependencies using a Conda environment.

Installation

Clone the OmniParser V2 repository from GitHub.

- git clone https://github.com/microsoft/omniparser

- cd omniparser

Create and activate your Conda environment and install the required packages.

- conda create -n "omni" python==3.12

- conda activate omni

- pip install -r requirements.txt

Download the V2 weights (icon_caption_florence) using huggingface-cli.

- rm -rf weights/icon_detect weights/icon_caption weights/icon_caption_florence

- huggingface-cli download microsoft/OmniParser-v2.0 --local-dir weights

- mv weights/icon_caption weights/icon_caption_florence

Testing
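Before launching the demo, it can help to confirm that the weight folders ended up where the commands above put them. The following Python sketch only checks that the `icon_detect` and `icon_caption_florence` folders exist and are not empty. The helper name `missing_weight_dirs` is made up for this example, and the files inside each folder are not validated.

```python
from pathlib import Path

# Folder names taken from the commands above; the files inside them are not checked.
EXPECTED_DIRS = ("icon_detect", "icon_caption_florence")


def missing_weight_dirs(weights_root):
    """Return the expected sub-folders that are absent or empty under weights_root."""
    root = Path(weights_root)
    missing = []
    for name in EXPECTED_DIRS:
        folder = root / name
        if not folder.is_dir() or not any(folder.iterdir()):
            missing.append(name)
    return missing


if __name__ == "__main__":
    # Run from the repository root, where the "weights" folder lives.
    problems = missing_weight_dirs("weights")
    if problems:
        print("Missing or empty:", ", ".join(problems))
    else:
        print("Weight folders look complete.")
```

If the script reports `icon_caption_florence` as missing, the most likely cause is that the final `mv` step was skipped.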

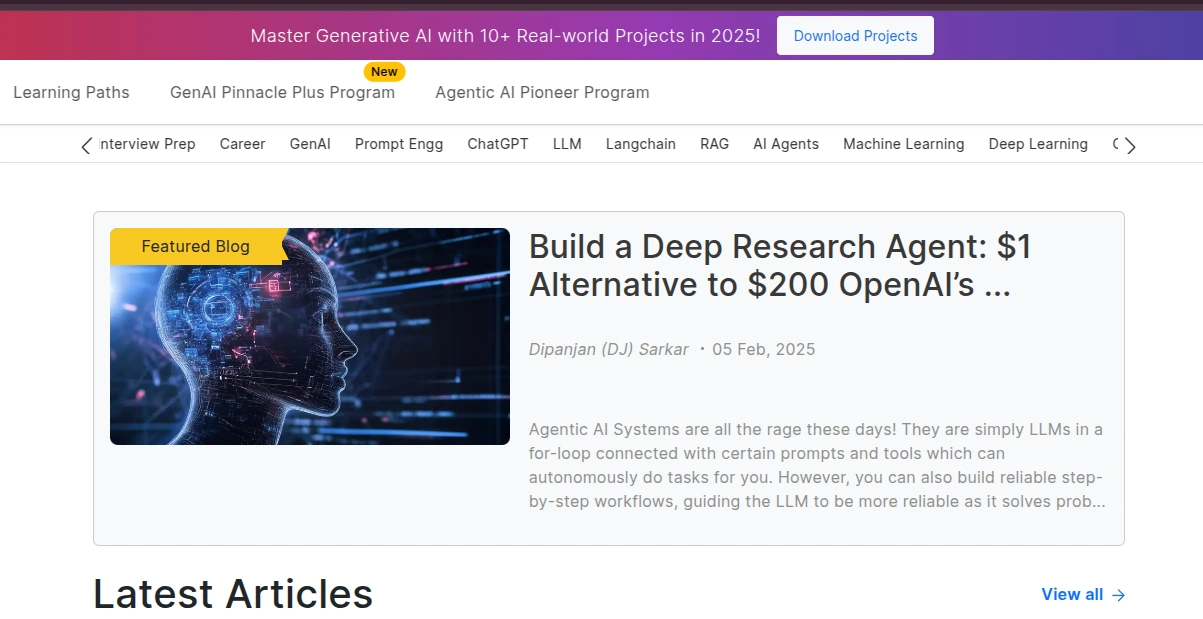

Start the OmniParser V2 demo server and test it with sample screenshots.

- python gradio_demo.py

You can also read this article to configure OmniParser V2 on your machine.

Setting up OmniTool

To take advantage of OmniTool, follow these steps to configure your local environment:

Prerequisites

- Make sure you have 30 GB of free disk space (5 GB for the ISO, 400 MB for the Docker container, 20 GB for the storage folder).

- Install Docker Desktop on your system: https://docs.docker.com/desktop/

- Download the Windows 11 Enterprise Evaluation ISO from the Microsoft Evaluation Center. Rename the file to custom.iso and copy it to the directory OmniParser/omnitool/omnibox/vm/win11iso.
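The disk space requirement is easy to check before starting a long download. Below is a small Python sketch that uses the standard library's `shutil.disk_usage`. The 30 GB figure comes from the list above, while the function names `free_gb` and `has_enough_space` are made up for this example, and the check looks only at the drive that holds the given path.

```python
import shutil

REQUIRED_GB = 30  # figure from the prerequisites above


def free_gb(path="."):
    """Free space on the drive that holds `path`, in gigabytes (10**9 bytes)."""
    return shutil.disk_usage(path).free / 1e9


def has_enough_space(path=".", required_gb=REQUIRED_GB):
    """Return True if the drive holding `path` has at least `required_gb` free."""
    return free_gb(path) >= required_gb


if __name__ == "__main__":
    verdict = "OK" if has_enough_space() else "not enough"
    print(f"Free: {free_gb():.1f} GB, need about {REQUIRED_GB} GB -> {verdict}")
```

Run it from the folder where the repository lives, since Docker storage and the VM storage folder may sit on different drives on some setups.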

VM configuration

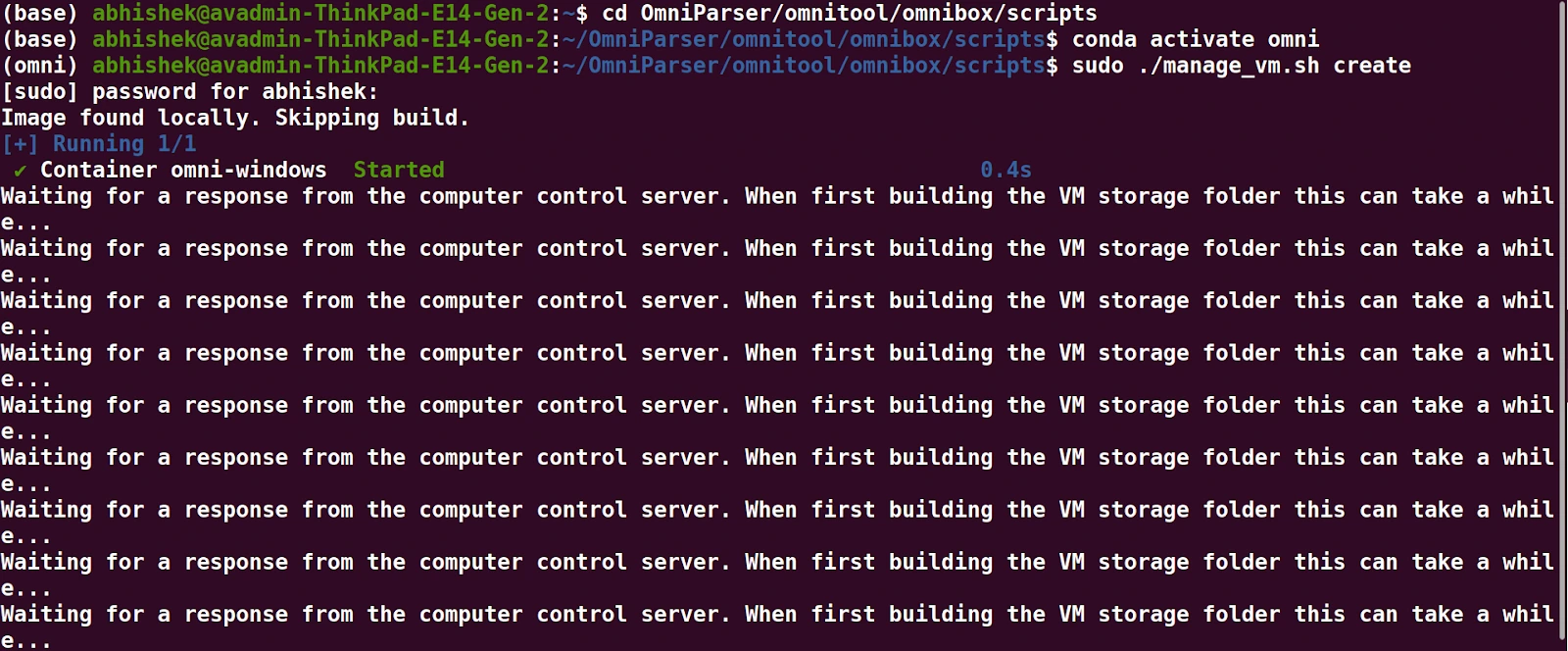

Navigate to the VM management script directory with:

- cd OmniParser/omnitool/omnibox/scripts

Build the Docker container (400 MB) and install the ISO to a storage folder (20 GB) with ./manage_vm.sh create. The process is shown in the screenshots below and takes 20 to 90 minutes depending on download speed (commonly about 60 minutes). When it completes, the terminal shows "VM + server is up and running!". You can watch the applications being installed in the VM by looking at the desktop through the NoVNC viewer (http://localhost:8006/vnc.html?view_only=1&autoconnect=1&resize=scale). The terminal window shown in the NoVNC viewer will not be open on the desktop after setup is done. If you can still see it, wait and do not click around!

After the first creation, a saved copy of the VM state is stored in vm/win11storage. You can then manage the VM with ./manage_vm.sh start and ./manage_vm.sh stop. To delete the VM, use ./manage_vm.sh delete and delete the OmniParser/omnitool/omnibox/vm/win11storage directory.
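Because VM creation can take a long time, a small polling script can tell you when the viewer page starts responding. The Python sketch below uses only the standard library. The host and port come from the NoVNC address above, while `http_ok`, `wait_until` and the retry values are assumptions made for this example. A reachable viewer only shows that the container is serving the page, so the terminal message remains the real signal that setup has finished.

```python
import time
import urllib.request

VIEWER_URL = "http://localhost:8006/vnc.html"  # host and port from the NoVNC address above


def http_ok(url, timeout=3.0):
    """Return True if the URL answers with HTTP 200, False on connection or HTTP errors."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except OSError:  # URLError, HTTPError and socket timeouts are all OSError subclasses
        return False


def wait_until(probe, attempts=10, delay_s=30.0, sleep=time.sleep):
    """Call probe() up to `attempts` times, sleeping between tries; True on first success."""
    for attempt in range(attempts):
        if probe():
            return True
        if attempt < attempts - 1:
            sleep(delay_s)
    return False


if __name__ == "__main__":
    # Short values for a quick check; raise them when waiting on a real install.
    ready = wait_until(lambda: http_ok(VIEWER_URL), attempts=2, delay_s=1.0)
    print("viewer reachable" if ready else "viewer not reachable yet")
```

Passing the probe and the sleep function as arguments keeps the retry loop independent of the network, which makes it easy to reuse for other services.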

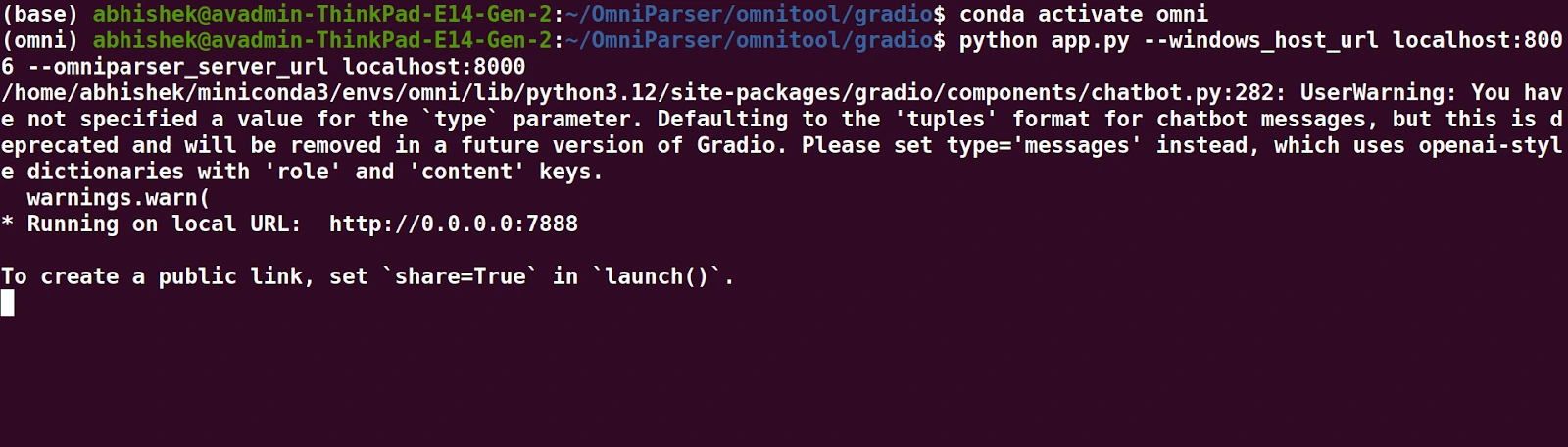

Running OmniTool in Gradio

- Change to the Gradio directory by running: cd OmniParser/omnitool/gradio

- Activate your Conda environment with: conda activate omni

- Start the server using: python app.py --windows_host_url localhost:8006 --omniparser_server_url localhost:8000

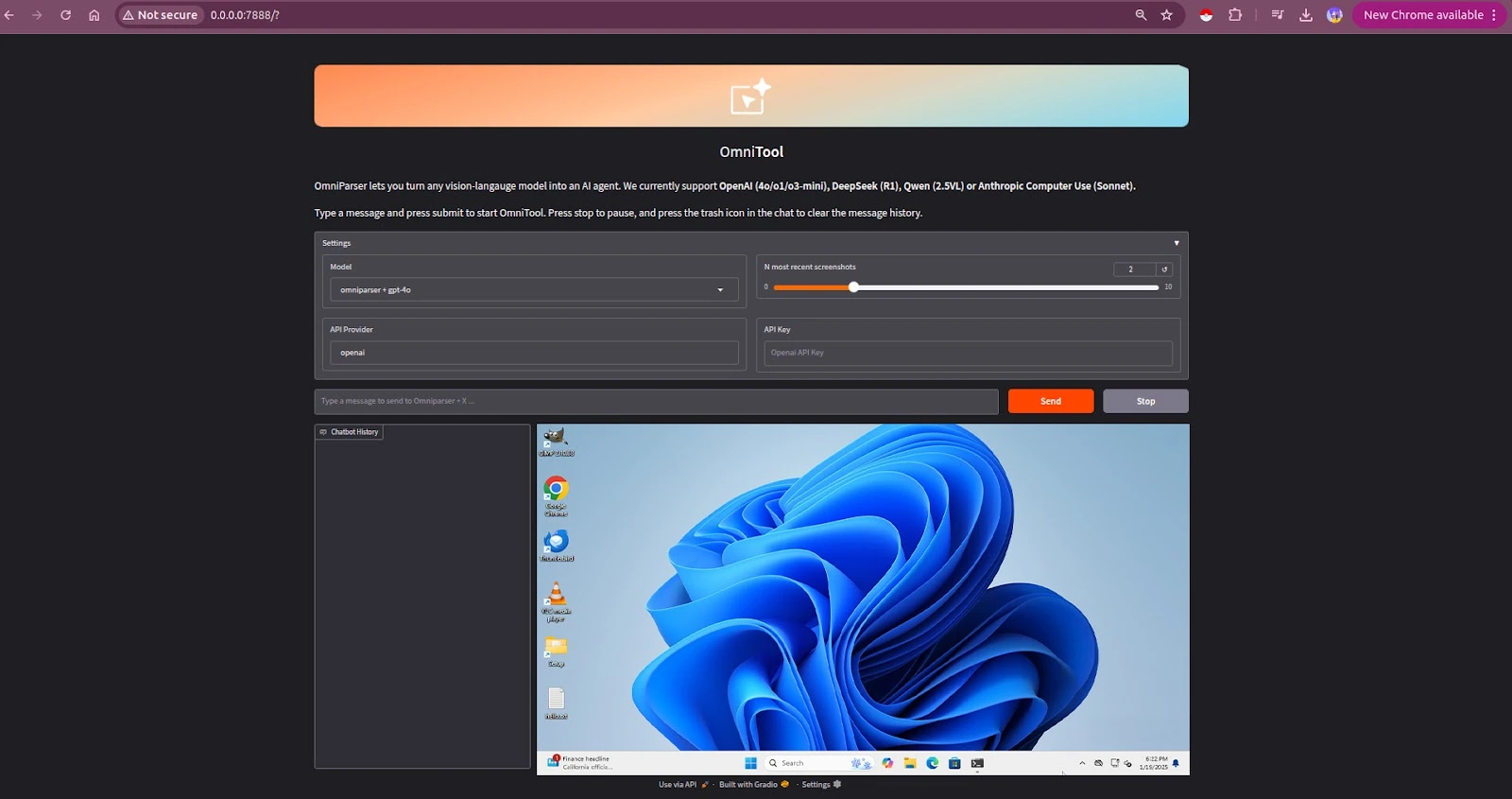

- Open the URL shown in your terminal, enter your API key and start interacting with the AI agent.

- Make sure the OmniParser server, the OmniTool VM and the Gradio interface are each running in a separate terminal window.
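With several processes running side by side, a quick port check can show which one is not up. The Python sketch below tries a TCP connection to the two addresses used in the launch command above. The `SERVICES` table, `is_port_open` and `service_status` are names made up for this example, and an open port only shows that something is listening there, not that it is the expected service.

```python
import socket

# Ports taken from the launch command above; adjust them if you changed yours.
SERVICES = {
    "OmniParser server": ("localhost", 8000),
    "OmniTool VM": ("localhost", 8006),
}


def is_port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def service_status(services=SERVICES):
    """Map each service name to whether its port accepts connections."""
    return {name: is_port_open(host, port) for name, (host, port) in services.items()}


if __name__ == "__main__":
    for name, up in service_status().items():
        print(f"{name}: {'up' if up else 'down'}")
```

The Gradio interface is left out on purpose, since its address is whatever URL your terminal prints when the app starts.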


Interacting with the agent

Once your environment is configured, you can use the Gradio user interface to give commands to the agent. This interface lets you observe the agent's reasoning and execution inside the OmniBox VM. Example use cases include:

- Opening applications: Use the agent to launch applications by recognizing icons or menu items.

- Navigating menus: Automate menu navigation by identifying and interacting with specific user interface elements.

- Searching: Use the agent to run searches within applications or web browsers.
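To give a feel for what acting on a recognized element involves, here is a small Python sketch that finds an element by its caption and turns its bounding box into a click position. This is an illustration only, not how OmniTool is implemented: the dictionary layout, the normalized coordinates, the helper names `find_by_caption` and `bbox_center_px`, and the 1920x1080 screen size are all assumptions.

```python
def bbox_center_px(bbox, screen_width, screen_height):
    """Convert a normalized (x1, y1, x2, y2) box to the pixel at its center."""
    x1, y1, x2, y2 = bbox
    if not (0.0 <= x1 <= x2 <= 1.0 and 0.0 <= y1 <= y2 <= 1.0):
        raise ValueError(f"bbox out of range: {bbox}")
    cx = round((x1 + x2) / 2 * screen_width)
    cy = round((y1 + y2) / 2 * screen_height)
    # Clamp so a box touching the right or bottom edge still maps to a valid pixel.
    return min(cx, screen_width - 1), min(cy, screen_height - 1)


def find_by_caption(elements, keyword):
    """Return the first element whose caption contains the keyword (case-insensitive)."""
    keyword = keyword.lower()
    for element in elements:
        if keyword in element["caption"].lower():
            return element
    return None


# Hypothetical parsed elements for a taskbar.
elements = [
    {"id": 0, "caption": "Start menu button", "bbox": (0.0, 0.9, 0.1, 1.0)},
    {"id": 1, "caption": "Search box", "bbox": (0.25, 0.9, 0.75, 1.0)},
]
target = find_by_caption(elements, "search")
print(target["id"], bbox_center_px(target["bbox"], 1920, 1080))
```

In a real agent the language model would choose the element, and the keyword lookup here simply stands in for that decision.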

OmniTool supports a variety of vision models out of the box, including:

- OpenAI (4o/o1/o3-mini): Known for its versatility and performance in understanding complex user interface elements.

- DeepSeek (R1): Offers robust capabilities for recognizing and interacting with GUI components.

- Qwen (2.5VL): Provides advanced features for detailed user interface analysis and automation.

- Anthropic (Sonnet): Enhances the agent's capabilities with sophisticated language understanding and generation.

Responsible AI considerations and risks

To align with Microsoft's AI principles and Responsible AI practices, OmniParser V2 and OmniTool incorporate several risk mitigation strategies:

- Training data: The icon captioning model is trained with Responsible AI data to help it avoid inferring sensitive attributes from icon images.

- Threat model analysis: Conducted using the Microsoft Threat Modeling Tool to identify and address potential risks.

- User guidance: Users are advised to apply OmniParser only to screenshots that do not contain harmful or violent content.

- Human oversight: Keeping a human in the loop is encouraged to minimize the risks associated with autonomous agents.
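One simple way to picture human oversight is a confirmation gate that pauses before risky steps. The Python sketch below is a generic pattern, not a feature documented for OmniTool: the `Action` class, the `SENSITIVE_KINDS` set and the `run_with_oversight` function are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical action names; real agents define their own vocabulary.
SENSITIVE_KINDS = {"type_text", "delete_file", "submit_form"}


@dataclass
class Action:
    kind: str
    detail: str


def run_with_oversight(actions, approve, execute):
    """Execute actions in order, asking `approve` before any sensitive one.

    Stops at the first rejected action and returns the actions that ran.
    """
    done = []
    for action in actions:
        if action.kind in SENSITIVE_KINDS and not approve(action):
            break
        execute(action)
        done.append(action)
    return done


plan = [
    Action("click", "open Settings"),
    Action("delete_file", "report.docx"),
    Action("click", "close Settings"),
]
log = []
# The reviewer rejects everything here, so the run stops before the deletion.
ran = run_with_oversight(plan, approve=lambda a: False, execute=log.append)
print([a.detail for a in ran])
```

In an interactive session, `approve` could wrap a prompt that asks the operator to confirm, so that nothing destructive happens without an explicit yes.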

Real-world applications

The capabilities of OmniParser V2 and OmniTool enable a wide range of applications:

- UI automation: Automate interactions with graphical user interfaces to streamline workflows.

- Accessibility solutions: Provide structured data to assistive technologies to improve user experiences.

- User interface analysis: Evaluate and improve user interface designs based on the structured data extracted.

Conclusion

OmniParser V2 and OmniTool represent a significant advance in AI visual parsing and GUI automation. By integrating these tools, developers can create sophisticated agents that interact smoothly with graphical user interfaces, unlocking new possibilities for automation and accessibility. As AI technology continues to evolve, the potential applications of OmniParser V2 and OmniTool will only grow, shaping the future of how we interact with digital interfaces.

Key takeaways

- OmniParser V2 improves AI-driven GUI automation by accurately parsing and labeling interface elements.

- OmniTool integrates OmniParser V2 with leading LLMs to enable fully autonomous agent actions.

- Setting up OmniParser V2 and OmniTool requires configuring dependencies, Docker and a virtualized Windows environment.

- Real-world applications include user interface automation, accessibility solutions and user interface analysis.

- Responsible AI practices support ethical deployment by addressing risks through training data, human oversight and threat modeling.

Frequently asked questions

A. OmniParser V2 is an AI-powered tool that extracts structured data from graphical user interfaces using detection and captioning models.

A. OmniTool integrates OmniParser V2 with LLMs to allow AI agents to interact autonomously with GUI elements.

A. You need Python, Conda and the required dependencies installed, along with the OmniParser model weights.

A. OmniTool runs inside a Dockerized Windows VM, allowing AI agents to interact safely with GUI applications.

A. They are used for user interface automation, accessibility solutions and user interface design improvement.
