This post is a collaboration between Salesforce and AWS and is being cross-published on both the Salesforce Engineering Blog and the AWS Machine Learning Blog.

Salesforce, Inc. is an American cloud-based software company headquartered in San Francisco, California. It provides customer relationship management (CRM) software and applications focused on sales, customer service, marketing automation, ecommerce, analytics, and application development. Salesforce is building toward artificial general intelligence (AGI) for business, enabling predictive and generative functions within their flagship software-as-a-service (SaaS) CRM, and working toward intelligent automations using artificial intelligence (AI) as well as agents.

Salesforce Einstein is a set of AI technologies that integrate with Salesforce’s Customer Success Platform to help businesses improve productivity and client engagement. Einstein has a list of over 60 features, unlocked at different price points and segmented into four main categories: machine learning (ML), natural language processing (NLP), computer vision, and automatic speech recognition. Einstein delivers advanced AI capabilities into sales, service, marketing, and other functions, empowering companies to deliver more personalized and predictive customer experiences. Einstein has out-of-the-box AI features such as sales email generation in Sales Cloud and service replies in Service Cloud. They also have tools such as Copilot, Prompt, and Model Builder, three tools contained in the Einstein 1 Studio, that allow organizations to build custom AI functionality and roll it out to their users.

The Salesforce Einstein AI Platform team is the group supporting development of Einstein applications. They are committed to enhancing the performance and capabilities of AI models, with a particular focus on large language models (LLMs) for use with Einstein product offerings. These models are designed to provide advanced NLP capabilities for various business applications. Their mission is to continuously refine these LLMs and AI models by integrating state-of-the-art solutions, collaborating with leading technology providers, including open source communities and public cloud services like AWS, and building them into a unified AI platform. This helps make sure Salesforce customers receive the most advanced AI technology available.

In this post, we share how the Salesforce Einstein AI Platform team improved the latency and throughput of their code generation LLM using Amazon SageMaker.

The challenge with hosting LLMs

At the beginning of 2023, the team started looking at solutions to host CodeGen, Salesforce’s in-house open source LLM for code understanding and code generation. The CodeGen model allows users to translate natural language, such as English, into programming languages, such as Python. Because they were already using AWS for inference for their smaller predictive models, they were looking to extend the Einstein platform to help them host CodeGen. Salesforce developed an ensemble of CodeGen models (Inline for automatic code completion, BlockGen for code block generation, and FlowGPT for process flow generation) specifically tuned for the Apex programming language. Salesforce Apex is a certified framework for building SaaS apps on top of Salesforce’s CRM functionality. They were looking for a solution that could securely host their model and handle a large volume of inference requests, including many concurrent requests, at scale. They also needed to meet the throughput and latency requirements of their co-pilot application (EinsteinGPT for Developers). EinsteinGPT for Developers simplifies the start of development by creating smart Apex based on natural language prompts. Developers can accelerate coding tasks by scanning for code vulnerabilities and getting real-time code suggestions within the Salesforce integrated development environment (IDE), as shown in the following screenshot.

The Einstein team conducted a comprehensive evaluation of various tools and services, including open source options and paid solutions. After assessing these options, they found that SageMaker provided the best access to GPUs, scalability, flexibility, and performance optimizations for a wide range of scenarios, particularly in addressing their challenges with latency and throughput.

Why Salesforce Einstein chose SageMaker

SageMaker offered several specific features that proved essential to meeting Salesforce’s requirements:

- Multiple serving engines – SageMaker includes specialized deep learning containers (DLCs), libraries, and tooling for model parallelism and large model inference (LMI) containers. LMI containers are a set of high-performance Docker containers purpose-built for LLM inference. With these containers, you can use high-performance open source inference libraries like FasterTransformer, TensorRT-LLM, vLLM, and Transformers NeuronX. These containers bundle together a model server with open source inference libraries to deliver an all-in-one LLM serving solution. The Einstein team liked how SageMaker provided quick-start notebooks that get them deploying these popular open source models in minutes.

- Advanced batching strategies – The SageMaker LMI allows customers to optimize performance of their LLMs by enabling features like batching, which groups multiple requests together before they hit the model. Dynamic batching instructs the server to wait a predefined amount of time and batch up all requests that occur in that window with a maximum of 64 requests, while paying attention to a configured preferred size. This optimizes the use of GPU resources and balances throughput with latency, ultimately reducing the latter. The Einstein team liked how they were able to use dynamic batching through the LMI to increase throughput for their CodeGen models while minimizing latency.

- Efficient routing strategy – By default, SageMaker endpoints have a random routing strategy. SageMaker also supports a least outstanding requests (LOR) strategy, which allows SageMaker to optimally route requests to the instance that’s best suited to serve that request. SageMaker makes this possible by monitoring the load of the instances behind your endpoint and the models or inference components that are deployed on each instance. Customers have the flexibility to choose either algorithm depending on their workload needs. Along with the capability to handle multiple model instances across several GPUs, the Einstein team liked how the SageMaker routing strategy ensures that traffic is evenly and efficiently distributed to model instances, preventing any single instance from becoming a bottleneck.

- Access to high-end GPUs – SageMaker provides access to top-end GPU instances, which are essential for running LLMs efficiently. This is particularly valuable given the current market shortages of high-end GPUs. SageMaker allowed the Einstein team to use auto-scaling of these GPUs to meet demand without manual intervention.

- Rapid iteration and deployment – While not directly related to latency, the ability to quickly test and deploy changes using SageMaker notebooks helps in reducing the overall development cycle, which can indirectly impact latency by accelerating the implementation of performance improvements. The use of notebooks enabled the Einstein team to shorten their overall deployment time and get their models hosted in production much faster.
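
The dynamic batching behavior described in the list above can be sketched in a few lines of Python. This is a minimal illustration, not the LMI container's implementation: the `Request` type, the `dynamic_batches` function, and the 100 ms wait window are assumptions made for the example, and the "preferred size" setting mentioned above is left out for brevity. Only the cap of 64 requests per batch comes from the text.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Request:
    request_id: int
    arrival_ms: float  # arrival time in milliseconds (hypothetical field)


def dynamic_batches(requests: List[Request],
                    max_delay_ms: float = 100.0,   # assumed wait window
                    max_batch_size: int = 64) -> List[List[Request]]:
    """Group requests into batches.

    A batch opens when its first request arrives and closes when either
    the wait window has elapsed or the batch has reached max_batch_size.
    """
    batches: List[List[Request]] = []
    current: List[Request] = []
    for req in sorted(requests, key=lambda r: r.arrival_ms):
        window_expired = bool(current) and (
            req.arrival_ms - current[0].arrival_ms > max_delay_ms)
        if window_expired or len(current) == max_batch_size:
            batches.append(current)
            current = []
        current.append(req)
    if current:
        batches.append(current)
    return batches
```

A longer window or larger cap tends to raise GPU utilization per forward pass, at the price of extra waiting time for the first request in each batch, which is the throughput and latency trade-off the bullet describes.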

These features collectively help optimize the performance of LLMs by reducing latency and improving throughput, making Amazon SageMaker a robust solution for managing and deploying large-scale machine learning models.
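
To make the difference between the two routing strategies in the list above concrete, the following sketch compares random selection with least outstanding requests (LOR) selection over a map of in-flight request counts. It is a toy model under stated assumptions: the function names and the instance names are hypothetical, and the real SageMaker router also takes into account the models or inference components deployed on each instance, which this sketch does not.

```python
import random
from typing import Dict, Optional


def pick_random(outstanding: Dict[str, int],
                rng: Optional[random.Random] = None) -> str:
    """Random routing: ignore load and pick any instance."""
    rng = rng or random.Random()
    return rng.choice(sorted(outstanding))


def pick_least_outstanding(outstanding: Dict[str, int]) -> str:
    """LOR routing: pick the instance with the fewest in-flight requests.

    Ties are broken by instance name so the result is deterministic.
    """
    return min(sorted(outstanding), key=lambda name: outstanding[name])


def route(outstanding: Dict[str, int], n_requests: int) -> Dict[str, int]:
    """Route n_requests with LOR, assuming none complete in the meantime."""
    load = dict(outstanding)
    for _ in range(n_requests):
        load[pick_least_outstanding(load)] += 1
    return load
```

Starting from an uneven load such as `{"a": 3, "b": 1, "c": 2}`, routing three more requests with `route` evens the instances out, whereas random selection can keep sending work to the instance that is already busiest.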

One key capability was that SageMaker LMI provided a blueprint of model performance optimization parameters for NVIDIA’s FasterTransformer library to use with CodeGen. When the team initially deployed CodeGen 2.5, a 7B parameter model, on Amazon Elastic Compute Cloud (Amazon EC2), the model wasn’t performing well for inference. Initially, for a code block generation task, it could only handle six requests per minute, with each request taking over 30 seconds to process. This was far from efficient and scalable. However, after using the SageMaker FasterTransformer LMI notebook and referencing the advanced SageMaker-provided guides to understand how to optimize the different endpoint parameters provided, there was a significant improvement in model performance. The system now handles around 400 requests per minute with a reduced latency of approximately seven seconds per request, each containing about 512 tokens. This represents an over 6,500 percent increase in throughput after optimization. This enhancement was a major breakthrough, demonstrating how the capabilities of SageMaker were instrumental in optimizing the throughput of the LLM and reducing cost. (The FasterTransformer backend has been deprecated by NVIDIA; the team is working toward migrating to the TensorRT-LLM (TRT-LLM) LMI.)
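
The throughput figures above can be checked with a short calculation. The request rates, latencies, and token count are taken from the paragraph; the helper names are hypothetical. The concurrency estimate uses Little's law and assumes steady-state traffic, and because the original latency is only given as "over 30 seconds", the "before" value is a lower bound. The tokens-per-second figure further assumes that 512 is the number of tokens generated per request.

```python
def percent_increase(before: float, after: float) -> float:
    """Relative change from before to after, in percent."""
    return (after - before) / before * 100.0


def avg_in_flight(requests_per_minute: float, latency_s: float) -> float:
    """Little's law: average concurrency = arrival rate x time in system."""
    return requests_per_minute / 60.0 * latency_s


# Figures quoted in the text above.
before_rpm, before_latency_s = 6, 30    # "over 30 seconds": lower bound
after_rpm, after_latency_s = 400, 7
tokens_per_request = 512                # assumed to be generated tokens

throughput_gain_pct = percent_increase(before_rpm, after_rpm)
tokens_per_second = after_rpm * tokens_per_request / 60.0
in_flight_before = avg_in_flight(before_rpm, before_latency_s)
in_flight_after = avg_in_flight(after_rpm, after_latency_s)

print(f"throughput gain: {throughput_gain_pct:.0f}%")
print(f"tokens per second after optimization: {tokens_per_second:.0f}")
print(f"average in-flight requests: {in_flight_before:.1f} -> {in_flight_after:.1f}")
```

The gain works out to roughly 6,567 percent, consistent with the "over 6,500 percent" figure. Under these assumptions, the endpoint went from serving about 3 requests at once to about 47, which fits the batching and multi-GPU serving described earlier.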

To assess the performance of LLMs, the Einstein team focuses on two key metrics:

- Throughput – Measured by the number of tokens an LLM can generate per second

- Latency – Determined by the time it takes to generate these tokens for individual requests
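
As a rough illustration of how these two metrics might be computed from a benchmark run, the sketch below derives tokens per second and per-request latency from a list of completion records. The `Completion` record and the helper functions are hypothetical, not part of any SageMaker tooling, and the percentile uses the simple nearest-rank definition.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Completion:
    start_s: float          # time the request was sent
    end_s: float            # time the last token was received
    generated_tokens: int   # tokens produced for this request


def throughput_tokens_per_s(completions: List[Completion]) -> float:
    """Total generated tokens divided by the wall-clock span of the run."""
    first = min(c.start_s for c in completions)
    last = max(c.end_s for c in completions)
    return sum(c.generated_tokens for c in completions) / (last - first)


def latencies_s(completions: List[Completion]) -> List[float]:
    """End-to-end latency of each individual request."""
    return [c.end_s - c.start_s for c in completions]


def percentile(values: List[float], q: float) -> float:
    """Nearest-rank percentile for q in [0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q / 100 * len(ordered)))
    return ordered[rank - 1]
```

Reporting a high percentile such as `percentile(latencies_s(run), 95)` alongside the mean is a common choice, because batching can improve average throughput while a few requests still wait noticeably longer.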

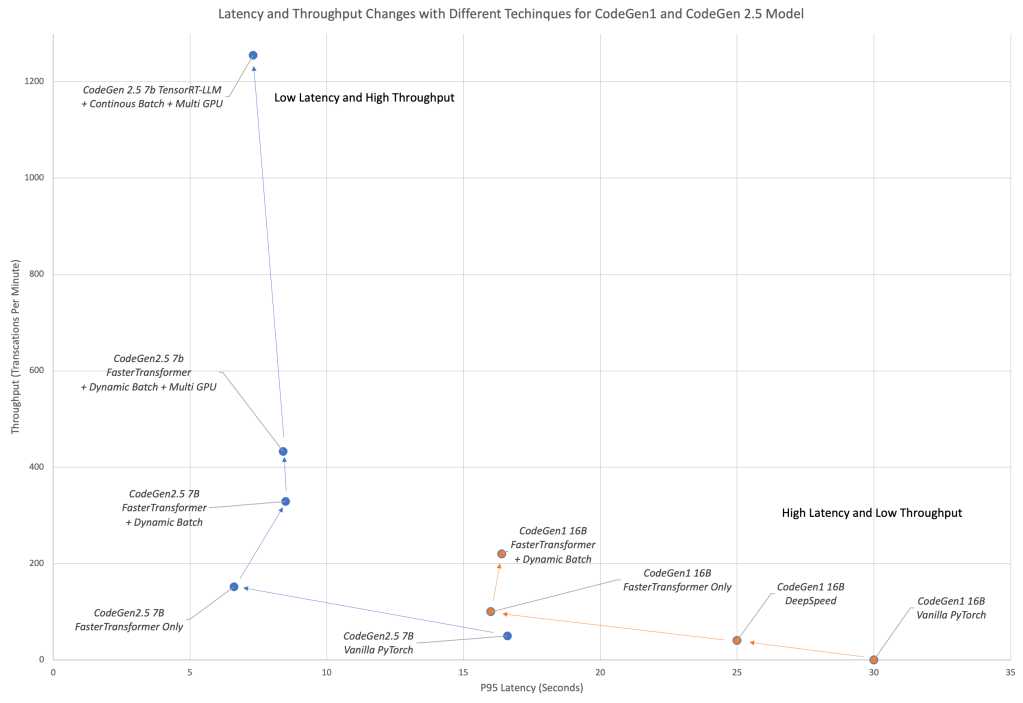

Extensive performance testing and benchmarking was conducted to track these metrics. Before using SageMaker, CodeGen models had a lower token-per-second rate and higher latencies. With SageMaker optimization, the team observed significant improvements in both throughput and latency, as shown in the following figure.

Latency and throughput changes with different techniques for CodeGen1 and CodeGen2.5 models. CodeGen1 is the original version of CodeGen, which is a 16B model. CodeGen2.5 is the optimized version, which is a 7B model. For more information about CodeGen 2.5, refer to CodeGen2.5: Small, but mighty.

New challenges and opportunities

The primary challenge that the team faced when integrating SageMaker was enhancing the platform to include specific functionalities that were essential for their projects. For instance, they needed additional features for NVIDIA’s FasterTransformer to optimize their model performance. Through a productive collaboration with the SageMaker team, they successfully integrated this support, which initially was not available.

Additionally, the team identified an opportunity to improve resource efficiency by hosting multiple LLMs on a single GPU instance. Their feedback helped develop the inference component feature, which now allows Salesforce and other SageMaker users to utilize GPU resources more effectively. These enhancements were crucial in tailoring the platform to Salesforce’s specific needs.
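
The resource-efficiency idea behind hosting several models per GPU can be illustrated as a packing problem. The sketch below is not how SageMaker inference components place models; it is a generic first-fit-decreasing heuristic, and the function name, model names, and memory figures are all hypothetical.

```python
from typing import Dict, List


def pack_models(model_mem_gib: Dict[str, float],
                gpu_mem_gib: float) -> List[List[str]]:
    """First-fit decreasing: place each model on the first GPU with room.

    Models are considered from largest to smallest memory footprint.
    Returns one list of model names per GPU used.
    """
    gpus: List[List[str]] = []
    free: List[float] = []
    ordered = sorted(model_mem_gib.items(), key=lambda kv: (-kv[1], kv[0]))
    for name, need in ordered:
        if need > gpu_mem_gib:
            raise ValueError(f"{name} needs {need} GiB, more than one GPU offers")
        for i, room in enumerate(free):
            if need <= room:
                gpus[i].append(name)
                free[i] -= need
                break
        else:
            # No existing GPU has room, so allocate a new one.
            gpus.append([name])
            free.append(gpu_mem_gib - need)
    return gpus
```

With hypothetical footprints of 14, 10, 8, and 6 GiB and 24 GiB GPUs, the four models fit on two GPUs rather than four, which is the kind of saving that motivates co-hosting.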

Key takeaways

The team took away the following key lessons from optimizing models in SageMaker for future projects:

- Stay updated – It’s crucial to keep up with the latest inferencing engines and optimization techniques because these advancements significantly influence model optimization.

- Tailor optimization strategies – Model-specific optimization strategies like batching and quantization require careful handling and coordination, because each model might require a tailored approach.

- Implement cost-effective model hosting – You can optimize the allocation of limited GPU resources to control expenses. Techniques such as virtualization can be used to host multiple models on a single GPU, reducing costs.

- Keep pace with innovations – The field of model inferencing is rapidly evolving with technologies like Amazon SageMaker JumpStart and Amazon Bedrock. Developing strategies for adopting and integrating these technologies is imperative for future optimization efforts.

Conclusion

In this post, we shared how the Salesforce Einstein AI Platform team improved the latency and throughput of their code generation LLM using SageMaker, and saw an over 6,500 percent increase in throughput after optimization.

Looking to host your own LLMs on SageMaker? To get started, see this guide.

_______________________________________________________________________

About the Authors

Pawan Agarwal is the Senior Director of Software Engineering at Salesforce. He leads efforts in Generative and Predictive AI, focusing on inferencing, training, fine-tuning, and notebooking technologies that power the Salesforce Einstein suite of applications.

Rielah De Jesus is a Principal Solutions Architect at AWS who has successfully helped various enterprise customers in the DC, Maryland, and Virginia area move to the cloud. In her current role she acts as a customer advocate and technical advisor focused on helping organizations like Salesforce achieve success on the AWS platform. She is also a staunch supporter of Women in IT and is very passionate about finding ways to creatively use technology and data to solve everyday challenges.
