Image by author

Predicting the future is not magic; it's AI.

As we stand on the brink of the AI revolution, Python allows us to take part in it.

In this article, we will explore how you can use Python and machine learning to make predictions.

We'll start with the fundamentals and work our way up to applying algorithms to data to make a prediction. Let's begin!

What is machine learning?

Machine learning is a way of giving computers the ability to make predictions from data. It is extremely popular today; you probably use it daily without realizing it. Below are some technologies that benefit from machine learning:

- Autonomous cars

- Face detection systems

- Netflix's movie recommendation system

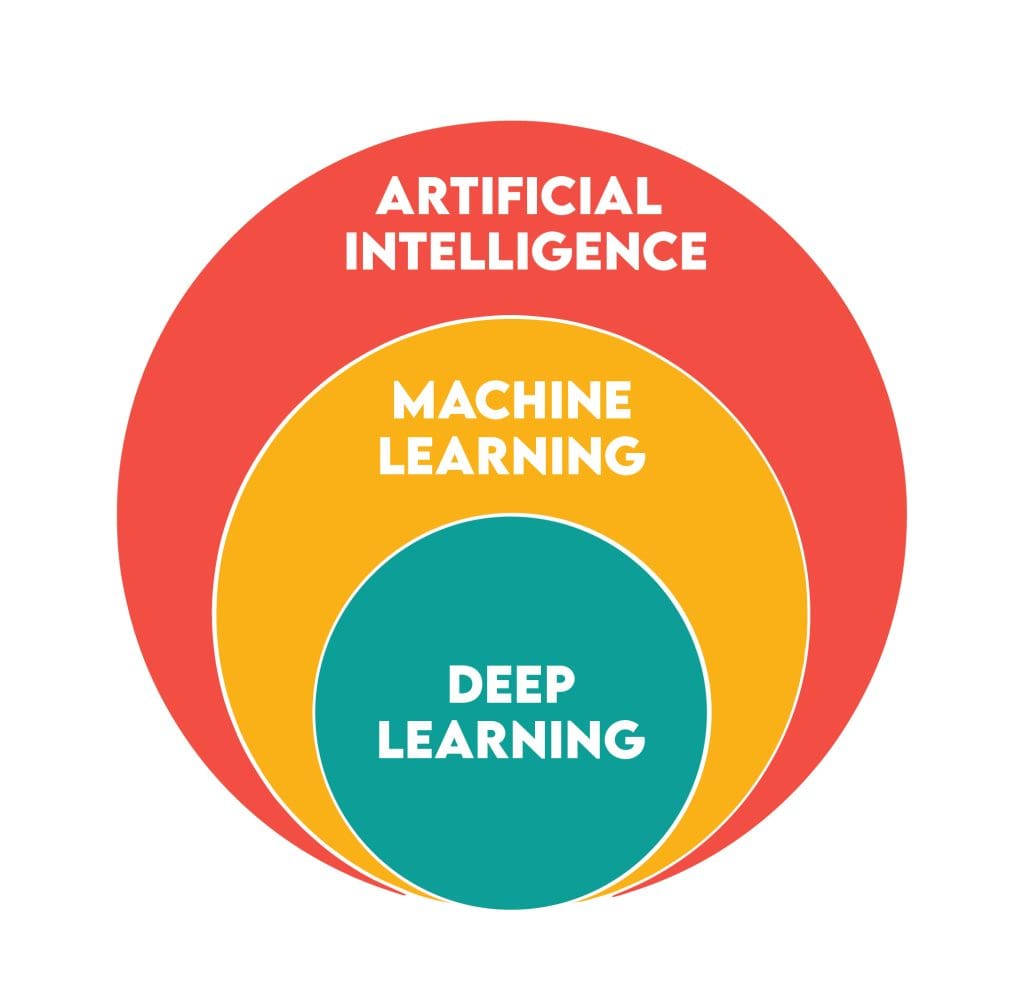

However, the terms AI, machine learning, and deep learning are often hard to tell apart.

Here's a diagram that shows how these terms relate to one another.

Classifying machine learning algorithms

Machine learning algorithms can be grouped in two different ways. The first is to check whether the data points have a "label". In this context, a label is the specific attribute or characteristic of the data points that you want to predict.

If there is a label, the algorithm is classified as supervised; otherwise, it is unsupervised.
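To make the distinction concrete, here is a minimal sketch on a made-up toy dataset (the arrays `X` and `y` are hypothetical). A supervised model such as `LinearRegression` is fit on both the features and the labels, while an unsupervised model such as `KMeans` receives only the features and groups similar points on its own.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.linear_model import LinearRegression

# Hypothetical toy data: six points with a single feature each
X = np.array([[1.0], [2.0], [3.0], [10.0], [11.0], [12.0]])

# Labels exist only in the supervised case; here each label is exactly 2 * x
y = 2 * X.ravel()

# Supervised: learn the mapping from features to labels, then predict a new label
reg = LinearRegression().fit(X, y)
supervised_pred = reg.predict(np.array([[4.0]]))

# Unsupervised: no labels are passed; KMeans groups the points into 2 clusters
km = KMeans(n_clusters=2, n_init=10, random_state=0).fit(X)
cluster_ids = km.labels_

print("Predicted label for x=4:", supervised_pred[0])
print("Cluster assignments:", cluster_ids)
```

The cluster numbers KMeans assigns are arbitrary IDs, not predictions of any label; only the grouping itself is meaningful.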

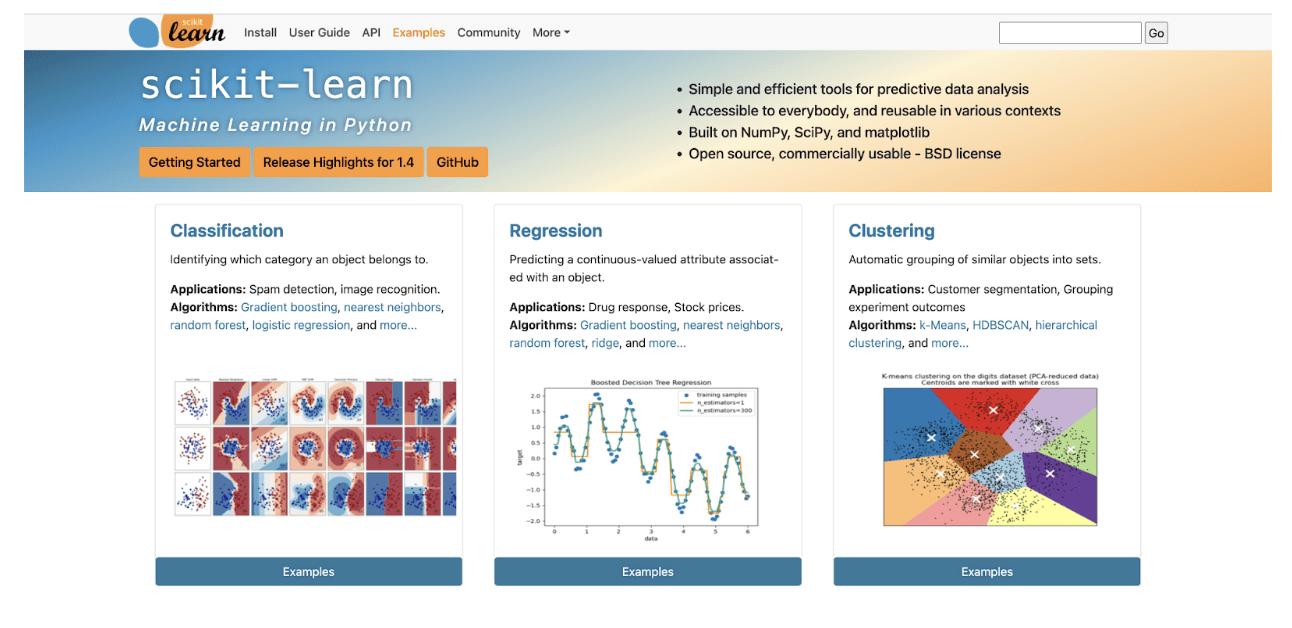

Another way to classify machine learning algorithms is by the type of task they perform. Grouped this way, they look like this, as scikit-learn presents them:

Image source: scikit-learn.org
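Among scikit-learn's task groups, classification predicts discrete categories while regression predicts continuous numbers. The sketch below, on hypothetical toy data (hours studied, pass/fail, and exam scores are all made up), fits one decision tree of each kind to the same features; `is_classifier` and `is_regressor` from `sklearn.base` report which group an estimator belongs to.

```python
import numpy as np
from sklearn.base import is_classifier, is_regressor
from sklearn.tree import DecisionTreeClassifier, DecisionTreeRegressor

# Hypothetical toy feature: hours studied
X = np.array([[1], [2], [3], [4], [5], [6]])

# Classification target: a discrete category (0 = fail, 1 = pass)
y_class = np.array([0, 0, 0, 1, 1, 1])
# Regression target: a continuous value (exam score)
y_reg = np.array([40.0, 45.0, 55.0, 65.0, 70.0, 80.0])

clf = DecisionTreeClassifier(random_state=0).fit(X, y_class)
reg = DecisionTreeRegressor(random_state=0).fit(X, y_reg)

# Which task group each fitted estimator belongs to
print(is_classifier(clf), is_regressor(reg))
# The classifier returns a category, the regressor returns a number
print(clf.predict([[5]]), reg.predict([[5]]))
```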

What is scikit-learn?

Scikit-learn is one of the most popular Python machine learning libraries, and we will use it in this article. With scikit-learn, you don't have to implement algorithms from scratch; instead, you can use its built-in estimators, which makes developing machine learning models much easier.

In this article, we will create a machine learning model using different regression algorithms from scikit-learn. Let's first explain regression.

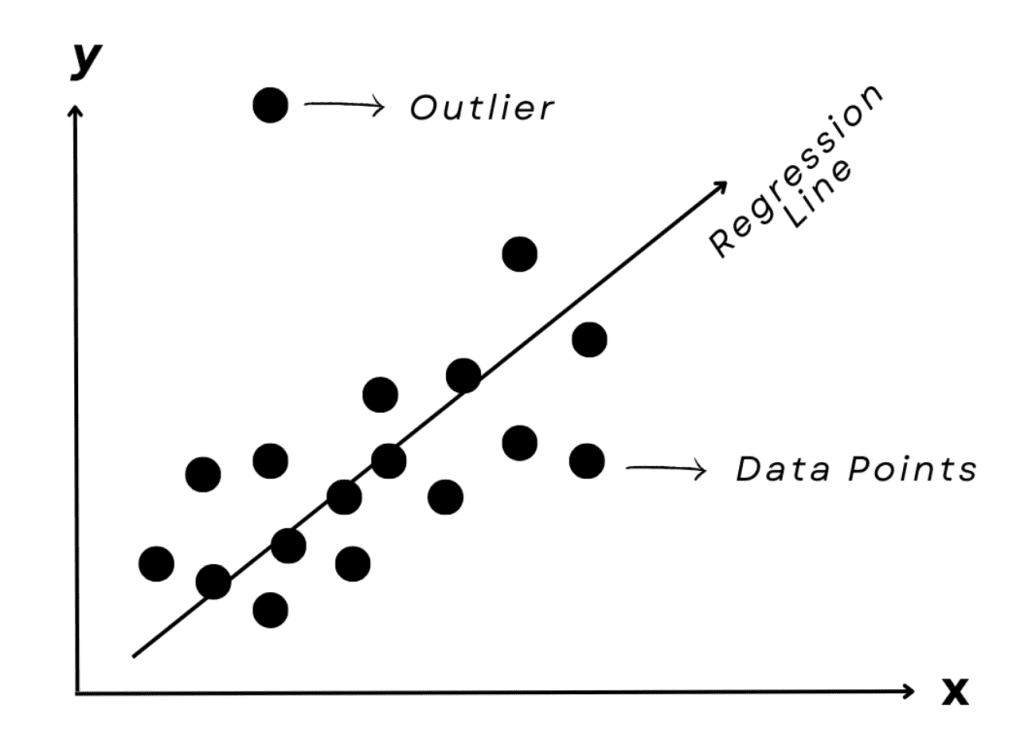

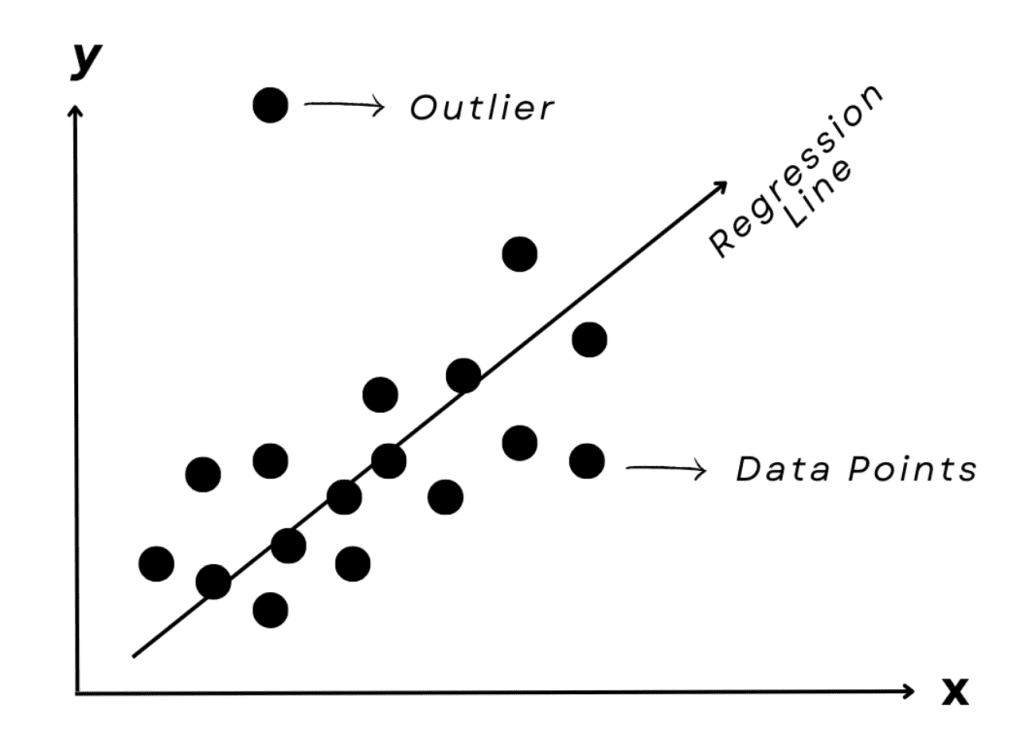

What is regression?

Regression is a type of supervised machine learning that predicts continuous values. Below are some real-life regression examples.

Before applying the regression models, let's look at three regression algorithms, each with a simple explanation:

- Multiple linear regression: Predicts the target as a linear combination of multiple predictor variables.

- Decision tree regressor: Builds a tree of decision rules to predict the value of a target variable from various input features.

- Support Vector Regression: Finds the line of best fit (or a hyperplane in higher dimensions) that keeps as many points as possible within a given distance of it.
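As a rough illustration of the first two descriptions, the sketch below uses synthetic data generated from a made-up rule, y = 3·x1 + 2·x2 + 1. Multiple linear regression should recover those weights as its coefficients, while a depth-limited decision tree can only output a small number of constant values, one per leaf.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.tree import DecisionTreeRegressor

rng = np.random.default_rng(0)

# Synthetic data: two predictors and a target that is an exact linear combination of them
X = rng.uniform(0, 10, size=(100, 2))
y = 3 * X[:, 0] + 2 * X[:, 1] + 1

# Multiple linear regression learns one weight per predictor plus an intercept
lin = LinearRegression().fit(X, y)
print("coefficients:", lin.coef_, "intercept:", lin.intercept_)

# A tree of depth 2 splits the input space into at most 4 regions
# and predicts a constant (the mean target of the region) in each one
tree = DecisionTreeRegressor(max_depth=2, random_state=0).fit(X, y)
print("distinct tree predictions:", np.unique(tree.predict(X)).size)
```

Real data is rarely an exact linear combination, so on the project's data the coefficients will only approximate the underlying relationship.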

Before applying machine learning, you typically follow a series of steps. These can vary, but most of the time they include:

- Data exploration and analysis

- Data manipulation

- Train-test split

- Building a machine learning model

- Data visualization

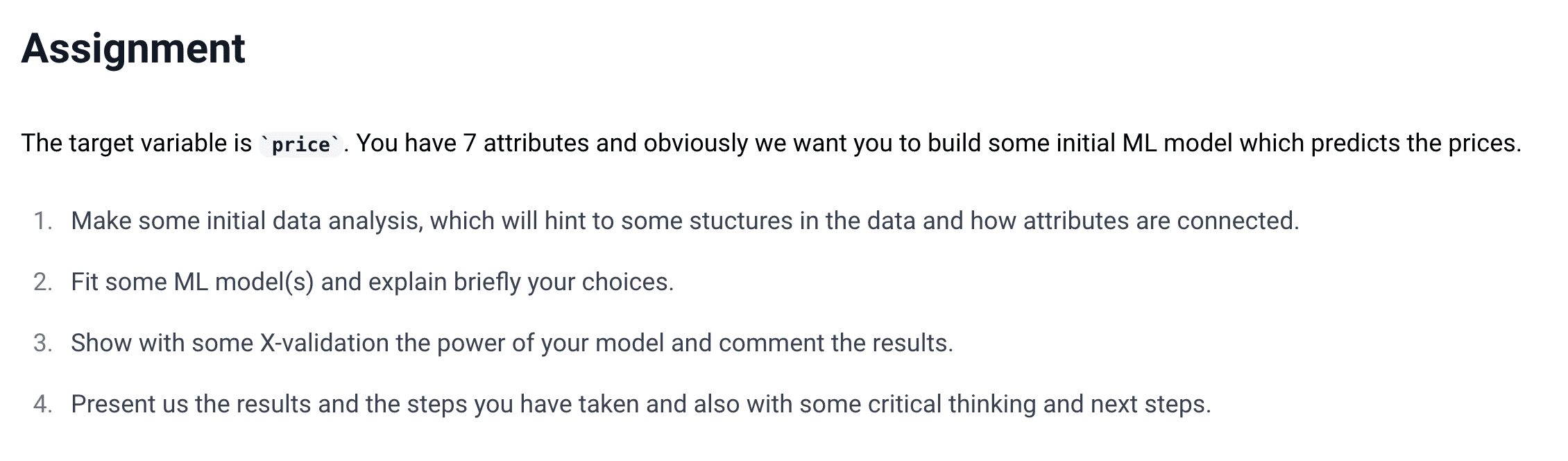

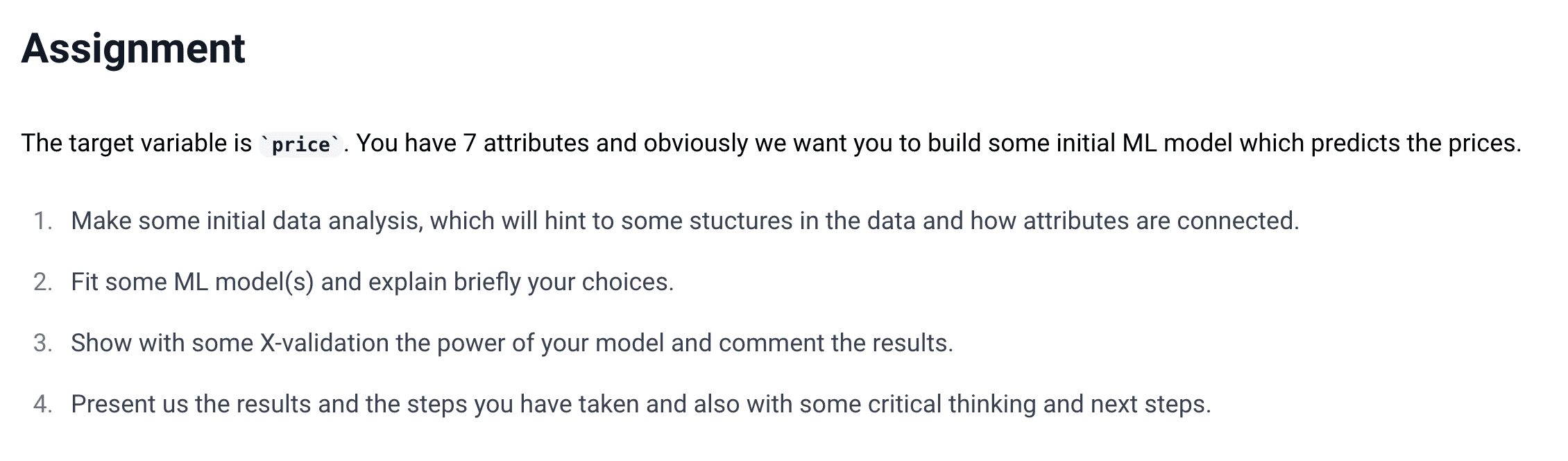

In this article, we'll use a data project from our platform to predict prices. You can find it here.

Data exploration and analysis

pandas provides several functions that help you become familiar with the data you are working with.

But first, you need to import the libraries we'll use.

```python
import pandas as pd
from sklearn.linear_model import LinearRegression
from sklearn.tree import DecisionTreeRegressor
from sklearn.svm import SVR
from sklearn.model_selection import train_test_split
from sklearn.metrics import mean_squared_error, r2_score
```

Great, let's load our data and explore it a little.

```python
data = pd.read_csv('path')
```

Replace `'path'` with the path to the file on your machine. pandas has three functions that will help you explore the data. Let's apply them one by one and look at the results.

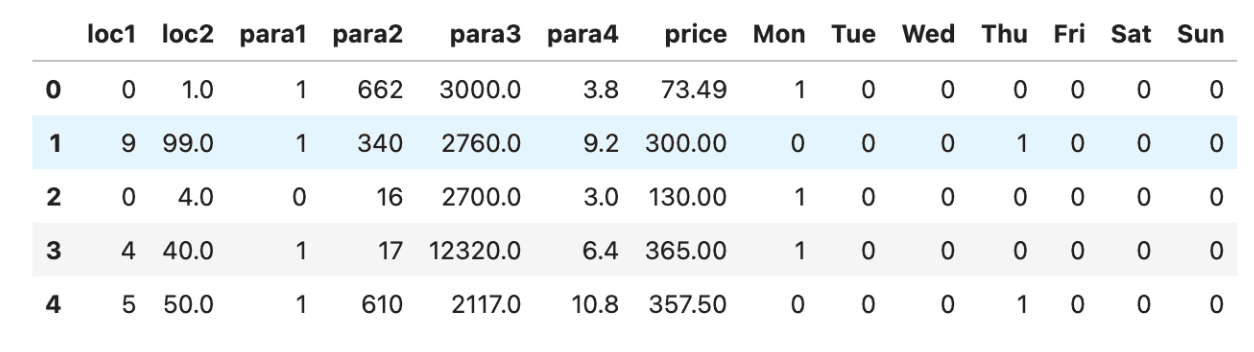

Here is the code to view the first five rows of our dataset: `data.head()`.

Here is the result.

Now let's look at the second function, `data.info()`, which shows information about the dataset's columns.

Here is the result.

```
RangeIndex: 10000 entries, 0 to 9999
Data columns (total 8 columns):
 #   Column  Non-Null Count  Dtype
---  ------  --------------  -------
 0   loc1    10000 non-null  object
 1   loc2    10000 non-null  object
 2   para1   10000 non-null  int64
 3   dow     10000 non-null  object
 4   para2   10000 non-null  int64
 5   para3   10000 non-null  float64
 6   para4   10000 non-null  float64
 7   price   10000 non-null  float64
dtypes: float64(3), int64(2), object(3)
memory usage: 625.1+ KB
```

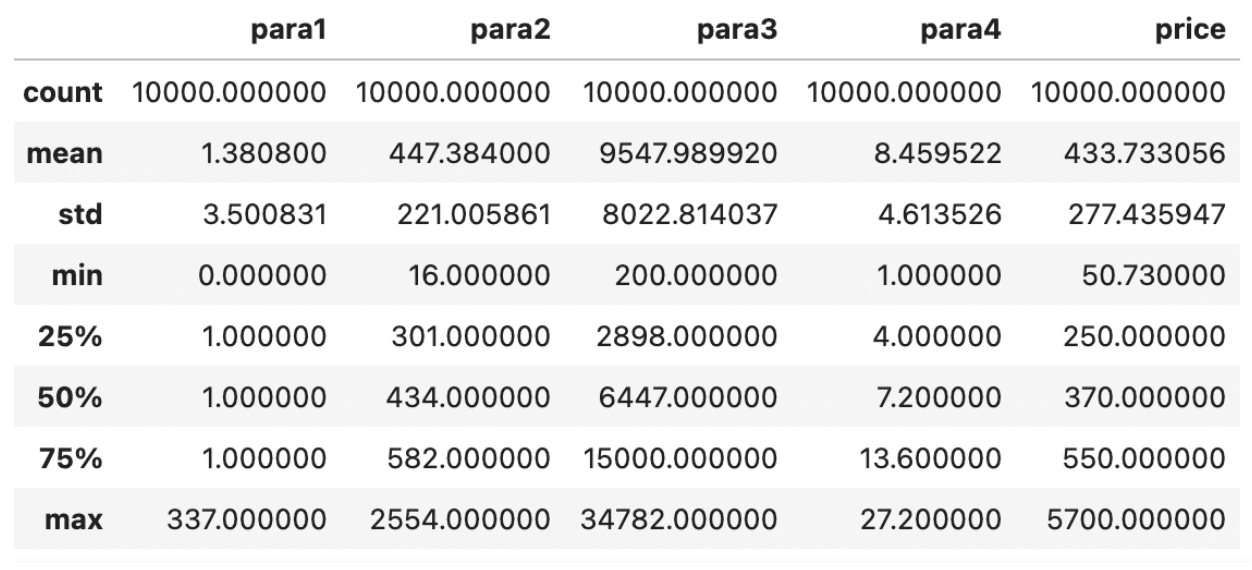

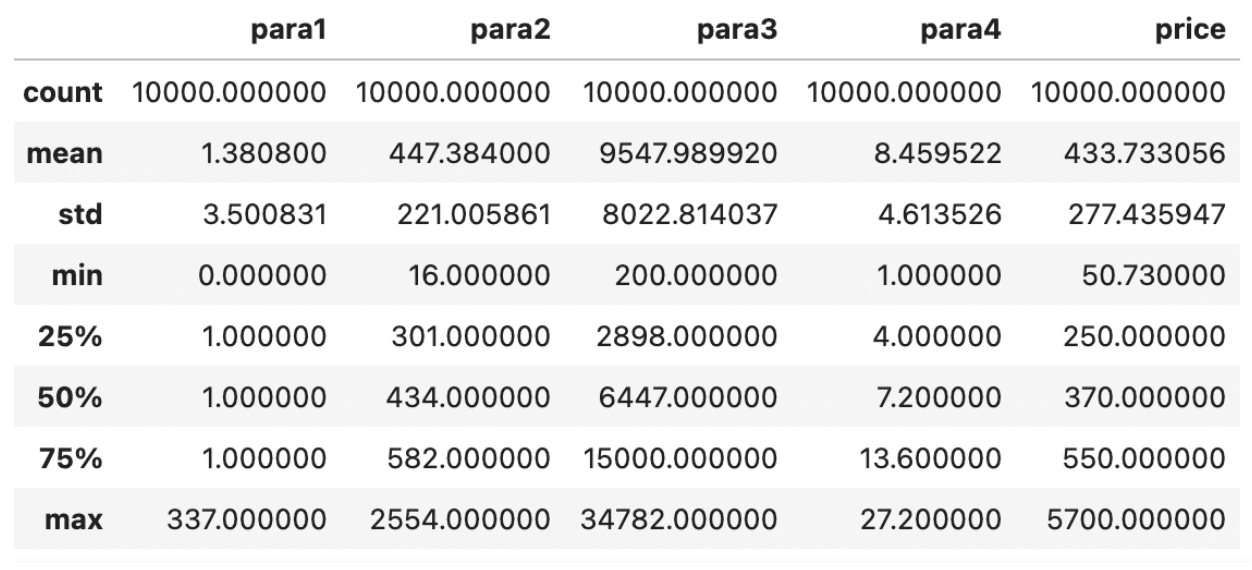

The last function, `data.describe()`, summarizes the numeric columns of our data statistically.

Here is the result.
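Because the project's dataset isn't reproduced here, the sketch below runs the same three functions on a small, made-up DataFrame that borrows a few of its column names (all values are invented). `head()` returns the first rows, `info()` prints column types and non-null counts, and `describe()` returns summary statistics for the numeric columns only.

```python
import pandas as pd

# Made-up stand-in for the project's data; only the column names mirror the article
data = pd.DataFrame({
    "loc1": ["2", "0", "1", "7", "S", "3"],
    "dow": ["Mon", "Tue", "Wed", "Thu", "Fri", "Sat"],
    "para1": [1, 0, 2, 1, 3, 0],
    "price": [310.5, 220.0, 415.2, 199.9, 500.1, 275.0],
})

first_rows = data.head()   # first 5 rows by default
data.info()                # prints dtypes and non-null counts (returns None)
summary = data.describe()  # count, mean, std, min, quartiles, max of numeric columns

print(first_rows)
print(summary)
```

Note that `loc1` and `dow` do not appear in the `describe()` output because they have the `object` dtype.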

You are now more familiar with our data. For the scikit-learn models we'll use, all predictor variables (that is, the columns you intend to use to make a prediction) must be numeric.

In the next section, we will make sure of that.

Data manipulation

We already know that we need to convert the "dow" (day of week) column into numbers, but first, let's check whether the other columns contain only numbers, since our machine learning models require it.

Two columns look suspicious: loc1 and loc2. As the output of the info() function shows, apart from dow, they are the only columns with the object data type, which can hold both numeric and string values.

Let's use this code to verify:

```python
data["loc1"].value_counts()
```

Here is the result.

```
loc1
2    1607
0    1486
1    1223
7    1081
3     945
5     846
4     773
8     727
9     690
6     620
S       1
T       1
Name: count, dtype: int64
```

loc1 contains two non-numeric values, "S" and "T", each appearing only once. The following code deletes those rows:

```python
data = data[(data["loc1"] != "S") & (data["loc1"] != "T")]
```

However, we also need to make sure that the other column, loc2, does not contain string values. The following code converts both columns to numbers:

```python
data["loc2"] = pd.to_numeric(data["loc2"], errors="coerce")
data["loc1"] = pd.to_numeric(data["loc1"], errors="coerce")
data.dropna(inplace=True)
```

At the end of the code above, we call dropna() because, with errors="coerce", pd.to_numeric() converts any value it cannot parse as a number into NaN; dropna() then removes those rows.
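To see this coerce-then-drop pattern in isolation, here is a minimal sketch on a hypothetical column with the same kind of problem (the values are made up). `pd.to_numeric(..., errors="coerce")` turns unparseable strings into `NaN`, and `dropna()` removes those rows.

```python
import pandas as pd

# Hypothetical object column mixing numeric strings with stray letters
df = pd.DataFrame({
    "loc2": ["4", "7", "T", "1", "S"],
    "price": [10.0, 20.0, 30.0, 40.0, 50.0],
})

print(df["loc2"].value_counts())  # reveals the non-numeric entries "S" and "T"

# Unparseable strings become NaN instead of raising an error
df["loc2"] = pd.to_numeric(df["loc2"], errors="coerce")
print(df["loc2"].isna().sum())  # number of values that failed to convert

# Drop the rows that could not be converted; the NaNs had forced a float dtype,
# so cast the column back to int now that they are gone
df = df.dropna()
df = df.astype({"loc2": int})
print(df)
```

Casting back to `int` is optional for the models; it just restores the integer dtype the column would have had without the stray strings.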

Excellent. With that problem solved, let's convert the day-of-week column into numbers. Here is the code to do that:

```python
# Assuming data is already loaded and the 'dow' column contains day names
# Map 'dow' to numeric codes
days_of_week = {'Mon': 1, 'Tue': 2, 'Wed': 3, 'Thu': 4, 'Fri': 5, 'Sat': 6, 'Sun': 7}
data['dow'] = data['dow'].map(days_of_week)

# Invert the days_of_week dictionary (number -> day name)
week_days = {v: k for k, v in days_of_week.items()}

# One-hot encode the day codes, rename the columns back to day names,
# and convert the boolean dummy columns to integers (0/1)
dow_dummies = pd.get_dummies(data['dow']).rename(columns=week_days).astype(int)

# Drop the original 'dow' column
data.drop('dow', axis=1, inplace=True)

# Concatenate the dummy variables
data = pd.concat([data, dow_dummies], axis=1)

data.head()
```

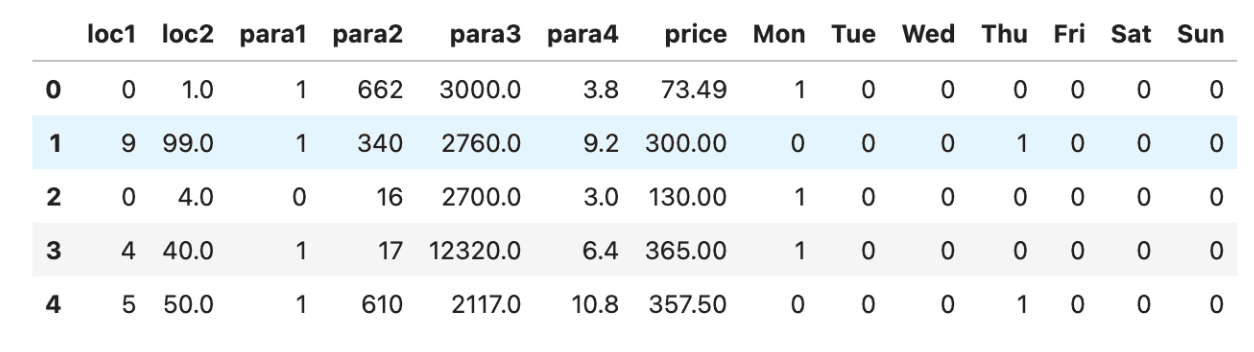

In this code, we first map each day name to a number using a dictionary. We then one-hot encode those numbers into one 0/1 column per day (renamed back to the day names) and replace the original dow column with these new columns. Here is the result.
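Here is the same encoding applied to a hypothetical three-row frame so you can see the resulting columns (the rows are made up). Note that only days that actually appear in the data get a column. The last step shows a shorter alternative: calling `pd.get_dummies` on the day names directly, which sorts the new columns alphabetically rather than Monday to Sunday.

```python
import pandas as pd

# Hypothetical rows; only the 'dow' column matters here
data = pd.DataFrame({"dow": ["Mon", "Wed", "Mon"], "price": [100.0, 150.0, 120.0]})

days_of_week = {"Mon": 1, "Tue": 2, "Wed": 3, "Thu": 4, "Fri": 5, "Sat": 6, "Sun": 7}
week_days = {v: k for k, v in days_of_week.items()}

# Same steps as the article: names -> numbers -> one 0/1 column per day present
codes = data["dow"].map(days_of_week)
dow_dummies = pd.get_dummies(codes).rename(columns=week_days).astype(int)
encoded = pd.concat([data.drop("dow", axis=1), dow_dummies], axis=1)
print(encoded)

# Shorter alternative: one-hot encode the names directly (columns sorted alphabetically)
direct = pd.get_dummies(data["dow"]).astype(int)
print(direct)
```

Keeping every day column makes them sum to 1 in each row, which is redundant with a linear model's intercept (the so-called dummy variable trap). If that matters for your analysis, `pd.get_dummies` also accepts `drop_first=True`.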

Now we're almost there.

Train-test split

Before applying a machine learning model, you should split your data into training and test sets. This lets you evaluate your model objectively: you train it on the training set and then measure its performance on the test set, which the model has not seen before.
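As a toy illustration of what the split does, here is a sketch on a hypothetical 10-row dataset (the arrays are made up). With `test_size=0.2`, `train_test_split` holds out 20% of the rows (here 2) for testing, and fixing `random_state` makes the shuffle reproducible.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical dataset: 10 rows, 2 features, and a target
X = np.arange(20).reshape(10, 2)
y = np.arange(10)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)
print(X_train.shape, X_test.shape)

# Splitting again with the same random_state yields the identical partition
_, X_test_again, _, _ = train_test_split(X, y, test_size=0.2, random_state=42)
```

Every row ends up in exactly one of the two sets, so the test rows are never used for training.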

```python
X = data.drop('price', axis=1)  # Assuming 'price' is the target variable
y = data['price']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)
```

Building a machine learning model

Now everything is ready. At this stage, we will apply the following algorithms all at once.

- Multiple linear regression

- Decision tree regression

- Support Vector Regression

If you are a beginner, this code may seem complicated, but rest assured it is not. In the code, we first create a dictionary called models that maps each model name to its scikit-learn estimator.

Next, we create an empty dictionary called results to store the evaluation results. In the first loop, we train each model in turn and evaluate it on the test set using R² and the mean squared error (MSE), two metrics that measure how well a regression model performs.

In the final loop, we print the results we have saved. Here is the code:

```python
# Initialize the models
models = {
    "Multiple Linear Regression": LinearRegression(),
    "Decision Tree Regression": DecisionTreeRegressor(random_state=42),
    "Support Vector Regression": SVR(),
}

# Dictionary to store the results
results = {}

# Fit the models and evaluate
for name, model in models.items():
    model.fit(X_train, y_train)  # Train the model
    y_pred = model.predict(X_test)  # Predict on the test set

    # Calculate performance metrics
    mse = mean_squared_error(y_test, y_pred)
    r2 = r2_score(y_test, y_pred)

    # Store results
    results[name] = {'MSE': mse, 'R^2 Score': r2}

# Print the results
for model_name, metrics in results.items():
    print(f"{model_name} - MSE: {metrics['MSE']}, R^2 Score: {metrics['R^2 Score']}")
```

Here is the result.

```
Multiple Linear Regression - MSE: 35143.23011545407, R^2 Score: 0.5825954700994046
Decision Tree Regression - MSE: 44552.00644904675, R^2 Score: 0.4708451884787034
Support Vector Regression - MSE: 73965.02477382126, R^2 Score: 0.12149975134965318
```
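To make these metrics concrete, here is a hand computation on a handful of made-up values, checked against scikit-learn's functions. MSE is the average squared difference between actual and predicted values. R² is 1 minus the ratio of the residual sum of squares to the total sum of squares around the mean, so an R² of about 0.58, like the linear model's above, means it explains roughly 58% of the variance in the test prices. RMSE, used in the next section, is simply the square root of MSE, expressed in the same units as the target.

```python
import numpy as np
from sklearn.metrics import mean_squared_error, r2_score

# Made-up actual and predicted values
y_true = np.array([3.0, 5.0, 7.0, 9.0])
y_pred = np.array([2.5, 5.5, 7.0, 8.0])

# MSE: mean of the squared residuals
mse_manual = np.mean((y_true - y_pred) ** 2)

# R^2: 1 - (residual sum of squares / total sum of squares around the mean)
ss_res = np.sum((y_true - y_pred) ** 2)
ss_tot = np.sum((y_true - y_true.mean()) ** 2)
r2_manual = 1 - ss_res / ss_tot

# RMSE: square root of MSE, in the target's own units
rmse_manual = np.sqrt(mse_manual)

# The manual values should match scikit-learn's implementations
print(mse_manual, mean_squared_error(y_true, y_pred))
print(r2_manual, r2_score(y_true, y_pred))
print(rmse_manual)
```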

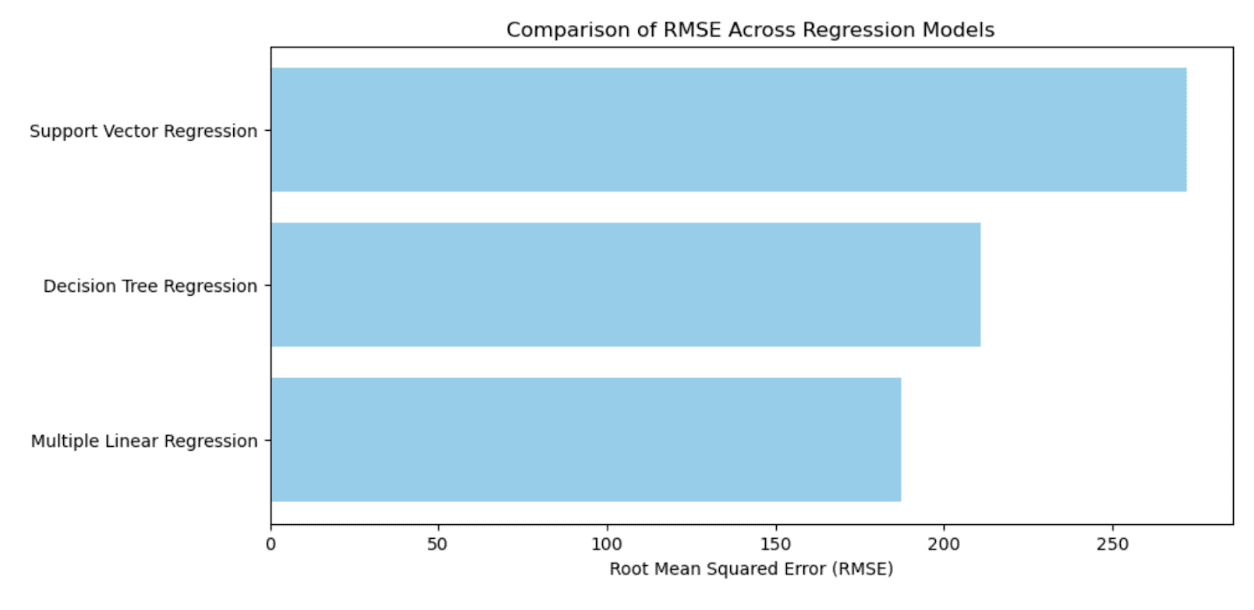

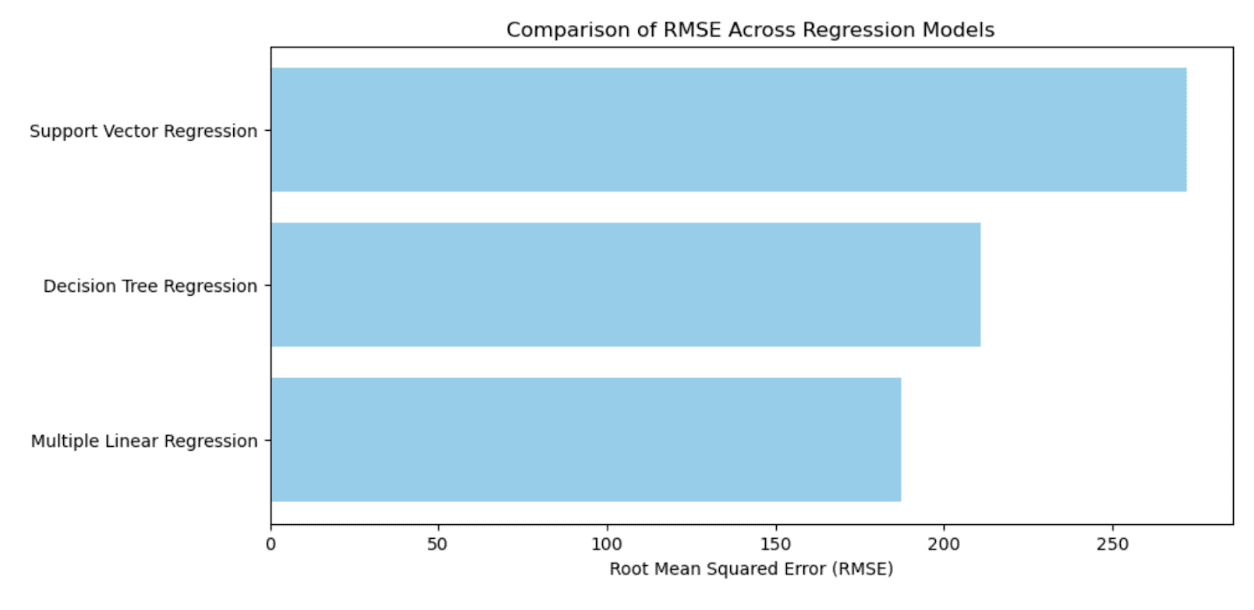

Data visualization

To compare the models more easily, let's visualize the results.

In the following code, we first calculate the root mean squared error (RMSE, the square root of the MSE) for each model and then plot it as a horizontal bar chart.

```python
import matplotlib.pyplot as plt
from math import sqrt

# Calculate RMSE for each model from the stored MSE and prepare for plotting
rmse_values = [sqrt(metrics['MSE']) for metrics in results.values()]
model_names = list(results.keys())

# Create a horizontal bar graph for RMSE
plt.figure(figsize=(10, 5))
plt.barh(model_names, rmse_values, color="skyblue")
plt.xlabel('Root Mean Squared Error (RMSE)')
plt.title('Comparison of RMSE Across Regression Models')
plt.show()
```

Here is the result.

Data projects

Before we finish, here are some data projects to get you started.

Also, if you want to work on projects with interesting datasets, here are some datasets you might find interesting:

Conclusion

Our results could be better, since there are many more steps you can take to improve a model's performance, but this is a great start. Check the official scikit-learn documentation to see what else you can do.
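As one example of such a step, offered as a hedged suggestion rather than a result tested on this dataset: SVR is sensitive to the scale of its inputs, and its default `epsilon=0.1` is measured in the target's units, so it is commonly paired with standardization. The sketch below uses synthetic data from `make_regression` (not the project's data), inflates one feature to mimic mismatched column scales, and compares plain `SVR` with a version that standardizes the features through `make_pipeline` and the target through `TransformedTargetRegressor`. Both scalers are fit on the training data only.

```python
from sklearn.compose import TransformedTargetRegressor
from sklearn.datasets import make_regression
from sklearn.metrics import r2_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVR

# Synthetic stand-in data; one feature is inflated to mimic mismatched column scales
X, y = make_regression(n_samples=300, n_features=3, n_informative=3, noise=10.0, random_state=0)
X[:, 0] *= 1000
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Plain SVR on raw features and raw target
unscaled = SVR().fit(X_train, y_train)

# Standardize the features (inside the pipeline) and the target (via TransformedTargetRegressor)
scaled = TransformedTargetRegressor(
    regressor=make_pipeline(StandardScaler(), SVR()),
    transformer=StandardScaler(),
)
scaled.fit(X_train, y_train)

scores = {
    "unscaled": r2_score(y_test, unscaled.predict(X_test)),
    "scaled": r2_score(y_test, scaled.predict(X_test)),
}
print(scores)
```

Whether scaling closes the gap on the project's data would need to be checked; tuning `C` and `epsilon` is another common next step.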

Of course, after learning the basics, you need to keep doing data projects to improve your skills and learn more.

Nate Rosidi (twitter.com/StrataScratch) is a data scientist and works in product strategy. He is also an adjunct professor of analytics and the founder of StrataScratch, a platform that helps data scientists prepare for their interviews with real questions from top companies. Nate writes about the latest trends in the career market, gives interview advice, shares data science projects, and covers everything SQL.
