Image by author

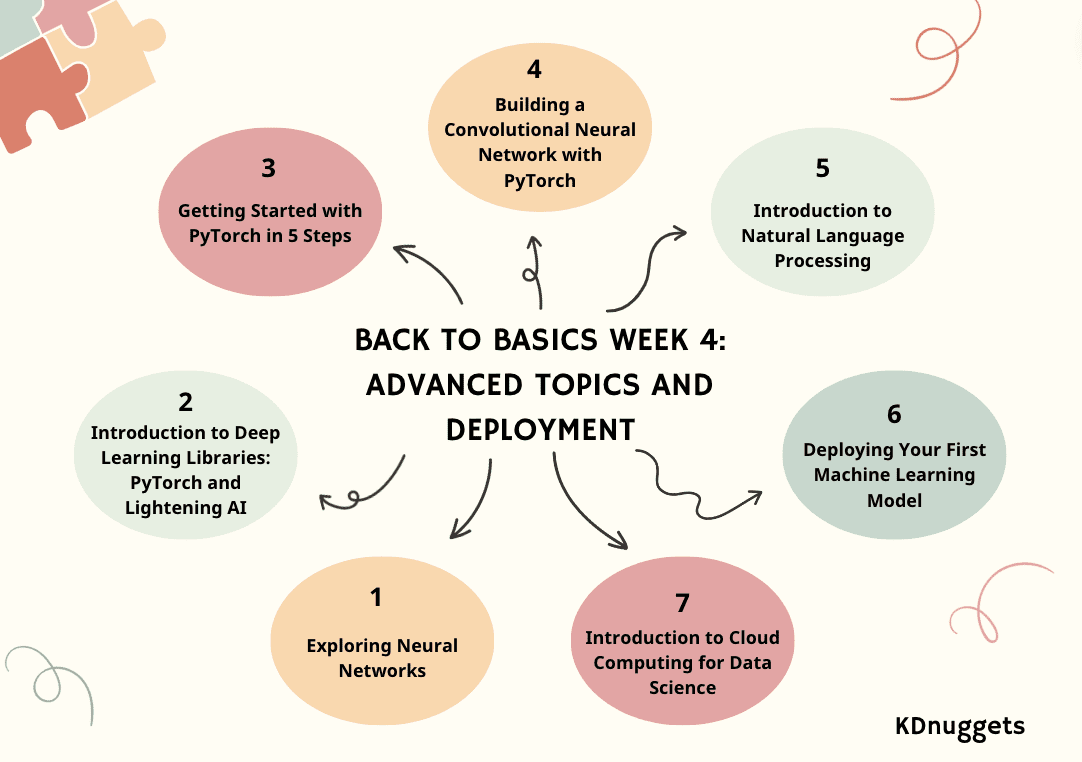

Join KDnuggets on our Back to Basics pathway to kick-start a new career or brush up on your data science skills. The Back to Basics pathway is split into 4 weeks, plus a bonus week. We hope you can use these blogs as a course guide.

Moving on to the fourth week, we will dive into advanced topics and deployment.

- Day 1: Exploring neural networks

- Day 2: Introduction to Deep Learning Libraries: PyTorch and Lightning AI

- Day 3: Introduction to PyTorch in 5 steps

- Day 4: Building a convolutional neural network with PyTorch

- Day 5: Introduction to natural language processing

- Day 6: Deploy your first machine learning model

- Day 7: Introduction to Cloud Computing for Data Science

Week 4 – Part 1: Exploring neural networks

Unlocking the Power of AI: A Guide to Neural Networks and Their Applications.

Imagine a machine that thinks, learns and adapts like the human brain and discovers hidden patterns within data.

This technology is the neural network (NN), a family of algorithms that mimic cognition. We will explore what NNs are and how they work later.

In this article, I will explain the fundamentals of neural networks (NN): their structure, their types, their real-life applications, and the key terms that define how they work.
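To make the idea of "structure" a little more concrete, here is a minimal sketch of a forward pass through a tiny fully connected network, written in plain Python. It is not taken from the linked article: the layer sizes, the sigmoid activation, and every weight and bias value are hypothetical choices made purely for illustration.

```python
import math


def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def dense_layer(inputs, weights, biases):
    """One fully connected layer: a weighted sum per neuron, then an activation."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        weighted_sum = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(sigmoid(weighted_sum))
    return outputs


def forward(inputs, layers):
    """Pass the inputs through each (weights, biases) layer in turn."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


# Hypothetical, hand-picked parameters: 2 inputs -> 2 hidden neurons -> 1 output.
hidden = ([[0.5, -0.6], [0.3, 0.8]], [0.1, -0.2])
output = ([[1.2, -0.7]], [0.05])

prediction = forward([1.0, 0.0], [hidden, output])
print(prediction)  # a single value between 0 and 1
```

In a real network the weights are not picked by hand. They are learned from data during training, which is the part that libraries such as PyTorch automate.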

Week 4 – Part 2: Introduction to Deep Learning Libraries: PyTorch and Lightning AI

A simple explanation of PyTorch and Lightning AI.

Deep learning is a branch of machine learning based on neural networks. In other machine learning models, processing the data to find meaningful features is often done manually or relies on domain expertise. Deep learning, however, can mimic the human brain to discover the essential features by itself, increasing model performance.

There are many applications for deep learning models, including facial recognition, fraud detection, speech-to-text, text generation, and many more. Deep learning has become a standard approach in many advanced machine learning applications, and we have nothing to lose by learning about it.

To develop these deep learning models, there are several library frameworks we can rely on rather than working from scratch. In this article, we will discuss two libraries we can use to develop deep learning models: PyTorch and Lightning AI.

Week 4 – Part 3: Introduction to PyTorch in 5 steps

This tutorial provides a detailed introduction to machine learning using PyTorch and its high-level wrapper, PyTorch Lightning. The article covers essential steps from installation to advanced topics, offers a practical approach to building and training neural networks, and emphasizes the benefits of using Lightning.

PyTorch is a popular open-source machine learning framework based on Python and optimized for GPU-accelerated computing. Originally developed by Meta AI in 2016 and now part of the Linux Foundation, PyTorch has quickly become one of the most widely used frameworks for deep learning research and applications.

PyTorch Lightning is a lightweight wrapper built on top of PyTorch that further simplifies the researcher’s workflow and model development process. With Lightning, data scientists can focus more on designing models rather than boilerplate code.

Week 4 – Part 4: Building a Convolutional Neural Network with PyTorch

This blog post provides a tutorial on building a convolutional neural network for image classification in PyTorch, leveraging convolutional and pooling layers for feature extraction, as well as fully connected layers for prediction.

A convolutional neural network (CNN or ConvNet) is a deep learning algorithm designed specifically for tasks where object recognition is crucial, such as image classification, detection, and segmentation. CNNs can achieve state-of-the-art accuracy in complex vision tasks, powering many real-life applications such as surveillance systems, warehouse management, and more.

As humans, we can easily recognize objects in images by analyzing patterns, shapes, and colors. CNNs can also be trained to perform this recognition, learning which patterns are important for differentiation. For example, when we try to distinguish between a photo of a cat and one of a dog, our brain focuses on unique shapes, textures, and facial features. A CNN learns to capture these same types of distinctive features. Even for very fine-grained categorization tasks, CNNs can learn complex feature representations directly from pixels.
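As a rough illustration of what convolutional and pooling layers compute, the sketch below implements both operations in plain Python on a toy image. It is not the tutorial's PyTorch code: the function names, the 5x5 image, and the hand-written edge-detecting kernel are assumptions chosen for illustration. A real CNN learns its kernel values during training rather than having them specified by hand.

```python
def convolve2d(image, kernel):
    """Slide a square kernel over the image (no padding, stride 1)."""
    k = len(kernel)
    out_h = len(image) - k + 1
    out_w = len(image[0]) - k + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(k) for j in range(k))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def max_pool2d(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    rows = range(0, len(feature_map) - size + 1, size)
    cols = range(0, len(feature_map[0]) - size + 1, size)
    return [
        [
            max(feature_map[r + i][c + j] for i in range(size) for j in range(size))
            for c in cols
        ]
        for r in rows
    ]


# Toy 5x5 grayscale image: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Hand-written kernel that responds where brightness increases left to right.
kernel = [[-1, 1], [-1, 1]]

feature_map = convolve2d(image, kernel)  # 4x4 map, large where the edge sits
pooled = max_pool2d(feature_map)         # 2x2 summary of the strongest responses

print(feature_map)
print(pooled)
```

The feature map is non-zero only in the column where the dark region meets the bright one, and pooling keeps that signal while shrinking the map. Stacking many such learned filters is what lets a CNN build up from edges to shapes to whole objects.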

Week 4 – Part 5: Introduction to Natural Language Processing

An overview of natural language processing (NLP) and its applications.

We hear a lot about ChatGPT and large language models (LLMs). Natural language processing has long been an interesting topic, and it is currently taking the technology and artificial intelligence world by storm. Yes, LLMs like ChatGPT have helped the field grow, but wouldn’t it be nice to understand where it all comes from? So let’s get back to basics: NLP.

NLP is a subfield of artificial intelligence concerned with a computer’s ability to detect and understand human language, through speech and text, just as humans do. NLP helps models process, understand, and generate human language.

The goal of NLP is to bridge the communication gap between humans and computers. NLP models are typically trained on tasks such as next word prediction, allowing them to build contextual dependencies and then generate relevant results.
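Next-word prediction can be sketched with something far simpler than an LLM. The example below is a minimal bigram model in plain Python: it counts which word follows which in a tiny corpus and predicts the most frequent follower. The corpus and the function names are hypothetical, and this stands in for the idea only. Modern NLP models use neural networks that consider much more context than the single previous word.

```python
from collections import Counter, defaultdict


def train_bigram_model(sentences):
    """Count, for every word, how often each other word follows it."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current_word, next_word in zip(words, words[1:]):
            counts[current_word][next_word] += 1
    return counts


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus, used for illustration only.
corpus = [
    "the cat sat on the mat",
    "the cat chased the mouse",
    "the dog sat on the rug",
]

model = train_bigram_model(corpus)
print(predict_next(model, "the"))  # "cat" follows "the" most often in this corpus
print(predict_next(model, "sat"))  # "on"
```

Even this crude model picks up a contextual dependency: "on" is predicted after "sat" because that pairing appears repeatedly in the training text.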

Week 4 – Part 6: Deploying your first machine learning model

With just 3 easy steps, you can create and deploy a glass classification model faster than you can say… glass classification model!

In this tutorial, we will learn how to build a simple multi-class classification model using the Glass Classification dataset. Our goal is to develop and deploy a web application that can predict various types of glass, such as:

- Building windows, float processed

- Building windows, non-float processed

- Vehicle windows, float processed

- Vehicle windows, non-float processed (missing from the dataset)

- Containers

- Tableware

- Headlamps

Additionally, we will learn about:

- Skops – Share your scikit-learn based models and put them into production.

- Gradio – Machine Learning Web Application Framework.

- HuggingFace Spaces – Free machine learning model and app hosting platform.

By the end of this tutorial, you will have hands-on experience building, training, and deploying a basic machine learning model as a web application.

Week 4 – Part 7: Introduction to Cloud Computing for Data Science

The power duo of modern technology.

In today’s world, two major forces have emerged that are changing the rules of the game: data science and cloud computing.

Imagine a world where colossal amounts of data are generated every second. Well… you don’t have to imagine it… It’s our world!

From social media interactions to financial transactions, from health records to e-commerce preferences, data is everywhere.

But what good is this data if we can’t extract value from it? That’s exactly what data science does.

And where do we store, process and analyze this data? That’s where cloud computing shines.

Embark on a journey to understand the intertwined relationship between these two technological wonders. Let’s (try to) discover it all together!

Congratulations on completing week 4!!

The KDnuggets team hopes that the Back to Basics path has provided readers with a comprehensive and structured approach to mastering the fundamentals of data science.

The bonus week will be posted next Monday. Stay tuned!

Nisha Arya is a data scientist and freelance technical writer. She is particularly interested in providing data science career advice, tutorials, and theory-based insights into data science. She also wants to explore the different ways in which artificial intelligence can benefit the longevity of human life. She is a keen learner looking to broaden her technical knowledge and writing skills while helping to guide others.