Today, we are pleased to announce the availability of Meta Llama 3 inference on AWS Trainium and AWS Inferentia-based instances in Amazon SageMaker JumpStart. Meta Llama 3 models are a collection of pre-trained and fine-tuned generative text models. Amazon Elastic Compute Cloud (Amazon EC2) Trn1 and Inf2 instances, powered by AWS Trainium and AWS Inferentia2, provide the most cost-effective way to deploy Llama 3 models on AWS. They offer up to 50% lower deployment cost than comparable Amazon EC2 instances. They not only reduce the time and expense involved in training and deploying large language models (LLMs), but also give developers easier access to high-performance accelerators to meet the scalability and efficiency needs of real-time applications, such as chatbots and AI assistants.

In this post, we demonstrate how easy it is to deploy Llama 3 on AWS Trainium and AWS Inferentia-based instances in SageMaker JumpStart.

Meta Llama 3 Model in SageMaker Studio

SageMaker JumpStart provides access to publicly available and proprietary foundation models (FMs). Foundation models are onboarded and maintained from third-party and proprietary providers. As such, they are released under different licenses as designated by the model source. Be sure to check the license of any FM you use. You are responsible for reviewing and complying with the applicable license terms and ensuring that they are acceptable for your use case before downloading or using the content.

You can access the Meta Llama 3 FMs through SageMaker JumpStart in the Amazon SageMaker Studio console and the SageMaker Python SDK. In this section, we go over how to discover the models in SageMaker Studio.

SageMaker Studio is an integrated development environment (IDE) that provides a single, web-based visual interface where you can access purpose-built tools to perform all machine learning (ML) development steps, from preparing data to building, training, and deploying your ML models. For more details on how to get started and set up SageMaker Studio, see Getting Started with SageMaker Studio.

In the SageMaker Studio console, you can access SageMaker JumpStart by choosing JumpStart in the navigation pane. If you are using SageMaker Studio Classic, see Open and use JumpStart in Studio Classic to navigate to the SageMaker JumpStart models.

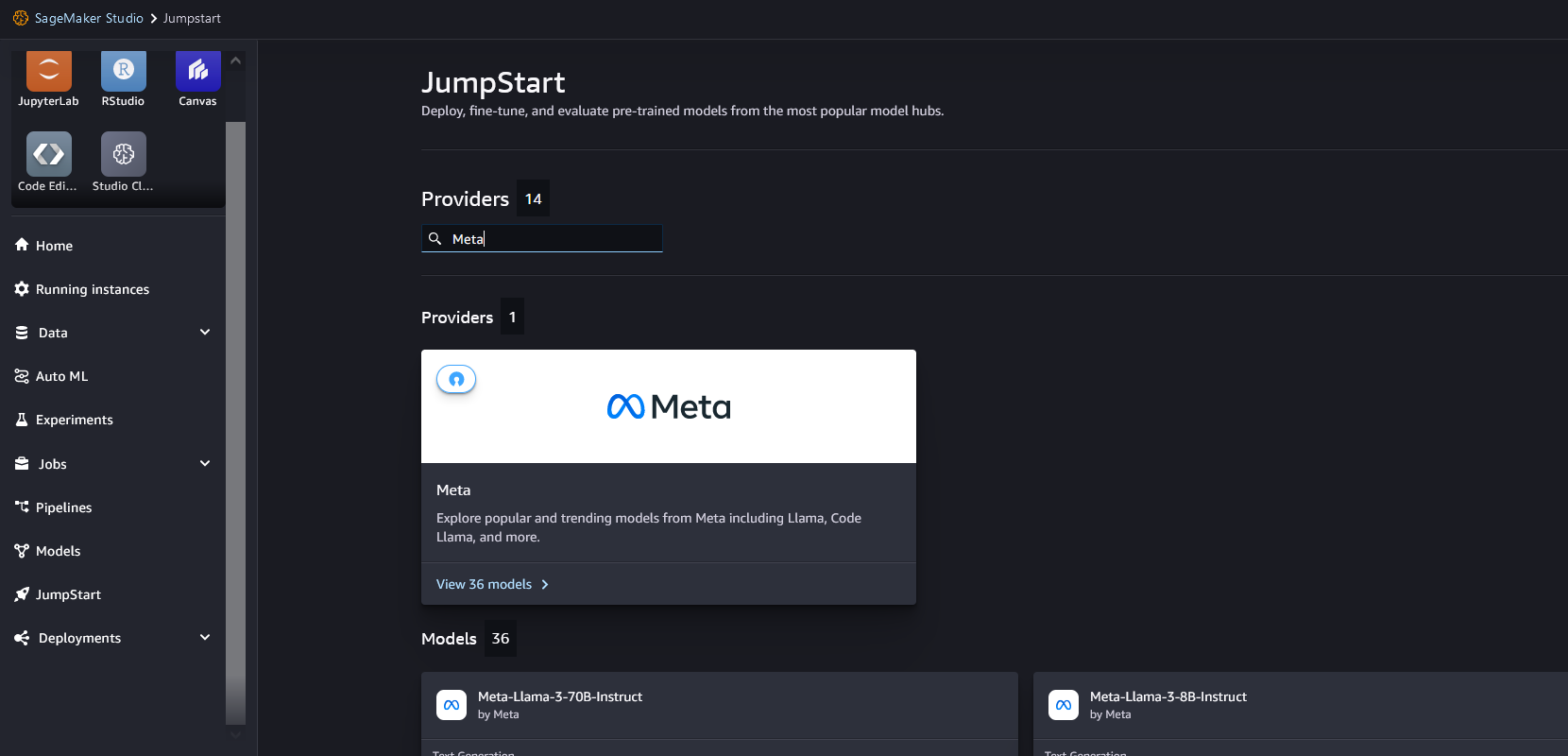

From the SageMaker JumpStart home page, you can search for “Meta” in the search box.

Choose the Meta model card to list all Meta models in SageMaker JumpStart.

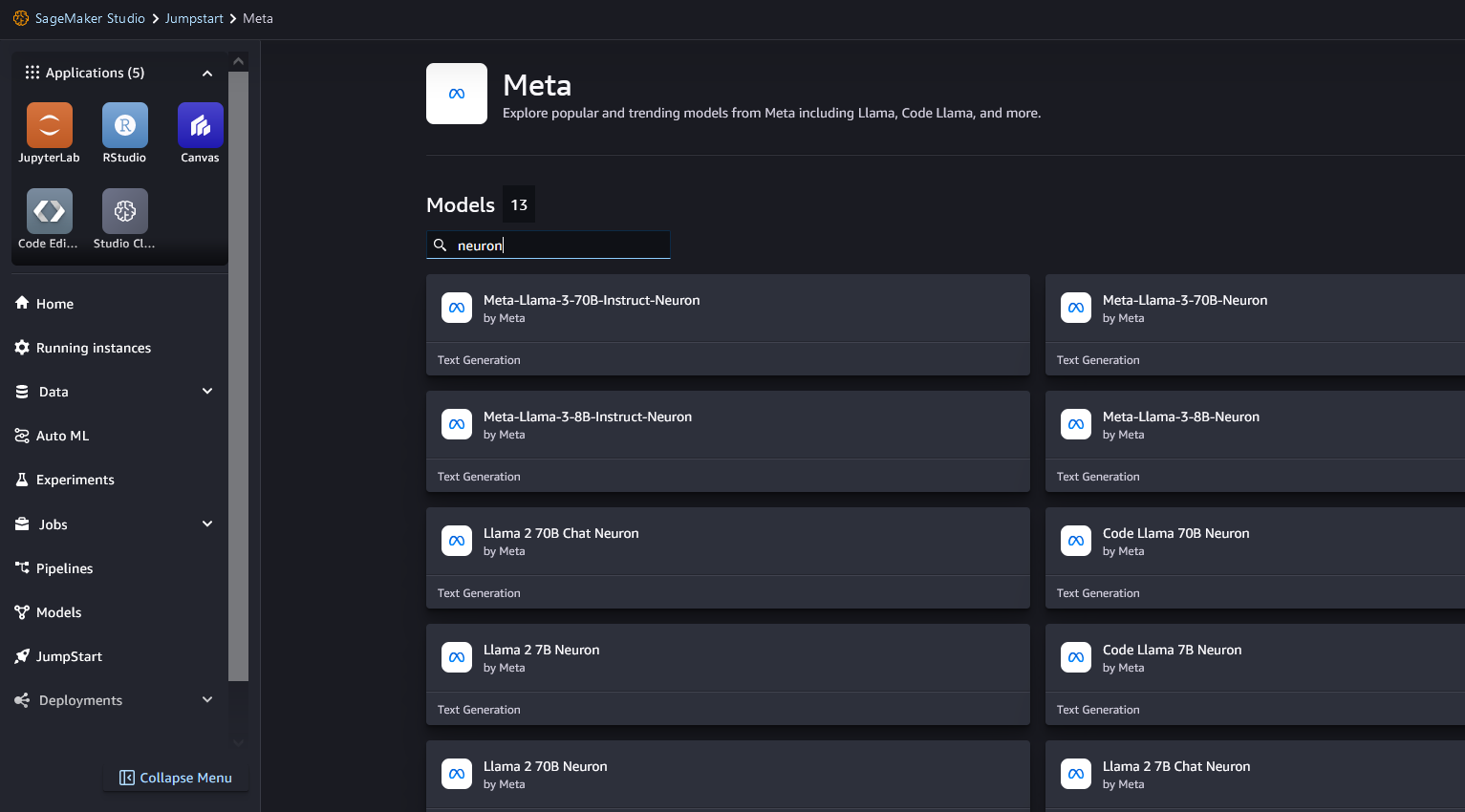

You can also find relevant model variants by searching for “neuron”. If you do not see the Meta Llama 3 models, update your version of SageMaker Studio by shutting down and restarting SageMaker Studio.

No-code deployment of the Llama 3 Neuron model in SageMaker JumpStart

You can choose the model card to view details about the model, such as the license, the data used to train it, and how to use it. You can also find two buttons, Deploy and Notebook preview, that help you deploy the model.

When you choose Deploy, the page shown in the following screenshot appears. The top section of the page displays the end user license agreement (EULA) and acceptable use policy for you to acknowledge.

After acknowledging the policies, provide your endpoint configuration and choose Deploy to deploy the model endpoint.

Alternatively, you can deploy through the example notebook by choosing Open Notebook. The example notebook provides end-to-end guidance on how to deploy the model for inference and clean up resources.

Deploying Meta Llama 3 on AWS Trainium and AWS Inferentia using the SageMaker JumpStart SDK

In SageMaker JumpStart, we have precompiled the Meta Llama 3 model for a variety of configurations to avoid runtime compilation during deployment and fine-tuning. The Neuron Compiler FAQ has more details about the compilation process.

There are two ways to deploy Meta Llama 3 on AWS Inferentia and Trainium-based instances using the SageMaker JumpStart SDK. You can deploy the model with two lines of code for simplicity, or opt for more control over the deployment configuration. The following code snippet shows the simpler mode of deployment:
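A minimal sketch of that simple deployment, assuming the SageMaker Python SDK's `JumpStartModel` class, might look like the following. The helper name `deploy_llama3_neuron` and the constant `DEFAULT_MODEL_ID` are hypothetical, and the 8B model ID is an assumption inferred from the naming pattern of the other supported IDs in this post.

```python
# Model ID for the base Llama 3 8B Neuron variant. This is an assumption
# inferred from the naming pattern of the other supported model IDs.
DEFAULT_MODEL_ID = "meta-textgenerationneuron-llama-3-8b"


def deploy_llama3_neuron(accept_eula: bool, model_id: str = DEFAULT_MODEL_ID):
    """Deploy a precompiled Llama 3 Neuron model and return its predictor.

    Hypothetical helper: it only wraps the two essential SDK calls.
    """
    # Imported lazily so the helper can be defined without the SDK installed.
    from sagemaker.jumpstart.model import JumpStartModel

    # The two essential lines: create the model object, then deploy it.
    model = JumpStartModel(model_id=model_id)
    return model.deploy(accept_eula=accept_eula)
```

Calling `deploy_llama3_neuron(accept_eula=True)` creates a real endpoint that incurs cost, so it requires AWS credentials and a SageMaker execution role.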

To perform inference on these models, you need to specify the argument accept_eula as True as part of the model.deploy() call. This means that you have read and accepted the EULA for the model. The EULA can be found in the description of the model card or at https://ai.meta.com/resources/models-and-libraries/llama-downloads/.

The default instance type for Meta Llama-3-8B is ml.inf2.24xlarge. The other model IDs supported for deployment are as follows:

- meta-textgenerationneuron-llama-3-70b
- meta-textgenerationneuron-llama-3-8b-instruct
- meta-textgenerationneuron-llama-3-70b-instruct

SageMaker JumpStart has preselected settings that can help you get started, listed in the following table. For more information on how to further optimize these settings, see advanced deployment configurations.

| Instance type | OPTION_N_POSITIONS | OPTION_MAX_ROLLING_BATCH_SIZE | OPTION_TENSOR_PARALLEL_DEGREE | OPTION_DTYPE |
|---|---|---|---|---|
| Llama-3 8B and Llama-3 8B Instruct | | | | |
| ml.inf2.8xlarge | 8192 | 1 | 2 | bf16 |
| ml.inf2.24xlarge (default) | 8192 | 1 | 12 | bf16 |
| ml.inf2.24xlarge | 8192 | 12 | 12 | bf16 |
| ml.inf2.48xlarge | 8192 | 1 | 24 | bf16 |
| ml.inf2.48xlarge | 8192 | 12 | 24 | bf16 |
| Llama-3 70B and Llama-3 70B Instruct | | | | |
| ml.trn1.32xlarge | 8192 | 1 | 32 | bf16 |
| ml.trn1.32xlarge (default) | 8192 | 4 | 32 | bf16 |

The following code shows how you can customize deployment settings such as sequence length, tensor parallel degree, and maximum rolling batch size:
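One way to sketch such a customized deployment is to pass the settings as environment variables through the `env` argument of `JumpStartModel`, together with an explicit `instance_type`. The helper names `build_neuron_env` and `deploy_with_custom_config` are hypothetical, and the values are taken from the default ml.trn1.32xlarge row of the settings table rather than being tuned recommendations.

```python
def build_neuron_env(n_positions, tensor_parallel_degree,
                     max_rolling_batch_size, dtype="bf16"):
    """Build the container environment for a Neuron deployment.

    Hypothetical helper; environment variable values must be strings.
    """
    return {
        "OPTION_DTYPE": dtype,
        "OPTION_N_POSITIONS": str(n_positions),  # sequence length
        "OPTION_TENSOR_PARALLEL_DEGREE": str(tensor_parallel_degree),
        "OPTION_MAX_ROLLING_BATCH_SIZE": str(max_rolling_batch_size),
    }


def deploy_with_custom_config(accept_eula,
                              model_id="meta-textgenerationneuron-llama-3-70b",
                              instance_type="ml.trn1.32xlarge"):
    """Deploy with explicit settings instead of the JumpStart defaults."""
    # Imported lazily so the helpers can be defined without the SDK installed.
    from sagemaker.jumpstart.model import JumpStartModel

    # Values from the default 70B row of the settings table.
    env = build_neuron_env(n_positions=8192, tensor_parallel_degree=32,
                           max_rolling_batch_size=4)
    model = JumpStartModel(model_id=model_id, env=env,
                           instance_type=instance_type)
    return model.deploy(accept_eula=accept_eula)
```

Because the models are precompiled for specific configurations, combinations outside the settings table may not match a precompiled artifact.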

Now that you have deployed the Meta Llama 3 Neuron model, you can run inference against it by invoking the endpoint:
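As a rough sketch, invoking the endpoint comes down to sending a JSON-style payload through the predictor returned by `model.deploy()`. The payload layout (`inputs` plus a `parameters` dictionary) and the parameter values shown here are assumptions for illustration, and the helper names `build_payload` and `generate` are hypothetical.

```python
def build_payload(prompt, max_new_tokens=64, top_p=0.9, temperature=0.6):
    """Build a text-generation request body.

    Assumed schema: a prompt under "inputs" and generation controls
    under "parameters". The default values are illustrative only.
    """
    return {
        "inputs": prompt,
        "parameters": {
            "max_new_tokens": max_new_tokens,
            "top_p": top_p,
            "temperature": temperature,
        },
    }


def generate(predictor, prompt, **generation_kwargs):
    """Send a prompt to the endpoint behind `predictor` and return the response."""
    payload = build_payload(prompt, **generation_kwargs)
    return predictor.predict(payload)
```

For example, `generate(predictor, "I believe the meaning of life is")` would return the endpoint's response for that prompt, where `predictor` is the object returned by the deployment call.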

For more information about payload parameters, see Detailed parameters.

See Fine-tune and deploy Llama 2 models cost-effectively in Amazon SageMaker JumpStart with AWS Inferentia and AWS Trainium for details on how to pass parameters to control text generation.

Clean up

After you have completed your training job and no longer want to use the existing resources, you can delete them using the following code:
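A minimal cleanup sketch, assuming `predictor` is the object returned by `model.deploy()`, deletes the model and then the endpoint. The wrapper name `clean_up` is hypothetical; `delete_model()` and `delete_endpoint()` are methods of the SageMaker Python SDK predictor.

```python
def clean_up(predictor):
    """Delete the SageMaker model and endpoint behind a predictor."""
    # Remove the model resource first, then the endpoint that serves it.
    predictor.delete_model()
    predictor.delete_endpoint()
```

After this runs, the endpoint no longer accepts requests and stops accruing charges.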

Conclusion

Deploying Meta Llama 3 models on AWS Inferentia and AWS Trainium using SageMaker JumpStart offers the lowest cost for deploying large-scale generative AI models like Llama 3 on AWS. These models, including variants such as Meta-Llama-3-8B, Meta-Llama-3-8B-Instruct, Meta-Llama-3-70B, and Meta-Llama-3-70B-Instruct, use AWS Neuron to perform inference on AWS Trainium and Inferentia. AWS Trainium and Inferentia offer up to 50% lower deployment cost than comparable EC2 instances.

In this post, we demonstrated how to deploy Meta Llama 3 models on AWS Trainium and AWS Inferentia using SageMaker JumpStart. The ability to deploy these models through the SageMaker JumpStart console and the Python SDK offers flexibility and ease of use. We are excited to see how you use these models to build interesting generative AI applications.

To get started with SageMaker JumpStart, see Getting Started with Amazon SageMaker JumpStart. For more examples of deploying models to AWS Trainium and AWS Inferentia, see the GitHub repository. For more information about deploying Meta Llama 3 models on GPU-based instances, see Meta Llama 3 models are now available in Amazon SageMaker JumpStart.

About the authors

Xin Huang is a senior applied scientist.

Rachna Chadha is a Principal Solutions Architect – AI/ML.

Qinglan is a senior SDE – ML System.

Pink Panigrahi is a senior solutions architect, Annapurna ML.

Christopher Whitten is a software development engineer.

Kamran Khan is director of BD/GTM, Annapurna ML.

Ashish Khaitan is a senior applied scientist.

Pradeep Cruz is a senior SDM.
