With Amazon Rekognition Custom Labels, you can have Amazon Rekognition train a custom model for object detection or image classification specific to your business needs. For example, Rekognition Custom Labels can find your logo in social media posts, identify your products on store shelves, classify machine parts on an assembly line, distinguish healthy and infected plants, or detect animated characters in videos.

Developing a custom model to analyze images is a significant undertaking that requires time, expertise, and resources, often taking months to complete. Additionally, it often requires thousands or tens of thousands of hand-labeled images to provide the model with enough data to make accurate decisions. Collecting this data can take months, and preparing it for use in machine learning (ML) requires large teams of labelers.

With Rekognition Custom Labels, we take care of the heavy lifting for you. Rekognition Custom Labels builds on the existing capabilities of Amazon Rekognition, which is already trained on tens of millions of images across many categories. Instead of thousands of images, you only need to upload a small set of training images that are specific to your use case (typically a few hundred images or fewer) through the Amazon Rekognition console. If your images are already labeled, Amazon Rekognition can begin training with just a few clicks. If not, you can label them directly in the Amazon Rekognition labeling interface, or use Amazon SageMaker Ground Truth to label them for you. After Amazon Rekognition begins training on your image set, it produces a custom image analysis model for you in just a few hours. Behind the scenes, Rekognition Custom Labels automatically loads and inspects the training data, selects the right ML algorithms, trains a model, and provides model performance metrics. You can then use your custom model through the Rekognition Custom Labels API and integrate it into your applications.

However, creating a Rekognition Custom Labels model and hosting it for real-time predictions involves several steps: creating a project, creating the training and validation datasets, training the model, evaluating the model, and then creating an endpoint. After you deploy the model for inference, you might need to retrain it when new data becomes available or when you receive feedback from real-world inference. Automating the entire workflow helps reduce manual work.

In this post, we show how you can use AWS Step Functions to create and automate your workflow. Step Functions is a visual workflow service that helps developers use AWS services to build distributed applications, automate processes, orchestrate microservices, and build data and machine learning pipelines.

Solution overview

The Step Functions workflow is as follows:

- First we create an Amazon Rekognition project.

- In parallel, we create the training and validation datasets from existing data. We can use any of the following methods:

  - Import images from an Amazon Simple Storage Service (Amazon S3) bucket, where the folder names represent the labels.

  - Import images from a local computer.

  - Use Amazon SageMaker Ground Truth.

  - Create a dataset from an existing dataset using the AWS SDK.

  - Create a dataset with a manifest file using the AWS SDK.

- After creating the data sets, we train a custom label model using the CreateProjectVersion API. This could take minutes to hours to complete.

- After the model is trained, we evaluate it using the F1 score from the previous step. We use the F1 score as our evaluation metric because it balances precision and recall. You can also use precision or recall alone as the evaluation metric for your model. For more information about Rekognition Custom Labels evaluation metrics, see Metrics for evaluating your model.

- If we are satisfied with the F1 score, we start the model and begin using it for predictions.
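The evaluation gate can be sketched in Python. This is a minimal sketch that assumes the response shape of the Amazon Rekognition DescribeProjectVersions API, where each entry in `ProjectVersionDescriptions` carries a `Status` and an `EvaluationResult.F1Score`. The function names and the 0.8 threshold are hypothetical and are not taken from the workflow's repository.

```python
from typing import Any, Mapping

# Hypothetical acceptance threshold; choose one that fits your use case.
F1_THRESHOLD = 0.8


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def passes_evaluation(describe_response: Mapping[str, Any],
                      threshold: float = F1_THRESHOLD) -> bool:
    """Return True if the described model version finished training and meets the threshold.

    `describe_response` is assumed to be a DescribeProjectVersions response
    filtered to a single model version (for example, with VersionNames=[...]).
    """
    versions = describe_response.get("ProjectVersionDescriptions", [])
    if not versions or versions[0].get("Status") != "TRAINING_COMPLETED":
        return False
    f1 = versions[0].get("EvaluationResult", {}).get("F1Score")
    return f1 is not None and f1 >= threshold
```

Because F1 is a harmonic mean, a model with high precision but low recall (or the reverse) still scores low. That property makes it usable as a single pass/fail number.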

The following diagram illustrates the Step Functions workflow.
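As a rough textual counterpart to the diagram, the following Python sketch builds a hypothetical Amazon States Language (ASL) definition for the same flow and serializes it to JSON. It assumes Step Functions AWS SDK service integrations (`arn:aws:states:::aws-sdk:rekognition:<apiAction>`). The repository's actual state machine may be structured differently and might, for example, call Lambda functions instead. State names, input fields such as `$.ProjectName` and `$.Bucket`, the wait interval, and the 0.8 threshold are all illustrative assumptions.

```python
import json

# Step Functions AWS SDK integration prefix; the API action is appended in camelCase.
REKOGNITION = "arn:aws:states:::aws-sdk:rekognition:"
PROJECT_ARN = "$.Project.ProjectArn"
LATEST = "$.Describe.ProjectVersionDescriptions[0]"


def sdk_task(action: str, parameters: dict, result_path: str, next_state: str) -> dict:
    """Task state that calls a Rekognition API through the AWS SDK integration."""
    return {"Type": "Task", "Resource": REKOGNITION + action, "Parameters": parameters,
            "ResultPath": result_path, "Next": next_state}


def dataset_branch(dataset_type: str, manifest_path: str) -> dict:
    """One Parallel branch that creates a TRAIN or TEST dataset from a manifest."""
    name = f"Create{dataset_type.title()}Dataset"
    task = {
        "Type": "Task",
        "Resource": REKOGNITION + "createDataset",
        "Parameters": {
            "ProjectArn.$": PROJECT_ARN,
            "DatasetType": dataset_type,
            "DatasetSource": {"GroundTruthManifest": {"S3Object": {
                "Bucket.$": "$.Bucket", "Name.$": manifest_path}}},
        },
        "End": True,
    }
    return {"StartAt": name, "States": {name: task}}


DEFINITION = {
    "Comment": "Sketch: create datasets, train, evaluate on F1, and start the model",
    "StartAt": "CreateProject",
    "States": {
        "CreateProject": sdk_task("createProject", {"ProjectName.$": "$.ProjectName"},
                                  "$.Project", "CreateDatasets"),
        # NOTE: CreateDataset is asynchronous. Polling DescribeDataset for
        # CREATE_COMPLETE is omitted here for brevity but is required in practice.
        "CreateDatasets": {
            "Type": "Parallel",
            "Branches": [dataset_branch("TRAIN", "$.TrainManifest"),
                         dataset_branch("TEST", "$.TestManifest")],
            "ResultPath": "$.Datasets",
            "Next": "TrainModel",
        },
        "TrainModel": sdk_task("createProjectVersion", {
            "ProjectArn.$": PROJECT_ARN,
            "VersionName.$": "$.VersionName",
            "OutputConfig": {"S3Bucket.$": "$.Bucket", "S3KeyPrefix": "output/"},
        }, "$.Training", "WaitForTraining"),
        "WaitForTraining": {"Type": "Wait", "Seconds": 300, "Next": "DescribeModel"},
        "DescribeModel": sdk_task("describeProjectVersions", {
            "ProjectArn.$": PROJECT_ARN,
            "VersionNames.$": "States.Array($.VersionName)",
        }, "$.Describe", "CheckTraining"),
        "CheckTraining": {
            "Type": "Choice",
            "Choices": [
                {"Variable": LATEST + ".Status", "StringEquals": "TRAINING_COMPLETED",
                 "Next": "EvaluateModel"},
                {"Variable": LATEST + ".Status", "StringEquals": "TRAINING_FAILED",
                 "Next": "TrainingFailed"},
            ],
            "Default": "WaitForTraining",  # still training: wait and check again
        },
        "EvaluateModel": {
            "Type": "Choice",
            "Choices": [{"Variable": LATEST + ".EvaluationResult.F1Score",
                         "NumericGreaterThanEquals": 0.8, "Next": "StartModel"}],
            "Default": "EvaluationFailed",
        },
        "StartModel": {
            "Type": "Task",
            "Resource": REKOGNITION + "startProjectVersion",
            "Parameters": {"ProjectVersionArn.$": "$.Training.ProjectVersionArn",
                           "MinInferenceUnits": 1},
            "End": True,
        },
        "TrainingFailed": {"Type": "Fail", "Error": "TrainingFailed"},
        "EvaluationFailed": {"Type": "Fail", "Error": "F1BelowThreshold"},
    },
}

# The JSON document you would use as the state machine definition.
ASL_JSON = json.dumps(DEFINITION, indent=2)
```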

Prerequisites

Before implementing the workflow, you need an Amazon Rekognition project with existing training and validation datasets. Complete the following steps:

- First, create an Amazon Rekognition project.

- Next, create the training and validation data sets.

- Finally, install the AWS SAM CLI.
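If you prefer to script the first two prerequisites, the following sketch uses the AWS SDK for Python (Boto3) to create a project and its training and test datasets from existing SageMaker Ground Truth manifest files in Amazon S3. Rekognition Custom Labels refers to the validation dataset as the test dataset. The function name, bucket, and manifest keys are hypothetical placeholders. The optional `client` parameter exists only so that the function can be exercised without AWS credentials.

```python
from typing import Any, Dict, Optional


def create_project_with_datasets(project_name: str, bucket: str,
                                 train_manifest_key: str, test_manifest_key: str,
                                 client: Optional[Any] = None) -> Dict[str, str]:
    """Create a Custom Labels project plus TRAIN and TEST datasets; return their ARNs."""
    if client is None:
        import boto3  # imported lazily so a stub client can be passed in tests
        client = boto3.client("rekognition")

    project_arn = client.create_project(ProjectName=project_name)["ProjectArn"]
    arns = {"ProjectArn": project_arn}

    # Rekognition Custom Labels names the validation dataset the TEST dataset.
    for dataset_type, key in (("TRAIN", train_manifest_key), ("TEST", test_manifest_key)):
        response = client.create_dataset(
            ProjectArn=project_arn,
            DatasetType=dataset_type,
            DatasetSource={"GroundTruthManifest": {"S3Object": {"Bucket": bucket, "Name": key}}},
        )
        # CreateDataset is asynchronous: the dataset is usable once DescribeDataset
        # reports a status of CREATE_COMPLETE.
        arns[dataset_type] = response["DatasetArn"]
    return arns
```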

Implement the workflow

To implement the workflow, clone the GitHub repository, then run `sam build` followed by `sam deploy --guided` with the AWS SAM CLI.

These commands build, package, and deploy your application to AWS using a series of guided prompts, as explained in the repository.

Run the workflow

To test the workflow, navigate to the deployed workflow in the Step Functions console, then choose Start execution.
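You can also start an execution programmatically. This sketch calls the Step Functions StartExecution API through Boto3. The state machine ARN and the input fields are hypothetical and must match whatever input your deployed state machine expects.

```python
import json
from typing import Any, Mapping, Optional


def start_workflow(state_machine_arn: str, workflow_input: Mapping[str, Any],
                   client: Optional[Any] = None) -> str:
    """Start one execution of the state machine and return its execution ARN."""
    if client is None:
        import boto3  # imported lazily so a stub client can be passed in tests
        client = boto3.client("stepfunctions")
    response = client.start_execution(
        stateMachineArn=state_machine_arn,
        input=json.dumps(workflow_input),  # StartExecution expects a JSON string
    )
    return response["executionArn"]


# Example (hypothetical ARN and input fields):
# start_workflow("arn:aws:states:us-east-1:111122223333:stateMachine:example",
#                {"ProjectName": "example-project", "VersionName": "v1"})
```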

The workflow can take anywhere from a few minutes to a few hours to complete. If the model passes the evaluation criteria, an endpoint for the model is created in Amazon Rekognition. If the model does not pass the evaluation criteria or the training fails, the workflow fails. You can check the status of the workflow in the Step Functions console. For more information, see Viewing and debugging runs in the Step Functions console.
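To check the status from a script instead of the console, you can poll the Step Functions DescribeExecution API until the execution reaches a terminal state. The following is a minimal Boto3 sketch. The function name and the default polling interval are hypothetical.

```python
import time
from typing import Any, Callable, Optional

# Execution statuses after which the run will not progress on its own.
TERMINAL_STATUSES = {"SUCCEEDED", "FAILED", "TIMED_OUT", "ABORTED"}


def wait_for_execution(execution_arn: str, client: Optional[Any] = None,
                       poll_seconds: float = 60.0,
                       sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll DescribeExecution until the execution finishes; return the final status."""
    if client is None:
        import boto3  # imported lazily so a stub client can be passed in tests
        client = boto3.client("stepfunctions")
    while True:
        status = client.describe_execution(executionArn=execution_arn)["status"]
        if status in TERMINAL_STATUSES:
            return status
        sleep(poll_seconds)
```

Because training can take hours, a long polling interval keeps the number of API calls low.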

Make model predictions

To make predictions against the model, you can call the Amazon Rekognition DetectCustomLabels API. To invoke this API, the caller must have the necessary AWS Identity and Access Management (IAM) permissions. For more details on how to make predictions with this API, see Analyzing an image with a trained model.
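The following minimal Boto3 sketch shows such a call. It assumes that the model is already running and that the image is stored in Amazon S3. The function name and the 50 percent confidence default are hypothetical choices.

```python
from typing import Any, List, Optional, Tuple


def detect_labels(project_version_arn: str, bucket: str, key: str,
                  min_confidence: float = 50.0,
                  client: Optional[Any] = None) -> List[Tuple[str, float]]:
    """Run a started Custom Labels model on an S3 image; return (label, confidence) pairs."""
    if client is None:
        import boto3  # imported lazily so a stub client can be passed in tests
        client = boto3.client("rekognition")
    response = client.detect_custom_labels(
        ProjectVersionArn=project_version_arn,
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        MinConfidence=min_confidence,  # drop predictions below this confidence (0-100)
    )
    return [(label["Name"], label["Confidence"]) for label in response["CustomLabels"]]
```

If the model is not in the RUNNING state, the call fails, so start the model before sending inference requests.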

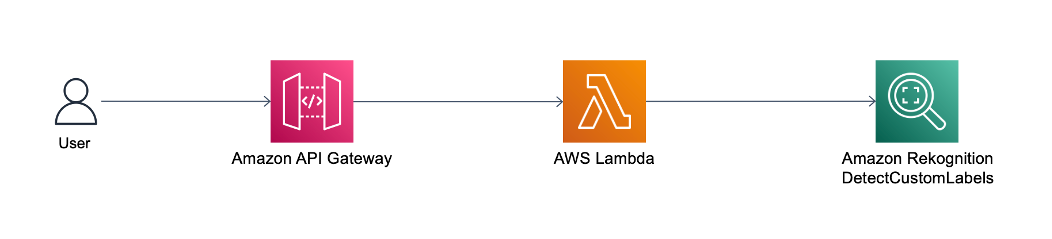

However, if you need to expose the DetectCustomLabels API publicly, you can front it with Amazon API Gateway. API Gateway is a fully managed service that makes it easy for developers to create, publish, maintain, monitor, and secure APIs at any scale. API Gateway acts as the front door for your DetectCustomLabels API, as shown in the following architecture diagram.

API Gateway forwards the user's inference request to AWS Lambda. Lambda is a serverless, event-driven compute service that lets you run code for virtually any type of application or backend service without provisioning or managing servers. Lambda receives the API request and calls the Amazon Rekognition DetectCustomLabels API with the necessary IAM permissions. For more information about how to configure API Gateway with the Lambda integration, see Configure Lambda proxy integrations in API Gateway.

The Lambda function parses the incoming request, calls the DetectCustomLabels API, and returns the detected labels to API Gateway.

Clean up

To delete the workflow, run `sam delete` with the AWS SAM CLI.

To delete the Rekognition Custom Labels model, you can use the Amazon Rekognition console or the AWS SDK. For more information, see Deleting an Amazon Rekognition Custom Labels model.
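With the AWS SDK, you must stop a running model before you can delete it. The following Boto3 sketch stops the model if needed, waits until it is no longer running or changing state, and then deletes it. The function name and polling interval are hypothetical. The sketch does not handle a model that is still training.

```python
import time
from typing import Any, Callable, Optional

# Statuses in which the model is running or changing state, so it cannot be deleted yet.
_BUSY = {"RUNNING", "STARTING", "STOPPING"}


def delete_model(project_arn: str, version_name: str, project_version_arn: str,
                 client: Optional[Any] = None, poll_seconds: float = 30.0,
                 sleep: Callable[[float], None] = time.sleep) -> None:
    """Stop the model version if it is running, wait until it settles, then delete it."""
    if client is None:
        import boto3  # imported lazily so a stub client can be passed in tests
        client = boto3.client("rekognition")

    def current_status() -> str:
        response = client.describe_project_versions(
            ProjectArn=project_arn, VersionNames=[version_name])
        return response["ProjectVersionDescriptions"][0]["Status"]

    stop_requested = False
    while True:
        status = current_status()
        if status not in _BUSY:
            break  # not running or changing state (for example STOPPED or TRAINING_COMPLETED)
        if status == "RUNNING" and not stop_requested:
            client.stop_project_version(ProjectVersionArn=project_version_arn)
            stop_requested = True  # avoid repeating the request while the stop takes effect
        sleep(poll_seconds)

    client.delete_project_version(ProjectVersionArn=project_version_arn)
```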

Conclusion

In this post, we walked through a Step Functions workflow that creates a dataset and then trains, evaluates, and uses a Rekognition Custom Labels model. The workflow enables application developers and ML engineers to automate the Rekognition Custom Labels steps for any computer vision use case. The code for the workflow is open source.

For more serverless learning resources, visit Serverless Land. To learn more about Rekognition Custom Labels, visit Amazon Rekognition Custom Labels.

About the Author

Veda Raman is a Senior Specialist Solutions Architect for Machine Learning based in Maryland. Veda works with customers to help them build efficient, secure, and scalable machine learning applications. Veda is interested in helping customers take advantage of serverless technologies for machine learning.
